Introduction

With RDMA finding its place in existing infrastructures more and more often, PCIe SSDs have become the next big thing in the world of high-performance storage and every system administrator’s cure for sluggish performance. NVMe SSDs support tens of thousands of parallel command queues, which lets them deliver a consistent, high-bandwidth, low-latency user experience. Designed for enterprise workloads that require top performance (e.g., real-time data analytics, online trading platforms, etc.), the NVMe interface provides much faster performance and greater areal density compared to legacy storage types. But don’t get it wrong: in spite of being tailored for intense workloads, PCIe SSDs are not an enterprise-only thing. As prices for flash go down, SMBs and ROBOs will enjoy this tech soon!

So, why not take advantage of NVMe drives’ performance and add one or two of them to your cluster? The thing is, squeezing all the performance out of PCIe SSDs is not that straightforward. But StarWind knows the trick! Let’s see how we made it possible.

Why NVMe over Fabrics?

If we put the pieces of the puzzle together, it’s quite obvious that storage devices and storage network protocols like iSCSI and Fibre Channel have a tight grip on the mission-critical daily operations that any business relies on, and iSCSI has made it clear for everyone: it’s here for good!

There’s a problem with iSCSI though: it was not designed to talk to flash. When using iSCSI with an SSD, you will not be able to achieve the flash performance you longed for. The reasons include:

- The single short command queue that iSCSI imposes on the NVMe drive, which caps the underlying storage performance.

- The use of only one or two controllers to connect multiple drives. In other words, all the I/O is dispatched to the available CPU cores when processing the application demand for storage.

iSCSI – Single short command queue is a performance bottleneck.

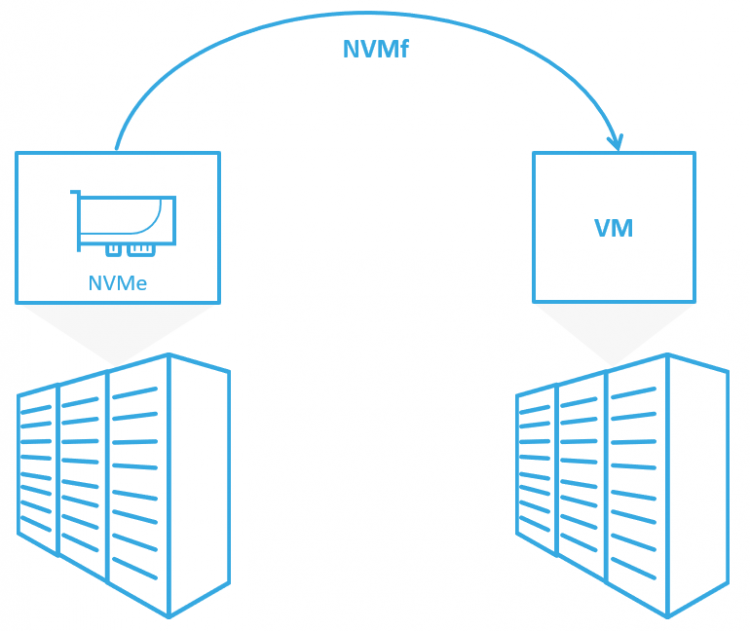
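To see why a single short queue caps performance, here is a minimal back-of-the-envelope sketch based on Little's law (throughput = commands in flight / latency). The queue depth and latency values are hypothetical and chosen only for illustration; they are not measurements of iSCSI or of any particular drive.

```python
def iops_ceiling(outstanding_commands: int, latency_us: float) -> float:
    """Upper bound on IOPS by Little's law: commands in flight / latency."""
    if latency_us <= 0:
        raise ValueError("latency must be positive")
    return outstanding_commands * 1_000_000 / latency_us


# Hypothetical values, for illustration only.
QUEUE_DEPTH = 32   # assumed depth of one short command queue
LATENCY_US = 100   # assumed per-command latency in microseconds

single_queue = iops_ceiling(QUEUE_DEPTH, LATENCY_US)
eight_queues = iops_ceiling(8 * QUEUE_DEPTH, LATENCY_US)

print(f"1 queue : at most {single_queue:,.0f} IOPS")
print(f"8 queues: at most {eight_queues:,.0f} IOPS")
```

Under these assumptions the ceiling scales linearly with the number of commands that can be in flight, which is why adding queues (rather than only making the drive faster) lifts the cap.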

So, since iSCSI is inefficient for PCIe flash, a different technology is needed to present such drives over the network. The solution is NVMe over Fabrics (NVMe-oF), the protocol developed to deliver the performance that all flavors of flash can provide. When comparing iSCSI to NVMe-oF, it becomes evident that the latency involved in iSCSI communication between partner hosts has got to go. Since NVMe-oF is compatible with InfiniBand, RoCE, and iWARP, the best technology to fill this gap is RDMA.

RDMA in NVMe-oF

With RDMA, it becomes possible to bypass the OS kernel and avoid utilizing the CPU for processing I/O. As a result, the technology becomes an even more attractive option for establishing and structuring both the Command Channel and the Data Channel.

Another advantage is non-serialized, non-locking access to different Namespace IDs for different Memory Regions, with the possibility of having multiple Namespace IDs share a single Memory Region.

In other words, RDMA is able to map multiple Memory Regions and utilize the capabilities of dedicated NVMe controllers, making it a truly lightweight point-to-point (between one initiator host and one target) or many-to-many (between multiple initiator hosts and multiple targets) communication protocol with no unnecessary CPU load.

StarWind NVMe-oF

With all the performance there for the taking, the biggest challenge is creating a target for client connections to the storage. NVMe, and consequently the NVMe over Fabrics driver, is much simpler and more lightweight, as it involves just the block layer.

Improve the storage performance of mission-critical VMs.

Adding NVMe-oF to StarWind VSAN is meant to eliminate the bottlenecks described above by:

- Raising the limits on command queue lengths.

- Utilizing numerous controllers to connect multiple drives.

The result is an improvement of your infrastructure ROI. With the storage performance overhead of costly NVMe drives eliminated, you basically need fewer NVMe drives in your storage to grant your VMs the required performance. In the long run, thanks to NVMe-oF, you will get the predicted performance growth if you keep on adding PCIe SSDs to your cluster.

Just a couple of words about our implementation of this protocol. The single short command queue is replaced with 64 thousand command queues of 64 thousand commands each. Such a design allows reducing the latency remarkably and benefiting from all the IOPS that an NVMe drive can provide. By changing the way the storage is accessed, we managed to squeeze a bit more IOPS from a PCIe SSD presented over the network than even its vendor would expect! Almost fully bypassing the kernel with NVMe-oF, we managed to remarkably offload the CPU and achieve latency that was only 10 microseconds higher than in the vendor’s datasheet.
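To put the queue numbers in perspective, here is a small arithmetic sketch. It reads "64 thousand" as 64 × 1024, which is an assumption made for the illustration; the exact limits are defined by the NVMe specification. The depth used for the single short queue is likewise a hypothetical placeholder.

```python
# "64 thousand" is read as 64 * 1024 here; this is an assumption.
QUEUES = 64 * 1024
COMMANDS_PER_QUEUE = 64 * 1024

# Hypothetical depth of a single short command queue, for comparison only.
SINGLE_QUEUE_DEPTH = 32


def max_outstanding(queues: int, depth: int) -> int:
    """Maximum number of commands that can be in flight at once."""
    return queues * depth


multi_queue_capacity = max_outstanding(QUEUES, COMMANDS_PER_QUEUE)
single_queue_capacity = max_outstanding(1, SINGLE_QUEUE_DEPTH)

print(f"Multi-queue capacity : {multi_queue_capacity:,} commands")
print(f"Single-queue capacity: {single_queue_capacity:,} commands")
print(f"Ratio                : {multi_queue_capacity // single_queue_capacity:,}x")
```

No real workload keeps billions of commands in flight; the point of the sketch is only that the queue model stops being the limiting factor, so the drive itself sets the ceiling.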

If you are wondering about the performance benefit it offers: when comparing the latency between local and partner storage, it becomes clear that NVMe-oF almost eliminates any additional latency when accessing the storage volume presented by the partner. Want to see how successful the StarWind NVMe-oF implementation is? Check out this article series: https://www.starwindsoftware.com/hyperconvergence-performance-high-score.

How did StarWind accomplish such outstanding utilization of PCIe drives? Well, we designed the industry’s first all-software NVMe-oF Initiator for Windows and complemented StarWind VSAN with the Linux SPDK NVMe-oF Target. That tandem enables Windows clients to access NVMe drives in the server world fast and with minimal performance loss (RDMA is here for good).

Conclusion

NVMe-oF is a protocol designed to squeeze maximum IOPS out of PCIe SSDs and present them over the network to the entire cluster.

The problem is that NVMe-oF is available only on operating systems of the Linux family. Admins who use industry-standard hypervisors are left with protocols that are proven to be inefficient for flash. That said, another challenge these days is bringing NVMe-oF to the hypervisors. And that’s where StarWind comes into play!