At the Explore 2023 conference, VMware made a number of notable announcements in the field of artificial intelligence (AI). As generative AI comes to the forefront, it is especially important to prepare infrastructure for it, that is, to set up software and hardware so that the resources AI and ML require are used as efficiently as possible. Corporate AI workloads already demand capacity on an entirely different scale.

VMware has been one of the pioneers in this new field. First, it collaborates with major partners such as NVIDIA on the Private AI initiative for building high-performance GenAI systems. Second, it provides the community with a set of tools for working with open technologies and builds partnerships with the providers of those technologies. Third, VMware applies AI and machine learning tools within its own product line, which is continuously evolving and gaining new technologies and products. This article covers all three.

1. Private AI initiative by VMware and NVIDIA

Today, organizations are keen to use AI technologies but are concerned about risks to intellectual property, data leaks, and control over access to AI models. These concerns underscore the need to implement and use private AI within the enterprise.

Let’s look at this announcement in more detail. Here’s what private AI within your organization can offer compared with public services such as ChatGPT:

- Distribution: Compute capacity and AI models are located close to the data. This requires infrastructure that supports centralized management.

- Data Confidentiality: The organization’s data remains in its possession and is not used to train other models without the company’s consent.

- Access Control: Access and audit mechanisms are in place to comply with company policies and regulatory rules.

Private AI doesn’t necessarily require private clouds; the main thing is to adhere to confidentiality and control requirements.
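The access-control and audit property listed above can be illustrated with a small sketch. This is only a toy model under stated assumptions, not VMware's implementation: the `PrivateModelGateway` class, the role and model names, and the placeholder response are all hypothetical, and a real deployment would delegate to an identity provider and a tamper-resistant log.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class PrivateModelGateway:
    """Hypothetical gateway placed in front of an internally hosted model."""

    # Maps a role name to the set of model names that role may query.
    allowed_models: dict[str, set[str]]
    # Every request, permitted or denied, is appended here for later audit.
    audit_log: list[dict] = field(default_factory=list)

    def query(self, user: str, role: str, model: str, prompt: str) -> str:
        permitted = model in self.allowed_models.get(role, set())
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "model": model,
            "permitted": permitted,
        })
        if not permitted:
            raise PermissionError(f"role {role!r} may not query {model!r}")
        # Placeholder for the call to the in-house model (an assumption);
        # the prompt is handled locally and is not sent to an external service.
        return f"[{model}] response to {len(prompt)} characters of input"


# Example: one role is allowed to use one (hypothetical) internal model.
gateway = PrivateModelGateway(allowed_models={"engineer": {"code-assistant"}})
print(gateway.query("alice", "engineer", "code-assistant", "Refactor this function"))
```

Logging denied requests as well as permitted ones is a deliberate choice in this sketch: an audit trail that only records successes cannot show who attempted to reach a model they were not entitled to use.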

The VMware Private AI approach

VMware specializes in managing workloads of all kinds and has extensive experience in implementing private AI. Key benefits of the VMware Private AI approach include:

- Choice: Organizations can easily switch between commercial AI services or open models to adapt to business requirements.

- Confidentiality: Modern security methods ensure the confidentiality of data at all stages of its processing.

- Performance: In industry benchmarks run on NVIDIA-accelerated VMware infrastructure, AI workloads perform as well as or better than their bare-metal counterparts.

- Management: A unified management approach reduces costs and the risk of errors.

- Time-to-value: AI environments can be brought up and torn down in seconds, increasing flexibility and responsiveness to emerging challenges.

- Efficiency: Rapid deployment of enterprise AI environments and optimized resource utilization reduce the overall cost of infrastructure and of AI-related tasks.

Thus, the VMware Private AI platform offers a flexible and efficient way to implement enterprise private AI.

VMware Private AI Foundation in partnership with NVIDIA

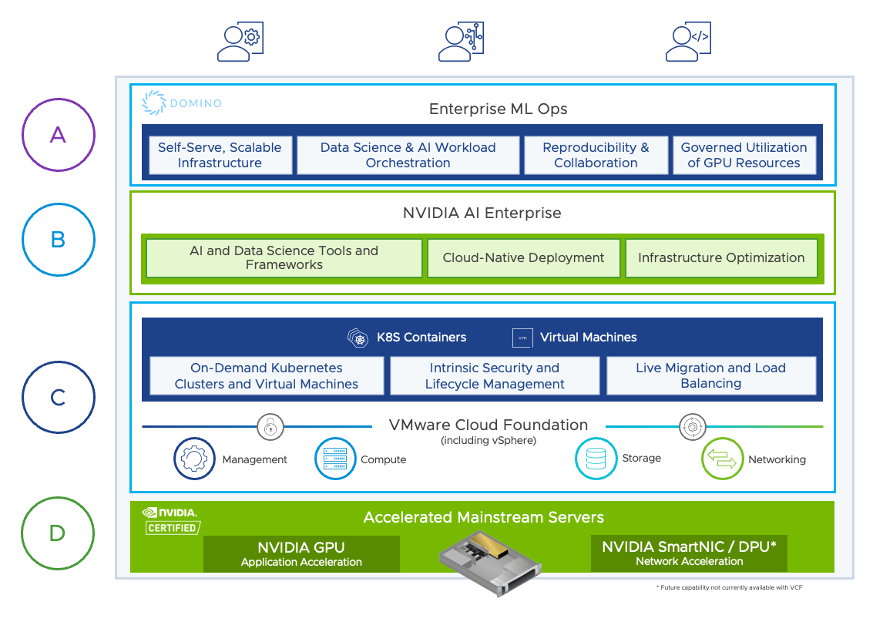

VMware is partnering with NVIDIA to create a universal platform, the VMware Private AI Foundation with NVIDIA. This platform will help enterprises customize large language models (LLMs), create more secure models for internal use, offer generative AI as a service, and securely scale inference workloads.

The solution is based on VMware Cloud Foundation and NVIDIA AI Enterprise environments and will offer the following benefits:

- Data center scaling: Multiple GPU I/O paths allow AI workloads to scale up to 16 virtual GPUs in a single virtual machine.

- High-performance storage: The VMware vSAN Express Storage Architecture provides performance-optimized NVMe storage and supports GPUDirect Storage over RDMA, which allows data to be transferred directly from storage to GPUs without CPU involvement.

- vSphere Virtual Machine Images for Deep Learning: Rapid prototyping with pre-installed frameworks and libraries.

The solution will use NVIDIA NeMo, a cloud-native framework included in NVIDIA AI Enterprise that simplifies and speeds up the implementation of generative AI.

VMware Private AI Hardware Architecture

From a hardware perspective, VMware Private AI Foundation with NVIDIA will initially run on systems from Dell Technologies, Hewlett Packard Enterprise (HPE), and Lenovo, which will feature NVIDIA L40S GPUs, NVIDIA BlueField-3 DPUs, and NVIDIA ConnectX-7 SmartNICs.

- NVIDIA L40S GPUs deliver up to 1.2x more generative AI inference performance and up to 1.7x more training performance compared with the NVIDIA A100 Tensor Core GPU.

- NVIDIA BlueField-3 DPUs accelerate, offload, and isolate the heavy compute load of virtualization, networking, storage, security, and other cloud-native AI services from the GPU and CPU.

- NVIDIA ConnectX-7 SmartNICs provide smart, high-performance networking for data center infrastructure, offloading network processing for the most demanding workloads from the host CPU.
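To put the L40S figures above into perspective, the short sketch below converts them into rough job durations. It assumes that "1.2x" and "1.7x" are throughput ratios relative to the A100 and that a job scales linearly with throughput, which real workloads will only approximate. The function name and the example durations are hypothetical.

```python
# Throughput ratios relative to the A100, as quoted in the list above.
# Interpreting them as plain multipliers is an assumption of this sketch.
L40S_INFERENCE_SPEEDUP = 1.2
L40S_TRAINING_SPEEDUP = 1.7


def estimated_l40s_hours(a100_hours: float, speedup: float) -> float:
    """Estimate wall-clock hours on an L40S from a measured A100 duration.

    Assumes perfectly linear scaling with the quoted throughput ratio.
    """
    if a100_hours < 0 or speedup <= 0:
        raise ValueError("hours must be non-negative and speedup positive")
    return a100_hours / speedup


# Hypothetical jobs: 17 hours of training and 6 hours of batch inference on an A100.
training = estimated_l40s_hours(17.0, L40S_TRAINING_SPEEDUP)
inference = estimated_l40s_hours(6.0, L40S_INFERENCE_SPEEDUP)
print(f"training: about {training:.1f} h, inference: about {inference:.1f} h")
```

Under these assumptions the training job drops from 17 to roughly 10 hours and the inference job from 6 to roughly 5 hours. Treat such numbers as an upper bound on the benefit, since vendor figures are typically best-case results.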

VMware Private AI Software Architecture

The VMware AI Labs division and its partners have developed an AI services solution that provides data privacy, AI flexibility, and integrated security. The architecture offers:

- The ability to use the models and tools best suited to business needs.

- Fast implementation thanks to documented architecture and code.

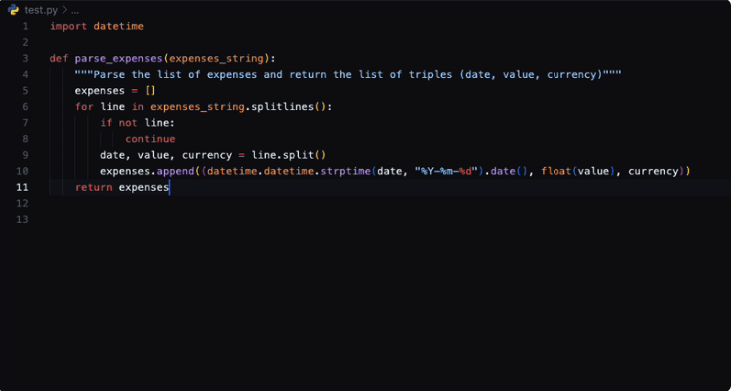

- Integration with popular open-source projects such as Ray (ray.io), Kubeflow, PyTorch, pgvector, and Hugging Face models.

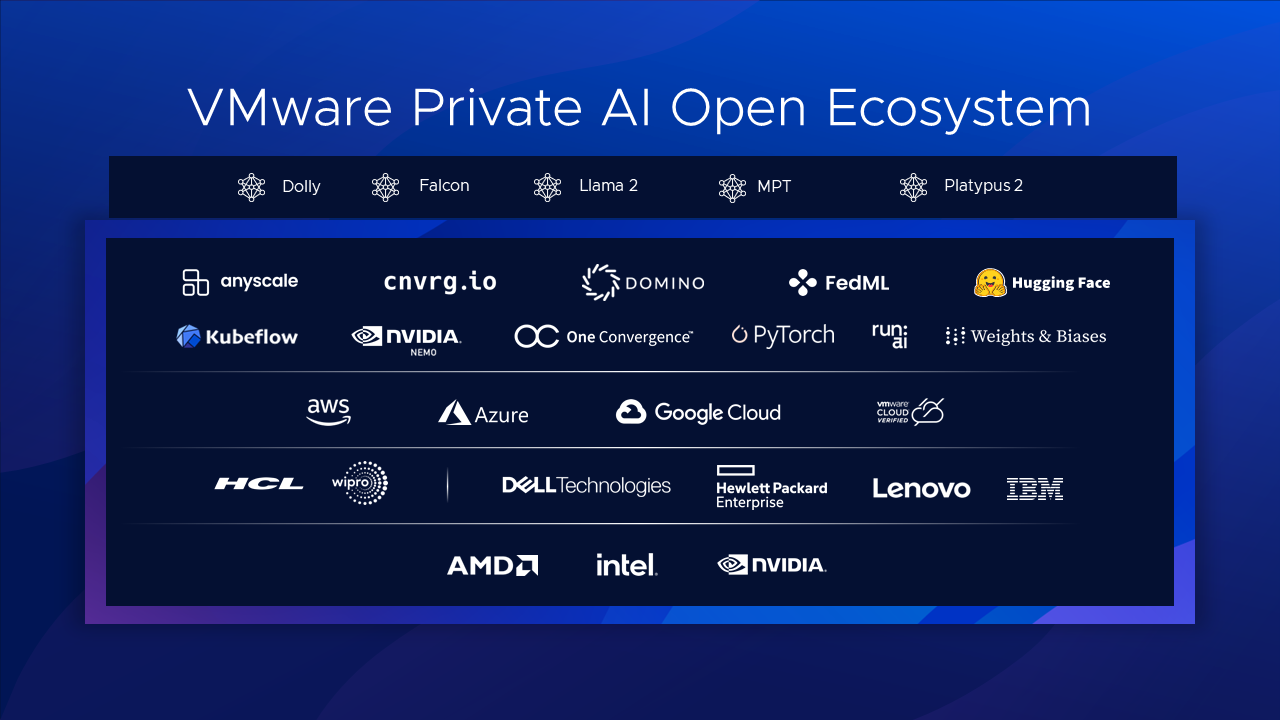

The architecture supports commercial and open MLOps tools from VMware partners, such as the MLOps toolkit for Kubernetes, as well as various add-ons (for example, Anyscale, cnvrg.io, Domino Data Lab, NVIDIA, One Convergence, Run:ai, and Weights & Biases). The platform already includes PyTorch, one of the most widely used frameworks for generative AI.

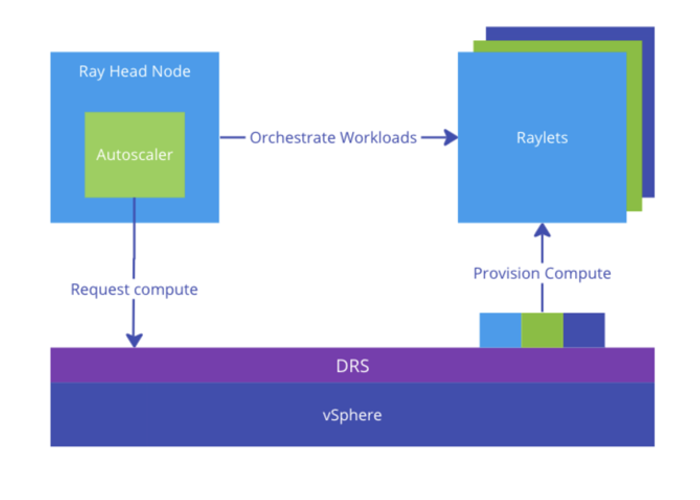

Collaboration with Anyscale extends Ray to on-premises use cases. Integration with Hugging Face makes it easy and fast to deploy open models.
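Of the open-source projects named above, pgvector adds vector similarity search to PostgreSQL, which is commonly used to find internal documents relevant to an LLM prompt. The following is a minimal pure-Python sketch of the cosine-distance ranking that pgvector exposes through its `<=>` operator. It is an illustration only, not pgvector's implementation or VMware's reference code; the function names, document names, and three-dimensional "embeddings" are made up for the example.

```python
import math


def cosine_distance(a: list[float], b: list[float]) -> float:
    """Return 1 - cosine similarity of two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / (norm_a * norm_b)


def nearest(query: list[float], documents: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the names of the k documents closest to the query vector."""
    ranked = sorted(documents, key=lambda name: cosine_distance(query, documents[name]))
    return ranked[:k]


# Toy embeddings (hypothetical); real ones come from an embedding model
# and typically have hundreds of dimensions.
documents = {
    "vpn-guide": [0.9, 0.1, 0.0],
    "expense-policy": [0.0, 0.2, 0.9],
    "firewall-faq": [0.8, 0.3, 0.1],
}
print(nearest([1.0, 0.2, 0.0], documents))
```

In a database-backed setup the same ranking would be expressed as a SQL `ORDER BY` on the distance operator, with the documents and their embeddings staying inside the organization's own PostgreSQL instance.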

The Private AI solution is already used in VMware’s own data centers, showing impressive results in cost, scale, and developer productivity.

To get started with VMware Private AI, read the reference architecture and code examples. VMware's own article on the topic and its material on platform deployment are also very useful.

2. Open architecture: VMware Private AI Reference Architecture for Open Source

The VMware Private AI Reference Architecture for Open Source integrates open source software (OSS) technologies to provide an open reference architecture for building and serving OSS models on VMware Cloud Foundation. At Explore 2023, VMware announced collaborations with leading companies that deliver open-source-based enterprise AI for the IT infrastructure of large organizations:

- Anyscale: VMware brings the widely used open computing platform Ray to VMware Cloud environments. Ray on VMware Cloud Foundation makes it easy for data engineers and MLOps engineers to scale AI and Python workloads by letting them use their own compute resources for ML workloads rather than moving to the public cloud.

- Domino Data Lab: VMware, Domino Data Lab, and NVIDIA have partnered to provide a unified analytics, data science, and infrastructure platform that is optimized, validated, and supported for AI/ML deployments in the financial industry.

- Global System Integrators: VMware partners with leading systems integrators such as Wipro and HCL to help customers realize the benefits of Private AI by building and delivering turnkey solutions that combine VMware Cloud with solutions from an ecosystem of AI partners.

- Hugging Face: VMware collaborates with Hugging Face to ensure SafeCoder runs on virtual platforms, as announced at VMware Explore. SafeCoder is a commercial coding assistant solution for enterprises that includes services, software, and support. VMware uses SafeCoder internally and has published a reference architecture with code examples to give customers the fastest time to value when deploying and operating SafeCoder on VMware infrastructure.

- Intel: VMware vSphere/vSAN 8 and Tanzu are optimized with Intel's AI software suite to take advantage of the new built-in AI accelerators on the latest 4th Gen Intel Xeon Scalable processors.

In addition, VMware announced the launch of a new VMware AI Ready program (currently available only for private solutions, such as in partnership with NVIDIA), which will provide ISVs (independent software vendors) with the tools and resources needed to validate and certify their products on the VMware Private AI Reference Architecture. The program will be available to ISVs specializing in ML and LLM Ops, data engineering, AI developer tools, and embedded AI applications. It is expected to become active by the end of 2023.

3. Intelligent Assist technologies for using Generative AI in VMware products

Also at Explore 2023, VMware introduced Intelligent Assist, a family of generative AI solutions trained on VMware’s own data to simplify and automate aspects of enterprise IT systems in the era of multi-cloud operations.

Intelligent Assist features will be integrated into VMware Cross-Cloud services and will be built on the VMware Private AI architecture. The following VMware products are expected to work with Intelligent Assist:

- VMware Tanzu with Intelligent Assist (currently in Tech Preview) – part of the Tanzu Intelligence Services family, this tool will make operations more proactive and significantly streamline workflows through a generative AI chatbot based on a large language model (LLM) that uses the federated data architecture of VMware Tanzu Hub and its integrated solutions.

[Video: Intelligent Assist in VMware Tanzu Hub](https://www.youtube.com/embed/lj6DxP5nQws)

- Workspace ONE with Intelligent Assist (currently in Tech Preview, known as Project Oakville) will allow users to create quality scripts using natural language prompts, resulting in a faster and more efficient scripting process.

- NSX+ with Intelligent Assist (currently in Tech Preview) – this tool will allow security analysts to quickly and accurately determine the relevance of security audit results and effectively eliminate threats.

Conclusions

VMware has prepared well for the new wave of generative AI technologies, which accelerated at the beginning of 2023 with the rise of ChatGPT. VMware had evidently been working on this well in advance, so it stands a good chance of capitalizing on the explosive hype in this industry. If this year marked the breakthrough of “consumer” AI in the form of ChatGPT, next year will likely be the year of corporate AI, in which the technologies being developed now find widespread use in organizational management tools, initially as “co-pilots” and later potentially replacing actual managers at various levels of large organizations.

For all this to happen, a reliable infrastructure is needed, with support for these technologies at both the software and hardware levels, and VMware appears technologically poised to become a dependable platform for this new organization of corporate IT.