Introduction

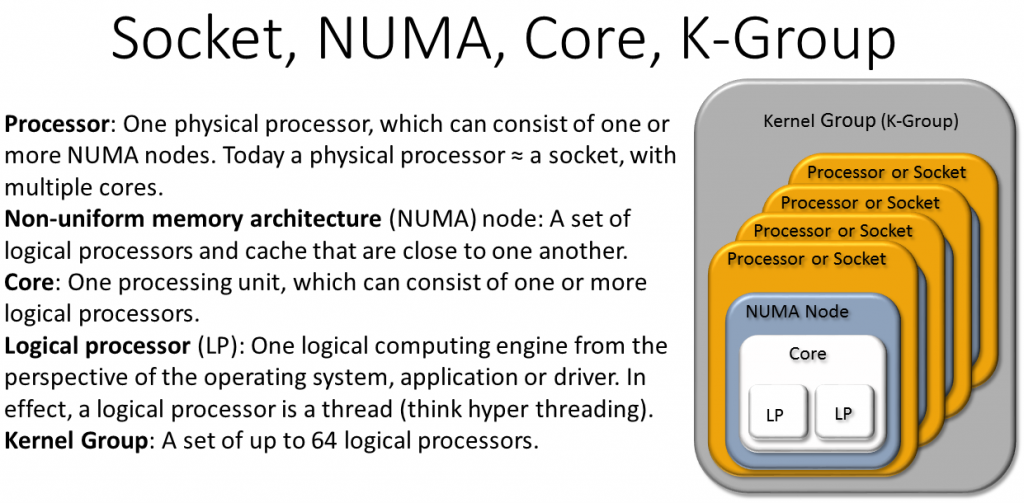

With Windows Server 2012, Hyper-V became truly NUMA aware. A virtual NUMA topology is presented to the guest operating system. By default, the virtual NUMA topology is optimized by matching the NUMA topology of the physical host. This enables Hyper-V to deliver optimal performance for virtual machines running high-performance, NUMA-aware workloads where large numbers of vCPUs and lots of memory come into play. A great and well-known example of this is SQL Server.

Non-Uniform Memory Access (NUMA) comes into play in multi-processor systems where not all memory is accessible at the same speed by all the cores. Memory regions are connected directly to one or more processors. Processors today have multiple cores. A group of cores that can access a certain amount of memory at the lowest latency (“local memory”) is called a NUMA node. A processor has one or more NUMA nodes. When cores have to get memory from another NUMA node, access is slower (“remote memory”). Being able to use remote memory allows more flexibility in serving compute and memory needs, which helps achieve a higher density of VMs per host, but this comes at a cost in performance. Modern applications optimize for the NUMA topology so that their cores use local, high-speed memory. That's why those applications perform better in a VM whose virtual NUMA layout is optimized based on the physical layout of the host.

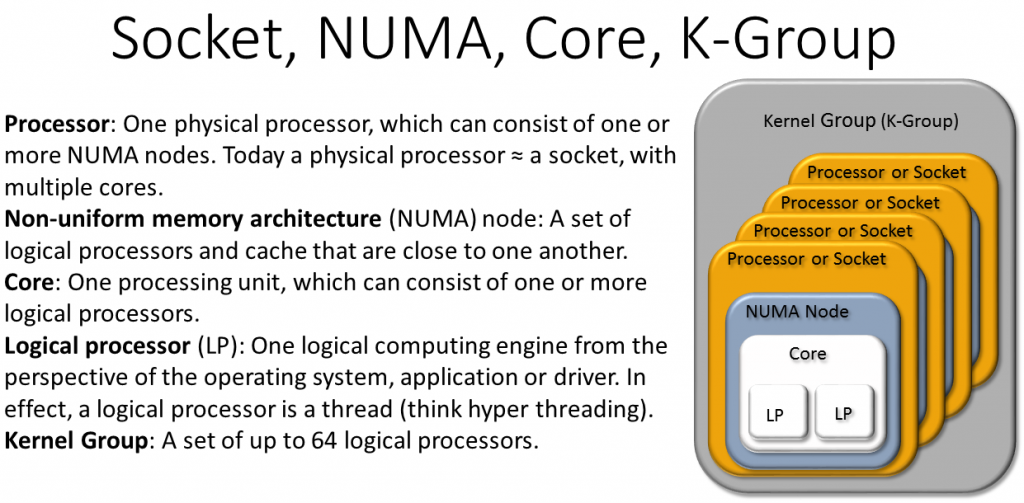

An overview of CPU sockets, cores, NUMA nodes and K-Groups based on slide deck by Microsoft

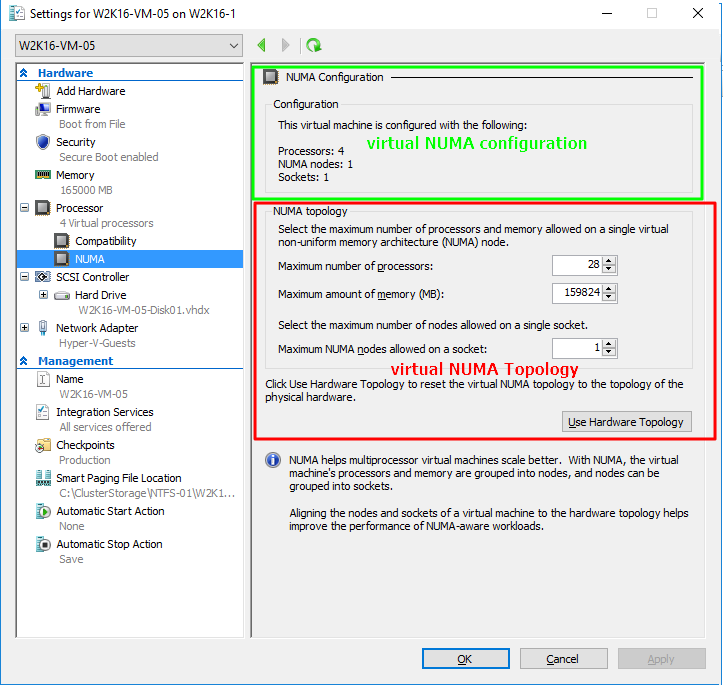

Reading the Hyper-V Virtual NUMA Overview gives us a better understanding of the virtual NUMA settings and their relation to static and dynamic memory. It is also useful to take a closer look at the virtual NUMA configuration and the virtual NUMA topology through some scenarios, in order to gain a better understanding of what this all means in practice.

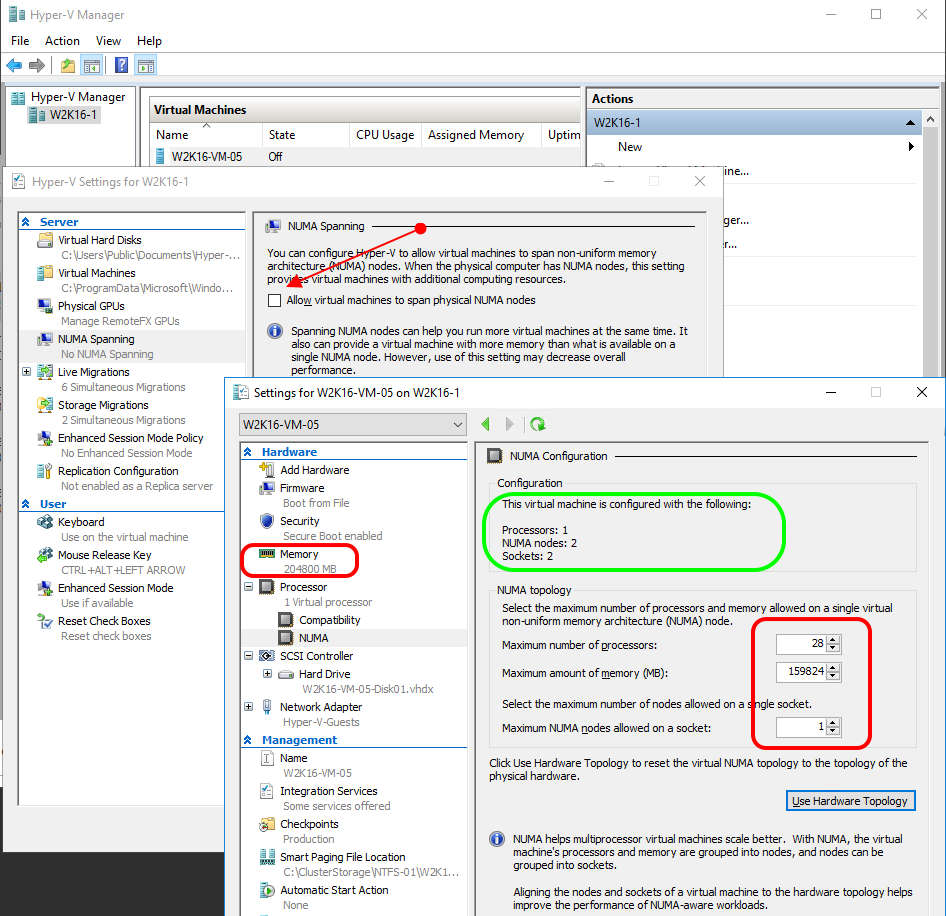

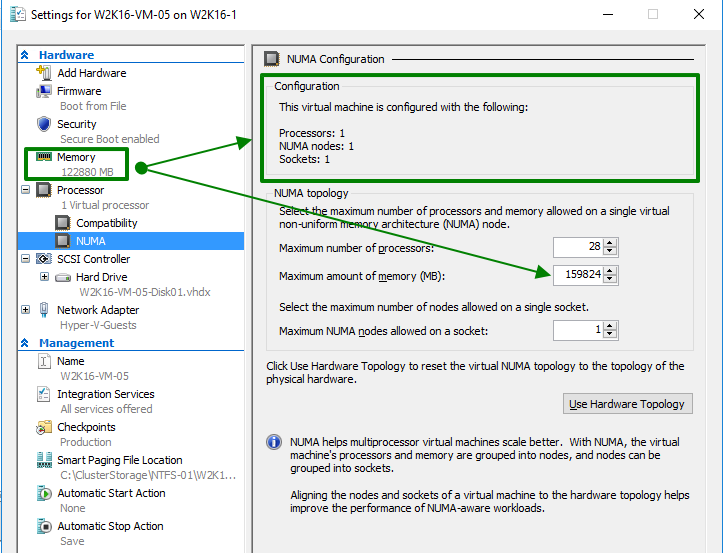

A VM has a NUMA configuration (green), based on its virtual NUMA topology (red). The moment a VM needs more memory than a single NUMA node can provide, more virtual NUMA nodes are provisioned. The same happens when a VM needs more vCPUs than a single NUMA node can provide. All this is done for you, based on the memory and CPU configuration of the VM and the host.
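That sizing behavior can be approximated with a small model. This is a simplified sketch, not Hyper-V's actual algorithm: it assumes a VM simply gets as many virtual NUMA nodes as needed so that neither the per-node memory limit nor the per-node vCPU limit of its virtual NUMA topology is exceeded. The function name and the example limits (150 GB and 14 vCPUs per node) are hypothetical.

```python
import math


def virtual_numa_node_count(vm_memory_gb: float, vm_vcpus: int,
                            max_memory_per_node_gb: float,
                            max_vcpus_per_node: int) -> int:
    """Hypothetical model: how many virtual NUMA nodes a VM needs so that
    no node exceeds the topology's per-node memory or vCPU limit."""
    nodes_for_memory = math.ceil(vm_memory_gb / max_memory_per_node_gb)
    nodes_for_vcpus = math.ceil(vm_vcpus / max_vcpus_per_node)
    # A VM always has at least one virtual NUMA node.
    return max(1, nodes_for_memory, nodes_for_vcpus)


# Assumed limits: 150 GB and 14 vCPUs per virtual NUMA node.
print(virtual_numa_node_count(64, 8, 150, 14))    # memory and vCPUs fit: 1
print(virtual_numa_node_count(200, 8, 150, 14))   # too much memory: 2
print(virtual_numa_node_count(64, 20, 150, 14))   # too many vCPUs: 2
```

Whichever resource runs out first (memory or vCPUs) determines the node count, which is why a VM with few vCPUs but lots of memory can still end up with multiple virtual NUMA nodes.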

NUMA spanning, virtual NUMA topology & virtual NUMA Configuration

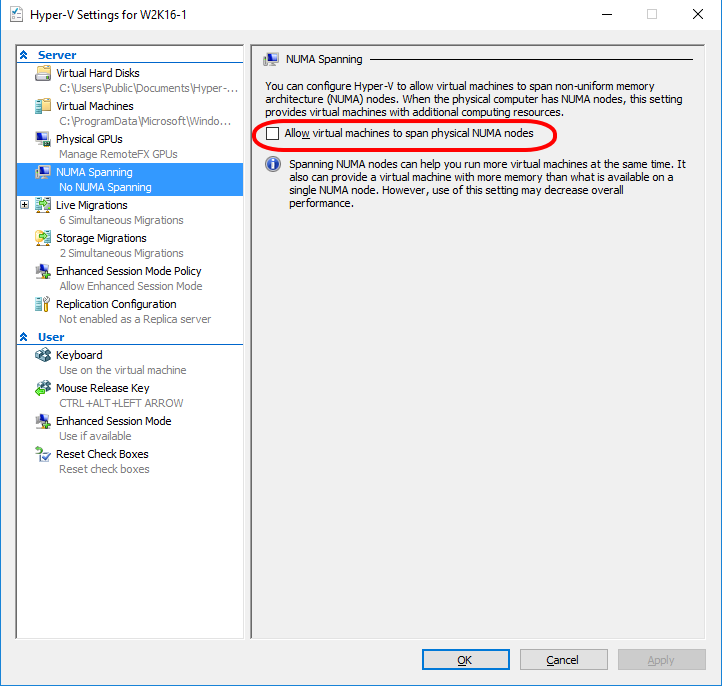

NUMA spanning

NUMA spanning is set at the Hyper-V host level and works with both static and dynamic memory, although the rules of behavior differ between the two. By default, NUMA spanning is allowed.

This affects all virtual machines running on that Hyper-V host. NUMA spanning determines the virtual machine behavior when there is insufficient memory on the host to perform an optimal mapping between the virtual NUMA nodes and the host’s physical NUMA nodes.

With NUMA spanning enabled, the virtual machine can split one or more virtual NUMA nodes over two or more physical NUMA nodes. The drawback is that performance can suffer when CPU cycles use slower memory from another NUMA node instead of their own. Because the memory layout and availability at (live) migration, startup, or restore time depend on the resources available at that moment, such actions can also result in inconsistent performance.

When NUMA spanning is disabled, Hyper-V will not start, restore, or accept a live migration of a virtual machine if it would be forced to split a virtual NUMA node between two or more physical NUMA nodes. This ensures that virtual machines always run with optimal performance. The drawback is that it could lead to a virtual machine not starting, restoring, or (live) migrating.

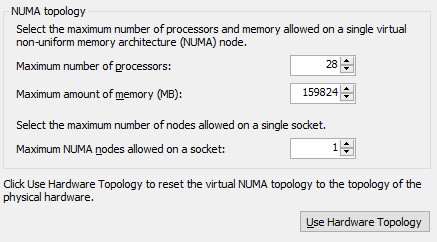

Virtual NUMA topology

The virtual NUMA topology ideally follows your hardware topology, and it is generally smart to keep it that way and not mess with these settings. You can play with the NUMA topology to your heart’s content, but when it’s time to get serious for production use, Microsoft made it easy for you to revert to the host’s physical topology. The virtual machine settings have a “Use Hardware Topology” button. This comes in handy after experimenting and testing, to reset the virtual NUMA topology to match the underlying physical one. It is also handy when you import or migrate virtual machines from another host.

That’s already one tip for you: use this button when you’ve migrated to new hardware to make sure you have the best possible topology. In practice, this also means that keeping the specifications of your cluster nodes identical helps a lot here. When upgrading a cluster to new hardware or migrating VMs to a new cluster, it pays to make sure the virtual NUMA topology is optimized.

If you have hosts with different hardware topologies, configure the virtual NUMA topology for the lowest common denominator if you want the flexibility of using all hosts in the cluster as equal partners.
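Finding that lowest common denominator amounts to taking the minimum of each per-node limit across all hosts. A minimal sketch, assuming a hypothetical `NumaLimits` record with just two limits per host (vCPUs and memory per NUMA node); real topologies have more dimensions, such as NUMA nodes per socket, and the example figures are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NumaLimits:
    """Hypothetical per-NUMA-node limits of one host."""
    max_vcpus_per_node: int
    max_memory_per_node_gb: int


def lowest_common_denominator(hosts: list[NumaLimits]) -> NumaLimits:
    """Return limits that fit on every host by taking the minimum of each."""
    if not hosts:
        raise ValueError("need at least one host")
    return NumaLimits(
        max_vcpus_per_node=min(h.max_vcpus_per_node for h in hosts),
        max_memory_per_node_gb=min(h.max_memory_per_node_gb for h in hosts),
    )


older = NumaLimits(max_vcpus_per_node=24, max_memory_per_node_gb=128)
newer = NumaLimits(max_vcpus_per_node=28, max_memory_per_node_gb=160)
print(lowest_common_denominator([older, newer]))
# NumaLimits(max_vcpus_per_node=24, max_memory_per_node_gb=128)
```

Note that each limit is minimized independently, so the result may combine the smallest vCPU limit of one host with the smallest memory limit of another.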

Virtual NUMA configuration

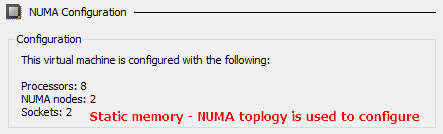

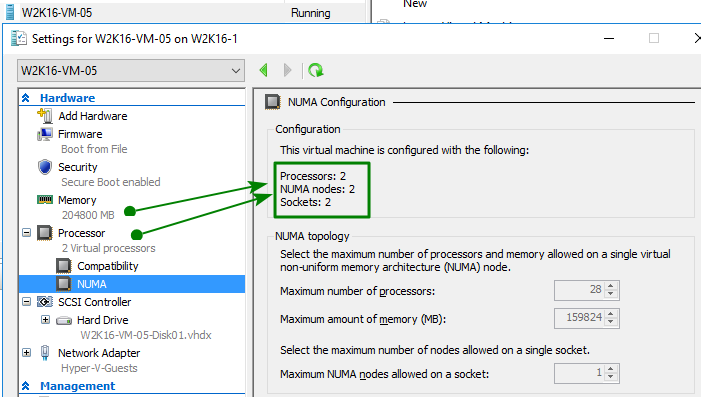

The virtual NUMA configuration is set for you at the VM level, but it only really comes into play with static memory configurations. Here we see a virtual machine with 8 vCPUs that gets 2 NUMA nodes and 2 sockets, based on the NUMA topology shown above, which follows the physical topology.

The picture above should tell you that this VM has more memory than a single NUMA node can provide, as 8 vCPUs are well within the limits of a single NUMA node.

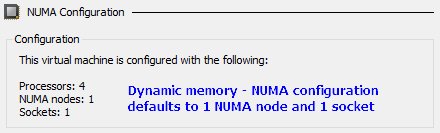

Dynamic memory and virtual NUMA are mutually exclusive; we need to choose which one we want to use. A virtual machine that has Dynamic Memory enabled effectively has only one virtual NUMA node, and no NUMA topology is presented to the virtual machine. This holds regardless of the number of vCPUs assigned to the virtual machine (4 in our example), the amount of memory it has been assigned, or what the underlying NUMA topology looks like (in our example, the host has 2 physical NUMA nodes).

When using dynamic memory, the virtual NUMA settings are ignored. This means that with dynamic memory enabled you have the following situation:

- The NUMA spanning setting on the host must be enabled when you have virtual machines that are large enough to be split between two or more physical NUMA nodes.

- The NUMA spanning setting can be disabled, but in that case the virtual machine must fit entirely within a single physical NUMA node; otherwise the virtual machine will not start, restore, or migrate.
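These two rules for a dynamic memory VM (which always has a single virtual NUMA node) can be expressed as a simple start check. This is a simplified sketch under stated assumptions: it only compares startup memory with the free memory per physical NUMA node, ignores vCPUs and the host reserve, and the function name and figures are hypothetical.

```python
def can_start_dynamic_memory_vm(startup_memory_mb: int,
                                free_mb_per_node: list[int],
                                numa_spanning_enabled: bool) -> bool:
    """Simplified model of the start rules for a VM with Dynamic Memory.

    Such a VM has a single virtual NUMA node. With NUMA spanning enabled it
    may draw memory from several physical nodes; with spanning disabled the
    startup memory must fit entirely within one physical NUMA node.
    """
    if numa_spanning_enabled:
        return startup_memory_mb <= sum(free_mb_per_node)
    return any(startup_memory_mb <= free for free in free_mb_per_node)


# Hypothetical host: two physical NUMA nodes with 15,000 MB free each.
free = [15_000, 15_000]
print(can_start_dynamic_memory_vm(16_500, free, numa_spanning_enabled=True))   # True
print(can_start_dynamic_memory_vm(16_500, free, numa_spanning_enabled=False))  # False
```

With spanning disabled, the total free memory on the host is irrelevant; only the largest amount free on any one physical node counts.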

We’ll now take a look at some scenarios with NUMA Spanning disabled as this gives us a better understanding of how things work.

Scenario 1 – NUMA spanning disabled with dynamic memory

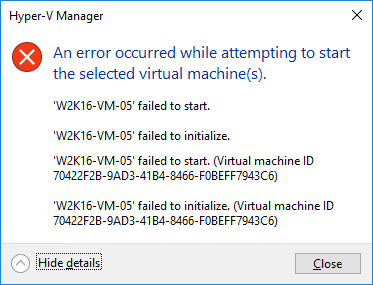

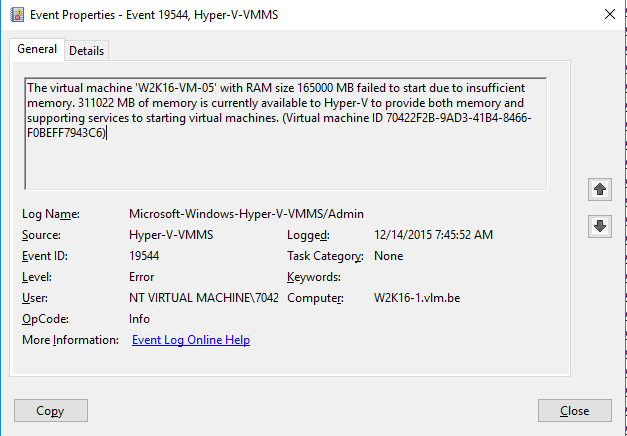

The situation with dynamic memory is easily demonstrated. When you disable NUMA spanning on a host and configure a virtual machine with more startup memory than a single physical NUMA node can provide, you’ll see that it won’t start.

The Hyper-V-VMMS event log reflects this with event ID 19544.

Indeed, 16500 MB of memory is more than 1 physical NUMA node can deliver. But as you can see, there is way more memory available on the host. Half of it, however, is on the other NUMA node and not available to the VM in this scenario. Clear enough. For high-performance virtual machines that need more memory and CPU than a single NUMA node can supply, and that run NUMA-aware workloads, you want virtual NUMA turned on, which means using static memory. That’s the way to get the best possible performance combined with optimal use of resources, without suffering the drawback of NUMA spanning (slower memory access). Dynamic memory will not do when it comes to the best possible performance.

Alright! That seems easy enough to understand. Now let’s move on to static memory scenarios.

Scenario 2 – NUMA spanning disabled with static memory

We’ll look at how virtual NUMA settings relate to NUMA spanning being enabled or disabled on the host when using static memory. In the Hyper-V Virtual NUMA Overview mentioned above, we find this nice table showing the benefits and disadvantages of having NUMA spanning enabled or disabled.

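| NUMA spanning | Benefits | Disadvantages |
|---|---|---|
| Enabled (default setting) | Virtual machines can start, restore, or (live) migrate even when no optimal mapping onto the physical NUMA nodes is possible. | Virtual NUMA nodes can be split over physical NUMA nodes, so performance can suffer and may be inconsistent. |
| Disabled | Virtual machines always run with optimal performance. | Virtual machines will fail to start if any virtual NUMA node cannot be placed entirely within a single physical NUMA node. |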

Whilst experimenting with different and sometimes very artificial (weird, absurd or unique, call it whatever you want) configurations, I ran into a situation that needs a bit more explaining. Why? Well, it made me doubt the correctness of what’s in the table, in particular the statement about virtual machines failing to start when NUMA spanning is disabled. As it turns out, the table content is correct, but it lacks the details I needed to understand it right away.

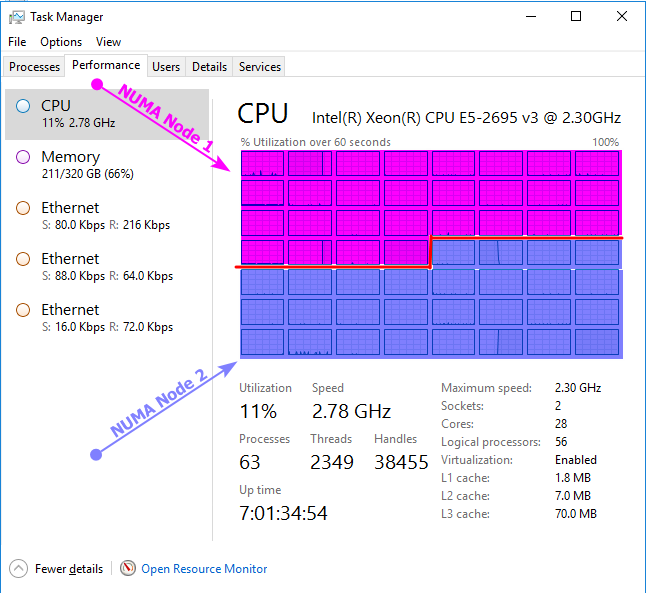

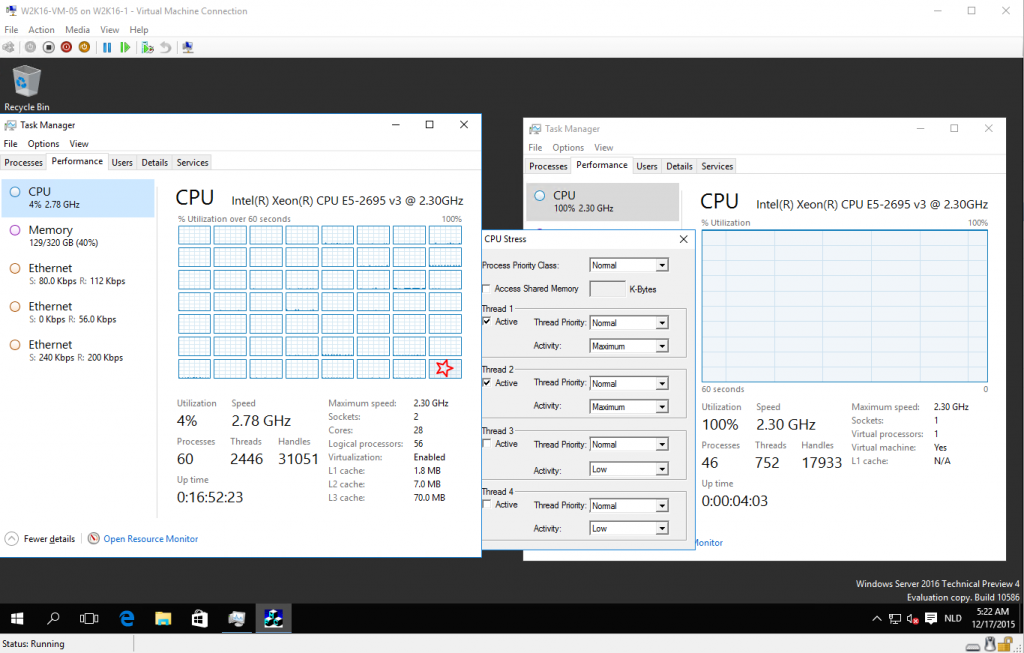

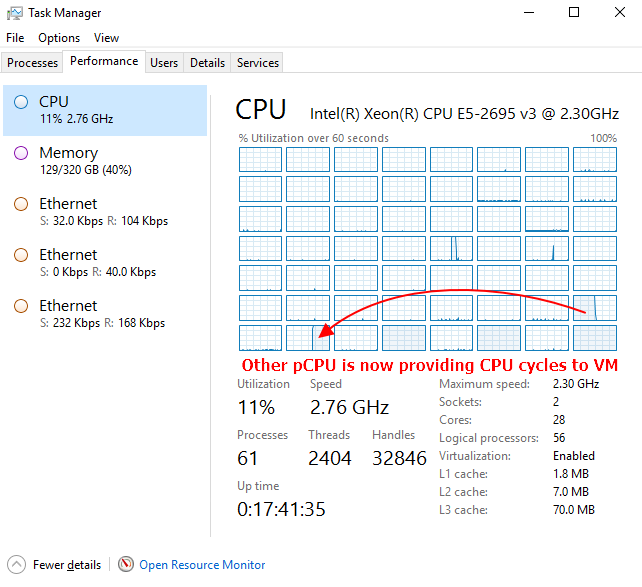

Let’s take a look at our nice Hyper-V host that has NUMA spanning disabled. It has 320GB of memory and two 14-core CPUs (dual socket). The physical NUMA topology of this server looks like this in Task Manager.

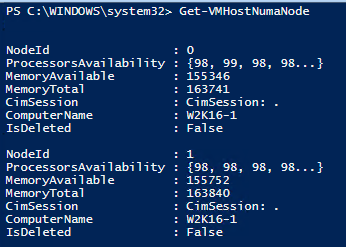

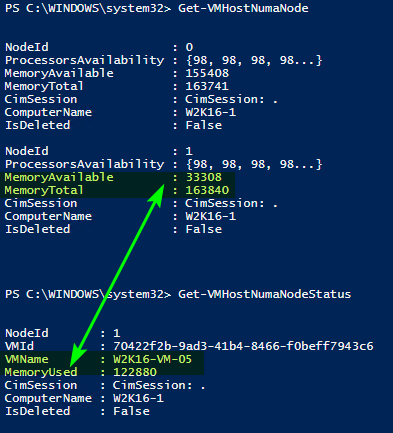

Each NUMA node has fast, direct access to 50% of the memory (160GB). At rest (meaning no VMs running), we take a look at the NUMA node setup by running Get-VMHostNumaNode. We see this host has 2 NUMA nodes and, after subtracting what the parent partition needs and the automatically assigned host reserve, the available memory is split evenly between the NUMA nodes.

We now configure a single virtual machine on that host with 1 vCPU and assign it 200GB of static memory. This means a single physical NUMA node cannot provide that VM with enough memory. I chose 1 vCPU deliberately to prove a point: the difference between a vCPU and a virtual NUMA node matters here. A virtual machine, regardless of its number of virtual CPUs, gets a certain number of virtual NUMA nodes configured. How many is determined by the settings of the NUMA topology.

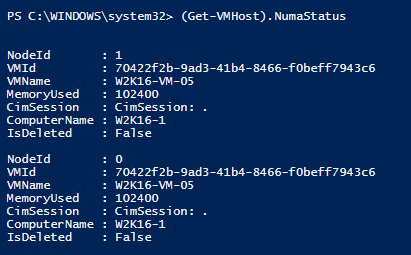

With NUMA spanning disabled on the host, my bet was that this VM would not start. But it did start, even with NUMA spanning disabled on that host! Why? Because of the virtual NUMA configuration: as you can see, the VM is allowed to use multiple NUMA nodes; it’s just not allowed to span a virtual NUMA node across them. So we effectively see that each of the VM’s 2 virtual NUMA nodes gets 50% of the total memory from one of the physical NUMA nodes.

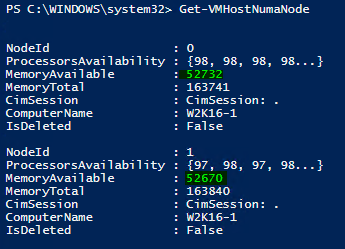

Running Get-VMHostNumaNode shows us that both NUMA node 1 and NUMA node 2 have 100GB less available memory, which sums to the 200GB we assigned to our virtual machine.

Running (Get-VMHost).NumaStatus (or Get-VMHostNumaNodeStatus) shows us that our test VM, the only one running, got 50% of its memory from NUMA node 1 and 50% from NUMA node 2.

Let’s look at what the table says again: “Virtual machines will fail to start if any virtual NUMA node cannot be placed entirely within a single physical NUMA node.” Note the words “any virtual NUMA node”. To be more precise, I’d change that to “Virtual machines will fail to start if any single virtual NUMA node cannot be placed entirely within any single physical NUMA node.” But I’m not a native speaker.

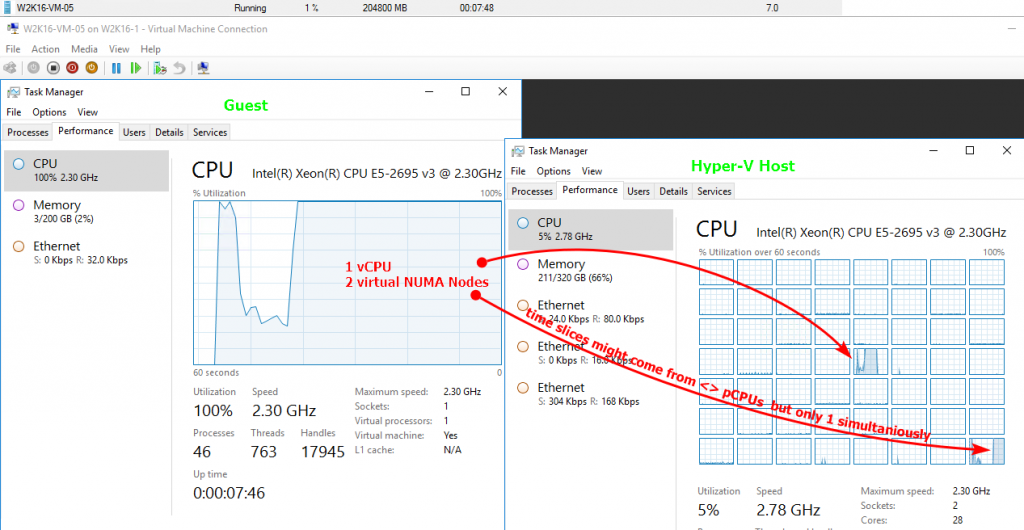

Well, indeed we have 2 virtual NUMA nodes configured, because our single-vCPU VM requires more memory than one node of the NUMA topology (which wisely follows our hardware topology) can deliver. So the VM gets configured with 2 virtual NUMA nodes. It also gets two sockets. OK, 1 processor with 2 NUMA nodes is easy to grasp, but two sockets? How does that compute? Well, it’s virtualization of the underlying physical situation! To me this was a bit confusing at first, but once you wrap your head around it, you understand the behavior better. In the end, vCPUs are just scheduled slices of compute time on the physical cores. As long as the time slices of compute in a single virtual NUMA node stay within the boundaries of a single physical NUMA node, you get the fastest possible memory access and the behavior you desire. It has the added benefit that a virtual machine will start or live migrate under more conditions than if it tied a vCPU to one and only one virtual NUMA node in a single socket.

The restriction of not spanning NUMA nodes is reflected in each virtual NUMA node being constrained to a single physical NUMA node, never spanning into another physical one. Other than that, a VM can use multiple physical NUMA nodes even if NUMA spanning is disabled. Granted, the 1 vCPU scenario is ridiculously contrived: it won’t use more compute than the equivalent of a single physical CPU in one physical NUMA node at a time, while it gets memory from two physical NUMA nodes. Multiple NUMA nodes or not, a single vCPU is not going to deliver the compute of 2 CPUs.

Yes, this is an artificial scenario for demonstration purposes and not a great performance scenario. However, the moment you have this scenario with 2 or more vCPUs, the CPU time slices are spread across the 2 physical NUMA nodes, optimizing memory access. That’s where the benefits of this behavior come into their own.

Ben Armstrong, currently a principal PM on the Hyper-V team and an expert in all things Hyper-V, provided us with a more detailed and accurate statement:

“When NUMA spanning is disabled, each virtual NUMA node of a virtual machine will be restricted to a single physical NUMA node. So, for a virtual machine with virtual NUMA disabled (or with virtual NUMA node enabled – but a single virtual NUMA node configured) it will be constrained to a single physical NUMA node. For a virtual machine with virtual NUMA enabled, and multiple virtual NUMA nodes, each virtual NUMA node may be placed on separate physical NUMA nodes – as long as each virtual NUMA node is fully contained in any given physical NUMA node.”
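This rule can be modeled as a placement check: every virtual NUMA node must land, whole, on some physical NUMA node, but different virtual nodes may land on different physical nodes. The sketch below uses a greedy heuristic (place each virtual node on the physical node with the most free memory) purely for illustration; it is not Hyper-V’s documented placement algorithm, and the free-memory figures (roughly 150 GB per node after the parent partition and host reserve) are assumed.

```python
from typing import Optional


def place_virtual_numa_nodes(vnode_memory_gb: list[int],
                             free_gb_per_node: list[int]
                             ) -> Optional[tuple[list[int], list[int]]]:
    """Place virtual NUMA nodes with NUMA spanning disabled (simplified model).

    Returns (physical node index per virtual node, remaining free memory per
    physical node), or None when a virtual node fits on no single physical
    node, i.e. the VM would fail to start.
    """
    remaining = list(free_gb_per_node)
    placement = []
    for need in vnode_memory_gb:
        # Greedy choice: the physical node with the most free memory
        # (max() returns the lowest index on ties).
        best = max(range(len(remaining)), key=lambda p: remaining[p])
        if need > remaining[best]:
            return None  # this virtual node would have to span physical nodes
        remaining[best] -= need
        placement.append(best)
    return placement, remaining


free = [150, 150]  # assumed free memory per physical NUMA node, in GB
# 200 GB static memory split over 2 virtual NUMA nodes: starts.
print(place_virtual_numa_nodes([100, 100], free))  # ([0, 1], [50, 50])
# The same 200 GB in a single virtual NUMA node: cannot be placed.
print(place_virtual_numa_nodes([200], free))       # None
```

In the first case each physical node ends up with 100 GB less free memory, which matches what Get-VMHostNumaNode showed for the 200GB test VM.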

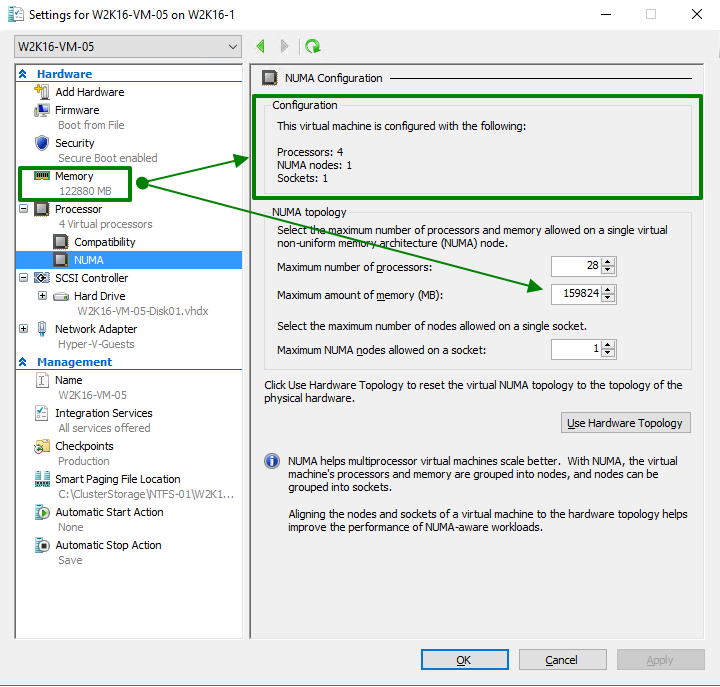

Now let’s set this virtual machine’s memory to an amount that fits into a single (physical/virtual) NUMA node.

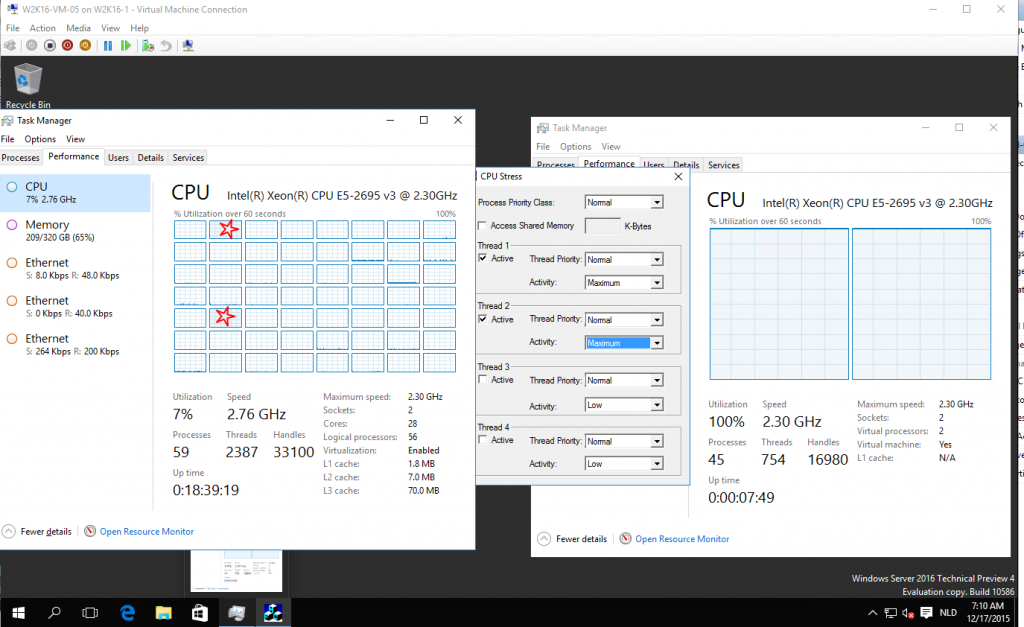

When we now start that virtual machine and put a 100% CPU load on it, we see only one pCPU being taxed, in the one physical NUMA node that also delivers the memory, reflecting the virtual NUMA settings. No spanning is going on; there is no need. More pCPUs within the same NUMA node could come into play, but this is an otherwise idle host, running only this VM.

This is also clear when we look at the results of Get-VMHostNumaNode and Get-VMHostNumaNodeStatus.

Likewise, if you add vCPUs but keep the memory below the maximum of what fits in a physical NUMA node, we’ll see more pCPUs used within the same physical NUMA node. NUMA spanning is disabled and only one virtual NUMA node is configured, as you can see in the underlying NUMA topology.

Starting this virtual machine with a 100% CPU load shows pCPUs being taxed in only one physical NUMA node, in compliance with the virtual NUMA settings. No NUMA node spanning is going on. You can play with NUMA spanning, the number of vCPUs, and memory to test and better understand the behavior.

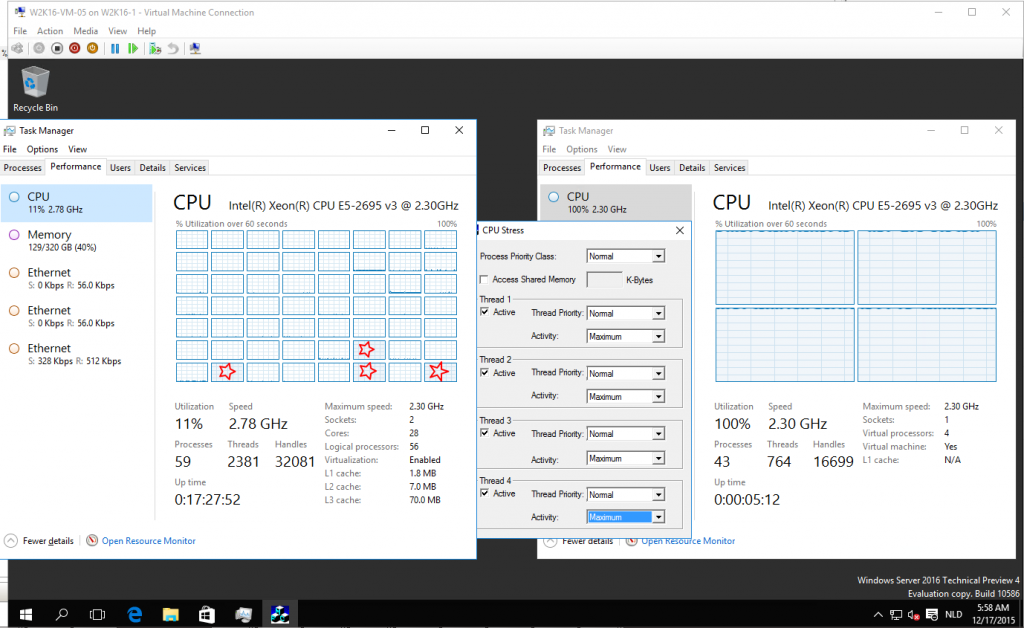

One final thing to note is that no matter what you do, vCPUs are slices of compute time, and whether or not you reserved entire CPUs for a VM, you cannot hard-affinitize vCPUs to pCPUs. It’s not needed. You’ll get your compute when it’s available or as reserved, but the Hyper-V scheduler decides which pCPU delivers it. So you may see the following happen, where another physical CPU is put into service delivering compute cycles to the virtual machine.

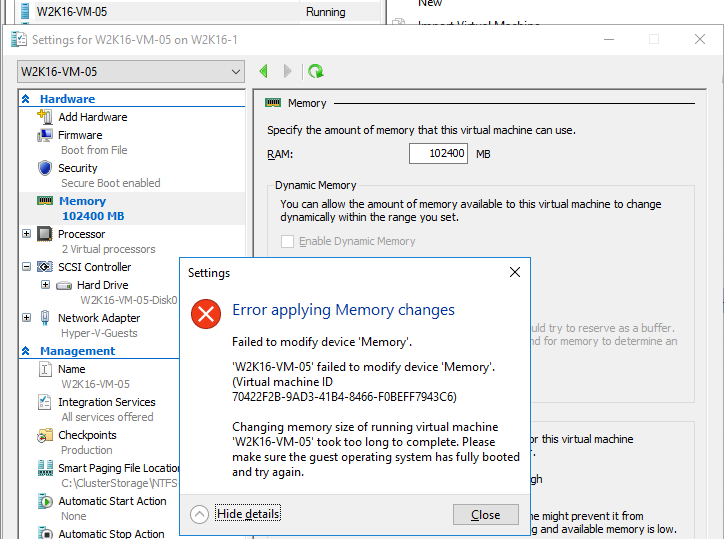

Windows Server 2016 on-line changing of static memory

In Windows Server 2016 Hyper-V we have gained a new capability: we can now adjust the static memory of a running virtual machine up or down. Pretty cool! Now, this will not work in certain scenarios. Hyper-V won’t allow you to do so while the virtual machine is live migrating, for example. But more related to our subject at hand, it will not allow a change in memory size that changes the virtual NUMA topology of a running virtual machine.

Below you see a virtual machine with 2 vCPUs and more memory than a single physical NUMA node can provide; as a result, it gets configured with 2 virtual NUMA nodes.

You can change the memory up and down a bit without issues, but when we try to reduce the memory to an amount that could be served by a single NUMA node, it fails.

It does try, but you’ll have to cancel, and the VM keeps its current NUMA configuration. Likewise, when we start with a virtual machine whose memory is served by a single node, so it has a single virtual NUMA node, and try to increase it beyond what that single NUMA node can provide, it will fail. So while you can adjust static memory values for a running Windows Server 2016 virtual machine, you cannot change its NUMA topology online.
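The rule boils down to this: an online resize is accepted only if the new memory size leads to the same virtual NUMA topology. A minimal sketch, assuming a simplified node-count model (not Hyper-V’s exact logic) and ignoring the other conditions, such as a live migration in progress, under which a resize is refused; the function names and limits are hypothetical.

```python
import math


def vnuma_nodes(memory_gb: float, vcpus: int,
                max_memory_per_node_gb: float, max_vcpus_per_node: int) -> int:
    """Simplified model of how many virtual NUMA nodes a VM gets."""
    return max(1,
               math.ceil(memory_gb / max_memory_per_node_gb),
               math.ceil(vcpus / max_vcpus_per_node))


def can_resize_online(current_gb: float, new_gb: float, vcpus: int,
                      max_memory_per_node_gb: float,
                      max_vcpus_per_node: int) -> bool:
    """A running VM's static memory may change only if its virtual NUMA
    topology (modeled here as the node count) stays the same."""
    before = vnuma_nodes(current_gb, vcpus, max_memory_per_node_gb, max_vcpus_per_node)
    after = vnuma_nodes(new_gb, vcpus, max_memory_per_node_gb, max_vcpus_per_node)
    return before == after


# Assumed limits: 150 GB and 14 vCPUs per virtual NUMA node; the VM has 2 vCPUs.
print(can_resize_online(200, 180, 2, 150, 14))  # True: still 2 nodes
print(can_resize_online(200, 100, 2, 150, 14))  # False: would drop to 1 node
print(can_resize_online(100, 160, 2, 150, 14))  # False: would grow to 2 nodes
```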

Conclusion

It’s highly beneficial to read up on virtual NUMA, and a good place to start is the Hyper-V Virtual NUMA Overview on TechNet. The next best thing is to experiment. I encourage anyone who wants to leverage virtual NUMA to validate their assumptions. You’ll learn a lot; at least I did. This is especially important when you’re banking on a certain behavior. You’ll understand much better what to use when and why, and you’ll know what behavior to expect from your choices. In general, virtual NUMA works well and delivers the best possible results for 99.99% of all use cases, provided you choose the best option for your needs.