Introduction

With Windows Server 2016 we have gained some very welcome capabilities to do cost-effective VDI deployments using all in-box technologies. The main areas of improvement are storage, RemoteFX and Discrete Device Assignment for hardware pass-through to the VM. Let’s take a look at what’s possible now and think out loud about which solutions are possible, as well as their benefits and drawbacks.

Storage

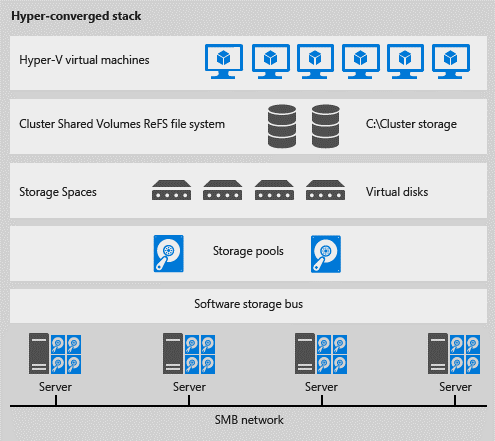

In Windows Server 2016 we have gotten Storage Spaces Direct (S2D) which can be used in a hyper-converged deployment. This is the poster child use case for converged storage. We also have the option now to combine this with Storage Replica for added disaster recovery options on top of high availability when required.

RemoteFX

Improved RemoteFX now supports OpenGL 4.4 and OpenCL 1.1. Finally, I should say! This is huge in making Hyper-V a valid real-world option in many VDI scenarios. We also get back the capability to do RemoteFX with VMs running the Windows Server OS, something that was no longer possible with Windows Server 2012 R2.

RemoteFX has some significant vRAM related improvements. Next to a maximum of 1GB of shared memory we also get up to 1GB (it used to be 256MB) of dedicated memory per VM for the GPU (512MB on a 32-bit Windows 10 version). This makes things better for application functionality that’s only available with a certain amount of memory, such as Adobe’s hardware acceleration in Photoshop. This dedicated memory can now be configured independently of the number of monitors or the chosen resolution. That’s pretty neat, as this flexibility lets us optimize better for the use cases at hand without our hand being forced by the chosen number of monitors or screen resolution.
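To make those ceilings concrete, here is a minimal Python sketch that caps a requested amount of dedicated vRAM at the limits quoted above. The function and dictionary names are my own and purely illustrative; this is not a Hyper-V API, just the arithmetic under the stated limits.

```python
# Per-VM dedicated vRAM ceilings quoted in the text, in MB.
# Keys are the guest bitness (the 512MB figure applies to 32-bit Windows 10).
# The names used here are hypothetical, not part of any Hyper-V tooling.
MAX_DEDICATED_VRAM_MB = {64: 1024, 32: 512}


def clamp_dedicated_vram(requested_mb: int, guest_bits: int = 64) -> int:
    """Return the dedicated vRAM to configure, capped at the guest's ceiling."""
    if guest_bits not in MAX_DEDICATED_VRAM_MB:
        raise ValueError(f"unsupported guest bitness: {guest_bits}")
    if requested_mb <= 0:
        raise ValueError("requested_mb must be positive")
    return min(requested_mb, MAX_DEDICATED_VRAM_MB[guest_bits])


if __name__ == "__main__":
    # The value no longer depends on monitor count or resolution,
    # so the only inputs are the request and the guest bitness.
    for bits, wanted in [(64, 2048), (64, 256), (32, 768)]:
        print(bits, wanted, clamp_dedicated_vram(wanted, bits))
```

Because the dedicated memory is decoupled from monitors and resolution, a check like this only needs the requested amount and the guest bitness.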

We get a performance boost because the transport between the virtual machine and the host now uses a more performant VMBus. This also enabled the use of RemoteFX with generation 2 VMs, which is very welcome. It was a drawback that the most performant & capable generation of Hyper-V virtual machines did not support RemoteFX. This is now solved!

Finally, they implemented various stability and application compatibility improvements along with a number of bug fixes. All these should make the performance better with many workloads.

Discrete Device Assignment

Discrete Device Assignment (DDA) allows us to pass through a PCIe device such as a GPU or NVMe disk directly to the VM. This is great for those high performance workloads we were never able to virtualize before without turning to other vendors. It exposes the native capabilities of the device to the VM, and for that the VM needs to have the native drivers for that device installed. GPUs and NVMe disks seem to be the 2 supported use cases. Other devices such as wireless adapters, storage controllers, … can also be used, but for now that’s not a promoted or supported use case. But think about the possibilities it opens up. When a developer needs to test with wireless, for example, he could now do so in a virtual machine. You have to remember this isn’t just for VDI; these capabilities exist on your client Hyper-V as well.

Guests can be Windows 10, Windows Server 2012 R2 / 2016 and Linux, on generation 1 and 2 VMs.

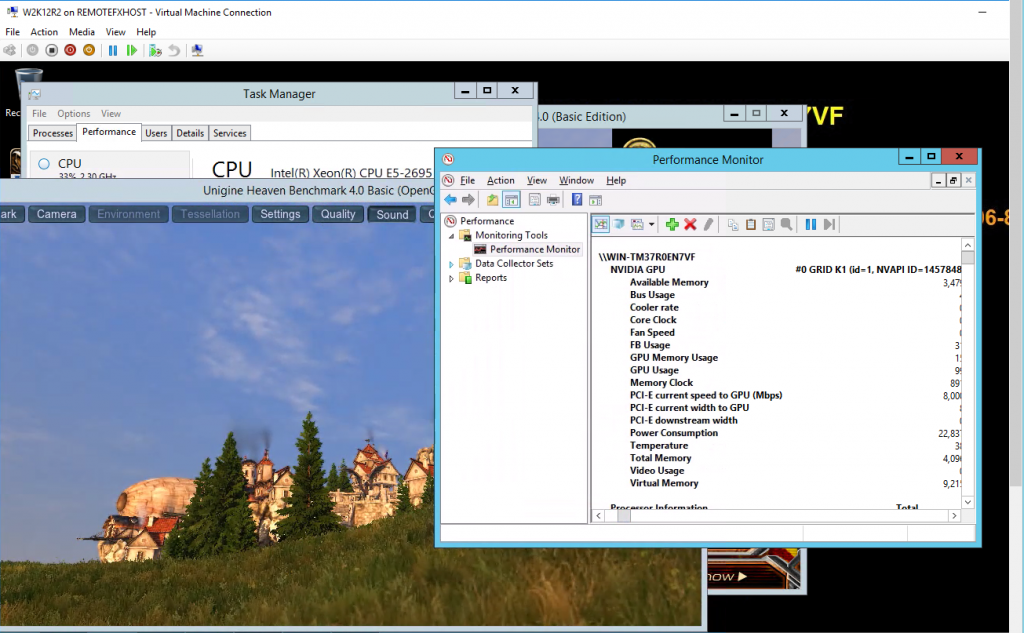

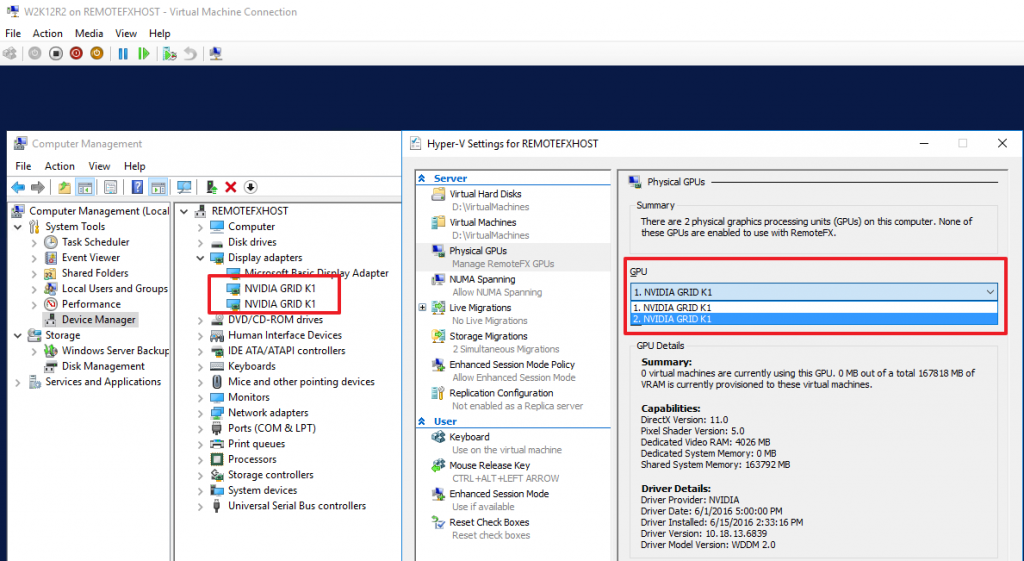

Figure 1: DDA of an NVIDIA GRID GPU in a Windows Server 2012 guest running on a Windows Server 2016 host.

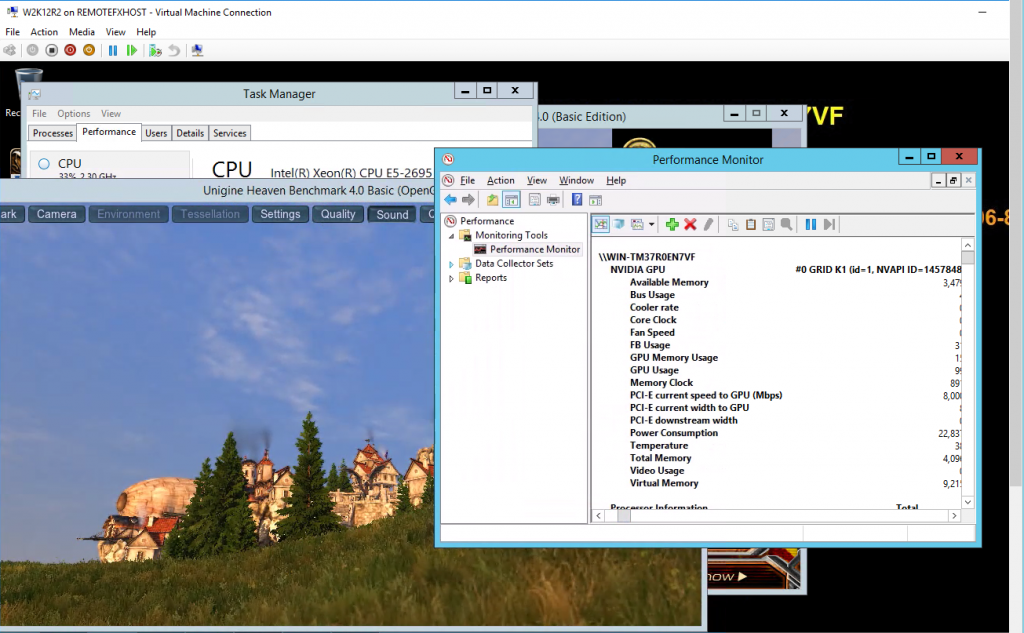

Now I recently acquired an Intel NVMe disk to do some lab work with and to help me deal with optimizing performance with nested virtualization. I could not resist the urge to mount it in one of the DELL R730 lab servers that also has an NVIDIA GRID K1 card to experiment with Windows Server 2016 TPv5 – we’re serious about leveraging our investment in the Windows operating system – what can I say 🙂 .

An Intel NVMe disk mounted in a DELL R730 next to an NVIDIA GRID K1 GPU card.

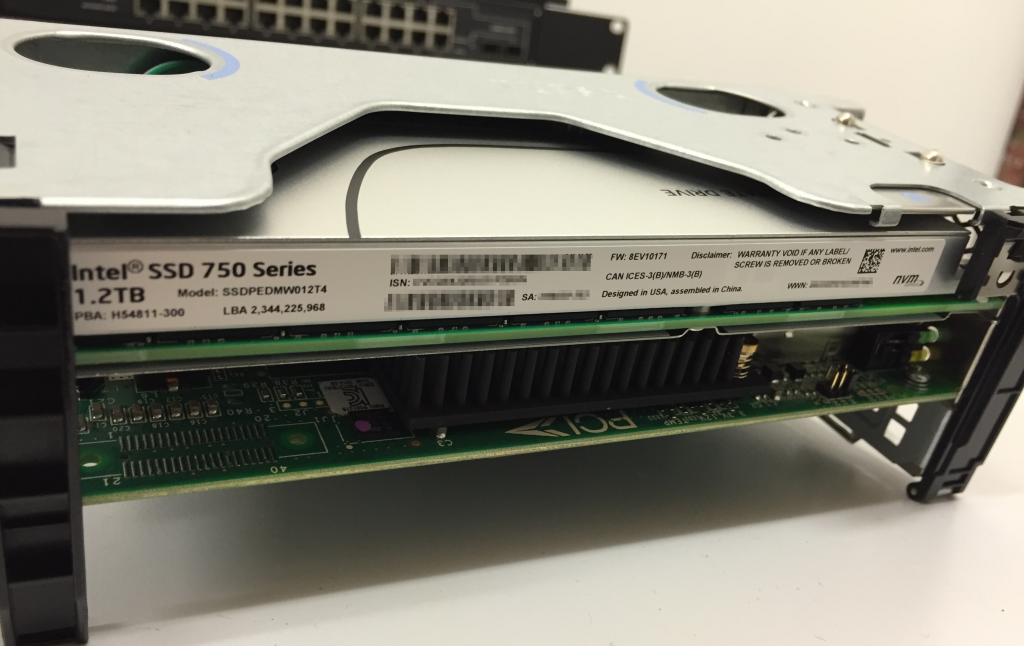

To illustrate some of the points I mentioned even more, take a look at the next figure. It shows a generation 2 virtual machine running Windows Server 2012 R2 that has an NVIDIA GRID K1 GPU as well as an Intel NVMe disk assigned via DDA. Note the OpenGL workload, by the way.

A Windows Server 2012 R2 generation 2 virtual machine leveraging DDA with an NVIDIA GRID K1 GPU and an Intel NVMe disk.

This single screenshot demonstrates a lot of the new capabilities in Windows Server 2016 as the virtual machine OS VHDX is running on Storage Spaces Direct. The only thing not set up in this demo is Storage Replica.

So which of these options should you use? That’s simple. You should use what you need. It’s allowed to have multiple needs, so in that case you can use multiple solutions.

It’s perfectly fine to deploy your VMs without a RemoteFX GPU, with a RemoteFX GPU or with a DDA GPU. There’s nothing stopping you from mixing these VMs on a host. It all depends on the needs. While you don’t have to over-provision gigantically, do not ever make the mistake of cutting corners to save the budget. A saved budget on a failed project is a loss all over.

You do have to realize that many use cases today, if not most of them, will require RemoteFX. The graphical demands of even “ordinary knowledge workers” have become pretty high, and without any GPU support the experience might very well not be a good one. This is important. Dissatisfied users with bad experiences are the killer of many VDI projects. You cannot “save” on CPU, memory, storage or graphics. You’ll want 2-4 cores, a minimum of 8GB of memory, SSDs to store the VHDX files and GPUs for RemoteFX. When it comes to Discrete Device Assignment (DDA), this is an option for the most demanding workloads.

The biggest benefit of DDA is that you pass on the native capabilities of the hardware to the virtual machine and that the device is dedicated to that virtual machine. If you do a simple comparison between a VHDX on Storage Spaces leveraging a bunch of SSDs and a single NVMe disk assigned to that virtual machine, the difference won’t be noticeable. But when you fire up another 20 virtual machines on Storage Spaces with a decent IO workload, you might start to notice.
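As a back-of-the-envelope aid for that sizing advice, here is a minimal Python sketch that estimates how many desktops fit on one host. The per-desktop figures come from the guidance above; the `vcpus_per_core` ratio and the `host_reserved_gb` reserve are assumptions of mine that you should replace with your own measurements, and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DesktopProfile:
    """Per-desktop resources; defaults follow the low end of the guidance."""
    vcpus: int = 2
    memory_gb: int = 8


def max_desktops(host_cores: int,
                 host_memory_gb: int,
                 profile: DesktopProfile = DesktopProfile(),
                 vcpus_per_core: float = 1.0,   # assumption: no CPU oversubscription
                 host_reserved_gb: int = 0) -> int:  # assumption: memory kept for the host
    """Return how many desktops fit, limited by the scarcer of CPU and memory."""
    if host_cores <= 0 or host_memory_gb <= 0 or vcpus_per_core <= 0:
        raise ValueError("host resources and ratio must be positive")
    by_cpu = int(host_cores * vcpus_per_core) // profile.vcpus
    by_memory = (host_memory_gb - host_reserved_gb) // profile.memory_gb
    return max(0, min(by_cpu, by_memory))


if __name__ == "__main__":
    print(max_desktops(32, 256))                        # 2 vCPU desktops
    print(max_desktops(32, 256, DesktopProfile(4, 8)))  # 4 vCPU desktops
```

The point of taking the minimum is the same as in the text: whichever resource runs out first decides the user experience, so you cannot “save” on one of them.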

The big limitation you should be aware of is that VMs with DDA devices do not support high availability through clustering and as such don’t offer failover and live migration. But you can mix clustered, highly available VMs with non-clustered VMs that have a DDA device on the same nodes in a cluster. The latter VMs with DDA just won’t be highly available or capable of failover. Pretty much like any VM today that you have not added to the cluster but that is running on a cluster node.
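The rule above is easy to encode when you plan a mixed deployment. The following is a minimal Python sketch, with hypothetical names and VM labels, that splits a list of planned VMs into those that can be made highly available and those that have to stay non-clustered because they use a DDA device.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmPlan:
    """A planned VM; dda_devices lists device kinds such as "gpu" or "nvme"."""
    name: str
    dda_devices: tuple = ()


def can_be_clustered(vm: VmPlan) -> bool:
    """A VM with any DDA device cannot be a clustered, highly available VM."""
    return len(vm.dda_devices) == 0


def split_by_availability(vms):
    """Return (clustered_names, standalone_names), preserving input order."""
    clustered = [vm.name for vm in vms if can_be_clustered(vm)]
    standalone = [vm.name for vm in vms if not can_be_clustered(vm)]
    return clustered, standalone


if __name__ == "__main__":
    plan = [
        VmPlan("office-01"),
        VmPlan("cad-01", ("gpu",)),
        VmPlan("sql-01", ("nvme",)),
        VmPlan("office-02"),
    ]
    print(split_by_availability(plan))
```

Both groups can live on the same cluster nodes; the split only tells you which VMs get failover and live migration.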

Let’s look at some examples.

We can take an NVIDIA GRID card and assign one or more of the GPUs on the card for DDA while leaving the remainder for use with RemoteFX. That’s not a problem. So RemoteFX and DDA can coexist on the same host and card.

Figure: An NVIDIA GRID K1 card with 2 GPUs used for DDA to a VM while the other 2 are available for use with RemoteFX.

You could opt to use 1 GPU or 3 for DDA and the remainder for RemoteFX. It’s all pretty flexible. When you are a small engineering business this might work and be cost-effective. For larger environments you might want to go for an S2D VDI deployment dedicated to RemoteFX, which allows all VMs to be highly available as well. With the new generation of NVIDIA cards (Tesla M60 for example), scaling out RemoteFX to new heights seems possible.
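Here is a minimal Python sketch of that kind of split, purely as a planning aid. It assumes a card exposing 4 GPUs, as in the K1 example above; the GPU indexes, VM names and function name are hypothetical and do not correspond to how Hyper-V identifies devices.

```python
def plan_card(total_gpus: int, dda_vms: list) -> dict:
    """Reserve one GPU per DDA VM and leave the rest of the card for RemoteFX.

    Returns {"dda": {vm_name: gpu_index}, "remotefx": [gpu_index, ...]}.
    """
    if total_gpus <= 0:
        raise ValueError("total_gpus must be positive")
    if len(set(dda_vms)) != len(dda_vms):
        raise ValueError("each DDA VM should be listed once")
    if len(dda_vms) > total_gpus:
        raise ValueError("more DDA VMs than GPUs on the card")
    dda = {vm: index for index, vm in enumerate(dda_vms)}
    remotefx = list(range(len(dda_vms), total_gpus))
    return {"dda": dda, "remotefx": remotefx}


if __name__ == "__main__":
    # 2 GPUs go to DDA VMs, the other 2 stay available for RemoteFX.
    print(plan_card(4, ["cad-01", "cad-02"]))
    # 1 GPU for DDA, 3 for RemoteFX.
    print(plan_card(4, ["cad-01"]))
```

The sketch gives each DDA VM a single GPU for simplicity; a VM that needs more than one would need a slightly richer input.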

For extremely high performance needs you might opt for dedicated non-clustered hosts where GPUs and NVMe disks are available for DDA scenarios. It depends on the scale of the needs and of the business.

You can use a DDA NVMe disk in a high performance VM that consumes whatever vCPUs it needs to drive the IOPS to that disk. If that VM also needs GPU capabilities, you can choose to leverage RemoteFX or DDA for GPU pass-through. As you have seen, it’s quite possible to use DDA to assign multiple devices of different types to a VM.

The message here is really that you don’t have to deploy all the VMs in the same manner. Whatever combination you choose, you just need to be aware of the benefits and limitations. It’s early days yet, but I’ll be keeping a keen eye on what happens in that space over the next 12 months.

Storage Is Included & Enhanced

All the above is true no matter what storage solution you use. When it comes to hyper-converged storage solutions you can now use the native Windows Server 2016 solution, Storage Spaces Direct (S2D). You can get yourself 4 nodes with the hardware you need to deploy a highly performant S2D solution.

Figure by Microsoft: Storage Spaces Direct – hyper-converged

By adding NVIDIA GRID cards to those nodes you can leverage both RemoteFX and DDA.

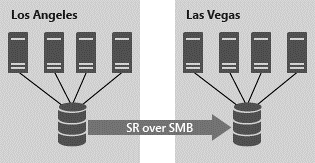

On top of that you can implement Storage Replica to replicate the volumes to another S2D deployment for disaster recovery. If you use non-clustered hosts you can also do server to server Storage Replica.

Figure by Microsoft: Cluster to Cluster Replication

Now do note that the DDA devices on other hosts, and as such also on other S2D clusters, have other IDs, and the VMs will need some intervention (removal, cleanup & adding of the GPU) to make them work. This can be prepared and automated, ready to go when needed. When you leverage NVMe disks inside a VM, the data on them will not be replicated this way. You’ll need to deal with that in another way if needed, such as replicating the NVMe disk in the guest to an NVMe disk on a physical node in the standby S2D cluster. This would need some careful planning and testing. It is possible to store data on an NVMe disk and later assign that disk to a VM. You can even do Storage Replica between virtual machines, as long as you make sure the speed & bandwidth to do so are there. What is feasible and will materialize in real life remains to be seen, as what I’m discussing here are already niche scenarios. But the beauty of testing & labs is that one can experiment a bit. Homo ludens: playing is how we learn and understand.
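To give an idea of what “prepared and automated” could look like, here is a minimal Python sketch that turns a prepared device mapping into an ordered list of remove and add steps per VM. It only builds the plan; actually executing the steps against Hyper-V is left out. The device identifiers, VM names and step labels are all hypothetical placeholders, not real device IDs or commands.

```python
def build_failover_plan(vm_devices: dict, device_map: dict) -> list:
    """Build ordered (action, vm, device_id) steps for the standby site.

    vm_devices: VM name -> list of device IDs assigned at the primary site.
    device_map: primary device ID -> prepared device ID at the standby site.
    """
    steps = []
    for vm in sorted(vm_devices):
        for old_id in vm_devices[vm]:
            new_id = device_map.get(old_id)
            if new_id is None:
                # Fail early: every DDA device needs a prepared replacement.
                raise KeyError(f"no standby device prepared for {old_id!r} (VM {vm})")
            steps.append(("remove", vm, old_id))  # drop the stale assignment
            steps.append(("add", vm, new_id))     # assign the standby device
    return steps


if __name__ == "__main__":
    assigned = {"cad-01": ["siteA/gpu0"], "sql-01": ["siteA/nvme0"]}
    prepared = {"siteA/gpu0": "siteB/gpu2", "siteA/nvme0": "siteB/nvme1"}
    for step in build_failover_plan(assigned, prepared):
        print(step)
```

Raising an error for an unmapped device is a deliberate choice: it is better to find out during a test run that a replacement was never prepared than during an actual failover. Keep in mind that the plan only re-points the device assignment; the data on a DDA NVMe disk still has to be handled separately.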

Conclusion

These scenarios demand knowledge and the capability to deploy and support such a solution. In an all in-box solution it’s sometimes the case that these skills are all sourced in-house. This provides freedom and flexibility at the cost of the responsibility to make it all work. This is perhaps the biggest contrast with commercial hyper-converged solutions that deliver the support for you but might put restrictions on what you can do. In those scenarios the skills need to be sourced externally, and then you also need to make sure the solution doesn’t “only” support the hyper-converged storage deployment but also supports GPU cards to use with RemoteFX and DDA.

Those technologies also require some engineering skills to deploy, tune and support. Some vendors might very well be more suited to support you or offer an affordable storage solution that suits your needs and scale better. It’s up to you to do your due diligence and find out. All I’ve done here is share some lab work and experiments with you at a high level, which help me better understand the capabilities & possibilities they offer. I do notice a keen interest from integrators in supporting S2D solutions, where needed enhanced with some tooling to make life easier on top of the OEM offerings. It’s early days yet, but the possibilities are there.

We’ve never had more and better capabilities available than in Windows Server 2016 to deploy a VDI solution that can cover a very large percentage of the needs you might find inside an organization. The cost savings can be very significant without sacrificing capabilities when it comes to dedicated persistent VDI. This, in my opinion, is the deployment model that is the best fit for the majority of companies that until now have not implemented any form of VDI due to the cost and the fact that it only serves too small a subset of their needs. It basically provides the benefits of VDI with the capabilities and experience of a physical desktop deployment. Interesting times are ahead of us, that’s for sure.

You might also have noticed that many of these capabilities are now available in Azure and you have the option to choose, depending on the company’s needs, where to deploy these solutions. This is progress and will help people adopt cloud when it suits them. All this while having the same capabilities – feature wise – on-premises and in Azure. The future looks promising.