I am going to try to address a few issues I have seen quite a lot in my virtualization career. It is not that you have to take extra care when virtualizing, but your virtual environment will never be better than the foundation you build it on. The reason you do not see that many people fuss about this in non-virtualized environments (anymore) is, I believe, that resources are in abundance today. Well, they were ten years ago as well, and since then we have only seen higher and higher specifications on server hardware. That abundance was the reason for starting to virtualize in the first place. Do not get me wrong – lots of people care about the performance of their virtual and physical environments. Yet some have not set themselves up for a successful virtualization project. Let me elaborate…

1. Hardware matters

This is your foundation! Servers, network, and storage – the holy trinity of every IT system and virtualized environment. I do not care if you choose vendor A or vendor B. What I care about is that you have thought it through and made your choices based on your needs. For a server, specifications of course matter, but manageability could also be a valid reason for not choosing a given vendor. You will have a hard time realizing the benefit of virtualization if you have to work hard to manage the physical servers and tailor them to your shop. Likewise, network and storage matter. Are you going to choose large monolithic systems that can do a million things you might not need, or are you buying a system tailored to your use case? I am not pitching one kind of system over the other. However, if you need to move large amounts of traffic over the network, two 10Gb/s ports might be better and cheaper than servers with eight or more 1Gb/s network adapters, even though it might seem more expensive to begin with.
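As a rough back-of-the-envelope sketch of the network example above: the port counts come from the text, while the 64 GB transfer size and the assumption that a single transfer is carried by one port are mine, chosen only for illustration. Protocol overhead and pricing are ignored.

```python
def aggregate_gbps(port_count: int, port_speed_gbps: float) -> float:
    """Theoretical aggregate bandwidth of identical ports, in Gb/s."""
    return port_count * port_speed_gbps


def transfer_seconds(size_gigabytes: float, link_gbps: float) -> float:
    """Idealized time to move data over one link (no protocol overhead)."""
    return size_gigabytes * 8 / link_gbps


# Port layouts taken from the text.
two_by_ten = aggregate_gbps(2, 10)
eight_by_one = aggregate_gbps(8, 1)
print(f"2 x 10Gb/s: {two_by_ten:.0f} Gb/s aggregate")
print(f"8 x 1Gb/s:  {eight_by_one:.0f} Gb/s aggregate")

# Hypothetical 64 GB transfer, assumed to travel over a single port.
size_gb = 64
print(f"{size_gb} GB over one 10Gb/s port: {transfer_seconds(size_gb, 10):.1f} s")
print(f"{size_gb} GB over one 1Gb/s port:  {transfer_seconds(size_gb, 1):.1f} s")
```

Even before cabling, switch ports, and management effort are counted, the two faster ports offer more aggregate bandwidth and a much shorter time for any single large transfer.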

Know what you need and design after that. Every aspect of the trinity needs to be set up correctly to perform optimally. Let me give you an example. The physical host's BIOS settings matter more than you might think! For example, what do you expect a host that has suffered a PSOD (Purple Screen of Death) to do? Restart and go back into the production environment without a known root cause, or wait until someone has time to look at the error and correct it before letting it back into production? I am a firm believer in the latter. What you might find more important are the performance-related settings. Have the memory settings been set up correctly, so the hypervisor has a chance of understanding the physical memory topology? Last example: how are the power settings on the physical CPU? Does the processor shut down individual cores? Does it throttle the speed of cores? How is turbo boost handled? Is it even active?
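One way to keep track of questions like these is to write the answers down as a baseline and compare each host against it. The sketch below is only an illustration: the setting names and values are made up and do not match any vendor's BIOS or any tool's API, and the baseline values are examples rather than recommendations.

```python
# Hypothetical baseline. Names and values are invented for illustration.
BASELINE = {
    # Keep a crashed host out of production until the root cause is known.
    "auto_restart_after_crash": False,
    "power_profile": "max_performance",
    "turbo_boost": True,
    # Interleaving off so the hypervisor can see the memory topology.
    "numa_node_interleaving": False,
}


def drift(actual: dict, baseline: dict = BASELINE) -> dict:
    """Return {setting: (actual, expected)} for every deviating setting."""
    return {
        name: (actual.get(name), expected)
        for name, expected in baseline.items()
        if actual.get(name) != expected
    }


# Hypothetical settings as collected from one host.
host_settings = {
    "auto_restart_after_crash": True,
    "power_profile": "balanced",
    "turbo_boost": True,
    "numa_node_interleaving": False,
}

for name, (found, expected) in drift(host_settings).items():
    print(f"{name}: found {found!r}, expected {expected!r}")
```

How the settings are collected depends entirely on the server vendor's tooling, which is why that part is left out here.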

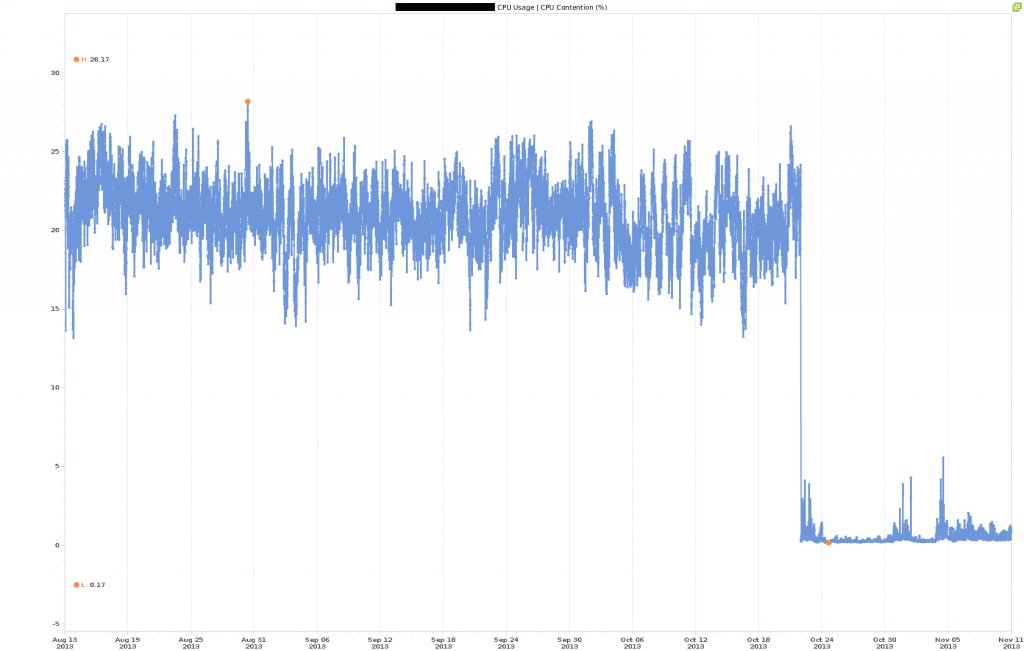

I have borrowed this performance chart from another blog post I did around BIOS settings. Note that before the BIOS settings were changed, CPU contention was around 20% on average, with peaks of around 28%. After the change it was only a few percent on average, with peaks as high as 6%. A huge improvement.

Source: https://michaelryom.dk/hp-bios-settings

At that time, the environment had around 70 hosts and 800 VMs, which ran sluggishly. Fast forward a few years and the same environment had 125 hosts, but 3500 VMs. That’s roughly an 80% increase in hosts, but more than four times as many VMs (about a 340% increase). That is the difference between installing and designing a virtual environment! May I add that the ROI just got so much better! Frankly speaking, 30-50 hosts were heavily underutilized due to FUD and application configuration issues.
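The arithmetic behind those numbers can be checked with a few lines. The host and VM counts are the approximate figures from the text; the helper names are my own.

```python
def percent_increase(before: float, after: float) -> float:
    """Relative growth from before to after, in percent."""
    return (after - before) / before * 100


def vms_per_host(vms: int, hosts: int) -> float:
    """Average consolidation ratio."""
    return vms / hosts


hosts_before, vms_before = 70, 800
hosts_after, vms_after = 125, 3500

print(f"Host growth: {percent_increase(hosts_before, hosts_after):.1f}%")
print(f"VM growth:   {percent_increase(vms_before, vms_after):.1f}%")
print(f"VMs per host before: {vms_per_host(vms_before, hosts_before):.1f}")
print(f"VMs per host after:  {vms_per_host(vms_after, hosts_after):.1f}")
```

The consolidation ratio is the number to watch: it goes from roughly 11 VMs per host to 28, on hardware that was previously running sluggishly.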

2. Symmetry is essential

A cluster needs to be uniform! Hardware configurations need to be uniform! It makes no sense to have one network adapter running at 1Gb/s and the other running at 10Gb/s. It can work, if well designed, but if something breaks, like the 10Gb/s network adapter, you are stuck with a slow network, which will affect backup times, vMotion times, and your business users or customers. Also, non-uniform cluster configurations tend to suffer from inconsistent performance, which can make troubleshooting a nightmare.

I understand that not all businesses have large budgets, which can make it hard to follow a hardware refresh cycle and buy all new hardware at the same time. Even so, CPU, memory, and network configuration should be the same to avoid too many problems with performance predictability and the consequences of hardware failures. Symmetrical and standardized settings and configurations are what make automation possible.
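Uniformity is also easy to check once the inventory is in a machine-readable form. The following is a minimal sketch, assuming a hand-written inventory; the host names, field names, and values are hypothetical and not taken from any real environment or API. It flags every host that differs from the majority of the cluster.

```python
from collections import Counter

# Hypothetical cluster inventory, for illustration only.
HOSTS = [
    {"name": "host-01", "cpu_model": "cpu-a", "memory_gb": 512,
     "nic_speeds_gbps": (10, 10)},
    {"name": "host-02", "cpu_model": "cpu-a", "memory_gb": 512,
     "nic_speeds_gbps": (10, 10)},
    {"name": "host-03", "cpu_model": "cpu-a", "memory_gb": 512,
     "nic_speeds_gbps": (1, 10)},
]

CHECKED_KEYS = ("cpu_model", "memory_gb", "nic_speeds_gbps")


def find_outliers(hosts, keys=CHECKED_KEYS):
    """Return {host name: {key: (actual, majority value)}} for odd hosts."""
    outliers = {}
    for key in keys:
        # The most common value in the cluster is treated as the standard.
        majority, _count = Counter(h[key] for h in hosts).most_common(1)[0]
        for host in hosts:
            if host[key] != majority:
                outliers.setdefault(host["name"], {})[key] = (host[key], majority)
    return outliers


for name, diffs in find_outliers(HOSTS).items():
    for key, (actual, majority) in diffs.items():
        print(f"{name}: {key} is {actual}, cluster majority is {majority}")
```

Treating the majority as the standard is a shortcut. With a written-down standard you would compare against that instead.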

3. KISS – Keep It Simple Stupid

When designing, it is not a matter of using all the features. It is a matter of using the features that are needed and that will enable the end goal, whatever that may be. Remember, complexity is not your friend – that is why I think you should make it as simple as possible. With complexity also comes lack of visibility, lack of understanding, and prolonged time to resolution. So remember when designing: if you cannot tie what you are designing to a requirement from the business, you are not designing in the best interest of your business, and you might be adding unnecessary complexity to the solution.

Let me recap.

- Make sure your foundation is good to build upon

- Make it uniform and standardized

- KISS and avoid complexity

I know this is not anything new or big – but still, I walk into businesses where throwing more hardware at the problem seems to be the norm, or where they forget to optimize what they have and instead build complex structures of resource pools, reservations, and limits. All to achieve what they could have had without them, if they had just slowed down and built the solution correctly from the ground up. So next time we meet, I hope it is because everything is running great and you are starting to realize that the true value of a virtualized environment starts when not only servers are virtualized, but network and storage become part of it. This is the true enabler for automation.