Introduction

Ever since Windows Server 2012 we have had SMB Direct capabilities in the OS, and Windows Server 2012 R2 added more use cases such as live migration. In Windows Server 2016, even more workloads leverage SMB Direct, such as S2D and Storage Replica. SMB Direct leverages the RDMA capabilities of a NIC, which deliver high throughput at low latency combined with CPU offloading to the NIC. The latter saves CPU cycles for the other workloads on the hosts, such as virtual machines.

Traditionally, in order for SMB Direct to work, the SMB stack needs direct access to the RDMA NICs. This means that right up to Windows Server 2012 R2 we had SMB Direct running on physical NICs on the host or the parent partition/management OS. You could not have RDMA exposed on a vNIC or even on a host native NIC team (LBFO). SMB Direct was also not compatible with SR-IOV. For that OS version this was, and still is, common knowledge and a design consideration. With Windows Server 2016, things changed. You can now have RDMA exposed on a vSwitch and on management OS vNICs. Even better, the new Switch Embedded Teaming (SET) allows for RDMA to be exposed in the same way on top of a vSwitch. SET is an important technology in this, as RDMA is still not exposed on a native Windows team (LBFO).

As an early adopter of SMB Direct, I have always studied the technology, and pretty soon, via Mellanox user manuals such as MLNX_VPI_WinOF_User_Manual_v5.35.pdf, we found some experimental capabilities in Windows Server 2016. I’m talking about the ability to expose RDMA to a VM vNIC. This is not supported in any way right now. But it can be done, imperfect and tedious as it is. The Mellanox document gives us the information on how to do so. What follows is a glimpse into a possible future via experimentation. Again, this is NOT supported and is still limited in what you can do. But it sure is interesting. I have a couple of use cases for this and I’d love to get some POCs lined up in the future if Microsoft adds this as a fully functional and supported solution.

Join me in the lab! Remember that this is buggy, error-prone, sometimes frustrating early poking around, and you should never ever do this on production servers.

Putting the power of Mellanox RoCE RDMA into a virtual machine is just too tempting not to try.

Setting it up

Let’s start with some prerequisites we need to take care of. We need a Hyper-V host that supports SR-IOV. A DELL R730 served us well here. We also need NICs that support SR-IOV and RDMA. For that purpose, we used Mellanox ConnectX-3 Pro cards. The OS has to be Windows Server 2016 on both the parent partition and in the guest VMs. At the moment of writing, I still need to get some lab time on this with RS3.

Make sure you have SR-IOV enabled on the host or parent partition. That means that it’s set in the BIOS and enabled on the RDMA NICs.
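A quick way to confirm this from PowerShell on the host is sketched below. It assumes the Hyper-V PowerShell module is installed and that the RDMA NIC is called MyRDMANIC, which is only a placeholder name; substitute your own adapter name.

```powershell
# Does the platform (BIOS, chipset, OS) support SR-IOV at all?
# IovSupportReasons lists what is missing when IovSupport is False.
Get-VMHost | Format-List IovSupport, IovSupportReasons

# Is SR-IOV supported and enabled on the physical RDMA NIC?
# "MyRDMANIC" is a placeholder adapter name.
Get-NetAdapterSriov -Name "MyRDMANIC" |
    Format-List Name, SriovSupport, Enabled, NumVFs

# If the NIC supports SR-IOV but it is switched off in the driver settings:
Enable-NetAdapterSriov -Name "MyRDMANIC"
```

If IovSupport reports False, fix the BIOS or firmware settings first; nothing further down will work without it.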

On every host where you want RDMA in the guest, add the registry DWORD value “EnableGuestRdma” under the HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\mlx4_bus\Parameters key and set it to “1” (enabled). After you have done this, disable and re-enable the NIC in Device Manager to have the setting take effect.

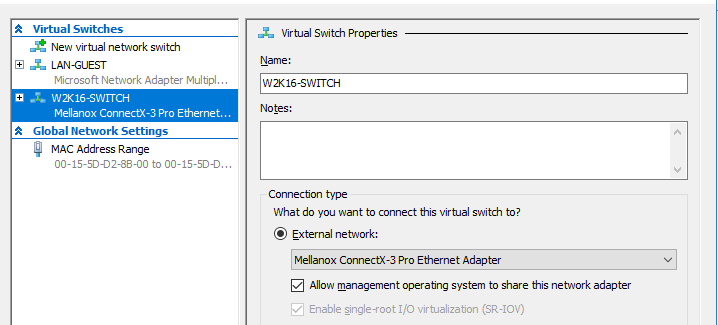
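The same registry change can be scripted, which helps when several hosts are involved. This is a minimal sketch to run in an elevated PowerShell session on the host. The adapter name MyRDMANIC is a placeholder, and it is an assumption on my part that Restart-NetAdapter is enough for the driver to pick up the value; if it is not, disable and re-enable the device in Device Manager as described above.

```powershell
# Registry location of the Mellanox bus driver parameters (from the text above).
$path = 'HKLM:\SYSTEM\CurrentControlSet\Services\mlx4_bus\Parameters'

# Create (or overwrite) the DWORD value that enables guest RDMA.
New-ItemProperty -Path $path -Name 'EnableGuestRdma' -PropertyType DWord -Value 1 -Force

# Read the value back to confirm it is set.
Get-ItemProperty -Path $path -Name 'EnableGuestRdma'

# Disable and re-enable the NIC so the setting takes effect.
# "MyRDMANIC" is a placeholder adapter name.
Restart-NetAdapter -Name 'MyRDMANIC'
```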

Then create a vSwitch with SR-IOV enabled as follows:

    New-VMSwitch W2K16-Switch -NetAdapterName MyRDMANIC -EnableIov $true
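It is worth checking that the vSwitch really came up with SR-IOV active, since IOV can only be enabled when the vSwitch is created and not afterwards. A small check, using the switch name from the command above:

```powershell
# IovEnabled shows what was requested, IovSupport whether it actually works.
# IovSupportReasons explains why not when IovSupport is False.
Get-VMSwitch -Name "W2K16-Switch" |
    Format-List Name, IovEnabled, IovSupport, IovSupportReasons,
                IovVirtualFunctionCount, IovVirtualFunctionsInUse
```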

Note: this works with SET, but it’s limited in various ways. At the time of writing, Guest RDMA only works on one Mellanox port. With a SET vSwitch of more than one member you’ll have to map the vmNIC to port 1 of the Mellanox NIC via Set-VMNetworkAdapterTeamMapping, like this: Set-VMNetworkAdapterTeamMapping -VMNetworkAdapterName "Network Adapter" -VMName VM1 -PhysicalNetAdapterName "My Physical NIC". For this reason, I have opted not to use SET in this demo and to focus on SMB Direct in the guest without the distractions of SET for now.
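For those who do want to try the SET variant, the steps could look roughly like the sketch below. The switch name, VM name and physical adapter names are all placeholders, and I have not verified this exact sequence for guest RDMA; treat it as a starting point for lab work only.

```powershell
# Hypothetical two-member SET vSwitch with SR-IOV enabled.
# "NIC1-Port1" and "NIC2-Port1" are placeholder physical adapter names.
New-VMSwitch -Name "SET-Switch" -NetAdapterName "NIC1-Port1", "NIC2-Port1" `
    -EnableEmbeddedTeaming $true -EnableIov $true

# Pin the VM's vNIC to the team member that sits on port 1 of the Mellanox NIC.
Set-VMNetworkAdapterTeamMapping -VMName "VM1" -VMNetworkAdapterName "Network Adapter" `
    -PhysicalNetAdapterName "NIC1-Port1"

# Confirm the mapping.
Get-VMNetworkAdapterTeamMapping -VMName "VM1"
```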

Deploy a VM (64-bit, Windows Server 2016, just like the host, fully updated) and create a vNIC that you attach to your SR-IOV enabled vSwitch.

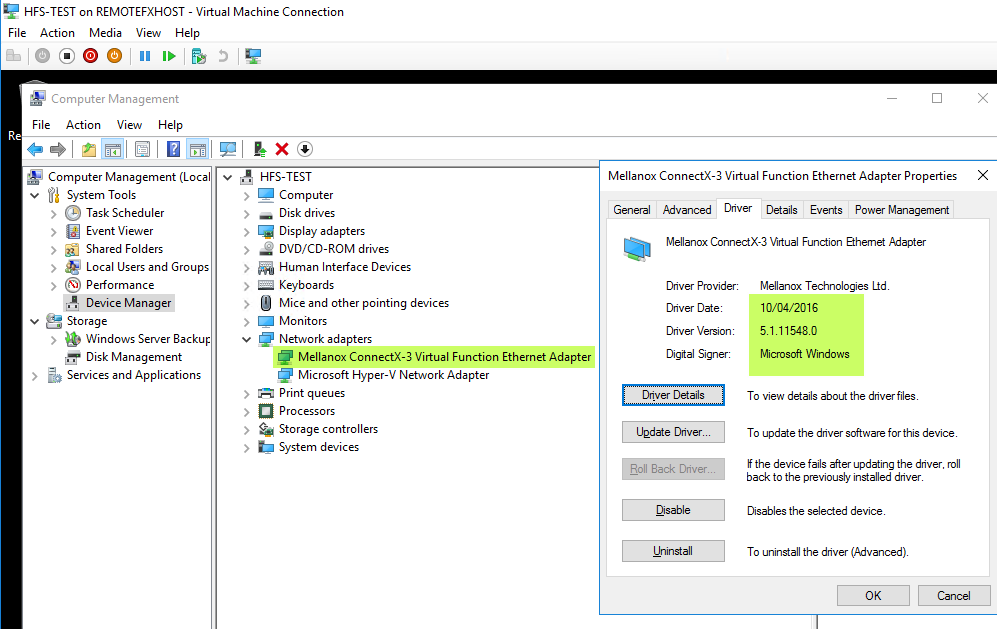
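The same can be done from PowerShell on the host. In this sketch VM1 is a placeholder VM name, and "Guest RDMA vNIC" is the vNIC name as it appears in the VM settings on the host; the adapter name seen inside the guest OS is set separately in the guest.

```powershell
# Add a vNIC to the VM and connect it to the SR-IOV enabled vSwitch.
Add-VMNetworkAdapter -VMName "VM1" -SwitchName "W2K16-Switch" -Name "Guest RDMA vNIC"

# An IovWeight of 0 disables SR-IOV for the vNIC; 1 to 100 requests a virtual function.
Set-VMNetworkAdapter -VMName "VM1" -Name "Guest RDMA vNIC" -IovWeight 100

# Once the VM is running, check the result.
Get-VMNetworkAdapter -VMName "VM1" -Name "Guest RDMA vNIC" |
    Format-List Name, SwitchName, IovWeight, Status
```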

Boot your VM, log in and take a look in Device Manager under Network adapters and System devices. As you can see, we find a Mellanox ConnectX-3 Virtual Function Ethernet Adapter, for which Windows Server 2016 in the VM has in-box drivers.

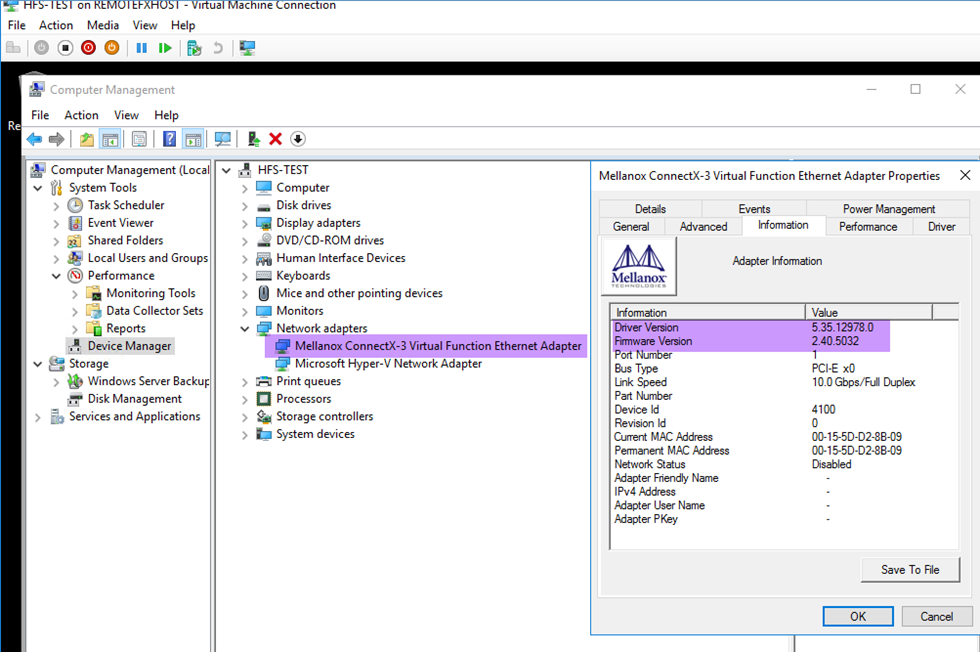

For the best results, we opt to install the Mellanox drivers in the guest as well. I’m keeping the parent partition and the guest partition at the same driver versions.

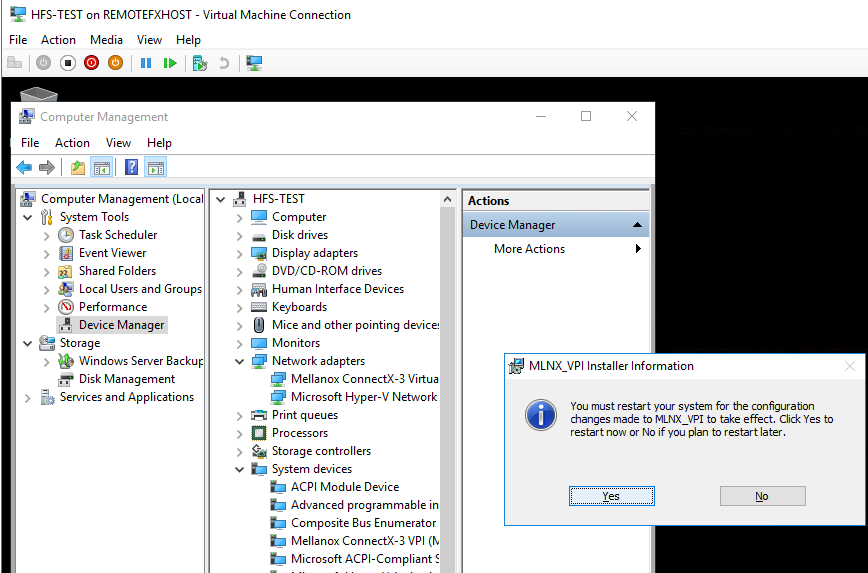
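To compare driver versions between the parent partition and the guest, the same command can be run in both. This is a small sketch; the wildcard filter on "Mellanox" is simply my assumption about how the adapters are described on your systems.

```powershell
# Run this on the host and inside the VM, then compare DriverVersion.
Get-NetAdapter |
    Where-Object InterfaceDescription -like "*Mellanox*" |
    Format-List Name, InterfaceDescription, DriverProvider, DriverVersion, DriverDate
```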

I restart the VM for good measure and voila … the Mellanox driver shows up

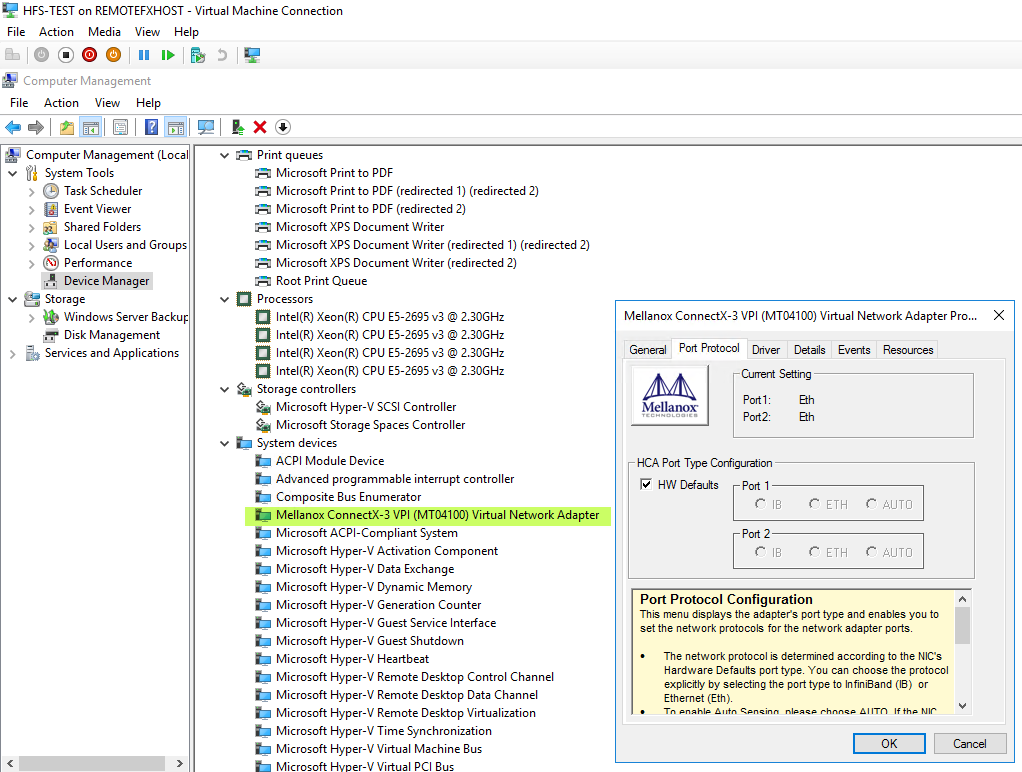

and in the System devices for the Mellanox ConnectX-3 VPI (MT04100) Virtual Network Adapter we see more of the Mellanox menus.

Between those two devices, we can control any aspect of the rNIC that’s exposed and available to the VM. The only things we deal with at the hypervisor level for the VM are the VLAN tagging and some RDMA configuration options that are not controlled from within the VM.

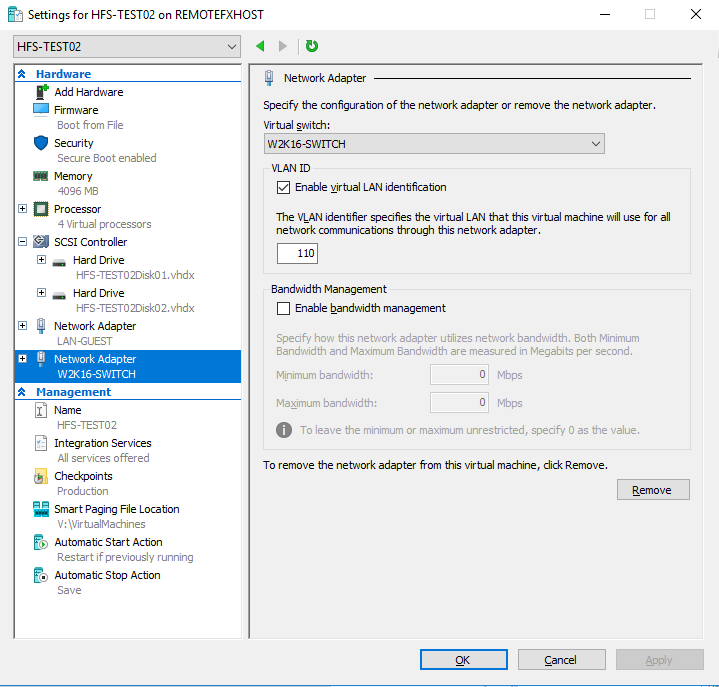

For the demo, we actually created a virtual machine with 2 vNICs. It has one “normal” LAN NIC connected to a standard vSwitch and one vRDMA NIC connected to the SR-IOV enabled vSwitch.

In the VM settings, this looks like the figure below.

As you can see, we tag the VLAN on the vRDMA NIC in the vNIC settings. So now it’s time to set up RDMA in the guest itself. The Mellanox counters themselves are not yet ready for this scenario in the guest, so I cannot use those to show you priority tagging at work or any other Mellanox counter goodies. We will use the native Windows RDMA Activity performance counters and the SMB Direct Connection counters to check traffic in the guest. On the parent partition, we can follow what’s happening with the Mellanox counters.

I’m not going to document setting up DCB configurations on the host here; that’s been described more than enough all over the place. My blog might be a good place to get that information. The best thing you can do before you even start to get RDMA to flow in or out of a VM is to make sure your RDMA works in the lab between the physical hosts.

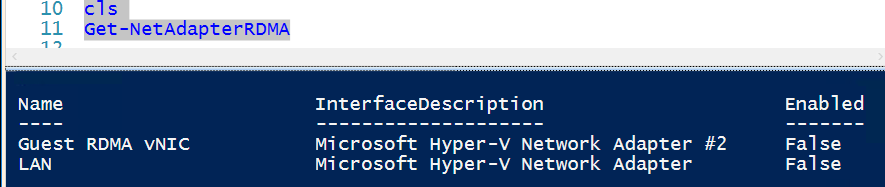
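The VLAN tag on the vRDMA NIC can also be set from the host with PowerShell. In this sketch the VM name, the vNIC name and VLAN ID 110 are all placeholders; use the VLAN your RDMA traffic runs on between the physical hosts.

```powershell
# Tag the vRDMA vNIC in access mode with the RDMA VLAN (110 is a placeholder).
Set-VMNetworkAdapterVlan -VMName "VM1" -VMNetworkAdapterName "Guest RDMA vNIC" `
    -Access -VlanId 110

# Confirm the VLAN configuration of that vNIC.
Get-VMNetworkAdapterVlan -VMName "VM1" -VMNetworkAdapterName "Guest RDMA vNIC"
```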

We must not forget to enable RDMA on the vNIC in the VM. Running Get-NetAdapterRdma shows that we still need to enable guest RDMA inside our VM.

We do this by running Enable-NetAdapterRdma on “Guest RDMA vNIC” – the one attached to our SR-IOV enabled vSwitch.

    Enable-NetAdapterRDMA -Name "Guest RDMA vNIC"

“Guest RDMA vNIC” will leverage the SR-IOV virtual function Mellanox NIC for RDMA. Running Get-NetAdapterRdma will confirm it’s enabled.
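Inside the guest, that check could look like this. It is a short sketch that assumes the adapter in the guest is named "Guest RDMA vNIC", as in the command above.

```powershell
# Enabled should be True for the vRDMA adapter.
Get-NetAdapterRdma -Name "Guest RDMA vNIC" |
    Format-List Name, InterfaceDescription, Enabled

# The SMB client should list the same interface as RDMA capable.
Get-SmbClientNetworkInterface
```

If the SMB client does not report the interface as RDMA capable, SMB Direct will not be used even though the adapter setting says enabled.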

You might have to restart the VM after any changes to get things to work. It’s all a bit finicky.

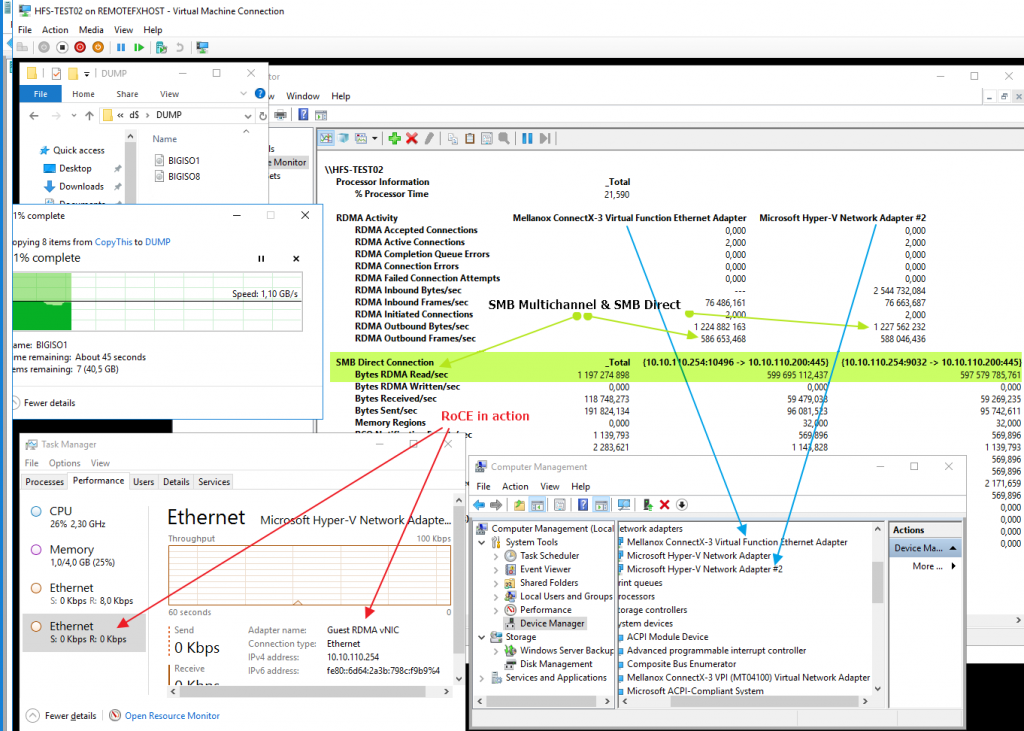

Showtime! Let’s copy some data from the guest to another host over RDMA. We use a separate physical host with RDMA enabled as the target to avoid any VM to VM magic that might bypass a vSwitch. The result in the screenshot below is RDMA to/from a virtual machine. We are copying data (large ISO files) from within the virtual machine to a physical host over RDMA. The behavior is what you expect RoCE RDMA traffic to look like: no IO to be seen in Task Manager, but clearly RDMA / SMB Direct traffic in PerfMon in the virtual machine.
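If you prefer the command line over PerfMon, the same counters can be sampled from PowerShell in the guest while the copy is running. This is a sketch: the two counter paths are the ones I would expect in the RDMA Activity set, and the first command lists what is actually present on your system so you can adjust them.

```powershell
# List the RDMA and SMB Direct counter sets and their counter paths.
Get-Counter -ListSet "RDMA Activity", "SMB Direct*" |
    Select-Object CounterSetName, Paths

# Sample RDMA throughput once per second for ten seconds during the copy.
Get-Counter -Counter "\RDMA Activity(*)\RDMA Inbound Bytes/sec",
                     "\RDMA Activity(*)\RDMA Outbound Bytes/sec" `
            -SampleInterval 1 -MaxSamples 10

# Show the active SMB Multichannel connections and whether they are RDMA capable.
Get-SmbMultichannelConnection
```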

On the physical machine, the RDMA traffic can also be visualized with the Mellanox performance counters. These counters don’t work in the VM, but this is all very early, unsupported experimenting in the lab. But hey, this is sweet! I do have use cases for this. Some industrial apps that dump vast amounts of metrics to file shares can leverage this, for example. It can also be very useful for moving data around in GIS or aerospace engineering environments. Remember that this isn’t just about speed and low latency but also about CPU offloading. Not hammering the CPUs to serve network traffic leaves more resources for the actual payloads in the VMs.

Conclusion and observations

It’s geeky cool to see RDMA traffic flow in and out of a virtual machine. It offers a glimpse at what will be possible in the future and at how some things are probably being handled for Azure workloads. The push for ever more affordable performance and throughput is still moving forward. I have several workloads and use cases where this capability interests me in order to virtualize even more workloads than ever before.

As we already stated above this is today only an experiment and not supported at all. But you get a glimpse of the future here.

The whole DCB configuration inside the guest, including NetAdapterQoS support in the guest, is still something to figure out, as it seems it’s not there for now. One of the main issues that will have to be resolved arises when (if) all the SMB Direct traffic is tagged with the same priority for PFC/ETS. When we start mixing parent partition (management OS) and guest RDMA/SMB Direct traffic over the pNICs, this will potentially lead to even more competition and congestion issues, due to ever more workloads leveraging SMB 3 over the same traffic class. The question is whether more traffic classes will be allowed in the future, noting that the number of lossless queues is limited to 2 or 3. The future will show how things evolve for RDMA, DCB and SMB Direct. Sure, 25/50/100 Gbps networking is here and will help mitigate these issues by virtue of raw bandwidth in some cases. Designs with these use cases in mind will also help. For now, we’ve just taken a look at some early capabilities that are not supported yet and that raise lots of questions on top of great prospects. The next step will be to experiment with the public previews of RS3 (Windows Server Insider Preview Builds).