Introduction

Storage performance is one of the most important parts of any server infrastructure, because the performance of mission-critical applications depends on it directly. With so many options to choose from when selecting the highest-performing storage for your host, and with plenty of finger pointing once a storage array doesn’t perform according to plan, the responsibility involved makes the decision even more difficult than it seems.

Scope

What prompted this post was blowing the dust off a long-planned virtualization project: improving the storage performance of our existing infrastructure by adding a device from the NVMe lineup.

Intel had been testing the patience of storage enthusiasts with news about its long-awaited NVMe line. When the drives finally came out, the price was not as high as everyone expected, but it still couldn’t be considered small change for your piggy bank.

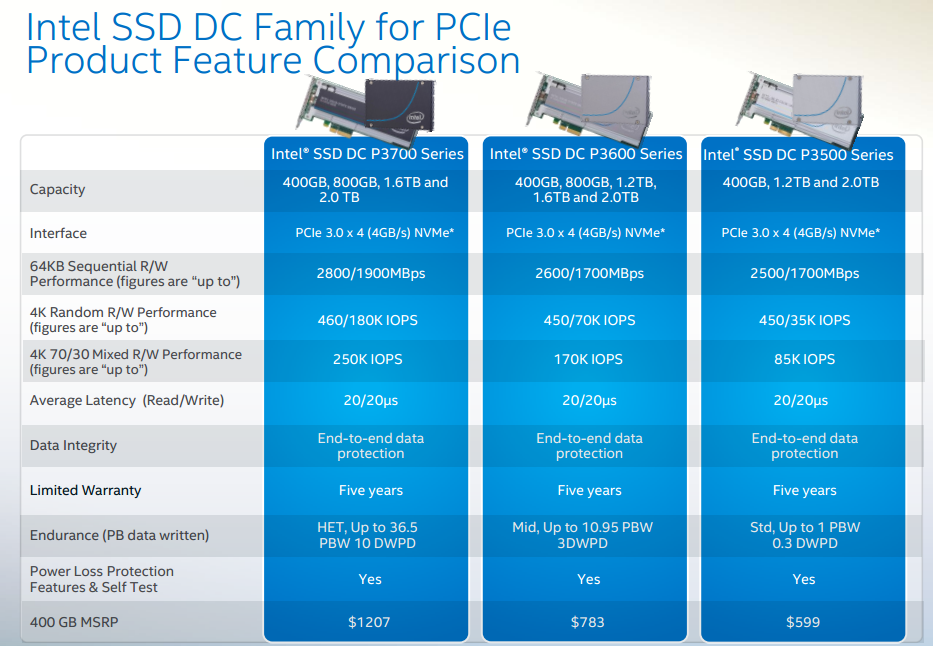

Just in case you weren’t aware, the Intel SSD DC product line boasts the following specs:

The data center-focused NVMe product line spans capacities from 400GB to a massive 2TB, with jaw-dropping performance figures specified on Intel’s website. The DC P3700 series provides the highest durability and write performance, with up to 465,000/180,000 random read/write IOPS. The sequential performance is just as great: 2,800/1,900 MB/s read/write. The DC P3700 features HET (High Endurance Technology) MLC and 10 DWPD (Drive Writes Per Day) of endurance, which adds up to 36.5 PBW (petabytes written) for the 2TB model.

All models feature protection from host power failure via capacitors. All P series SSDs feature a five-year warranty period and an MTBF (Mean Time Between Failures) of 2 million hours.
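The 36.5 PBW figure follows directly from the DWPD rating, the capacity, and the five-year warranty. Here is a minimal sketch of that arithmetic, assuming decimal units (1 PB = 1,000 TB) and 365-day years; the function name is ours and purely illustrative.

```python
def petabytes_written(capacity_tb: float, dwpd: float, warranty_years: float) -> float:
    """Total rated writes in PB over the warranty period.

    Assumes decimal units (1 PB = 1000 TB) and 365-day years.
    """
    total_tb = capacity_tb * dwpd * 365 * warranty_years
    return total_tb / 1000


if __name__ == "__main__":
    # 2TB model at 10 DWPD over the five-year warranty
    print(f"2TB model:   {petabytes_written(2.0, 10, 5):.1f} PBW")
    # The same arithmetic applied to the 800GB drive used in this benchmark
    print(f"800GB model: {petabytes_written(0.8, 10, 5):.1f} PBW")
```

By the same formula, the 800GB drive we tested would be rated for roughly 14.6 PBW, assuming it carries the same 10 DWPD rating.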

Of all the possible limitations, the one worth pointing out is the inability to configure a RAID out of NVMe drives. We will test it as soon as that becomes possible. At the moment, the only available RAID controller that is more or less compatible with NVMe is the Tri-Mode SerDes storage controller, which Broadcom says provides a 50 percent reduction in latency and a 60 percent increase in throughput compared to previous-generation products.

To fully utilize the performance of NVMe in today’s benchmark, we grabbed the single drive within arm’s reach and installed the 800GB version of the P3700 in our ESXi host.

Benchmark Configuration

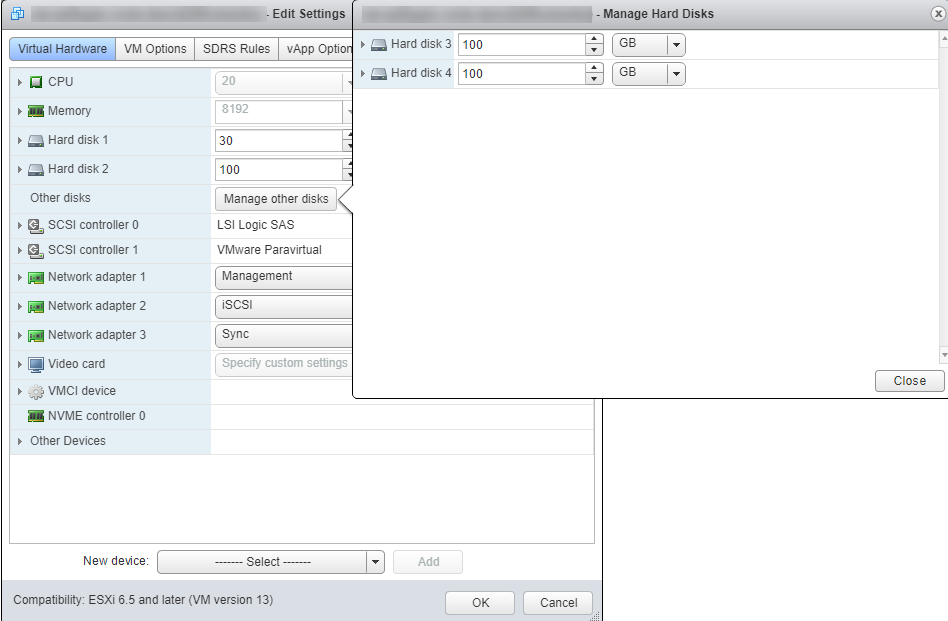

The following VM configuration was used to meet the maximum performance requirements and to stand in for a workload-intensive application:

CPU:

- 8 sockets with a single core each

- Hardware CPU/MMU Virtualization

- No Reservation/Limit/Shares applied

RAM:

- 8GB

- No Reservation/Limit/Shares

The benchmark covered the following SCSI controllers:

- pvSCSI – the driver merges (coalesces) interrupts based on outstanding IOs only, and not on throughput. This means that when the virtual machine is requesting a lot of IO but the storage is not able to deliver, the PVSCSI driver still coalesces the interrupts. This results in a performance benefit for IO-demanding applications.

- LSI – increases interrupt merging as IOs and IOPS increase. No merging is used with few IOs or low throughput. This results in efficient IO at high throughput and low-latency IO when the throughput is not as high.

In addition to that, there is something else that could set the two apart – Queue Depth limitations:

|  | PVSCSI | LSI Logic SAS |
| --- | --- | --- |
| Default Adapter Queue Depth | 256 | 128 |
| Maximum Adapter Queue Depth | 1,024 | 128 |
| Default Virtual Disk Queue Depth | 64 | 32 |
| Maximum Virtual Disk Queue Depth | 254 | 32 |

The other virtual controller we took into account was the NVMe controller. It was designed to reduce software overhead by over 50% compared to an AHCI SATA device. Because NVMe devices connect directly to the PCIe bus of the server or workstation, virtual NVMe devices reduce guest I/O processing overhead, which leads to more transactions per minute by eliminating additional sources of latency that would slow down VM performance.

The results of our benchmark should identify the best-performing virtual storage controller: the one that delivers the highest throughput, the lowest latency, and the lowest processing cost for a particular workload.

Windows Server 2012 was used to generate our benchmark results, along with a tool that has proven itself over the years: DiskSPD (https://gallery.technet.microsoft.com/DiskSpd-a-robust-storage-6cd2f223)

The following article was used for the modification of the QueueDepth within the VM in the process of benchmarking.

For comparison purposes, the differentiators worth considering were IOPS, latency, and CPU utilization. To give the comparison a tie-breaker, the following test pattern was chosen:

- 4k blocks

- Read/Write performance

- 8 threads

- 64 outstanding I/O operations

- Disabled software cache

- Duration of 10 seconds

- 10GB test file
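The parameters above map onto a DiskSpd command line roughly as sketched below. The helper name, the target path, and the write percentage are our own illustrative assumptions. `-Su` (disable software caching) is the spelling used by newer DiskSpd releases, so check `diskspd.exe -?` for the flag your version expects. The helper only assembles the argument list and leaves the execution to you.

```python
def diskspd_args(
    target: str,
    write_pct: int,
    block: str = "4K",
    threads: int = 8,
    outstanding: int = 64,
    duration_s: int = 10,
    file_size: str = "10G",
) -> list[str]:
    """Assemble a DiskSpd argument list for the test pattern described above."""
    if not 0 <= write_pct <= 100:
        raise ValueError("write_pct must be between 0 and 100")
    return [
        "diskspd.exe",
        f"-b{block}",        # block size
        f"-t{threads}",      # threads per target
        f"-o{outstanding}",  # outstanding I/Os per thread
        f"-d{duration_s}",   # test duration in seconds
        f"-w{write_pct}",    # percentage of writes (0 = read-only)
        "-Su",               # disable software caching (newer DiskSpd builds)
        "-L",                # collect latency statistics
        f"-c{file_size}",    # create the test file with this size
        target,
    ]


if __name__ == "__main__":
    # Hypothetical target path: one read-only and one write-only run.
    for pct in (0, 100):
        print(" ".join(diskspd_args(r"D:\testfile.dat", write_pct=pct)))
```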

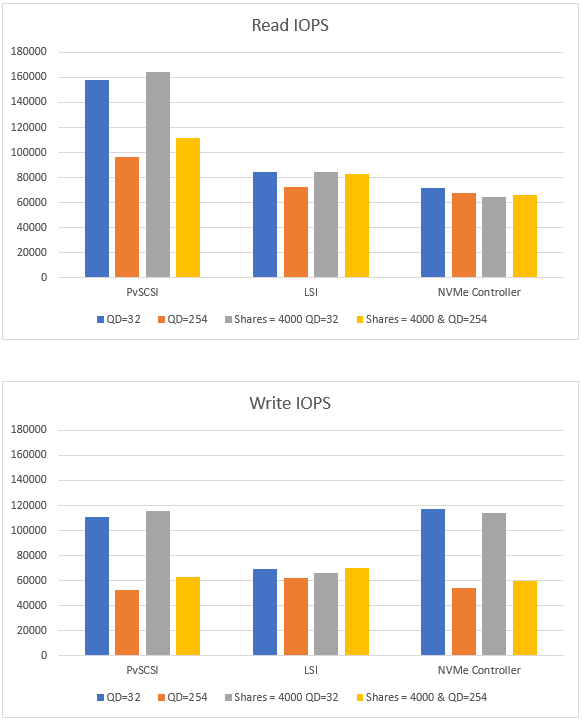

- The current comparison shows that the pvSCSI controller can shovel out a higher number of read IOPS.

- Comparing the two highest-performing controllers, pvSCSI and NVMe, it becomes clear that NVMe delivers higher write IOPS at QD32.

- In this IOPS comparison, LSI stood out as a universal controller, providing average IOPS for all workloads.

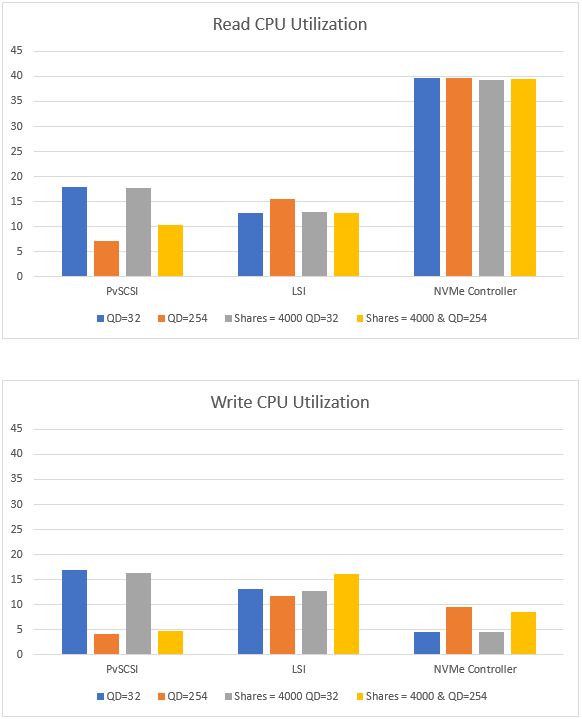

- NVMe demonstrated the highest CPU utilization on reads, without the best IOPS to show for that amount of utilization. On writes, however, it showed the lowest utilization and the highest IOPS.

- PvSCSI demonstrated the lowest CPU utilization for workloads with QD=254, and the highest for workloads with QD=32.

- LSI proved to be a good all-rounder as far as IOPS and utilization cost go.

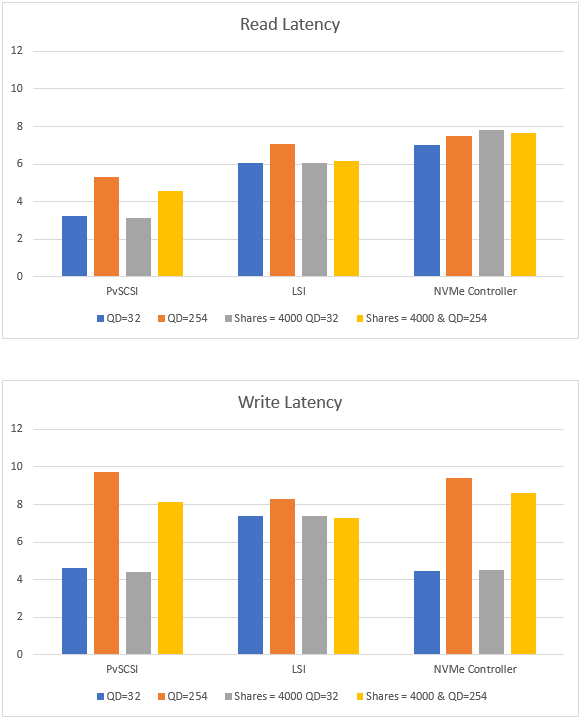

- PvSCSI showed the lowest read latency along with the highest IOPS for any type of workload.

- While PvSCSI and NVMe show near-identical write latency, both controllers also demonstrate the highest latency and the lowest IOPS for high-QD workloads.

- LSI demonstrated the highest latency while delivering average IOPS.
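When reading the latency and IOPS charts side by side, Little's Law is a handy sanity check: with a fixed number of outstanding I/Os, average latency is roughly the outstanding count divided by IOPS. The sketch below applies it to our test pattern (8 threads with 64 outstanding I/Os each). The IOPS values in the loop are illustrative placeholders, not our measured results.

```python
def mean_latency_ms(outstanding_ios: int, iops: float) -> float:
    """Little's Law rearranged: latency = outstanding I/Os / throughput."""
    if iops <= 0:
        raise ValueError("iops must be positive")
    return outstanding_ios / iops * 1000.0


if __name__ == "__main__":
    outstanding = 8 * 64  # threads x outstanding I/Os per thread
    for iops in (50_000, 100_000, 200_000):  # placeholder values, not measurements
        print(f"{iops:>7} IOPS -> {mean_latency_ms(outstanding, iops):.2f} ms")
```

This is why, at a fixed outstanding I/O count, the controller with the highest IOPS will also tend to show the lowest average latency as seen by the benchmark tool.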

Conclusion

I would like to outline how the controllers should theoretically have behaved in each benchmark.

Although PVSCSI and LSI Logic Parallel/SAS are essentially the same when it comes to overall performance capability, PVSCSI is more efficient in the number of host compute cycles required to process the same number of IOPS. This means that if you have a very storage IO-intensive virtual machine, this is the controller to choose to save as many CPU cycles as possible, which can then be used by the application or host.

The CPU utilization difference between LSI Logic SAS and PVSCSI at hundreds of IOPS is insignificant, to the point of not being measurable. At massive amounts of IO, however, where 10-50K IOPS are streaming over the virtual SCSI bus, PVSCSI can save a large number of CPU cycles.
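One way to put a number on "CPU cycles per IOPS" when comparing your own runs is to divide the achieved IOPS by the CPU utilization measured during the test. The sketch below ranks controllers by that ratio. The controller names and figures are hypothetical placeholders, not results from our benchmark.

```python
def iops_per_cpu_percent(iops: float, cpu_percent: float) -> float:
    """IOPS delivered per percentage point of CPU utilization."""
    if cpu_percent <= 0:
        raise ValueError("cpu_percent must be positive")
    return iops / cpu_percent


def rank_by_efficiency(results: dict[str, tuple[float, float]]) -> list[tuple[str, float]]:
    """Sort controllers from most to least CPU-efficient.

    `results` maps a controller name to (iops, cpu_percent).
    """
    scored = [(name, iops_per_cpu_percent(iops, cpu)) for name, (iops, cpu) in results.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    sample = {
        "controller_a": (50_000, 20.0),
        "controller_b": (50_000, 25.0),
    }
    for name, score in rank_by_efficiency(sample):
        print(f"{name}: {score:.0f} IOPS per CPU %")
```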

To summarize, our benchmarks have shown which of the vSCSI controllers is the best option for the most common virtualization workloads. Hopefully you have now picked the SCSI controller that suits your particular workload. If not, take your time specifying and benchmarking each one.