Introduction

Imagine an environment that has many hundreds of virtual machines to back up, every day, multiple times a day, with no exceptions. These virtual machines live on all-flash arrays. They vary from small to large in CPU, memory, and storage. They are diverse in their content, and quite a lot of them contain data that does not deduplicate well. What is also important is that the organization has severe rack unit constraints, both in number and in cost. So they need a solution with a small footprint.

They want to have concurrent backup jobs running without observing noticeable slowdowns in throughput. Next to that, they also need the ability to do multiple restores in parallel without affecting performance too much. The source clusters for all this are recent era all-flash arrays and servers in a mix of SAN and HCI solutions like StarWind or S2D.

Everything we mentioned above means they require backup storage capacity. But capacity alone won’t cut it. There is also the need for excellent performance in as few rack units as possible.

For SAN-based Hyper-V clusters, there is the request to keep leveraging Off-Host proxies (yes, they use Veeam Backup & Replication v10), while we also need to have a performant solution for HCI-based Hyper-V deployments.

We deploy the solution in a hardened, non-domain joined setup where the backup infrastructure has no dependencies on the fabrics and workloads it is protecting. On top of that, we secure it all with credential disclosure preventative measures, firewall rules, an internet proxy, and MFA. But those are subjects for other articles. Finally, there is also a need for long term retention and off-site, air-gapped backup copies. The 3-2-1 rule still applies, and having an air-gapped copy is the new normal in the era of ransomware and wipers.

The challenges

There are a couple of challenges that, combined, present an opportunity. For one, the need for multiple concurrent backup and restore jobs sounds like a match for NVMe storage or at least SSD. That comes with a price tag. On the other hand, we require capacity. HDDs can deliver capacity efficiently, but when you also need performance, there is a kink in the cable. We need large numbers of spindles to achieve even acceptable IOPS and latencies. With HDDs, this comes at a cost in power consumption, cooling, and rack space.

As it happens, in this situation the monthly price of a rack unit was significant enough to warrant spending money to reduce rack units, especially if that also brought other benefits. To give you an idea, going from 32 rack units to 6 rack units reduces hosting costs over five years so significantly that an all-flash design becomes economically feasible. Yes, their hosting is (too) expensive. On top of that, by going all-flash, we could give them stellar performance and capacity in only six rack units with room to expand if ever needed. Luckily, prices for NVMe and SSD are dropping. We combined that with smarts in negotiations and design to achieve a viable solution.

The solution

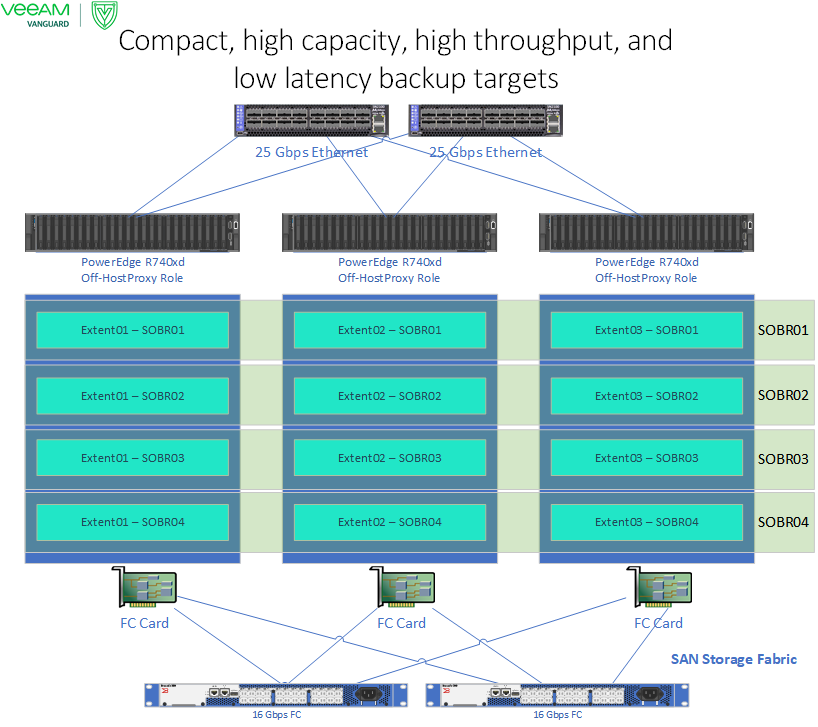

In the end, we built a backup target solution based on Veeam Scale-Out Backup Repositories (SOBR). SOBR rocks for scale-out backups in regards to flexibility and life cycle management of the backup repository. It also delivers “air-gapped” off-site copies via the capacity tier. For that, we leverage S3 compatible object storage that supports immutability via object lock.

The building blocks of a SOBR are extents. These are the actual storage units (a LUN, a file share) that live on a server. You can have multiple SOBR extents on the same server, but generally, you do not want to build a SOBR out of extents living solely on the same server. That defeats the scale-out purpose. But you can certainly start that way with a SOBR based on one server, with one or multiple extents. The reason for the latter, in that case, might be to work around LUN size limitations or provide yourself with some wiggle room and not consume all available storage in a single extent.

We used DELL R740XD servers to build all-flash backup target servers. For our needs, we chose the 24x 2.5″ (hot-swap) front bay configuration with 8 NVMe and 16 SSD slots.

With our initial designs, we used Dell H740P controllers. These can run in passthrough mode, which you set in the BIOS, and they support stand-alone Storage Spaces (non-S2D) use. That choice kept our options open. If mirror accelerated parity (MAP) didn’t work out, we could always create an NVMe pool and an SSD pool and leverage those with backup copy based tiering. If ReFS gave us trouble, we could revert to RAID mode and use NTFS, where deduplication could help with capacity efficiency. The drawback of that fallback is that, with the chosen front bay, the controller cannot use the 8 NVMe slots. Now that we know it works, we just use HBA330 controllers for Storage Spaces.

The physical disks we decided on are the 6TB Intel P4610 mixed-use NVMes and 15TB Read Intensive SSDs. The NVMes deliver the performance tier in Mirror Accelerated Parity volumes while the SSDs provide the capacity. Hence we don’t mind that they are read-intensive disks. Why not all SSD? Because the price difference between mixed workload SSDs and NVMes isn’t big enough, and NVMes have the edge over SSDs when it comes to concurrent IO. So we decided to go with both types and leverage mirror accelerated parity.

The R740s run Windows Server 2019 Standard LTSC. The OS boots from a RAID 1 BOSS (Boot Optimized Storage Solution) card. The actual backup targets live on mirror accelerated parity ReFS volumes (64K allocation unit size) that consist of NVMe and SSD disks.

ReFS begins rotating data out of the mirror tier once the mirror has reached a specified capacity threshold. By default, this is 85%. As backup targets ingest large amounts of data, we set the threshold to 75% and will adjust when needed. We’ll have to monitor that to find the sweet spot without destaging needlessly.
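As a rough sketch of how such a volume could be built on stand-alone Storage Spaces, the following uses the standard Storage module cmdlets. The pool, tier, and volume names, the drive letter, and the tier sizes are hypothetical placeholders, not the values used in this project. Retagging the capacity SSDs as HDD so that the two tiers land on different disks is an assumption about one possible approach, not something the design above prescribes.

```powershell
# Sketch only: all names, sizes, and the drive letter are hypothetical.
# Run elevated on a test system first; this consumes every poolable disk.
$poolName = 'BackupPool'

# Pool all disks that are eligible for pooling.
$disks = Get-PhysicalDisk -CanPool $true
New-StoragePool -FriendlyName $poolName `
    -StorageSubSystemFriendlyName 'Windows Storage*' `
    -PhysicalDisks $disks

# Assumption: NVMe and SSD disks may both report MediaType SSD, so the
# capacity SSDs are retagged as HDD to let the tiers tell them apart.
Get-StoragePool -FriendlyName $poolName |
    Get-PhysicalDisk |
    Where-Object { $_.BusType -ne 'NVMe' } |
    Set-PhysicalDisk -MediaType HDD

# Mirror tier on the NVMe disks, parity tier on the (retagged) SSDs.
New-StorageTier -StoragePoolFriendlyName $poolName -FriendlyName 'Performance' `
    -MediaType SSD -ResiliencySettingName Mirror
New-StorageTier -StoragePoolFriendlyName $poolName -FriendlyName 'Capacity' `
    -MediaType HDD -ResiliencySettingName Parity

# Mirror accelerated parity ReFS volume with a 64K allocation unit size.
New-Volume -StoragePoolFriendlyName $poolName -FriendlyName 'SOBR-Extent01' `
    -FileSystem ReFS -AllocationUnitSize 64KB -DriveLetter R `
    -StorageTierFriendlyNames 'Performance', 'Capacity' `
    -StorageTierSizes 4TB, 36TB
```

The split between the mirror and parity tier sizes is a design decision that depends on the daily ingest, so treat the numbers above purely as an illustration.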
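To make the effect of the threshold concrete, here is a small worked calculation. The 10TB mirror tier is a hypothetical figure chosen for easy arithmetic, and the helper function name is made up for this illustration.

```powershell
# Hypothetical helper: how much data the mirror tier holds before ReFS
# starts rotating data to the parity tier at a given threshold.
function Get-DestageStartTB {
    param(
        [double]$MirrorTierTB,     # size of the mirror (performance) tier
        [int]$ThresholdPercent     # fill ratio at which destaging begins
    )
    [math]::Round($MirrorTierTB * $ThresholdPercent / 100, 2)
}

Get-DestageStartTB -MirrorTierTB 10 -ThresholdPercent 85   # 8.5
Get-DestageStartTB -MirrorTierTB 10 -ThresholdPercent 75   # 7.5
```

Lowering the threshold from 85% to 75% makes destaging start one terabyte earlier on this example tier, which leaves more mirror space free to absorb the next burst of incoming backup data.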

Setting this threshold for ReFS in Windows Server 2019 (1809 and higher) is done via the registry.

Key: HKEY_LOCAL_MACHINE\System\CurrentControlSet\Policies

ValueName (DWORD): DataDestageSsdFillRatioThreshold

ValueType: Percentage

Set-ItemProperty -Path HKLM:\SYSTEM\CurrentControlSet\Policies -Name DataDestageSsdFillRatioThreshold -Value 75

We keep the capacity of the virtual disks under 50TB to ensure we mitigate any ReFS issues related to large volumes (Microsoft did already address these at the time of writing this article, but we are a bit wary). Still, with three servers, as an example, we built 4 SOBRs of 120TB (3*40TB extents) for a total of 360TB of backup storage in six rack units. That’s enough for the current needs. It also allows for enough free space to enable automatic rebuilds and replace disks easily. We also have spare slots for some growth. Not too shabby, not too shabby at all. If we need more capacity, well, we scale out with more nodes.

We also implemented some registry optimizations Microsoft recommends for backup targets using ReFS.

fsutil behavior set DisableDeleteNotify ReFS 1

New-ItemProperty -Path HKLM:\System\CurrentControlSet\Control\FileSystem -name RefsEnableLargeWorkingSetTrim -PropertyType DWord -Value 1 -Confirm:$false -Verbose

On top of that, we like the flexibility of more SOBRs and smaller extents for when we need to rearrange backups, do migrations, and upgrades. It gives us options to maneuver, which is something anyone who ever had to manage storage will appreciate, but is often lost on armchair managers and bean counters.
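After applying the ReFS-related settings discussed in this section, it can be useful to read them back on each repository server. The following is a minimal check, assuming it runs in an elevated PowerShell session. A value that has not been set simply returns nothing.

```powershell
# Read back the mirror accelerated parity destage threshold (a percentage).
Get-ItemProperty -Path 'HKLM:\SYSTEM\CurrentControlSet\Policies' `
    -Name DataDestageSsdFillRatioThreshold -ErrorAction SilentlyContinue |
    Select-Object DataDestageSsdFillRatioThreshold

# Read back the ReFS large working set trim setting.
Get-ItemProperty -Path 'HKLM:\SYSTEM\CurrentControlSet\Control\FileSystem' `
    -Name RefsEnableLargeWorkingSetTrim -ErrorAction SilentlyContinue |
    Select-Object RefsEnableLargeWorkingSetTrim

# Show the current delete notification (TRIM/unmap) setting per file system.
fsutil behavior query DisableDeleteNotify
```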

Redundant ReFS on Storage Spaces, combined with data integrity streams, provides auto-repair of bit rot. That is an excellent capability to have and is yet another option in your arsenal to guarantee your backups will be available for their actual purpose, restoring data when needed.
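As a small sketch, the integrity stream state can be inspected with the Storage module's `Get-FileIntegrity` cmdlet. The repository path below is a hypothetical placeholder, and whether you enable integrity streams for backup data is a trade-off you need to evaluate for your own environment.

```powershell
# Hypothetical repository folder on a ReFS volume.
$repoPath = 'R:\Backups'

# Show whether integrity streams are enabled on the folder itself.
Get-FileIntegrity -FileName $repoPath

# Spot-check a few backup files below that folder.
Get-ChildItem -Path $repoPath -Recurse -File |
    Select-Object -First 5 |
    ForEach-Object { Get-FileIntegrity -FileName $_.FullName }

# To enable integrity streams on the folder so that new files inherit it,
# uncomment the next line:
# Set-FileIntegrity -FileName $repoPath -Enable $true
```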

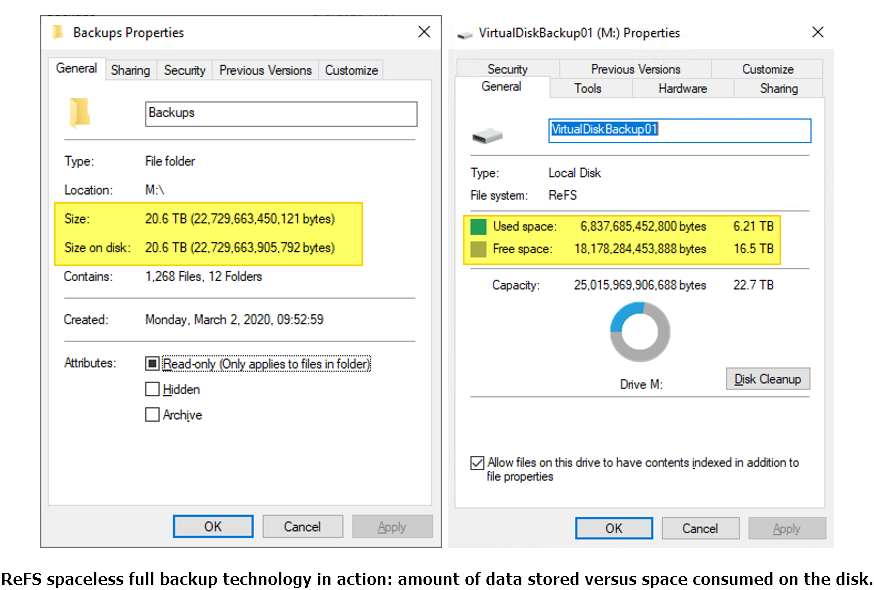

ReFS also delivers fast metadata-based operations (fast clone). That speeds up synthetic backup processes. It also provides space efficiency, which allows for better GFS retention of backups because less space is consumed. These space efficiencies are an often forgotten gem and cause some confusion when people first see them (see the image above). But it is a pleasant surprise.

ReFS also allows for fast restores without any overhead of rehydration that deduplication induces. What’s not to like, right?

It is worth noting that Veeam will compress the data as well before sending it over, so the actual space savings over the raw data to back up is even better.

The servers have plenty of memory (256GB), dual-socket, 12-14 core CPUs, as well as a dual-port Mellanox 25Gbps (or better) RDMA-capable Ethernet card and a dual-port Emulex 16Gbps Fibre Channel card. The CPU core count is something you need to balance based on the number of servers you will have for your SOBR.

Let’s go over this quickly. When you want to do concurrent backups at scale, you need memory and cores. That’s a given, we all know this. The Mellanox 25Gbps (or better) cards provide us with connectivity to dedicated backup networks, which are also the ones Hyper-V (clusters) use for SMB traffic. We set both (SMB) networks (dedicated subnet / VLAN) as the preferred networks in Veeam Backup & Replication. That means these networks serve CSV, live migration, HCI storage, as well as backup traffic. By the way, I do leverage jumbo frames on the SMB networks. That works well, but you have to make sure you implement it consistently, end to end.
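One way to check that jumbo frames really work end to end is to inspect the adapter setting and then send a large packet that is not allowed to fragment. The adapter name and target IP address below are hypothetical, and the payload size assumes a 9000-byte MTU on the path.

```powershell
# Hypothetical adapter name and repository IP on the SMB/backup network.
$adapterName = 'SMB1'
$targetIp    = '10.10.10.20'

# Show the jumbo packet setting the NIC driver currently uses.
Get-NetAdapterAdvancedProperty -Name $adapterName -RegistryKeyword '*JumboPacket' |
    Select-Object Name, DisplayName, DisplayValue, RegistryValue

# 8972 bytes of payload + 8 bytes ICMP header + 20 bytes IPv4 header
# = 9000 bytes. -f forbids fragmentation, so the ping only succeeds when
# every hop between source and target accepts the large frame.
ping.exe -f -l 8972 -n 4 $targetIp
```

If the ping reports that the packet needs to be fragmented, some NIC, vSwitch, or physical switch port along the way is still at a smaller MTU.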

For a tip on making sure you optimize the Veeam Backup & Replication server with preferred backup networks, see my blog Optimize the Veeam preferred networks backup initialization speed.

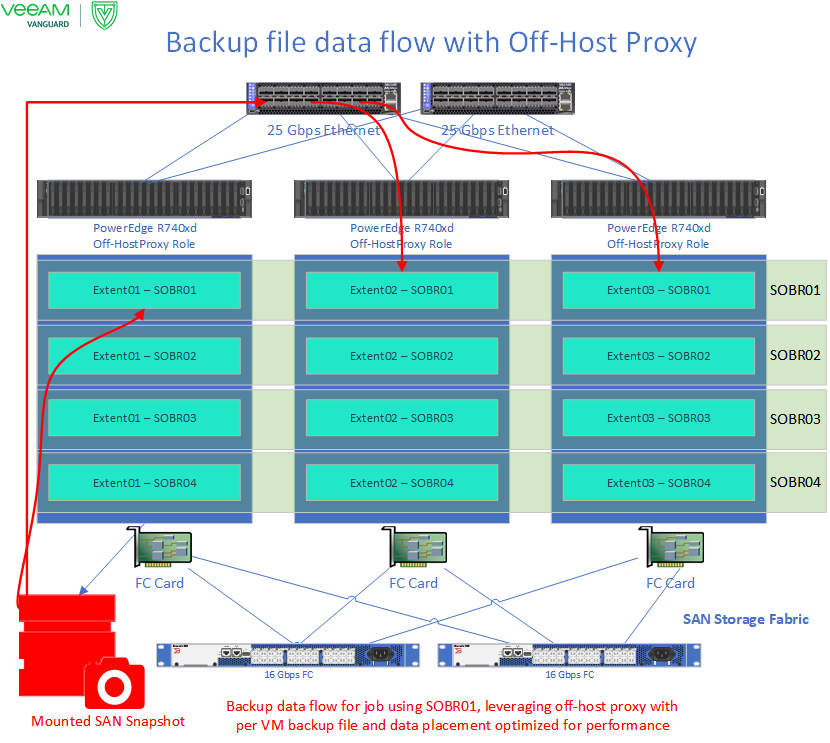

The FC cards help us with the request to leverage Off-Host proxies for use with some of the SAN-based deployments. We combine the Off-Host proxy roles with the backup repository roles, which means that any server that hosts backup repositories (extents) is an Off-Host proxy as well.

Having 16Gbps Fibre Channel cards and 25Gbps NICs means we have both S2D and Shared Storage covered for on-host proxy backups with ample bandwidth.

This way, we can leverage the off-host proxy and limit the backup traffic to only the backup repository servers holding the SOBR extents. Veeam reads the source data from the SOBR servers (off-host proxies), where the SAN snapshot is mounted. It then writes that data to the extents in the SOBR.

There are now two options for backup data flows. With the first option, no backup data goes over the network while writing to the target. This data flow occurs when the off-host proxy is the same as the backup repository server that also hosts the extent.

The second data flow occurs when the extents live on another server. In this case, Veeam writes the backup data to those extents over the 25Gbps network. That is what will happen when you are optimizing the SOBR backup files placement for performance and use per VM backup files. Note that we also leverage the “Perform full backup when the required extent is offline” option just in case an extent is offline. See Backup File Placement for more information.

When leveraging off-host proxies with this setup, data is never copied directly from the source servers to the SOBR extents. The SMB networks in SAN-based Hyper-V clusters remain dedicated to their roles and don’t have to handle backup traffic. The heavy lifting is done by the iSCSI or FC fabric and between the SOBR extents. Still, if Off-Host proxy backups fail or they want to abandon them in the future, they have the 25Gbps NICs right there to fail over to or use by default. All scenarios are covered.

Now with the SANs in this project, I could not mount a transportable snapshot to more than one off-host proxy. The hardware VSS software did not allow for this. Veeam, on the other hand, does. It would try and mount the snapshot on the off-host proxy hosting the extent where it wanted to write a particular VM’s backup data. But, as said, the SAN did not allow for that. To make this work, we set the off-host proxy to one fixed choice per backup job. To make sure we leverage them all, we alternate the backup jobs with respect to which off-host proxy they leverage.
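The alternating assignment can be planned as a simple round-robin. The sketch below only builds the plan; it deliberately does not call any Veeam cmdlets, and the job and proxy names are hypothetical. Applying the plan to the actual jobs is left to the Veeam console or your own tooling.

```powershell
# Hypothetical off-host proxies (the SOBR repository servers) and jobs.
$proxies = @('SOBR-NODE1', 'SOBR-NODE2', 'SOBR-NODE3')
$jobs    = @('HV-SAN-Job01', 'HV-SAN-Job02', 'HV-SAN-Job03',
             'HV-SAN-Job04', 'HV-SAN-Job05')

# Assign one fixed proxy per job, cycling through the proxies in order.
$plan = for ($i = 0; $i -lt $jobs.Count; $i++) {
    [pscustomobject]@{
        Job          = $jobs[$i]
        OffHostProxy = $proxies[$i % $proxies.Count]
    }
}

$plan | Format-Table -AutoSize
```

With three proxies, the fourth job wraps around to the first proxy again, so the load stays evenly spread as jobs are added.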

You can also opt to use dedicated off-host proxy nodes. That is also a valid solution, but that adds extra servers (costs) to the solution. As our servers are powerful enough to handle both roles, we went with that. It also adds the benefits that the off-host proxies scale right along with the number of backup repository servers you might add to scale the solution.

Tip from the field

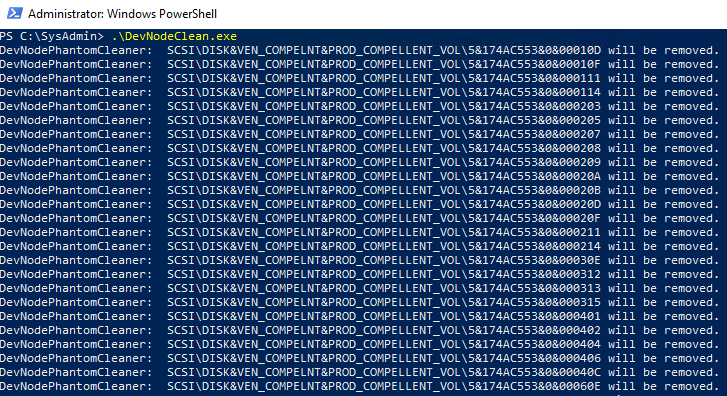

Here’s a tip when you leverage off-host proxies with Hyper-V. Due to the constant mounting and unmounting of the snapshots, your system’s registry might (will) fill up with many phantom volumes. Thousands of them over time. There once was a bug in Windows Server 2008 (R2) caused by this and aggravated by Hyper-V backups, which led to extremely long boot times or, ultimately, failure to boot due to a ridiculously large registry file. It is the software that creates the registry entries that has to clean them up. The Windows OS does not do that for third-party software, and many hardware VSS providers do not do it either. To avoid possible issues, I tend to have a scheduled task to clean them out preemptively. Veeam added this registry cleaning to VBR 9.5 Update 4, but it only works for Veeam-controlled storage integrations. With Hyper-V, you leverage the SAN’s hardware VSS provider, so you have to take care of this yourself. I do this via devnodeclean.exe. See my blog post Use cases for devnodeclean.exe for more information on all this as well as a demo script to automate it.

Just add it to a scheduled task that you run regularly with elevated permissions to prevent registry bloat. It works with Windows Server 2016/2019 in case you are wondering.
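A minimal sketch of such a scheduled task, using the built-in ScheduledTasks cmdlets, could look like this. The executable path, task name, and schedule are hypothetical. Command-line switches for devnodeclean.exe are intentionally left out, so check the tool's own help for the options you need before relying on it.

```powershell
# Hypothetical location of the tool; adjust to where you placed it.
$exePath = 'C:\Tools\DevNodeClean\devnodeclean.exe'

# Run the tool as-is; add -Argument once you have verified its switches.
$action = New-ScheduledTaskAction -Execute $exePath

# Hypothetical schedule: once a week, outside the backup window.
$trigger = New-ScheduledTaskTrigger -Weekly -DaysOfWeek Sunday -At '06:00'

# Run as SYSTEM with the highest privileges (elevated).
$principal = New-ScheduledTaskPrincipal -UserId 'SYSTEM' `
    -LogonType ServiceAccount -RunLevel Highest

Register-ScheduledTask -TaskName 'Clean phantom volume registry entries' `
    -Action $action -Trigger $trigger -Principal $principal
```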

Does this work?

Yes, it does. Mirror Accelerated Parity is doing its job and works fine for this use case with stand-alone storage spaces. Just do not make the mistake of building too small a performance tier in front of a big HDD-based capacity tier, and you’ll be fine. With all-flash, you can opt to forgo the performance tier. We have chosen to use it here.

The off-host proxy role on a stand-alone storage spaces server is not causing any problems, bar the usual but infrequent phantom snapshot we need to clean up on the SANs. But with 25Gbps or better becoming more prevalent and HCI making progress, this part might be retired over time anyway.

The only known issues (in the past) were ReFS related. Under stress from many concurrent jobs and large ReFS volumes, ReFS would slow down to a trickle. See Windows 2019, large REFS, and deletes. We expect the latest updates delivered the final fixes. For us, ReFS is working well. We have good hopes for the vNext of the LTSC, where the ReFS improvements in 1909 (and later) will be incorporated.

Meanwhile, to be on the safe side, we keep our ReFS sizes down to a reasonable size. We can live with that, and we accomplished our mission. ReFS right now delivers for us. We have all we want, space efficiency, high capacity, bit rot protection, high throughput. For shorter-term retention times, we find adding deduplication to ReFS not very useful. The real long retention goes into the capacity tier of our SOBR anyway.

Another option you have is to use Windows Server 1909 Semi-Annual Channel (SAC) or a later release for the repositories. These versions have improved ReFS bits that enhance performance, but these will not be 100% backported to 1809. The drawback of using SAC is a support lifecycle of 18 months, which is shorter than with LTSC and means you must be able to upgrade faster. That is not a given in all environments, especially since SAC releases are “Core” only, but PowerShell and automation are your friends. Having the approval, space, and hardware to do the actual rebuilds is often the biggest challenge.

By the way, if you don’t like ReFS, Veeam now also supports Linux backup targets, which, combined with XFS, deliver capabilities similar to those of ReFS. It is good to have options, and I hope some competition here motivates Microsoft to keep improving its ReFS offerings.

Conclusion

When it comes to building high capacity, low latency, and high throughput backup targets, we are in a good situation today by leveraging flash storage. The only drawback here is that it can seem expensive. However, when considering all factors, including the cost of hosting (rack units, power consumption, cooling), that is (partially) mitigated. Add to that the benefits it offers, such as more concurrent backup tasks at high speed, the ability to do fast restores and recovery, and you might very well have found yourself a business case. Prices for SSDs keep going down, and their capacities outgrow those of HDDs. Take a look at StarWind Goes Rogue: HCA All-Flash Only for more on that subject. This evolution will also trickle down more and more into backup targets. To me, this means that the benefits are so good that we’ll see more flash-based backup solutions in the future, augmented by object-based storage for long-term and off-site retention. You can even add deduplication with ReFS now in Windows Server 2019, but we have not needed it so far.

The commodity hardware available today, combined with modern operating systems, allows us to build backup targets that are tailored to our requirements. You can do this with the skills you already have in-house. Even better, you can do it without paying boutique-like prices and without needing niche skillsets for proprietary hardware that limits you to what the vendor decides to offer. That, people, is the power of Veeam, which has always given us choices and options when it comes to backup targets. Once more, it allowed us to deliver an excellent, purposely designed solution.

Every design has benefits and drawbacks to consider. The flexibility of Veeam Backup & Replication allows us to come up with the best possible answer every time. I like that.