VM hardware resources

Each virtual machine is a collection of resources provided by the infrastructure layer. These resources are usually organized in a pool and assigned dynamically (or in some cases statically) to each VM.

Each VM “sees” a subset of the physical resources in the form of virtual hardware components, usually defined by the following minimum elements:

- Hardware platform type (x86 for 32-bit VMs or x64 for 64-bit VMs)

- Virtual hardware type (depending on the virtualization layer)

- Virtual CPU (possibly split into virtual sockets and virtual cores)

- Virtual RAM (and possibly also persistent RAM)

- Virtual disk connected to a virtual controller

- Virtual NIC

There can also be additional hardware components. Some are not mandatory but are useful in specific use cases. Others are needed for basic operations: for example, installing the guest OS requires a video card, a keyboard and a mouse in order to use the remote console.

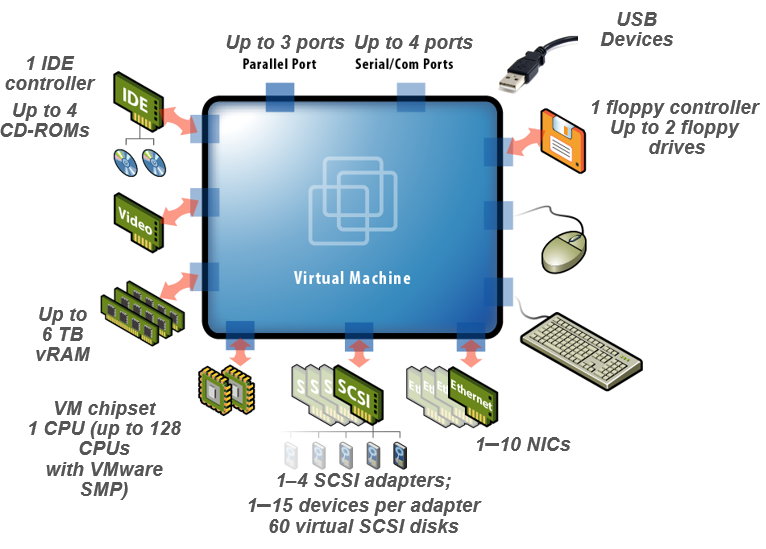

On VMware vSphere it’s possible to add many types of hardware devices to a VM.

These include PCI devices, other SCSI devices, virtual FC ports, and several other types of storage devices (such as NVMe and SATA based ones).

Recently, virtual GPUs have also become more popular for some server workloads. Certain computational tasks are better performed on a general-purpose GPU than on a general-purpose CPU. Note that vSphere 6.7 U1 adds the ability to vMotion VMs with virtual GPUs.

VM hardware resources hot changes

But which kinds of resources can be modified on a running VM?

This really depends on the hypervisor and its version (and sometimes also on its edition), but there are many possibilities.

The following table summarizes the different operations on a running VM with a “recent” guest OS:

| Type of resource | Hot-increasing the resource | Hot-decreasing the resource |
| --- | --- | --- |
| CPU (socket) | Could be possible | Could be possible |
| CPU (core) | Not possible due to OS limitations | Not possible due to OS limitations |
| RAM | Could be possible | Could be possible but usually not implemented |
| Disk (device) | Could be possible (not on IDE devices) | Could be possible (not on IDE devices) |
| Disk (space) | Could be possible (not on IDE devices) | Could be possible (not on IDE devices) but usually not implemented |
| Storage controller | Could be possible | Could be possible but usually not implemented |
| Network controller | Could be possible | Could be possible |
| PCI device | Could be possible | Could be possible but usually not implemented |
| USB device | Could be possible | Could be possible |
| Serial or parallel device | Not possible | Not possible |
| General-purpose GPU | Could be possible | Could be possible but usually not implemented |
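If you automate VM changes, it can help to encode the table above as data and check it before attempting an operation. The snippet below is a minimal sketch under that assumption. The `HOT_CHANGES` dictionary and `hot_change` function are hypothetical names, and the verdict strings are shortened paraphrases of the table cells, not values returned by any vSphere API.

```python
# Hypothetical encoding of the hot-change summary table (verdicts paraphrased).
HOT_CHANGES = {
    # resource: (hot-increase verdict, hot-decrease verdict)
    "CPU (socket)": ("could be possible", "could be possible"),
    "CPU (core)": ("not possible", "not possible"),
    "RAM": ("could be possible", "usually not implemented"),
    "Disk (device)": ("could be possible", "could be possible"),
    "Disk (space)": ("could be possible", "usually not implemented"),
    "Storage controller": ("could be possible", "usually not implemented"),
    "Network controller": ("could be possible", "could be possible"),
    "PCI device": ("could be possible", "usually not implemented"),
    "USB device": ("could be possible", "could be possible"),
    "Serial or parallel device": ("not possible", "not possible"),
    "General-purpose GPU": ("could be possible", "usually not implemented"),
}

# Disk operations are never possible hot on IDE devices, per the table.
IDE_SENSITIVE = {"Disk (device)", "Disk (space)"}


def hot_change(resource: str, increase: bool, controller: str = "") -> str:
    """Return the table's verdict for changing `resource` on a running VM."""
    up, down = HOT_CHANGES[resource]
    if resource in IDE_SENSITIVE and controller.upper() == "IDE":
        return "not possible"
    return up if increase else down
```

For example, `hot_change("Disk (space)", increase=True, controller="IDE")` returns `"not possible"`, matching the IDE exception in the table.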

Now let’s look more closely at some of these resources, with tips and notes about changing them on a running VM.

To make the examples clearer and more specific, we will consider a VM running on vSphere 6.7 Update 1.

Virtual CPU hot-add and hot-remove

By default, you cannot add CPU resources to a virtual machine when the virtual machine is turned on. The CPU hot add option lets you add CPU resources to a running virtual machine.

There are some requirements for enabling the CPU hot add option. Verify that the VM is configured as follows:

- Virtual machine is turned off (the option can only be changed while the VM is powered off).

- Virtual machine compatibility is ESX/ESXi 4.x or later.

- Latest version of VMware Tools installed.

- Guest operating system that supports CPU hot plug (basically a “recent” OS; for Windows, Windows Server 2008 or Windows Vista and later).
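The checklist above can be turned into a small pre-flight check. This is a minimal sketch under stated assumptions. `VmState` and `cpu_hot_add_blockers` are hypothetical names, and the fields simply mirror the four bullets. Compatibility is reduced to the ESX/ESXi major version (4 for "ESX/ESXi 4.x"), and filling the fields from a real inventory is left out.

```python
from dataclasses import dataclass


@dataclass
class VmState:
    # Hypothetical model of the facts the checklist asks you to verify.
    powered_on: bool
    compat_esxi_major: int          # 4 for "ESX/ESXi 4.x", 6 for "ESXi 6.x", ...
    tools_up_to_date: bool
    guest_supports_cpu_hot_plug: bool


def cpu_hot_add_blockers(vm: VmState) -> list:
    """List the unmet prerequisites for enabling CPU hot add (empty list = ready)."""
    blockers = []
    if vm.powered_on:
        blockers.append("power off the VM before changing the setting")
    if vm.compat_esxi_major < 4:
        blockers.append("upgrade VM compatibility to ESX/ESXi 4.x or later")
    if not vm.tools_up_to_date:
        blockers.append("install the latest VMware Tools")
    if not vm.guest_supports_cpu_hot_plug:
        blockers.append("guest OS does not support CPU hot plug")
    return blockers
```

A running VM that meets every other requirement yields a single blocker: power it off first.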

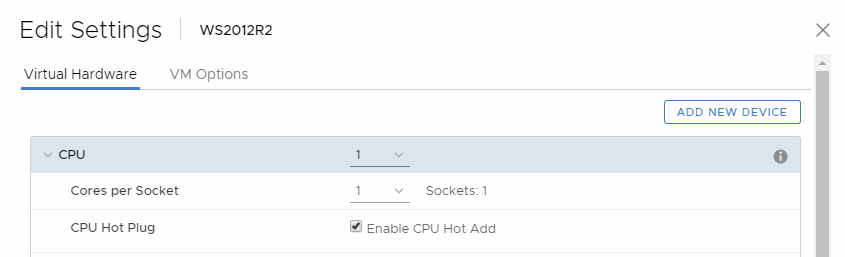

To enable the CPU hot add option:

- Right-click a virtual machine in the inventory and select Edit Settings.

- On the Virtual Hardware tab, expand CPU, and select Enable CPU Hot Add.

- Click OK.
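The Enable CPU Hot Add checkbox corresponds to a few other representations:

- the `cpuHotAddEnabled` property of the vSphere API `VirtualMachineConfigSpec`;
- the `memoryHotAddEnabled` property, for the memory option in the RAM section of this article;
- the `vcpu.hotadd` / `mem.hotadd` entries in the VM's .vmx file.

The sketch below only builds those values as plain Python data; the function name `hot_plug_settings` is hypothetical. Applying them is out of scope. One option is pyVmomi's `vm.ReconfigVM_Task(spec=vim.vm.ConfigSpec(cpuHotAddEnabled=True))` on a powered-off VM, which assumes you already hold a connected `vm` object.

```python
def hot_plug_settings(cpu: bool, memory: bool) -> dict:
    """Express the hot-plug checkboxes as API ConfigSpec fields and as .vmx lines."""
    # Keys mirror the vSphere API VirtualMachineConfigSpec property names.
    api_spec = {"cpuHotAddEnabled": cpu, "memoryHotAddEnabled": memory}
    # .vmx options take quoted "TRUE"/"FALSE" values.
    vmx_lines = [
        f'vcpu.hotadd = "{str(cpu).upper()}"',
        f'mem.hotadd = "{str(memory).upper()}"',
    ]
    return {"api_spec": api_spec, "vmx": vmx_lines}
```

Whichever path you use, the VM must be powered off when the setting is changed, as listed in the requirements.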

Now you can hot-add one or more new sockets to a running VM. Note that you can only hot-add sockets with the same number of cores as the existing sockets: the number of cores per socket cannot be changed on a running VM.

On Windows Server Datacenter edition it’s also possible to hot-remove sockets. With other OSes you need to shut down the VM to remove them.
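Because cores per socket are fixed while the VM runs, any hot change in vCPUs has to be a whole number of sockets. The following minimal sketch checks a requested vCPU total against that rule and converts it into sockets to add or remove. `plan_cpu_hot_change` and the `guest_supports_hot_remove` flag are hypothetical. The flag stands for the guest-dependent hot-remove support described above, for example Windows Server Datacenter edition.

```python
def plan_cpu_hot_change(current_vcpus: int, cores_per_socket: int, new_vcpus: int,
                        guest_supports_hot_remove: bool = False) -> int:
    """Return sockets to add (positive) or remove (negative) on a running VM.

    Raises ValueError when the change cannot be done while the VM is running.
    """
    if current_vcpus % cores_per_socket or new_vcpus % cores_per_socket:
        # Cores per socket cannot change hot, so totals must be whole sockets.
        raise ValueError("vCPU count must be a multiple of cores per socket")
    delta_sockets = (new_vcpus - current_vcpus) // cores_per_socket
    if delta_sockets < 0 and not guest_supports_hot_remove:
        raise ValueError("guest cannot hot-remove sockets: shut down the VM first")
    return delta_sockets
```

For example, going from 4 to 8 vCPUs with 2 cores per socket means hot-adding 2 sockets. Asking for 5 vCPUs is rejected because it would need a partial socket.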

This feature is available only with the Standard and Enterprise Plus editions. It is still one of the distinctive features of vSphere compared to other hypervisors: Microsoft Hyper-V, for example, does not implement it, while Nutanix AHV already does.

Some notes and suggestions for managing CPU hot-add (and hot-remove):

- For best results, use virtual machines that are compatible with ESXi 5.0 or later.

- Hot-adding multi-core virtual CPUs is supported only with virtual machines that are compatible with ESXi 5.0 or later.

- To use the CPU hot plug feature with virtual machines that are compatible with ESXi 4.x and later, set the Number of cores per socket to 1.

- Virtual NUMA works correctly with a hot-added socket only with vSphere 6.x.

- Not all guest operating systems support CPU hot add. You can disable these settings if the guest is not supported. See also VMware KB 2020993 (CPU Hot Plug Enable and Disable options are grayed out for virtual machines).

- You may see some minor issues when hot-adding a CPU to a VM with a single CPU. For example, on Windows the Task Manager does not show the result immediately; just close it and open it again.

- Test the procedure on the VM before putting it into production. Some Windows Server 2016 builds may stop with a BSOD during a CPU hot-add (see https://vinfrastructure.it/2018/05/windows-server-2016-reboot-after-hot-adding-cpu-in-vsphere-6-5/; the same can also happen on vSphere 6.7).

- Adding CPU resources to a running virtual machine with CPU hot plug enabled disconnects and reconnects all USB passthrough devices that are connected to that virtual machine.

- Hot-adding virtual CPUs to a virtual machine with NVIDIA vGPU requires that the ESXi host have a free vGPU slot.
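Several of the notes above are mechanical enough to check before a hot-add. The minimal sketch below returns warnings for three of them:

- multi-core hot-add needing ESXi 5.0+ compatibility (with 4.x compatibility, use 1 core per socket);
- the USB passthrough disconnect/reconnect;
- the free vGPU slot requirement.

The function name and its parameters are hypothetical. Gathering these facts from your environment is assumed to happen elsewhere.

```python
def cpu_hot_add_warnings(compat_esxi_major: int, cores_per_socket: int,
                         has_usb_passthrough: bool, has_nvidia_vgpu: bool,
                         host_free_vgpu_slots: int) -> list:
    """Return warnings derived from the CPU hot-add notes (empty list = none)."""
    warnings = []
    if cores_per_socket > 1 and compat_esxi_major < 5:
        warnings.append("multi-core hot-add needs ESXi 5.0+ compatibility; "
                        "with 4.x compatibility set cores per socket to 1")
    if has_usb_passthrough:
        warnings.append("USB passthrough devices will be disconnected and reconnected")
    if has_nvidia_vgpu and host_free_vgpu_slots < 1:
        warnings.append("NVIDIA vGPU VM: the ESXi host has no free vGPU slot")
    return warnings
```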

Virtual RAM hot-plug

Memory hot add lets you add memory resources for a virtual machine while that virtual machine is turned on.

There are some requirements for enabling the RAM hot plug option. Verify that the VM is configured as follows:

- Virtual machine is turned off (the option can only be changed while the VM is powered off).

- Virtual machine compatibility is ESX/ESXi 4.x or later.

- Latest version of VMware Tools installed.

- Guest operating system that supports memory hot plug (basically a “recent” OS; for Windows, Windows Server 2008 or Windows Vista and later, although some editions of Windows Server 2003 are also supported).

- For a more detailed Windows OS support table, see this blog post: https://www.petenetlive.com/KB/Article/0000527

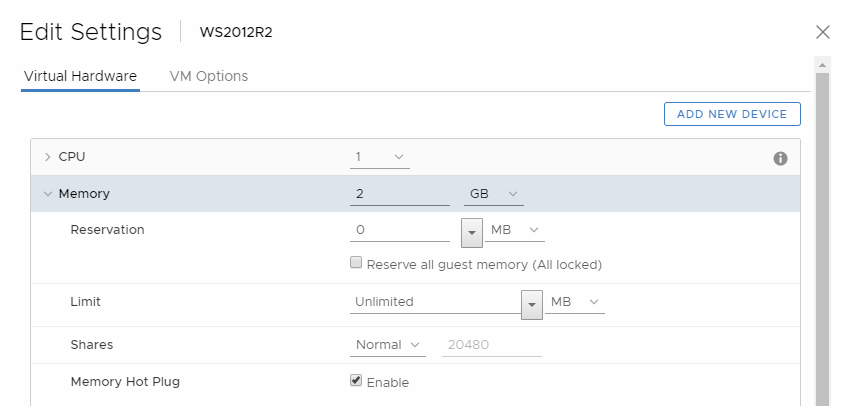

To enable the RAM hot plug option:

- Right-click a virtual machine in the inventory and select Edit Settings.

- On the Virtual Hardware tab, expand Memory, and select Enable to enable adding memory to the virtual machine while it is turned on.

- Click OK.

This feature is available only with the Standard and Enterprise Plus editions and only allows adding RAM to a running VM, not removing it. Note that this feature is now also implemented in other hypervisors. For example, Microsoft Hyper-V 2016 and later can both add and remove RAM.
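On vSphere, then, a memory change on a running VM is valid only if hot add is enabled and the new size is not smaller than the current one. The following minimal sketch encodes exactly that rule. `validate_memory_hot_change` is a hypothetical helper, and sizes are assumed to be in MB.

```python
def validate_memory_hot_change(current_mb: int, new_mb: int, hot_add_enabled: bool) -> int:
    """Return the MB to hot-add; vSphere only allows increases on a running VM."""
    if not hot_add_enabled:
        raise ValueError("memory hot add is disabled: power off the VM to change RAM")
    if new_mb < current_mb:
        raise ValueError("vSphere cannot hot-remove RAM: power off the VM to reduce it")
    return new_mb - current_mb
```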

Some notes and suggestions for managing RAM hot-add:

- For best results, use virtual machines that are compatible with ESXi 5.0 or later.

- Enabling memory hot add produces some memory overhead on the ESXi host for the virtual machine.

- Not all guest operating systems support RAM hot add. You can disable these settings if the guest is not supported.

- Hot-adding memory to a virtual machine with NVIDIA vGPU requires that the ESXi host have a free vGPU slot.

- Virtual NUMA works correctly with hot-added memory only with vSphere 6.x.

- Test the procedure on the VM before putting it into production. I haven’t noticed any major issues (unlike with CPU hot-add), but always test new features in a non-critical environment.

Virtual disks

When you create a virtual machine, a default virtual hard disk is added. You can add another hard disk if you run out of disk space, if you want to add a boot disk, or for other file management purposes.

In VMware vSphere you can hot-add and hot-remove a virtual disk, and also hot-add space to existing virtual disks. RDM disks can have some limitations, but this article does not cover them.

Most of these operations are also available in other hypervisors. On ESXi they have been available since version 3.x, even in the free edition!

In vSphere, disk space can only be added to existing disks. The shrink option for reducing a virtual disk’s size (available, for example, on Microsoft Hyper-V) is not available on vSphere.

Note that all the hot-add features are available only when virtual disks are connected to a SCSI, SAS or SATA controller. On IDE controllers these features do not work due to IDE/PATA limitations.
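The two rules above can be combined into one pre-check: disk space can only grow, and only on a SCSI, SAS or SATA controller. This is a minimal sketch. `check_disk_hot_change` and the controller label strings are hypothetical and follow the wording of this article rather than any API enumeration. Sizes are assumed to be in GB.

```python
# Controller families on which this article says hot-add features work.
HOT_PLUG_CONTROLLERS = {"SCSI", "SAS", "SATA"}


def check_disk_hot_change(controller: str, current_gb: int, new_gb: int) -> int:
    """Return the GB to add to a disk on a running VM, or raise if vSphere cannot do it hot."""
    if controller.upper() not in HOT_PLUG_CONTROLLERS:
        raise ValueError(f"{controller}: hot changes need a SCSI, SAS or SATA controller")
    if new_gb < current_gb:
        raise ValueError("vSphere cannot shrink a virtual disk")
    return new_gb - current_gb
```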

The maximum number of disks depends on the controller type. Currently the limits are:

- Max 4 virtual disks on IDE controllers

- Max 60 virtual disks on SCSI/SAS controllers (up to 4 controllers)

- Max 60 virtual disks on NVMe controllers (up to 4 controllers)

- Max 120 virtual disks and/or CD-ROM devices on SATA controllers (up to 4 controllers)

- Max 256 virtual disks on PVSCSI controllers (up to 4 controllers), new in vSphere 6.7
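These per-VM totals come from up to 4 controllers multiplied by the devices each controller accepts. The per-controller figures used below (15 for SCSI/SAS and NVMe, 30 for SATA, 64 for PVSCSI) are the commonly documented values. Double-check them against the vSphere 6.7 Configuration Maximums for your build. IDE is kept only as a per-VM total. The helpers are hypothetical planning aids, not vSphere API calls.

```python
# Per-VM totals as listed in this article (PVSCSI value requires vSphere 6.7).
MAX_DISKS_PER_VM = {"IDE": 4, "SCSI/SAS": 60, "NVMe": 60, "SATA": 120, "PVSCSI": 256}

# Assumed devices per controller; up to 4 controllers of each of these types.
DISKS_PER_CONTROLLER = {"SCSI/SAS": 15, "NVMe": 15, "SATA": 30, "PVSCSI": 64}
MAX_CONTROLLERS = 4


def remaining_disk_slots(controller_type: str, attached: int) -> int:
    """How many more disks of this controller type the VM can still take."""
    return max(MAX_DISKS_PER_VM[controller_type] - attached, 0)


def controllers_needed(controller_type: str, disks: int) -> int:
    """Minimum number of controllers of this type needed to attach `disks` disks."""
    per_ctrl = DISKS_PER_CONTROLLER[controller_type]
    needed = -(-disks // per_ctrl)  # ceiling division
    if needed > MAX_CONTROLLERS:
        raise ValueError(f"{disks} disks exceed the {controller_type} limit")
    return needed


# Sanity check: each per-VM total equals 4 controllers x devices per controller.
for kind, per_ctrl in DISKS_PER_CONTROLLER.items():
    assert MAX_CONTROLLERS * per_ctrl == MAX_DISKS_PER_VM[kind]
```

For example, 31 SATA disks need 2 SATA controllers. 61 disks cannot fit on SCSI/SAS controllers alone.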

The maximum value for large capacity hard disks is 62 TB. When you add or configure virtual disks, always leave a small amount of overhead. Some virtual machine tasks can quickly consume large amounts of disk space, which can prevent successful completion of the task if the maximum disk space is assigned to the disk. Such events might include taking snapshots or using linked clones. These operations cannot finish when the maximum amount of disk space is allocated. Also, operations such as snapshot quiesce, cloning, Storage vMotion, or vMotion in environments without shared storage, can take significantly longer to finish.

Virtual machines with large capacity virtual hard disks, or disks greater than 2 TB, must meet resource and configuration requirements for optimal virtual machine performance.

Historically, these “jumbo” disks have had some limitations in vSphere (for more information see https://vinfrastructure.it/2017/02/jumbo-disk-vmware-esxi/), but starting with vSphere 6.5 most limitations are gone.

VMs with large capacity disks have the following conditions and limitations:

- The guest operating system must support large capacity virtual hard disks and must use a GPT partition table (MBR partition tables are limited to 2 TB).

- You can move or clone disks that are greater than 2 TB to ESXi 6.0 or later hosts or to clusters that have such hosts available.

- You can hot-add space to a disk that is greater than 2 TB only from vSphere 6.5 and later.

- Fault Tolerance is not supported.

- BusLogic Parallel controllers are not supported.

- The datastore format must be one of the following:

  - VMFS5 or later

  - An NFS volume on a Network Attached Storage (NAS) server

  - vSAN

- Virtual Flash Read Cache supports a maximum hard disk size of 16 TB.
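The conditions in the list above can be collected into a single validation step before creating or extending a large disk. The sketch below is minimal and rests on a few assumptions:

- `DiskPlan` and `large_disk_problems` are hypothetical names;
- the datastore and controller labels are free-text strings chosen for this example;
- sizes are in TB;
- vSphere versions are `(major, minor)` tuples;
- moving or cloning to ESXi 6.0+ hosts is not modeled.

```python
from dataclasses import dataclass

# "VMFS5 or later", NFS or vSAN (labels are illustrative strings).
SUPPORTED_DATASTORES = {"VMFS5", "VMFS6", "NFS", "vSAN"}


@dataclass
class DiskPlan:
    new_size_tb: float
    vsphere_version: tuple          # e.g. (6, 7)
    partition_table: str            # "GPT" or "MBR"
    datastore: str                  # e.g. "VMFS6", "NFS", "vSAN"
    controller: str                 # e.g. "PVSCSI", "BusLogic"
    fault_tolerance: bool = False
    uses_vflash_read_cache: bool = False
    hot_extend: bool = True         # extending while the VM is running


def large_disk_problems(plan: DiskPlan) -> list:
    """Return the large-disk conditions this plan violates (empty list = OK)."""
    problems = []
    if plan.new_size_tb > 62:
        problems.append("exceeds the 62 TB maximum")
    if plan.new_size_tb > 2:
        if plan.partition_table.upper() != "GPT":
            problems.append("guest must use GPT (MBR is limited to 2 TB)")
        if plan.hot_extend and plan.vsphere_version < (6, 5):
            problems.append("hot-adding space beyond 2 TB needs vSphere 6.5 or later")
        if plan.fault_tolerance:
            problems.append("Fault Tolerance is not supported")
        if plan.controller == "BusLogic":
            problems.append("BusLogic Parallel controllers are not supported")
        if plan.datastore not in SUPPORTED_DATASTORES:
            problems.append(f"datastore {plan.datastore} is not VMFS5+, NFS or vSAN")
    if plan.uses_vflash_read_cache and plan.new_size_tb > 16:
        problems.append("Virtual Flash Read Cache supports at most 16 TB")
    return problems
```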

GPU

If an ESXi host has an NVIDIA GRID GPU graphics device, you can configure a virtual machine to use the NVIDIA GRID virtual GPU (vGPU) technology.

NVIDIA GRID GPU graphics devices are designed to optimize complex graphics operations and enable them to run at high performance without overloading the CPU. NVIDIA GRID vGPU provides unparalleled graphics performance, cost-effectiveness and scalability by sharing a single physical GPU among multiple virtual machines as separate vGPU-enabled passthrough devices.

There are some requirements for supporting a virtual GPU:

- Verify that an NVIDIA GRID GPU graphics device with an appropriate driver is installed on the host. See the vSphere Upgrade documentation.

- Verify that the virtual machine is compatible with ESXi 6.0 and later.

To add the GPU:

- Right-click a virtual machine in the inventory and select Edit Settings.

- On the Virtual Hardware tab, select Shared PCI Device from the New device drop-down menu.

- Click Add.

- Expand the New PCI device, and select the NVIDIA GRID vGPU passthrough device to which to connect your virtual machine.

- Select a GPU profile (a GPU profile represents the vGPU type).

- Click Reserve all memory.

- Click OK.
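Before clicking OK, you can sanity-check a planned vGPU configuration against the requirements and steps above:

- the host has an NVIDIA GRID GPU with its driver installed;
- VM compatibility is ESXi 6.0 or later;
- a GPU profile is selected;
- all guest memory is reserved.

This is a minimal sketch. `vgpu_config_problems` and its parameters are hypothetical, and memory values are assumed to be in MB.

```python
def vgpu_config_problems(host_has_grid_gpu: bool, compat_esxi_major: int,
                         gpu_profile: str, memory_mb: int, reservation_mb: int) -> list:
    """Return the unmet vGPU requirements for a planned VM configuration."""
    problems = []
    if not host_has_grid_gpu:
        problems.append("host has no NVIDIA GRID GPU with an appropriate driver")
    if compat_esxi_major < 6:
        problems.append("VM compatibility must be ESXi 6.0 or later")
    if not gpu_profile:
        problems.append("select a GPU profile (the vGPU type)")
    if reservation_mb < memory_mb:
        problems.append("reserve all guest memory")
    return problems
```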