When planning or improving an IT infrastructure, one of the most difficult challenges is choosing an approach that will require as few changes as possible when the environment scales up later. Keeping this in mind is important, because at some point almost every environment reaches a state where the need for growth becomes obvious. With hyperconvergence being popular these days, managing large setups with fewer resources involved has become considerably easier.

Today I will deal with data deduplication analysis. Data deduplication is a technique that avoids storing identical data blocks more than once. During the deduplication process, incoming data is analyzed, and unique data blocks, or byte patterns, are identified and written to the storage array. The analysis is continuous: each subsequent data block is compared to the patterns already stored. If a match is found, the system stores a small reference to the original data block instead of the block itself. In small environments the gain is usually modest, but in those with dozens or hundreds of VMs, the same patterns can occur many times. As a result, data deduplication allows storing more information on the same physical storage volume compared to traditional data storage methods. This can be achieved in several ways, one of which is StarWind LSFS (Log Structured File System), which offers inline deduplication of data on LSFS-powered virtual storage devices.
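To make the mechanism above concrete, here is a minimal Python sketch of block-level deduplication. It is only an illustration, not how StarWind LSFS is implemented: the fixed 4 KiB block size, the use of SHA-256 as the block fingerprint, and the `DedupStore` class name are all assumptions made for this example.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed block size, chosen for illustration only


class DedupStore:
    """Toy inline deduplication: unique blocks are stored once, repeats become references."""

    def __init__(self):
        self.blocks = {}      # fingerprint -> block bytes (unique blocks only)
        self.references = []  # ordered fingerprints describing the logical data

    def write(self, data: bytes) -> None:
        # Split incoming data into fixed-size blocks and fingerprint each one.
        for offset in range(0, len(data), BLOCK_SIZE):
            block = data[offset:offset + BLOCK_SIZE]
            digest = hashlib.sha256(block).hexdigest()
            if digest not in self.blocks:
                self.blocks[digest] = block   # first occurrence: store the block
            self.references.append(digest)    # every occurrence: store a reference

    def read(self) -> bytes:
        # Rebuild the logical data by following the references.
        return b"".join(self.blocks[d] for d in self.references)

    def logical_size(self) -> int:
        return sum(len(self.blocks[d]) for d in self.references)

    def physical_size(self) -> int:
        return sum(len(b) for b in self.blocks.values())


store = DedupStore()
# Three hypothetical "VM images": one shared OS block plus one unique block each.
os_block = b"\x01" * BLOCK_SIZE
for i in range(3):
    store.write(os_block + bytes([i + 2]) * BLOCK_SIZE)

print("logical bytes: ", store.logical_size())
print("physical bytes:", store.physical_size())
```

In this toy run, six logical blocks are written but only four are physically stored, because the shared block is kept once and referenced three times. A real implementation also has to account for the space taken by the references and metadata themselves.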

One of the first questions any system administrator would ask is something like “Is using data deduplication worth it?”. The answer depends on the specifics of a particular environment. To help system administrators answer such feasibility questions, StarWind offers a free tool, StarWind Deduplication Analyzer. The software can be downloaded directly from the StarWind website. When run, it analyzes the files stored on your local hard drive or on a remote ESXi server datastore and outputs the results.

Now I would like to go through all the steps of the analysis procedure. For the purposes of this post, I will use an ESXi host as a test bed. I will analyze its datastore for deduplication feasibility and see how I could benefit from storing all VMs from that host on an LSFS device with deduplication enabled. The size of my datastore is around 1 TB, and it holds a bit over a dozen VMs, though not all of them are running simultaneously.

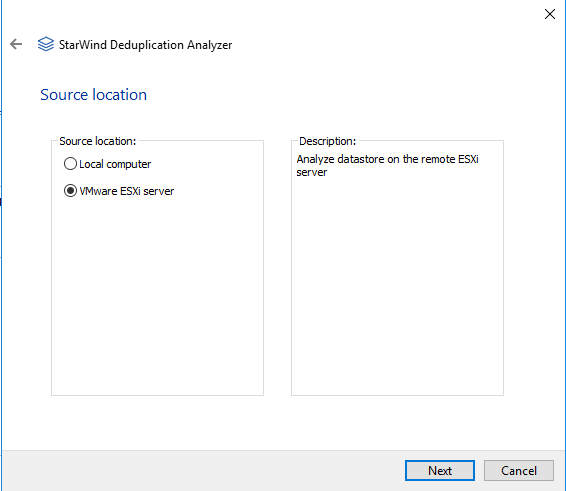

Once the application is downloaded and launched, I am prompted to select the location of the source:

For obvious reasons, my selection is “VMware ESXi server”.

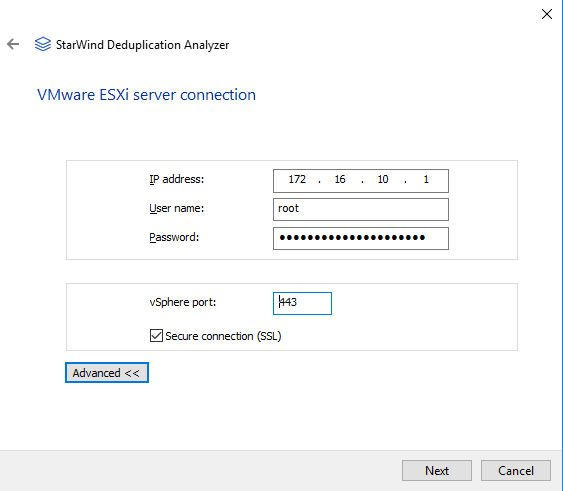

The next step requires entering the IP address of the ESXi host and the credentials for accessing the datastore:

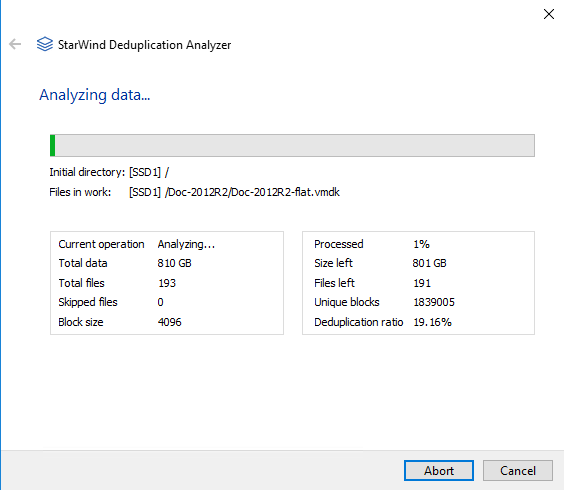

The analysis starts and presents us with live statistics on the process:

Depending on the datastore size and the network connection speed between the system where StarWind Deduplication Analyzer runs and the ESXi host, the analysis can take a long time. In my case, the network speed is 100 Mbit/s (limited by my laptop's NIC), and the total amount of data is 810 GB, as the screenshot above shows. The analysis speed I get is about 5% of the data, i.e. roughly 40 GB, per hour.
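As a rough sanity check on these numbers, the short Python sketch below estimates the total analysis time and compares the observed rate with the theoretical ceiling of the link. It assumes decimal units (1 GB = 1000 MB) and a constant rate for the whole run; the function names are made up for this example.

```python
def estimate_hours(total_gb: float, rate_gb_per_hour: float) -> float:
    """Time needed to process total_gb at a constant rate."""
    return total_gb / rate_gb_per_hour


def link_ceiling_gb_per_hour(link_mbit_per_s: float) -> float:
    """Theoretical maximum throughput of a link, ignoring protocol overhead."""
    # Mbit/s -> MB/s (divide by 8) -> MB/h (times 3600) -> GB/h (divide by 1000)
    return link_mbit_per_s / 8 * 3600 / 1000


total_gb = 810     # amount of data reported by the analyzer
observed_rate = 40 # GB per hour, as observed in this test
link_speed = 100   # Mbit/s, limited by the laptop NIC

print(estimate_hours(total_gb, observed_rate))  # 20.25
print(link_ceiling_gb_per_hour(link_speed))     # 45.0
```

So a full pass over this datastore takes around 20 hours, and the observed 40 GB per hour is already close to the 45 GB per hour that a 100 Mbit/s link can carry at best. This suggests the network, not the analyzer, is the bottleneck in my setup.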

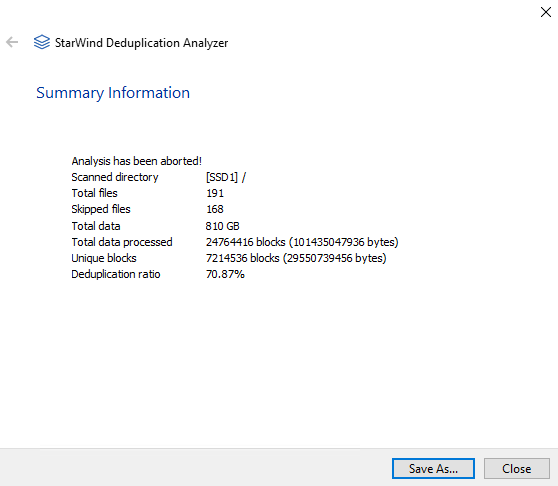

When the analysis is over, the software presents a window very similar to the one below, with an option to save the output to a file:

These are the actual analysis results that StarWind Deduplication Analyzer produces.

Conclusion

Clearly, if the resulting report shows a low deduplication ratio, there is not much to be gained from this technology. Yet, with the above analysis report on my test environment in mind, I now see that deduplication would be quite beneficial for my setup, as duplicate blocks constitute over 70% of the data on my ESXi server datastore. Deduplication would help me save underlying storage capacity and fit even more virtualized resources onto it.
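To put the 70% figure into perspective, the following Python sketch converts a duplicate share into an approximate deduplication ratio and physical footprint. It is a back-of-the-envelope estimate under stated assumptions: it treats 70% as the exact share of data that would not need to be stored (the report says "over 70%", so this is a lower bound on savings), and it ignores the space taken by references and metadata. The function name is hypothetical.

```python
def dedup_estimate(total_gb: float, duplicate_percent: float):
    """Return (physical_gb, ratio) for a given share of duplicate data."""
    unique_percent = 100 - duplicate_percent
    physical_gb = total_gb * unique_percent / 100  # data that still has to be stored
    ratio = 100 / unique_percent                   # logical size : physical size
    return physical_gb, ratio


physical_gb, ratio = dedup_estimate(total_gb=810, duplicate_percent=70)
print(f"approx. physical footprint: {physical_gb:.0f} GB")
print(f"approx. deduplication ratio: {ratio:.2f}:1")
```

Under these assumptions, the 810 GB on my datastore would shrink to roughly 243 GB, a ratio of about 3.3:1. The actual on-disk result on an LSFS device may differ.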