Two-node hyperconverged solutions

As written in the previous post, for the ROBO scenario the most interesting HCI (Hyper-Converged Infrastructure) configuration is a two-node configuration, since two nodes can be enough to run a dozen VMs (or even more).

For this reason, not all hyperconverged solutions are suitable for this case (for example, Nutanix and SimpliVity need at least three nodes). It is also not simple to scale an enterprise solution down to a small size, because of its architectural constraints.

Currently, there are some interesting products designed specifically for HCI in the ROBO scenario:

- VMware Virtual SAN in a two-node cluster

- StarWind Virtual Storage Appliance

- StorMagic SvSAN

There are also other VSA-based solutions (like HP StoreVirtual, FalconStor NSS, DataCore, …), as well as the new Microsoft Storage Spaces Direct, that can work for a two-node hyperconverged infrastructure. To keep the examples focused, I will only consider the VMware Virtual SAN product and a generic VSA-based product (but one specific to ROBO).

Note that some ROBO storage-related products are probably designed for other use cases, like the Nutanix solution for ROBO, which is just a single remote storage node.

What you have to consider for each solution is the license model (and the cost, because some solutions are aimed more at enterprise usage than at the ROBO scenario), the type of architecture, how a two-node cluster handles the split-brain scenario, the resiliency of the solution, the resource requirements (HCI uses part of the local resources on each node), and so on.

VMware Virtual SAN

VMware Virtual SAN (or vSAN) is the HCI solution integrated with ESXi (starting from version 5.5), but sold as a separate product. For ROBO there is also a specific SKU licensed per VM.

For more information see this official document:

https://www.vmware.com/files/pdf/products/vsan/vmware-vsan-robo-solution-overview.pdf

Starting with version 6.1, Virtual SAN can be implemented with as few as two physical vSphere hosts at each office. However, the minimum number of ESXi nodes is still three, to avoid split-brain scenarios, so you still need a third ESXi host that acts only as a witness (it does not host any VM data). The interesting aspect is that this host can be a virtual machine (running a nested ESXi): a vSphere host virtual appliance can be the third node in the Virtual SAN cluster.

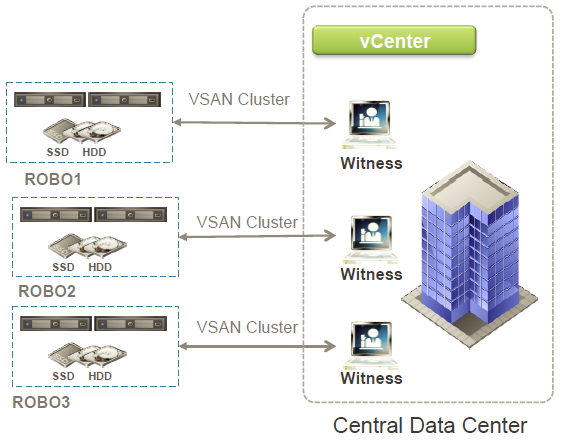

This third node is the “witness” node, and one dedicated witness is required for each Virtual SAN cluster with two physical nodes (so you need a witness for each location running a two-node Virtual SAN cluster).
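Why the witness avoids split-brain can be illustrated with a simplified majority-vote model: a partition of the cluster may keep serving data only if it holds more than half of all votes. This sketch assumes one vote per host; vSAN actually accounts votes per object and per component, so the member names and vote counts below are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Member:
    """A cluster member with a number of votes (simplified: one vote per host)."""
    name: str
    votes: int = 1


def has_quorum(partition, cluster):
    """Return True if the partition holds a strict majority of all votes."""
    total = sum(m.votes for m in cluster)
    held = sum(m.votes for m in partition)
    return 2 * held > total


node_a = Member("esxi-a")
node_b = Member("esxi-b")
witness = Member("witness")  # hypothetical witness appliance, holds no VM data
cluster = [node_a, node_b, witness]

# The link between the two data nodes fails; only node A still reaches the witness.
print(has_quorum([node_a, witness], cluster))  # True: A keeps running
print(has_quorum([node_b], cluster))           # False: B stops, so no split-brain

# Without a witness, neither half of a two-node cluster has a majority.
print(has_quorum([node_a], [node_a, node_b]))  # False
```

The strict majority (`2 * held > total`) is what makes a two-node cluster without a third vote unable to decide which half should survive.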

Witness nodes can be deployed to a vSphere environment in a central main data center, as in this example:

However, this is not the only supported approach.

There are different Virtual SAN topologies, described in several documents (see the VMware Virtual SAN 6.2 for Remote Office and Branch Office Deployment Reference Architecture) and posts (see for example this post), but the two supported topologies are these centralized architectures:

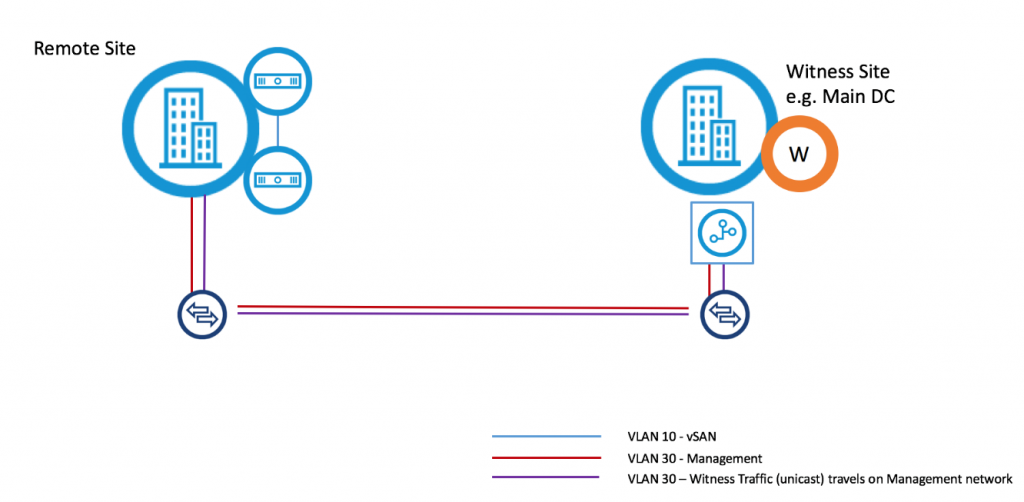

- Witness running in the main DC: in this example, the witness (W) runs remotely on another vSphere site, such as back in your primary data center, even on a vSAN deployment.

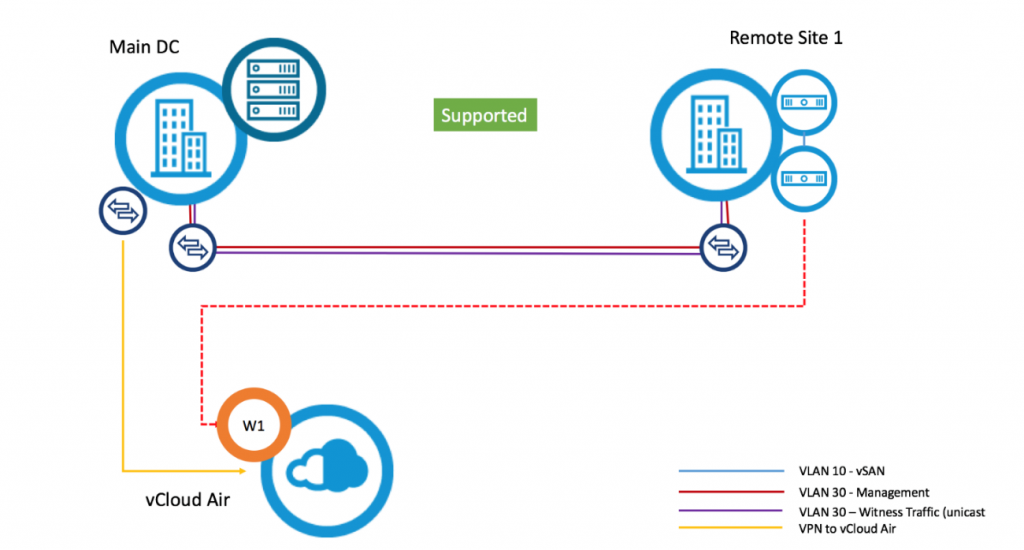

- Witness running in vCloud Air: the witness (W1) runs remotely in vCloud Air, which can be interesting to save resources in the main office, or where there isn’t really a “main” office (all offices are roughly the same size).

Depending on your type of infrastructure and the network connectivity across sites, one solution may be better than the other. However, considering that VMware has recently sold the vCloud Air business, the second option should be investigated more carefully in the future.

Remember that vSAN traffic will use the default gateway of the Management VMkernel interface to reach the Witness, unless additional networking is put in place, such as an advanced switch configuration or static routing on the ESXi hosts and/or the Witness Appliance.
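The effect of a static route can be sketched with ordinary longest-prefix-match route selection: without a specific route, traffic to the witness subnet falls through to the default gateway of the management network. The addresses, gateways and the interface column below are hypothetical (on ESXi the outgoing VMkernel interface is really derived from the gateway's subnet, and a static route is usually added with `esxcli network ip route ipv4 add`); this is a model of the routing decision, not ESXi code.

```python
import ipaddress

# Hypothetical routing table: (destination network, gateway, VMkernel interface)
DEFAULT_ONLY = [
    ("0.0.0.0/0", "192.168.10.1", "vmk0"),  # default gateway of the management network
]
WITH_STATIC = DEFAULT_ONLY + [
    ("172.16.50.0/24", "192.168.20.1", "vmk1"),  # static route towards the witness subnet
]


def select_route(destination, routes):
    """Pick the route with the longest matching prefix, as IP routing does."""
    addr = ipaddress.ip_address(destination)
    candidates = []
    for network, gateway, vmk in routes:
        net = ipaddress.ip_network(network)
        if addr in net:
            candidates.append((net.prefixlen, gateway, vmk))
    if not candidates:
        raise LookupError(f"no route to {destination}")
    _, gateway, vmk = max(candidates, key=lambda c: c[0])
    return gateway, vmk


witness_ip = "172.16.50.10"  # hypothetical witness address
print(select_route(witness_ip, DEFAULT_ONLY))  # ('192.168.10.1', 'vmk0')
print(select_route(witness_ip, WITH_STATIC))   # ('192.168.20.1', 'vmk1')
```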

For data resiliency, a two-node cluster currently works as two separate fault domains, so data is mirrored across the nodes. Note, however, that there is (currently) no local resiliency inside a node. This is good for capacity, but it is a potential risk: if you lose the availability of one node and also lose a disk on the remaining node, data can be lost. vSAN 6.6 makes local resiliency possible in a metro-cluster (stretched cluster) configuration, so I expect this feature to be extended soon to the ROBO scenario to improve data resiliency (currently this additional redundancy works only across nodes in the same fault domain, so it cannot work for ROBO, where there is only one node per fault domain).
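The trade-off above can be made concrete with a small model of an object mirrored across the two nodes, with and without protection inside each node. It is a hypothetical sketch, not how vSAN places components: it ignores witness votes, assumes the worst case (every failed disk held part of the object's copy), and uses local RAID5 only as an example of in-node protection.

```python
from dataclasses import dataclass


@dataclass
class NodeCopy:
    """One node's copy of a mirrored object (hypothetical, simplified model)."""
    up: bool = True
    failed_disks: int = 0
    local_tolerance: int = 0  # disk failures absorbed inside the node: 0 = none, 1 = e.g. local RAID5

    def readable(self) -> bool:
        # Worst case: assume every failed disk held part of this object's copy.
        return self.up and self.failed_disks <= self.local_tolerance


def object_available(copies):
    """A RAID-1 mirror across fault domains needs at least one readable copy."""
    return any(c.readable() for c in copies)


def usable_tb(disks_per_node, disk_tb, local_raid5):
    """Usable capacity of a two-node mirror: one node's worth, minus local parity."""
    data_disks = disks_per_node - 1 if local_raid5 else disks_per_node
    return data_disks * disk_tb


# Node A is down and node B has lost one disk.
print(object_available([NodeCopy(up=False), NodeCopy(failed_disks=1)]))  # False
print(object_available([NodeCopy(up=False, local_tolerance=1),
                        NodeCopy(failed_disks=1, local_tolerance=1)]))   # True

# The price of local protection, with 4 x 2 TB disks per node.
print(usable_tb(4, 2, local_raid5=False))  # 8
print(usable_tb(4, 2, local_raid5=True))   # 6
```

This shows both sides of the statement: no local protection maximizes capacity, but a node failure combined with a disk failure on the surviving node makes the object unavailable.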

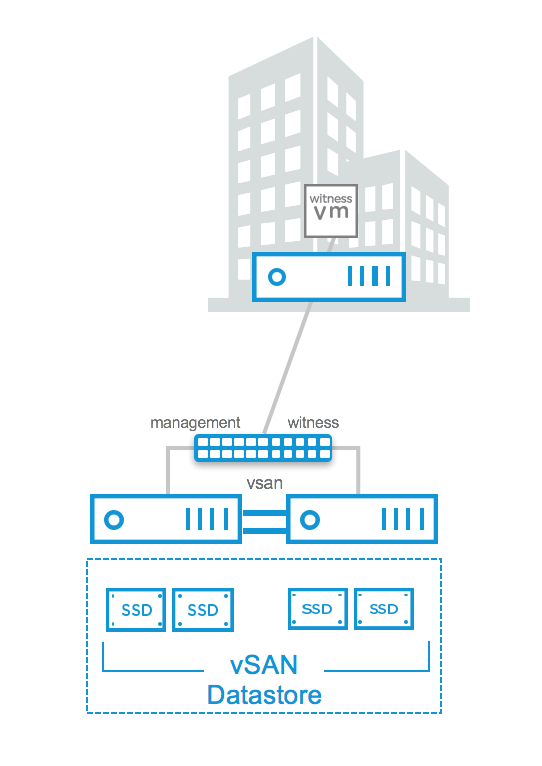

About network requirements, vSAN version 6.5 adds a really important capability: the ability to directly connect two vSAN data nodes using one or more crossover cables.

This is accomplished by tagging an alternate VMkernel port with a traffic type of “Witness.” The data and metadata communication paths can now be separated: metadata traffic destined for the Witness vSAN VMkernel interface can be sent through an alternate VMkernel port.

This makes it easy to use 10 Gbps interfaces without the cost of a 10 Gbps switch.

With dual NICs for redundancy, we could assign vMotion traffic to the other 10 Gbps NIC (remember that there should be only one vSAN VMkernel port, and it does not use multiple active interfaces the way vMotion can).

VMware vSAN cluster configuration is really easy, but a two-node cluster requires some extra configuration for the witness and the “cross-traffic”. More information is available in this blog post.

VMware vSAN pros:

- Costs: the per-VM cost model is interesting because it is independent of the capacity and the resources of the node.

- Management: everything is centralized in the vCenter Web Client interface, with interesting monitoring tools (in recent versions of vSAN).

- Kernel mode: it works at the hypervisor level, which means no VSA is required (and, considering the per-VM ROBO licensing for vSphere, you save at least two licenses).

VMware vSAN cons:

- Kernel mode: it works at the hypervisor level, so to update vSAN you need to update the ESXi version. It can also be a potential vendor lock-in, because you are “forced” to use ESXi as the virtualization platform.

- Local resiliency: there is no simple solution (yet) for local RAID5 (or RAID6) redundancy to avoid data loss when one node is unavailable and the other has a disk failure.

- SSDs are mandatory: for ROBO you may not really need SSD storage, but vSAN requires at least one SSD for each node (not for the witness).

- Caching options: only SSDs (or other flash-based devices) can be used for caching; the host RAM alone cannot be used.

- Witness architecture: it can be centralized and it can be virtual, but it is still an ESXi host (with “huge” memory requirements) and you need one for each site. This may not scale easily to several remote offices, or at least you also have to plan for these resources in the main office.

VSA based solutions

This type of solution is based on a VM on each host that acts as a storage controller, turning the local storage into shared storage for a VMware datastore (usually via the NFS or iSCSI protocols). It provides data resiliency across nodes using synchronous replication, but it can also provide local resiliency (at least by using a local RAID controller, and sometimes also through product-specific features).
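Synchronous replication between the two VSAs can be sketched as a mirror that acknowledges a write only after both nodes have stored it. The class and node names are hypothetical, and the degraded-mode behaviour (keep writing locally while the partner is unreachable, track changed blocks, resync later) is an assumption about typical designs, not any specific product's protocol. A real product would also consult its witness or tiebreaker before continuing alone.

```python
class Replica:
    """A block store on one node (hypothetical, in-memory)."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}
        self.online = True

    def write(self, lba, data):
        if not self.online:
            raise ConnectionError(f"{self.name} is unreachable")
        self.blocks[lba] = data


class SyncMirror:
    """Write to both nodes before acknowledging; track misses for a later resync."""

    def __init__(self, local, partner):
        self.local = local
        self.partner = partner
        self.dirty = set()  # blocks written while the partner was unreachable

    def write(self, lba, data):
        self.local.write(lba, data)
        try:
            self.partner.write(lba, data)
        except ConnectionError:
            # Degraded mode: keep serving I/O locally, remember what to copy back.
            self.dirty.add(lba)
        return "ack"  # returned only after both writes, or after the miss is recorded

    def resync(self):
        for lba in sorted(self.dirty):
            self.partner.write(lba, self.local.blocks[lba])
        self.dirty.clear()


a, b = Replica("vsa-a"), Replica("vsa-b")
mirror = SyncMirror(a, b)
mirror.write(0, b"boot")
b.online = False
mirror.write(1, b"log")  # partner down: acknowledged, block 1 marked dirty
b.online = True
mirror.resync()
print(b.blocks)  # {0: b'boot', 1: b'log'}
```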

Main products in this category are:

Note that VMware started its storage offering this way (with the VMware vSphere Storage Appliance, VSA), but then completely changed approach in favor of a more scalable solution like vSAN.

In most cases both VSAs act as active-active storage, which means that with the right multipath policy (for block-level shared storage) you can provide data locality for your VMs.
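Data locality with active-active VSAs boils down to a path preference: use the path to the VSA on the same host while it is healthy, and fail over to the partner VSA otherwise. The sketch below is a hypothetical policy written for illustration; in practice this choice is made by the hypervisor's multipathing stack (for example a fixed/preferred-path policy), and writes are still mirrored synchronously, so locality mainly benefits reads.

```python
from dataclasses import dataclass


@dataclass
class Path:
    target: str  # VSA serving this path (hypothetical name)
    local: bool  # True if that VSA runs on the same host as the VM
    healthy: bool = True


def choose_path(paths):
    """Prefer a healthy path to the local VSA; otherwise fail over to the partner."""
    healthy = [p for p in paths if p.healthy]
    if not healthy:
        raise RuntimeError("all paths down (APD)")
    local = [p for p in healthy if p.local]
    return (local or healthy)[0]


paths = [Path("vsa-b", local=False), Path("vsa-a", local=True)]
print(choose_path(paths).target)  # vsa-a: I/O stays on the local VSA
paths[1].healthy = False
print(choose_path(paths).target)  # vsa-b: failover to the partner VSA
```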

VSA-based solutions pros:

- Costs: it could be a pro or a con, depending on the license model and the size of your remote offices. In most cases licensing is per capacity.

- Management: depending on the product, you can have central management (and also central provisioning), possibly integrated with vCenter Server.

- Complete data resiliency: not only across nodes but also inside the node.

- SSDs are optional: for ROBO you may not really need SSD storage, so you can choose whether to use them (in some cases also for caching).

- Witness architecture: depending on the solution, in most cases a single witness can serve all the remote sites. This witness also requires few resources and can easily be deployed in the remote office (if network reliability to the main site is limited), in some cases even on a Linux-based NAS system (to reduce the number of local devices).

- Virtual appliance mode: it works inside a virtual storage appliance, which means potential hypervisor independence (most solutions work at least with both VMware vSphere and Microsoft Hyper-V).

VSA based solutions cons:

- Costs: it could be a pro or a con, depending on the license model and the size of your remote offices. In most cases licensing is per capacity.

- Virtual appliance mode: it works inside a virtual storage appliance, which means at least one extra VM on each node (and, considering the per-VM ROBO licensing for vSphere, you waste at least two licenses per site).

- VSA placement: the VSA must be placed on local storage, which means additional disks for it (or logical disks on your local controller). An SD card is not an option (it can be used only for the ESXi installation); a SATADOM could be a solution, but you also have to consider the proper resiliency of this disk.

- Infrastructure start-up: booting the entire ROBO infrastructure can be slow (slower than usual), because you first boot the hypervisor layer, which looks for shared storage that is not yet available; then you boot the VSAs, reconnect the shared storage, and only at this point can you start the other VMs. A good APD/PDL (All Paths Down / Permanent Device Loss) configuration is needed to avoid unexpected errors.
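The start-up sequence described in the last point can be sketched as an ordered procedure: start the VSA, wait (with a timeout) until the shared datastore answers, rescan storage, and only then power on the workload VMs. All the callables (`start_vsa`, `datastore_ready`, `rescan_storage`, `start_vm`) are hypothetical hooks standing in for whatever your hypervisor or product actually provides; the timeout values are assumptions as well.

```python
import time


def wait_for(check, timeout_s, interval_s):
    """Poll check() until it returns True or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if check():
            return True
        time.sleep(interval_s)
    return check()


def start_site(start_vsa, datastore_ready, rescan_storage, start_vm, vms,
               timeout_s=600, interval_s=10):
    """Boot order for a VSA-based site: VSA, then shared storage, then workload VMs."""
    start_vsa()
    if not wait_for(datastore_ready, timeout_s, interval_s):
        raise TimeoutError("shared datastore did not come back")
    rescan_storage()
    for vm in vms:
        start_vm(vm)


# Usage with fake hooks: the datastore becomes ready on the third poll.
events = []
polls = iter([False, False, True])
start_site(
    start_vsa=lambda: events.append("vsa"),
    datastore_ready=lambda: next(polls),
    rescan_storage=lambda: events.append("rescan"),
    start_vm=lambda vm: events.append(f"vm:{vm}"),
    vms=["dc01", "file01"],
    timeout_s=5,
    interval_s=0,
)
print(events)  # ['vsa', 'rescan', 'vm:dc01', 'vm:file01']
```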

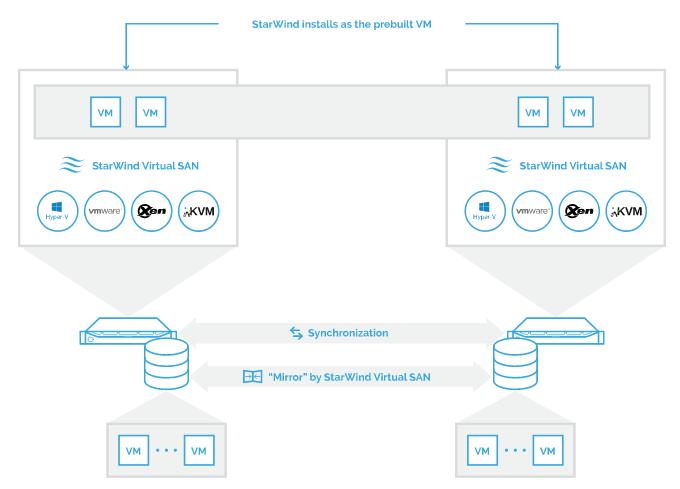

VSAN from StarWind

The overall architecture of VSAN from StarWind is the following:

The solution is a native Windows (running on top of Windows Server 2012 R2), hypervisor-centric, hardware-less VM storage solution. It creates a fully fault-tolerant, high-performing storage pool purpose-built for virtualization workloads by mirroring the existing servers’ storage and RAM between the participating storage cluster nodes. The mirrored storage resource is then connected to all cluster nodes and treated just as local storage by both hypervisors and clustered applications. Fault tolerance is achieved by providing multipathed access to all storage nodes.

It is a software-based solution for traditional servers; alternatively, deployment can be simplified by using the StarWind HyperConverged Appliance.

Those who need to build and maintain a virtualization infrastructure at little to no expense may be interested in VSAN from StarWind Free.

StarWind VSAN has a simple base configuration of just two nodes, without the need for voting or quorum facilities, and is exceptionally easy to operate. All local storage is shared via the iSCSI protocol, so it works with almost all hypervisors (and potentially not only with hypervisors). It reduces costs by requiring less hardware: no switches, no mandatory flash, no 10 GbE, and fewer servers.

There are three different editions (besides the free one):

- Standard: designed for SMB and ROBO scenarios, it creates up to 4 TB of synchronously replicated volumes (HA), or single targets (Non-HA) with unlimited capacity. The license is not upgradable beyond the basic capacity.

- Enterprise: it starts with two licensed nodes and scales out to an unlimited number of nodes. There are no storage capacity limitations. The license includes asynchronous replication to a dedicated Disaster Recovery (DR) or Passive Node (as an option at extra cost).

- Data Center: created to handle any workload, it features unlimited capacity and an unlimited number of nodes. The license includes asynchronous replication to a dedicated Disaster Recovery (DR) or Passive Node.

StorMagic SvSAN

The overall architecture of StorMagic SvSAN is the following:

Each instance of SvSAN runs as a VSA (Linux-based and quite tiny) on servers with local storage. SvSAN supports the industry-leading hypervisors, VMware vSphere and Microsoft Hyper-V, and provides the shared storage (again through the iSCSI protocol) necessary to enable advanced hypervisor features such as High Availability/Failover Cluster, vMotion/Live Migration and VMware Distributed Resource Scheduler (DRS)/Dynamic Optimization.

On the VMware vSphere platform, there is powerful integration with vCenter Server that provides not only management and monitoring features but also deployment capabilities for both the VSA and the shared datastores.

The Neutral Storage Host (NSH) is a key component in this architecture: it acts as a quorum or tiebreaker and assists cluster leadership elections to eliminate “split-brain” scenarios.

A single NSH can be shared between multiple remote sites (really interesting compared to the vSAN witness, which must be one per ROBO site) and employs a lightweight communication protocol, making efficient use of the available network connectivity and allowing it to tolerate low-bandwidth, high-latency WAN links. A number of supported NSH configurations are available, including local quorum, remotely shared quorum, or no quorum, to suit different customer requirements.
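Why one tiebreaker can serve many sites can be shown with a hypothetical model that keeps one leadership record per cluster: when the two nodes of a site lose contact, the first one to ask becomes leader and its partner is refused, while other sites are arbitrated independently. This is an illustrative sketch of the tiebreaker idea, not StorMagic's actual NSH protocol; the class, cluster and node names are invented.

```python
class Tiebreaker:
    """One shared tiebreaker arbitrating many two-node clusters (hypothetical model)."""

    def __init__(self):
        self.leaders = {}  # cluster id -> node currently allowed to serve I/O alone

    def request_leadership(self, cluster_id, node):
        # The first node to ask after a partition wins; its partner is refused.
        # A node that cannot reach the tiebreaker never receives a grant,
        # so it must stop serving I/O on its own.
        return self.leaders.setdefault(cluster_id, node) == node

    def release(self, cluster_id):
        # Called once the two nodes see each other again and have resynchronized.
        self.leaders.pop(cluster_id, None)


nsh = Tiebreaker()
print(nsh.request_leadership("site-01", "node-a"))  # True
print(nsh.request_leadership("site-01", "node-b"))  # False: no split-brain
print(nsh.request_leadership("site-02", "node-c"))  # True: sites are independent
```

Because the state per site is just one small record, the resource cost of adding sites stays low, which is the point made above about sharing a single NSH.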

It is possible to build your own SvSAN storage solution using just a pair of commodity x86 servers with internal storage from any server vendor. There are also some pre-built configurations with OEM partners (like Cisco UCS) for a “physical” appliance approach.

Starting from version 6.0, there are two editions:

- Standard: includes everything that you need in a software-defined storage solution to configure for the lowest-cost, highly available infrastructure.

- Advanced: provides highly sophisticated memory caching and intelligent data auto-tiering, to unleash the full power of memory and hybrid disk configurations.

HCI Node sizing

Since all resources are local and the storage solution consumes part of them, you have to consider the sizing aspects carefully. Each product may have its own advice or best practices to follow, but generally:

- CPU: usually CPU is not a constraint, and an HCI solution (in a two-node cluster) may not use much of it, so the general considerations from the previous posts still apply.

- RAM: depending on the HCI solution, you may need more RAM. Some solutions may need just 1 GB of RAM per node; check the product requirements carefully.

- Storage: the choice between HDD and SSD depends on I/O and capacity needs, but also on product requirements (vSAN requires at least one SSD plus capacity disks on each node) and on budget constraints. Note that currently some types of server SSDs are cheaper (and bigger) than 15k disks.

- Storage adapter: the type of HBA depends on the solution. For vSAN you need a certified storage controller; for VSA-based solutions, any storage controller certified for the hypervisor should be fine.

- Network adapter: in HCI the network is also the storage back-end, so you should plan the number of NICs carefully. VMs and host management can work with just a couple of 1 Gbps NICs, but dedicated NICs (ideally 10 Gbps) are recommended for the storage side.
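The sizing points above can be combined into a quick back-of-the-envelope calculation. This sketch assumes that the cluster is planned so that one node can run all VMs after a failure, that the storage layer reserves a fixed amount of CPU and RAM on each node, and that data is mirrored once across the two nodes; the class names and every number in the example are hypothetical, so use your product's documented requirements instead.

```python
from dataclasses import dataclass


@dataclass
class NodeSpec:
    cores: int
    ram_gb: int
    raw_tb: int  # raw capacity of the local disks in one node


@dataclass
class StorageOverhead:
    cores: int  # cores reserved for the storage layer (VSA or hypervisor module)
    ram_gb: int  # RAM reserved for the storage layer and its cache
    copies: int = 2  # synchronous mirror across the two nodes


def capacity_for_vms(node, overhead):
    """Resources left for VMs in a two-node cluster, planned so one node can run everything."""
    total_raw_tb = 2 * node.raw_tb
    return {
        # Failover planning: the surviving node must host all VMs on its own.
        "cores": node.cores - overhead.cores,
        "ram_gb": node.ram_gb - overhead.ram_gb,
        # Every block is stored once per node, so usable space is raw / copies.
        "usable_tb": total_raw_tb // overhead.copies,
    }


node = NodeSpec(cores=16, ram_gb=128, raw_tb=8)
overhead = StorageOverhead(cores=2, ram_gb=8)  # hypothetical figures, check your product
print(capacity_for_vms(node, overhead))
# {'cores': 14, 'ram_gb': 120, 'usable_tb': 8}
```

Local RAID inside each node (as discussed for VSA-based solutions) would reduce `usable_tb` further and is not modeled here.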

In the next (and last) post, I will make some considerations on the backup aspects.