I’ve been using VMware’s VPID (Virtual Port ID) teaming, shown in vSphere as “Route based on originating virtual port”, for some time now, both at work and in my home lab. I was curious to see exactly how VMware handles a NIC going down and then coming back up, and it turned out to be a lot more powerful and smooth than I first thought.

In my lab, I’ve got several HP Microservers and a mix of TP-Link and Netgear switches.

I’ve found the TP-Link switches to be perfect for a lab, as they have 48 1 Gbit Ethernet ports plus 4 1 Gbit SFP (fibre) ports. TP-Link hasn’t cut corners the way Netgear has by sharing the last two Ethernet ports with the first two SFP ports. On the Netgear, you can use either the last two Ethernet ports OR the first two SFP ports, not both, so you cannot use all of the ports on the switch.

With TP-Link, all the ports are available, and I find the web GUI a little more intuitive. I did hit a bug on the TP-Link where the SNMP engine kept crashing, but this was fixed in a firmware upgrade, so it wasn’t a major issue.

Anyway, back to VMware and VPID!

Once I had the switches up and running, I added them all to Observium. If you’ve not come across Observium before, it’s really worth checking out. It’s a nice little application that installs fairly easily on Linux and polls switches and other devices over SNMP for information such as bandwidth and switch port errors.
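
Bandwidth graphs like Observium’s are usually derived from interface octet counters sampled at each poll. The snippet below is not Observium’s code; it is a minimal sketch of the standard calculation, assuming two samples of the same counter (for example IF-MIB `ifHCInOctets`) taken one poll interval apart. The function name `rate_bps` and the sample values are hypothetical.

```python
def rate_bps(prev_octets: int, curr_octets: int, interval_s: float,
             counter_bits: int = 64) -> float:
    """Average bits per second between two SNMP octet-counter samples.

    Assumes both samples come from the same interface counter, e.g.
    IF-MIB ifHCInOctets (Counter64) or ifInOctets (Counter32).
    """
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    # Counters wrap back to zero at 2**bits, so take the delta modulo the
    # counter size; this assumes at most one wrap per poll interval.
    delta_octets = (curr_octets - prev_octets) % (2 ** counter_bits)
    return delta_octets * 8 / interval_s  # octets -> bits, per second


# Two hypothetical samples taken one five-minute poll apart.
print(rate_bps(0, 37_500_000, 300))  # 1000000.0, i.e. 1 Mbit/s
```

Note that a switch reboot also resets the counters, which this sketch would misread as a wrap; a real poller has to detect that case separately.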

Observium also has a couple of other features, including alerting when a port goes down. This can be handy when working in a datacentre, as it’s very easy to accidentally dislodge a cable. This is also where VPID, NIC teaming in Windows and bonding in Linux can save you from an outage.

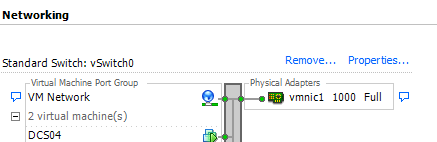
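
A port-down alert boils down to comparing link state between two polls. This is not how Observium implements its alerting; it is a minimal sketch of the idea, assuming each poll has already been fetched as a mapping of `ifIndex` to IF-MIB `ifOperStatus` (1 = up, 2 = down). The function name and the sample polls are hypothetical, and on many switches `ifIndex` does not match the front-panel port number.

```python
from typing import Dict, List

# ifOperStatus values defined in IF-MIB (RFC 2863).
IF_OPER_UP = 1
IF_OPER_DOWN = 2


def ports_gone_down(previous: Dict[int, int], current: Dict[int, int]) -> List[int]:
    """Return ifIndexes that were up at the last poll but are not up now.

    Both arguments map ifIndex -> ifOperStatus. A port missing from
    `current` is not reported here.
    """
    return sorted(
        if_index
        for if_index, status in current.items()
        if previous.get(if_index) == IF_OPER_UP and status != IF_OPER_UP
    )


# Hypothetical polls: interface 13 has just lost link.
before = {13: IF_OPER_UP, 17: IF_OPER_UP, 18: IF_OPER_DOWN}
after = {13: IF_OPER_DOWN, 17: IF_OPER_UP, 18: IF_OPER_DOWN}
print(ports_gone_down(before, after))  # [13]
```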

In my test lab, I’ve a VM called “DCS04” which has an IP of 10.253.1.44 and a MAC of 00-50-56-93-2b-c9. The MAC shows that it’s a VMware VM, as 00-50-56 is one of VMware’s registered OUIs (the vendor prefix that makes up the first three bytes of a MAC address).
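
Checking the vendor prefix is easy to automate. This is a minimal sketch, assuming MACs arrive in any of the common separator styles; the function names are hypothetical, and `VMWARE_OUIS` holds only two commonly seen VMware prefixes, not an exhaustive list. A locally overridden MAC can of course carry any prefix, so a match is a strong hint rather than proof.

```python
import re

# Two commonly seen VMware OUIs; this set is not exhaustive.
VMWARE_OUIS = {"005056", "000C29"}


def normalise_mac(mac: str) -> str:
    """'00-50-56-93-2b-c9', '00:50:56:93:2B:C9' or '0050.5693.2bc9' -> '005056932BC9'."""
    digits = re.sub(r"[-:.]", "", mac).upper()
    if not re.fullmatch(r"[0-9A-F]{12}", digits):
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    return digits


def looks_like_vmware(mac: str) -> bool:
    # The OUI is the first three octets, i.e. the first six hex digits.
    return normalise_mac(mac)[:6] in VMWARE_OUIS


print(looks_like_vmware("00-50-56-93-2b-c9"))  # True (DCS04)
print(looks_like_vmware("00-50-aa-00-00-01"))  # False: starts 00-50 but isn't in VMWARE_OUIS
```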

On my ESXi host, I can see that this VM is being served through vmnic1 as the hypervisor only has one physical NIC allocated to the vSwitch.

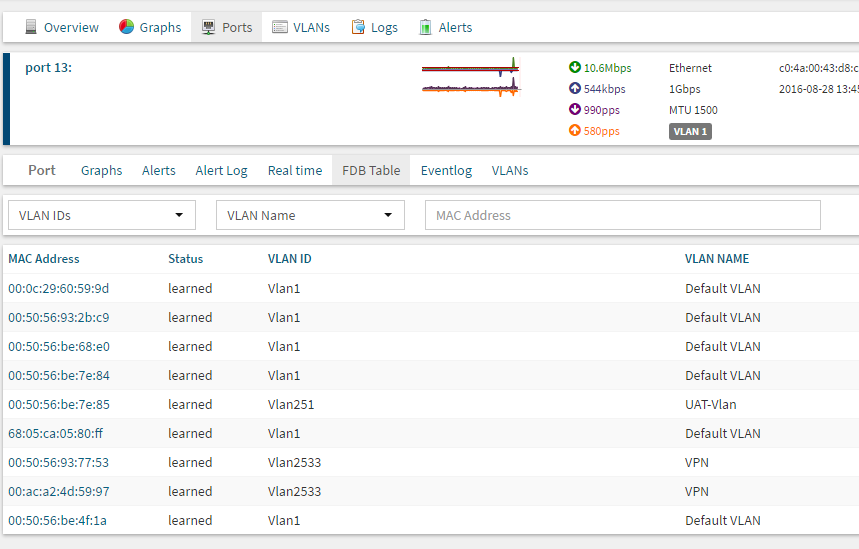

Searching in Observium, I can see the connection showing up in the FDB (forwarding database, basically the switch’s MAC address table). Observium clearly shows me all the other VMs on that connection as well as the physical NIC itself.
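
Conceptually, an FDB is a list of (MAC, port) entries the switch has learned, and the search above is a lookup in that list followed by listing everything else on the same port. This is a minimal sketch of those two lookups, assuming the table has already been retrieved; the function names are hypothetical, and apart from DCS04’s MAC and port 13 the entries are made up.

```python
import re
from typing import List, Optional, Sequence, Tuple


def _norm(mac: str) -> str:
    # Compare MACs regardless of separator style or letter case.
    return re.sub(r"[-:.]", "", mac).lower()


def find_port(fdb: Sequence[Tuple[str, int]], mac: str) -> Optional[int]:
    """Return the switch port a MAC was learned on, or None if it isn't in the table."""
    target = _norm(mac)
    for entry_mac, port in fdb:
        if _norm(entry_mac) == target:
            return port
    return None


def macs_on_port(fdb: Sequence[Tuple[str, int]], port: int) -> List[str]:
    """Every MAC learned on a port, e.g. all the VMs behind one vmnic."""
    return [_norm(m) for m, p in fdb if p == port]


# Hypothetical FDB: only DCS04's MAC and port 13 come from the post.
fdb = [
    ("00:50:56:93:2b:c9", 13),  # DCS04
    ("00:50:56:93:00:01", 13),  # made-up VM
    ("00:50:56:93:00:02", 13),  # made-up VM
    ("00:50:56:93:00:03", 5),   # made-up VM on another host
]
port = find_port(fdb, "00-50-56-93-2b-c9")
print(port, macs_on_port(fdb, port))
```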

If I now drop another NIC into the vSwitch whilst continuously pinging DCS04 I see this:

A couple of ping packets take longer than others. Not a big deal and it could be down to the server being busy. The important things it that there are no packet drops.

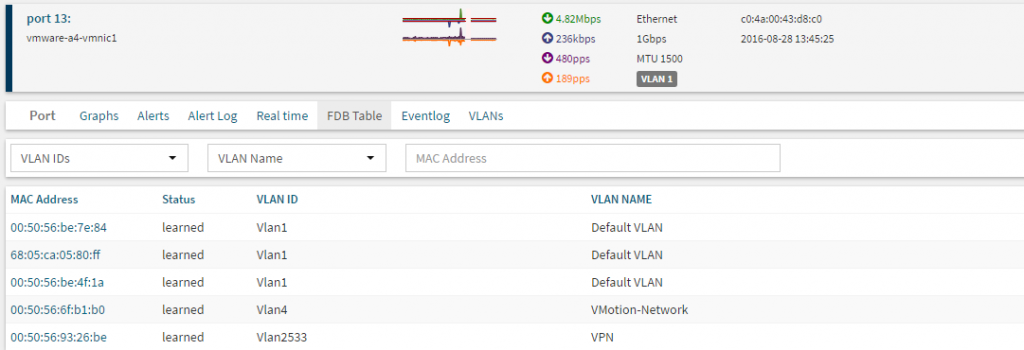
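
To check a long continuous ping (for example `ping -t` on Windows) for drops and slow replies without scrolling through it, the round-trip times can be summarised. This is a minimal sketch, assuming the replies have already been parsed into a list of milliseconds with `None` for each timeout (parsing is not shown); the function name, the 3x-median spike threshold and the sample values are all hypothetical.

```python
from statistics import median
from typing import Optional, Sequence


def summarise_pings(rtts_ms: Sequence[Optional[float]], spike_factor: float = 3.0) -> dict:
    """Summarise a continuous ping; None marks a request that timed out."""
    replies = [r for r in rtts_ms if r is not None]
    lost = len(rtts_ms) - len(replies)
    typical = median(replies) if replies else None
    # Arbitrary threshold: anything over spike_factor x the median counts as a spike.
    spikes = [r for r in replies if typical is not None and r > spike_factor * typical]
    return {
        "sent": len(rtts_ms),
        "lost": lost,
        "loss_pct": 100.0 * lost / len(rtts_ms) if rtts_ms else 0.0,
        "median_ms": typical,
        "spikes_ms": spikes,
    }


# Hypothetical RTTs, not the capture shown in the screenshot.
print(summarise_pings([1, 1, 1, 12, 1, 9, 1]))
```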

Observium polls the switches every five minutes, so one poll later I can see this on port 13, which is where this server was:

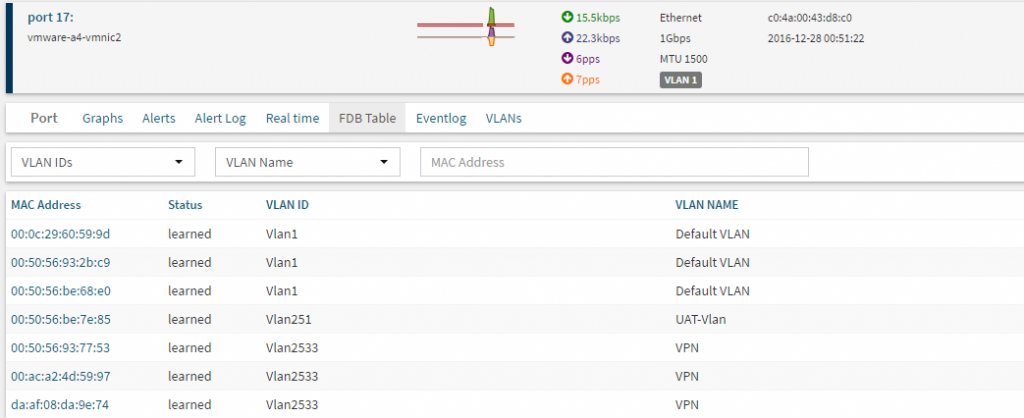

It’s actually moved the traffic of several machines off that NIC and onto the one I just added to the vSwitch. Searching for the MAC again, I can now see it on port 17 along with a few other VMs.
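
Spotting which MACs moved between two polls is a simple diff of FDB snapshots. This is a minimal sketch, assuming each snapshot is a mapping of already-normalised MAC to switch port; the function name is hypothetical, and apart from DCS04’s MAC and ports 13 and 17 the data is made up.

```python
from typing import Dict, Tuple


def moved_macs(before: Dict[str, int], after: Dict[str, int]) -> Dict[str, Tuple[int, int]]:
    """MACs seen in both FDB snapshots whose port changed: mac -> (old_port, new_port).

    Assumes MACs are normalised to the same format in both snapshots.
    """
    return {
        mac: (before[mac], after[mac])
        for mac in before.keys() & after.keys()
        if before[mac] != after[mac]
    }


# Hypothetical polls; only DCS04's MAC and ports 13/17 come from the post.
before = {"005056932bc9": 13, "005056930001": 13, "005056930002": 13}
after = {"005056932bc9": 17, "005056930001": 13, "005056930002": 17}
for mac, (old, new) in sorted(moved_macs(before, after).items()):
    print(f"{mac}: port {old} -> port {new}")
```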

When you add physical NICs to a vSwitch, as long as they are not standby adapters, VMware will immediately move part of the workload onto the new NIC. The same is true in reverse: if a NIC is removed, unplugged or fails, VMware moves its traffic onto the surviving NICs, all dynamically. Each VM’s virtual port is pinned to a single uplink at a time, so the load is spread per VM rather than per packet. The nice thing about VPID is that it needs no configuration on the physical switches, so it works across different switch hardware; teaming protocols like LACP need a matching link aggregation group on the switch side, which generally means the same switch (or stack).
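
The behaviour described above can be modelled in a few lines. This is a simplified sketch, not ESXi’s actual placement logic: it assumes each virtual port ID is mapped to an active uplink by a plain modulo rule, which is an illustrative assumption. The function name and port IDs are hypothetical; standby adapters are simply left out of `active_uplinks`, matching the point that they don’t pick up load.

```python
from typing import Dict, Iterable, Sequence


def assign_uplinks(port_ids: Iterable[int], active_uplinks: Sequence[str]) -> Dict[int, str]:
    """Simplified 'route based on originating virtual port' model.

    Each virtual port ID is pinned to exactly one active uplink. The modulo
    rule is an illustrative assumption, not ESXi's documented algorithm.
    """
    if not active_uplinks:
        raise ValueError("no active uplinks: every VM on this vSwitch is cut off")
    return {pid: active_uplinks[pid % len(active_uplinks)] for pid in port_ids}


ports = range(6)  # six hypothetical VM virtual ports
print(assign_uplinks(ports, ["vmnic1"]))            # everything on vmnic1
print(assign_uplinks(ports, ["vmnic1", "vmnic2"]))  # NIC added: load split
print(assign_uplinks(ports, ["vmnic2"]))            # vmnic1 fails: all on vmnic2
```

Because the mapping is per port, a single VM never uses more than one uplink’s bandwidth at a time, which is the trade-off for needing no switch-side configuration.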

VPID is a very nice technology for spreading VM load across multiple NICs and providing some level of fault tolerance for an ESXi host. Add Observium into the mix for near-real-time stats on how busy each network port is, plus alerts should a NIC be unplugged, and you’ve got quite a nice environment for seeing just how much traffic an ESXi host uses.

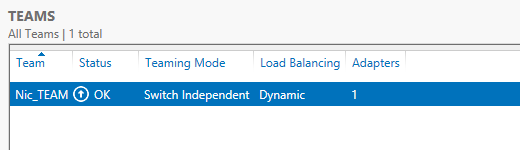

Similar switch-independent teaming exists in Windows Server, where NIC teaming can be set up through the Server Manager GUI.