Introduction

Windows Server 2016 Hyper-V brought us Switch Embedded Teaming (SET). That’s the way forward when it comes to converged networking and Software-Defined Networking with the network controller and network virtualization. It also allows for the use of RDMA on a management OS virtual NIC (vNIC). You can read about such an implementation in my blog and in the Microsoft documentation.

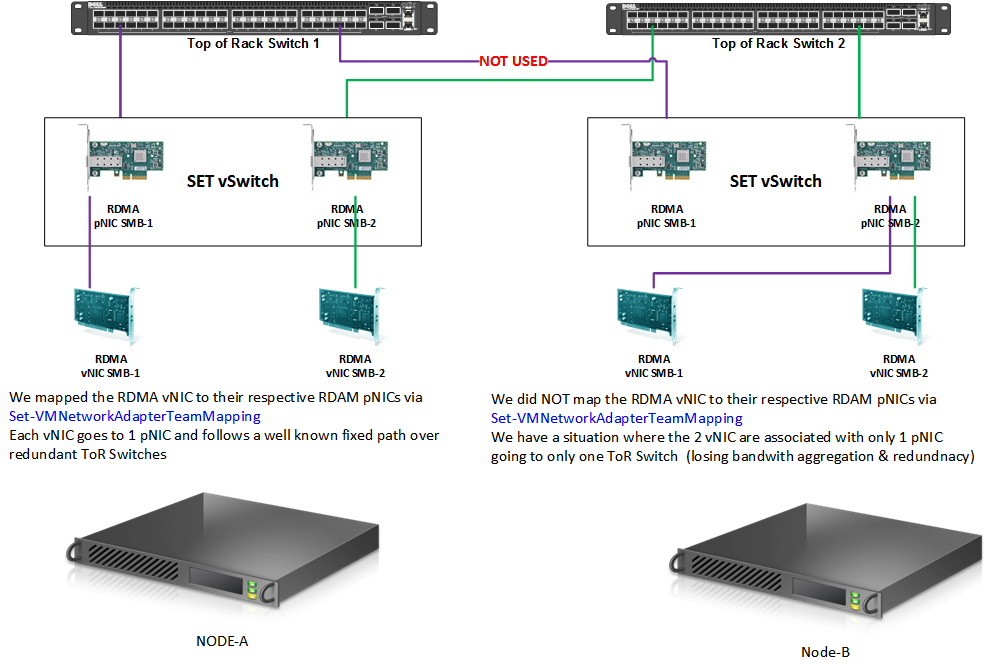

One of the capabilities within SET is affinitizing a vNIC to a particular team member, that is, a physical NIC (pNIC). This isn’t a hard requirement for SET to work properly, but it helps in certain scenarios. By vNIC we mean either a management OS vNIC or a virtual machine vNIC; affinitizing can be done for both. The main use case, here and in real life, is the management OS vNICs we use for SMB Direct traffic.

Use cases

A major use case is that you want the SMB3 vNICs used for S2D, CSV, and various types of live migration traffic to go over a well-known, predefined pNIC and, as is best practice in such cases, a well-known Top of Rack switch. These are in turn connected via a well-known path to the vNICs or pNICs in your SMB3 SOFS storage array (that might be S2D, a standard Storage Spaces SOFS, or a 3rd party storage array). For this reason, Microsoft has made this possible for a SET vSwitch via the Set-VMNetworkAdapterTeamMapping command.

To understand why this is needed we need to dive into the details of the SET vSwitch, the vNICs and the pNICs a bit more.

How vNICs and pNICs behave within the SET vSwitch

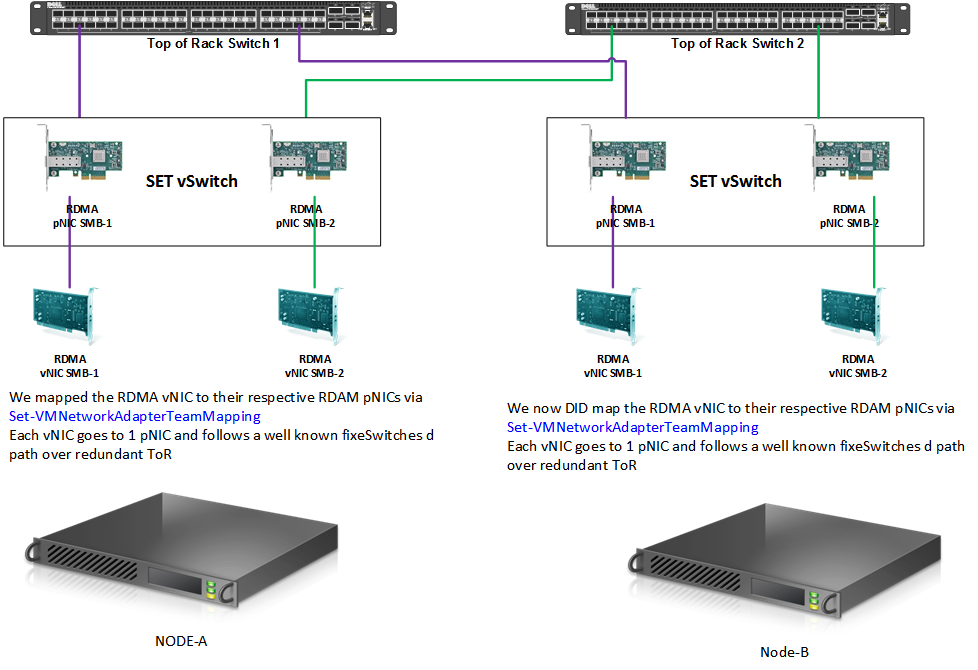

To show you the details of how the SET vSwitch, the vNICs and the pNICs behave, we use a 2-node cluster where each node has 2 RDMA NICs (Mellanox ConnectX-3) configured in a SET vSwitch converged network setup with 2 RDMA-capable vNICs. You can find an example of such a SET configuration, where I use Set-VMNetworkAdapterTeamMapping to achieve this, in my blog Windows Server 2016 RDMA and the Hyper-V vSwitch – Part II.

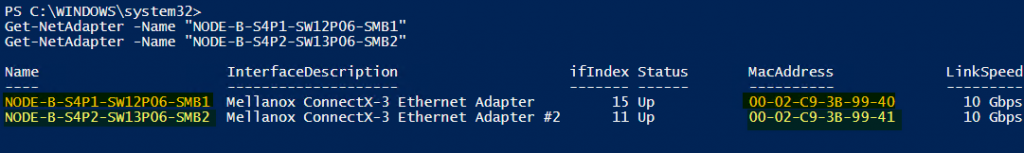

You can use that example of a complete SET configuration. The lab setup at the start of this article is shown in the picture below.

On Node-A we have configured the mapping of our RDMA vNICs to a particular RDMA pNIC. On Node-B we have not, and that is the node we’ll look at and test with. We’ll look at the difference in behavior with and without the mapping.

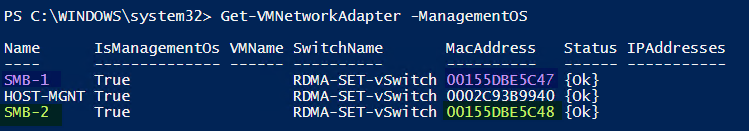

On our Node-B, we run Get-VMNetworkAdapter -ManagementOS. This gives us the MAC addresses of our RDMA vNICs.

We then check the binding of these vNICs to the pNICs with Get-NetAdapterVPort.

What you see above is that both RDMA vNICs, as identified via their MAC addresses, are tied to the same RDMA pNIC.
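Comparing MAC addresses by eye gets tedious on more than one node, so the check can be scripted. The following is a minimal sketch that only reads state. It assumes an elevated session on the Hyper-V host, and it assumes the two cmdlets may format MAC addresses differently (with or without separators), which is why both sides are normalized before comparing.

```powershell
# List each management OS vNIC next to the pNIC whose vPort carries its MAC address.
$vNics  = Get-VMNetworkAdapter -ManagementOS
$vPorts = Get-NetAdapterVPort

foreach ($vNic in $vNics) {
    # Strip separators so "00-15-5D-.." and "00155D.." compare as equal.
    $vNicMac = $vNic.MacAddress -replace '[-:]', ''
    $match   = $vPorts | Where-Object { ($_.MacAddress -replace '[-:]', '') -eq $vNicMac }

    [pscustomobject]@{
        vNIC = $vNic.Name
        MAC  = $vNicMac
        # The vPort's Name is the name of the pNIC that hosts it.
        pNIC = if ($match) { $match.Name -join ', ' } else { '(no vPort found)' }
    }
}
```

If two vNICs report the same pNIC in the last column, you are looking at the unfortunate binding described here.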

We can (ab)use the Get-NetAdapterVmqQueue command to see this as well.

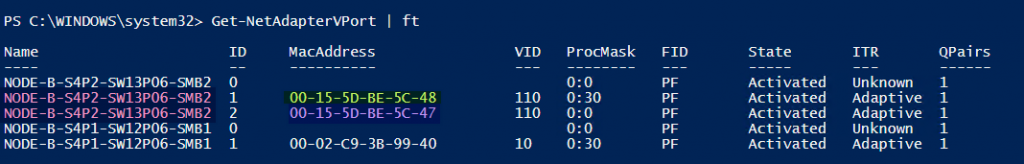

Please note that these commands show the mapping of the vNICs to the pNICs and not the MAC addresses of the actual pNICs, which can be shown via:

Get-NetAdapter -Name "NODE-B-S4P1-SW12P06-SMB1"

Get-NetAdapter -Name "NODE-B-S4P2-SW13P06-SMB2"

The above is an example of a node where we did not map the RDMA vNICs to a specific RDMA pNIC. What does this mean for the traffic flowing between those 2 nodes?

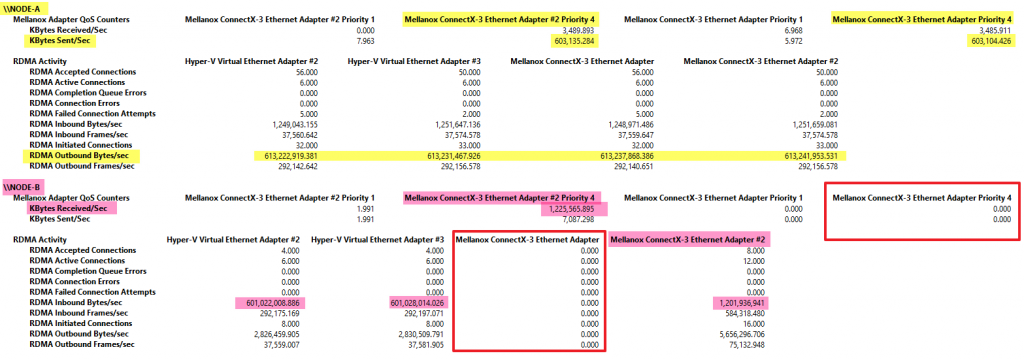

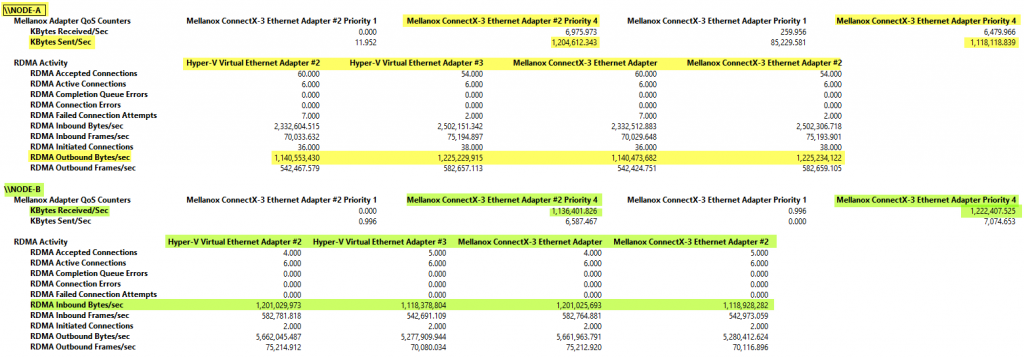

Well, let’s test this. We first send traffic from Node-A to Node-B.

In the above screenshot, you see that both RDMA vNICs are sending traffic over their respective RDMA pNICs. That happens when the random binding turned out well, when we did the mapping ourselves, or in a purely physical setup without a SET vSwitch. On the receiving end, however, without the mapping and with an unfortunate random binding of both RDMA vNICs to the same RDMA pNIC, we see a sub-optimal situation. Both vNICs are indeed receiving traffic, but they share the same pNIC, which limits the bandwidth. The Mellanox NIC in the red square is not used.

When we send traffic from Node-B to Node-A the reverse occurs.

Node-B sends traffic over both vNICs, which are using the same pNIC. The Mellanox NIC in the red square is not used. On Node-A, traffic is received on both RDMA vNICs and both pNICs.

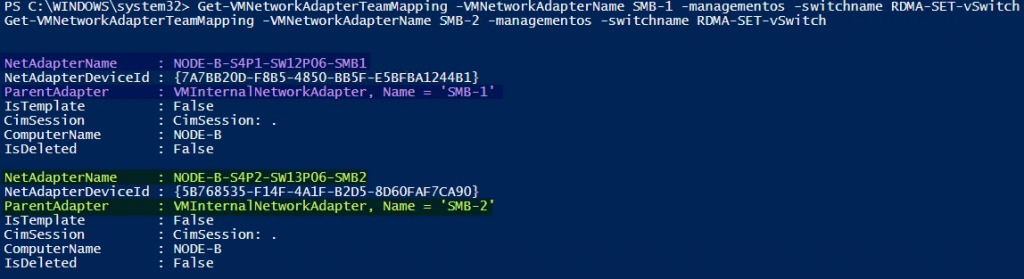

Let’s fix this! First, we verify that there is indeed no mapping and that the results we saw above do not have another cause.

Get-VMNetworkAdapterTeamMapping -VMNetworkAdapterName SMB-1 -managementos -switchname RDMA-SET-vSwitch

Get-VMNetworkAdapterTeamMapping -VMNetworkAdapterName SMB-2 -managementos -switchname RDMA-SET-vSwitch

This returns nothing, as the vNICs have not been mapped to specific team members. As you can learn from the tests above, this can lead to sub-optimal behavior. It might or might not happen, but we’d like to avoid it. It doesn’t break the functionality, but it does waste bandwidth and resources. It also doesn’t allow for a well-known, predictable path for the traffic flow.

The solution is to map each RDMA vNIC to a specific RDMA pNIC team member via PowerShell:
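Rather than querying each vNIC by name, the same check can be run across every management OS vNIC on the host. This is a hedged, read-only sketch. It assumes that the mapping object exposes the mapped pNIC in a `NetAdapterName` property, so verify that against the output on your own system.

```powershell
# Report which management OS vNICs have a team mapping and which do not.
foreach ($vNic in Get-VMNetworkAdapter -ManagementOS) {
    $mapping = Get-VMNetworkAdapterTeamMapping -ManagementOS -VMNetworkAdapterName $vNic.Name

    [pscustomobject]@{
        vNIC   = $vNic.Name
        Switch = $vNic.SwitchName
        # Assumption: NetAdapterName holds the mapped pNIC; no object means no affinity is set.
        pNIC   = if ($mapping) { $mapping.NetAdapterName } else { '(not mapped)' }
    }
}
```

On a node without any mapping, every row shows "(not mapped)" in the last column.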

Set-VMNetworkAdapterTeamMapping -VMNetworkAdapterName SMB-1 -PhysicalNetAdapterName "NODE-B-S4P1-SW12P06-SMB1" -ManagementOS

Set-VMNetworkAdapterTeamMapping -VMNetworkAdapterName SMB-2 -PhysicalNetAdapterName "NODE-B-S4P2-SW13P06-SMB2" -ManagementOS

When we check the mappings again we get a different result.

Get-VMNetworkAdapterTeamMapping -VMNetworkAdapterName SMB-1 -managementos -switchname RDMA-SET-vSwitch

Get-VMNetworkAdapterTeamMapping -VMNetworkAdapterName SMB-2 -managementos -switchname RDMA-SET-vSwitch

Our SMB-1 vNIC is mapped to NODE-B-S4P1-SW12P06-SMB1 and our SMB-2 vNIC is mapped to NODE-B-S4P2-SW13P06-SMB2.
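With more than a couple of vNICs, or when the same steps are repeated on every cluster node, it can help to drive the mapping and its verification from one table. The sketch below uses the Node-B adapter names from this article. The `$affinity` table and the up-front Get-NetAdapter check are illustrative additions, not part of the original procedure.

```powershell
# vNIC -> pNIC affinity table, using the adapter names from this article's Node-B.
$affinity = [ordered]@{
    'SMB-1' = 'NODE-B-S4P1-SW12P06-SMB1'
    'SMB-2' = 'NODE-B-S4P2-SW13P06-SMB2'
}

foreach ($entry in $affinity.GetEnumerator()) {
    # Stop early on a mistyped pNIC name before changing anything.
    $null = Get-NetAdapter -Name $entry.Value -ErrorAction Stop

    Set-VMNetworkAdapterTeamMapping -ManagementOS `
        -VMNetworkAdapterName $entry.Key `
        -PhysicalNetAdapterName $entry.Value

    # Read the mapping back so the result is visible per vNIC.
    Get-VMNetworkAdapterTeamMapping -ManagementOS -VMNetworkAdapterName $entry.Key
}
```

Keeping the table per node makes the intended path (vNIC, pNIC, Top of Rack switch) explicit and easy to review.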

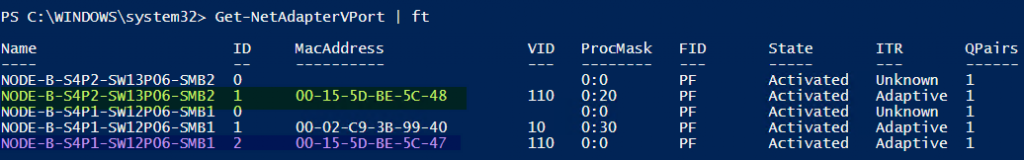

When we now look at Get-NetAdapterVPort and Get-NetAdapterVmqQueue, we see a different picture than before.

The RDMA pNICs are associated or mapped to their RDMA vNIC as we expect. This is persistent through reboots or NIC disabling/enabling until you remove that mapping with the below PowerShell commands.

Remove-VMNetworkAdapterTeamMapping -VMNetworkAdapterName SMB-1 -ManagementOS

Remove-VMNetworkAdapterTeamMapping -VMNetworkAdapterName SMB-2 -ManagementOS

In a single picture, we now have the below situation:

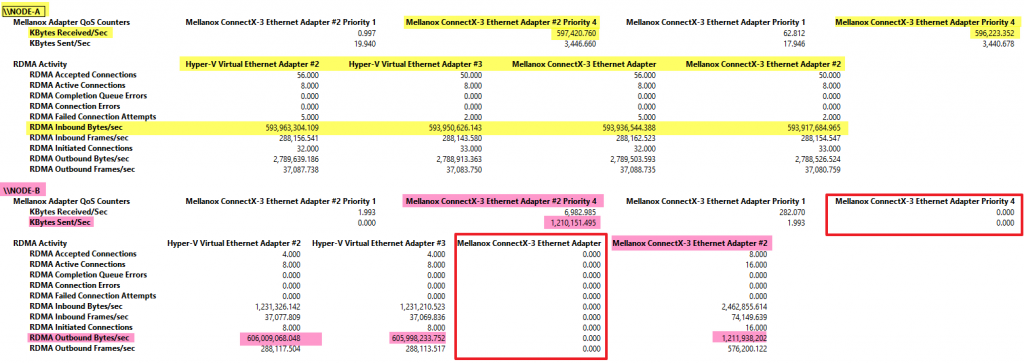

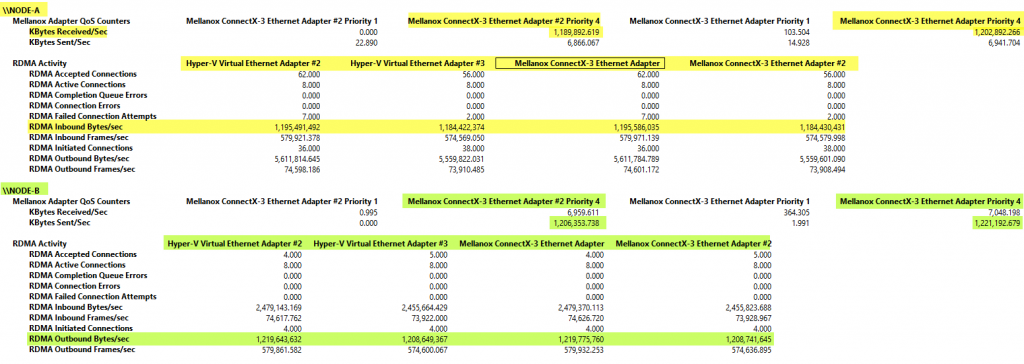

Now it is time to test the effect of these settings. In both directions, sending from Node-A to Node-B and vice versa, we see that both RDMA vNICs are being used as well as both RDMA pNICs.

Node-A sending to Node-B

Node-B sending to Node-A

It’s important to note that this is not just about making sure all available pNICs / bandwidth is used all of the time. It also makes sure your traffic flow paths are predictable. This is important with redundant Top of Rack switches with S2D for example. It makes sure you have redundancy at all times when possible and facilitates troubleshooting.

Also, note that this does not interfere with failover at all. When there is only one pNIC, both vNICs will use that one. Setting the affinity will not prevent failover to another physical NIC if the mapped pNIC fails. This means you are not at risk of losing redundancy. When the pNIC is back in service, the affinity kicks in again, along with the load balancing and aggregated bandwidth it gives you.

The above was a 2-member SET vSwitch example used for converged networking. What if you have a 4-member SET with 4 RDMA-capable pNICs and RDMA enabled on all 4 of them? You could create 4 RDMA vNICs and map those one-to-one. Alternatively, you could create 2 vNICs and map one of those to 2 pNICs going to ToR switch 1 and the other 2 vNICs to 2 pNICs connected to ToR switch 2.

What about a 4-member SET with 4 RDMA-capable pNICs where RDMA is enabled on only 2 of them, with two vNICs mapped to the RDMA-enabled pNICs? This is a situation that I would avoid if possible. In that case I would rather have a SET vSwitch with 2 pNICs and 2 non-teamed pNICs with RDMA enabled. Having mixed capabilities on the SET members and working around that by affinitizing vNICs to pNICs creates an imbalance in usage. Until someone convinces me otherwise I will not go there.

There are also very valid use cases for having a SET of 2 pNICs for the virtual machines and anything to do with SDNv2, while leaving two pNICs for RDMA without putting those into a SET vSwitch. This keeps any S2D, Storage Replica, LM traffic etc. separate from the VM traffic. That way you’re free to optimize for any scenario. Reasons for this could be that you don’t have the same NICs (2 ports are Mellanox, 2 are Intel) or you don’t have 2*25Gbps but 4*10Gbps. Up to 10Gbps, my favorite approach with Windows Server 2016 was to use a 2*10Gbps vSwitch with Switch Embedded Teaming for the virtual machines, offering all the current and future benefits SET has to offer, and another 2*10Gbps for SMB Direct RDMA traffic. That gave me the best of both worlds when I needed the SET capabilities.

With 25Gbps-capable NICs supported and even offered by default on the new server generations, it has become more attractive to go fully converged for the network stack. Where 40Gbps was less common on servers in the Windows Server 2012 R2 era (bar Storage Spaces deployments), it’s now common to have 25/50/100Gbps offerings in the network stack. Most scenarios will want to maximize the ROI/TCO by going converged. In price per Gbps the 25/50/100Gbps stack is a no-brainer, and modular switches give us a lot of freedom to design the best possible solutions for port count, bandwidth, and cabling. Now add to all that the network controller, software-defined networking, and storage to see the software-defined datacenter materialize where it makes sense.

Discussing all the options and decision points in favor of or against a certain design would lead us too far. But you are now armed with some extra knowledge about SET vNIC/pNIC behavior in order to make the best decisions for your needs, resources, and environments.