Hyper-converged infrastructure, when we started to hear about it last year, was simply an “appliantization” of the architecture and technology of software-defined storage (SDS) running in concert with server virtualization. Appliantization means that the gear peddler was doing the heavy lifting of pre-integrating server and storage hardware with hypervisor and SDS software so that the resulting kit would be pretty much plug-and-play.

If this sounds a bit like past iterations of specialty appliances, it is. Recall that network-attached storage (NAS) was little more than a “thin” general-purpose OS – slenderized to provide just those services required of a file server – bolted to the top of a direct-attached storage array. NAS got fancier when challenges to scaling and performance developed. Companies like NetApp added DRAM, and later flash memory, to create caches and buffers that would conceal the latencies introduced by their file layout technology and file system. Essentially, they added spoofing to make applications and end users think that their data had been received and committed to disk when, in fact, the data was sitting in a cache waiting for its turn at the read/write head. Even with these “enhancements,” NAS was still a pre-integrated cobble of hardware and software creating a file system appliance.

Hyper-converged infrastructure (HCI) appliances have proceeded in much the same way. They began simply enough as the implementation of an SDS stack on a generic server with some internal storage or externally attached drive bays. With many products, the resulting kit was a “storage node” that was designed for deployment (in pairs or triplets, depending on the clustering model) behind a server hosting virtual machines. Other products, however, took the path of combining the virtual machine server with the storage appliance to create a kind of “one-stop shop” appliance for virtual application hosting and data storage.

Actually, the original idea for hyper-convergence included software-defined network (SDN) technology with SDS and hypervisor stacks, enabling a true plug-and-play infrastructure component. From trade press accounts, Cisco Systems threw a wrench in the gears of SDN early on, preferring proprietary approaches to building networks rather than “open” models that would gut their margins on gear. So, SDN is rarely discussed these days in connection with hyper-convergence. But even without SDN, the current crop of HCI appliances seems to be gaining steam.

It helps that both IBM and Hitachi Data Systems have begun to make noise about their HCI offerings, which adds a bit of street cred to the architecture. EMC is also pursuing a hyper-converged product, VSPEX™ BLUE, but nobody seems to be listening to their marketing pitch against the din of loud discussions of what will become of the Evil Machine Corporation once it has been absorbed by the Dell “Borg Collective.”

From what I can tell, the big storage vendors have embraced the concept of hyper-converged for reasons that have little to do with any generally recognized value of the architecture and a lot to do with desperation over the flagging sales of their “legacy” SAN and NAS storage products. Despite tremendous data growth, companies are still keeping their new hardware acquisitions to a minimum – concerned that what VMware and Microsoft have been arguing about legacy gear (to avoid it going forward) may have some objective foundation beyond self-interest. Basically, companies are doing everything they can to defer new hardware acquisitions until the dust settles, both in terms of general economic reality and technology.

This hasn’t stopped the server makers, who fell prey to the “hardware is trash” mantra when hypervisor software vendors began making noise about a decade ago, from seizing on HCI as a way to breathe new life into their businesses. Today, all of the leading brands of server makers, from Huawei to Cisco UCS to Dell and HP, to Fujitsu and Lenovo, are partnering with one or more SDS vendors to build appliances. Even the grey market server dealers – vendors of refurbished, off-lease rigs – are allying with SDS vendors to grab some market share.

All of this industry activity, more than consumers clamoring for the new technology, accounts for the current volume of coverage of “emerging HCI solutions.” As a side effect, the big industry analysts, always willing to help the industry spend its cash, are generating reports and webinars and conferences that show HCI market trends high and to the right. Generally missing is a cogent analysis of what HCI will contribute in terms of business value to the consumer, measured in terms of cost-savings, risk reduction and improved productivity.

The value case that is currently being articulated around HCI is founded on “improved IT agility.” HCI appliances are supposed to make it easy (and fast) to roll out infrastructure in response to business needs – or client needs, in the case of cloud service providers. In this assertion, hyper-converged is showing a bit of the genetics it shares with hyper-clustering – a method for building supercomputers from x86 tinker toy servers. Everything in hyper-cluster computing is also built on server nodes, and the university and government research labs that tend to deploy cluster supercomputers generally aren’t all that concerned about node price or other business value components.

When you read the literature, it quickly becomes apparent that the key business-oriented benefits of HCI (the lower acquisition cost of the hardware compared to legacy kit, HCI’s more comprehensive management story compared to legacy vendor gear, and the increased utility of associating the right storage and data management services with the right data, independent of array controllers) are rarely touted as reasons for purchasing gear.

They should be. Today, storage accounts for between 33 and 75 percent of the cost of hardware in data centers, depending on the analyst you read, and the cost of managing storage infrastructure is about six times the cost of acquiring the storage hardware and software itself. The improved management paradigm of good SDS software can contribute significantly to OPEX cost reduction if only because it undoes some of the inefficiencies of legacy gear.
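To see what those figures imply, here is a back-of-the-envelope sketch. The function name, the budget figure, and the choice to apply the six-to-one ratio directly to the storage share of a hardware budget are illustrative assumptions on my part, not analyst data.

```python
def storage_cost_estimate(hardware_budget, storage_share, mgmt_multiplier=6.0):
    """Rough storage cost split for a given hardware budget.

    storage_share:   fraction of hardware spend going to storage
                     (0.33 to 0.75 per the range cited above).
    mgmt_multiplier: management cost as a multiple of acquisition cost
                     (about 6 per the figure cited above; treated here
                     as a simple constant, which is an assumption).
    """
    if not 0.0 <= storage_share <= 1.0:
        raise ValueError("storage_share must be between 0 and 1")
    acquisition = hardware_budget * storage_share
    management = acquisition * mgmt_multiplier
    return {
        "acquisition": acquisition,
        "management": management,
        "total": acquisition + management,
    }
```

On a hypothetical $1,000,000 hardware budget, this puts storage acquisition between $330,000 and $750,000 and management between roughly $1.98 million and $4.5 million. Under these assumptions, a 10 percent cut in management overhead would be worth more than half of the acquisition cost.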

Most legacy storage infrastructure is heterogeneous, which means that it has been purchased from several vendors over the years for different reasons. Despite lip service to the contrary, vendors don’t want to make it easy to manage their gear and their competitors’ gear in a common way. This makes for isolated islands of storage: SANs based on switches that will not interoperate with competitor switches, and NAS platforms that make it nearly impossible to migrate data to a competitor’s NAS. Even with SNMP and the SNIA’s SMI-S protocols, management of heterogeneous infrastructure beyond the most rudimentary form of hardware status monitoring is still a pain in the ear.

With effective HCI, the SDS software layer should be centrally manageable – meaning that all appliances using an SDS vendor’s technology should be manageable from a central console. The more that management is based on REST, the more open this paradigm should become – holding out the possibility that many SDS products from many vendors would expose a common RESTful API for management. That would most certainly be a boon.
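As a rough illustration of what a common RESTful management API would buy you, the sketch below polls several appliances through one code path. The `/api/v1/status` path, the function names, and the shape of the response are all hypothetical; no vendor's actual API is being described here.

```python
import json
from urllib.request import urlopen


def fetch_json(url, timeout=5.0):
    """GET a URL and decode the JSON body (standard library only)."""
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def collect_status(base_urls, fetch=fetch_json, path="/api/v1/status"):
    """Query every appliance through the same (hypothetical) endpoint.

    Returns a dict keyed by the appliance base URL. An appliance that
    cannot be reached, or that returns invalid JSON, is recorded with
    an "error" entry instead of aborting the whole sweep.
    """
    report = {}
    for base in base_urls:
        url = base.rstrip("/") + path
        try:
            report[base] = fetch(url)
        except (OSError, ValueError) as exc:
            # URLError and timeouts are OSError; bad JSON is ValueError.
            report[base] = {"error": str(exc)}
    return report
```

The point of the sketch is the single loop: if every vendor exposed the same endpoint, a central console would not need a separate adapter per product. Passing `fetch` as a parameter is only there so the logic can be exercised without a live appliance.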

Unfortunately, some HCI appliances are going the way of the legacy vendor rigs that they are replacing. VMware supports REST, but it is behind a couple of layers of proprietary APIs, limiting its utility as part of a heterogeneous management solution. Other vendors have created HCI models that can scale only if you deploy their branded nodes, leading smart consumers to wonder how this is any different from the legacy vendor gear that they are seeking to replace.

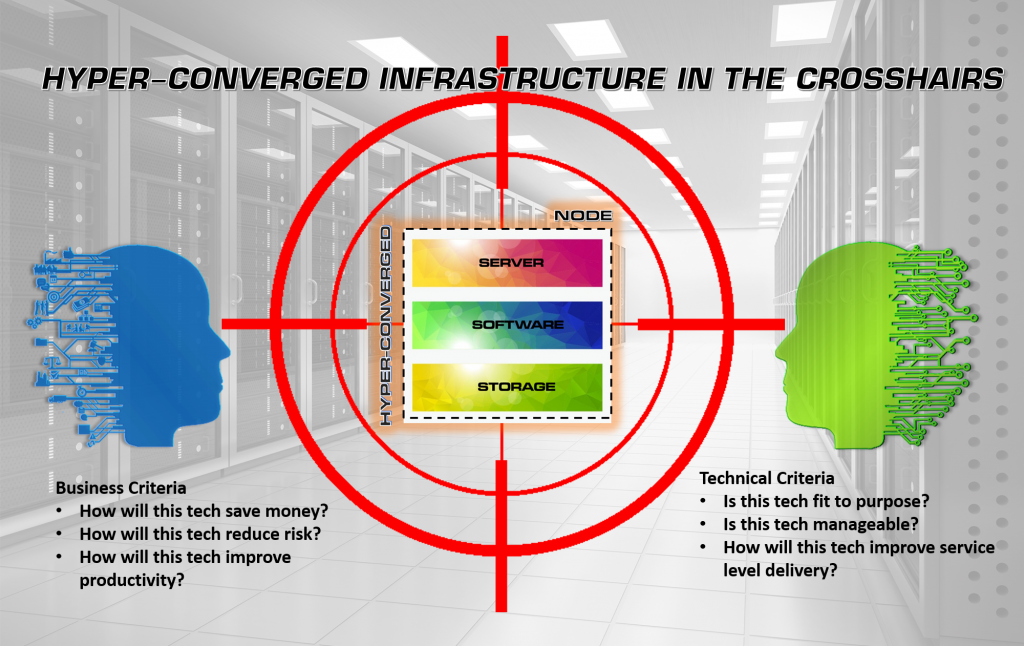

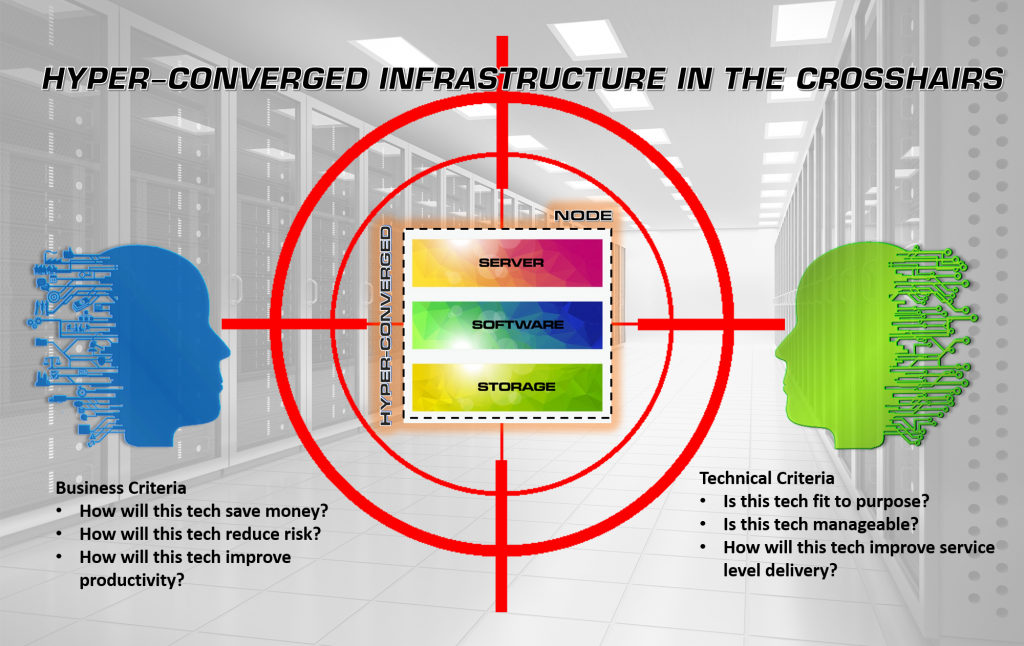

My two recommendations are these: first, before you embrace HCI, ask the all-important business value questions. How will this reduce my costs (CAPEX and OPEX)? How will this technology reduce risk (risk of data loss, risk of investment obsolescence, risk of data management failure and regulatory or legal non-compliance, etc.)? And how will this improve the productivity of my business and/or my IT operations? No technology initiative or acquisition should get a free pass; all initiatives need to be evaluated for their business value contributions.

My second recommendation is to look at management before you buy. How do you manage these appliances, which are likely to proliferate over time much more quickly than your legacy infrastructure did? What workloads can the appliances support? Are they tied to a single hypervisor? If they are hypervisor agnostic, do implementations in support of different hypervisor workloads still avail themselves of common management? And finally, in addition to hardware status monitoring and provisioning tools, do the appliances provide good tools for selectively applying “value-add” services like continuous data protection, thin provisioning, replication and mirroring, deduplication, etc. to the data that they host?
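One way to make that last question concrete during an evaluation is to write down which services each class of data needs and check a candidate appliance against the list. The data classes, service names, and function below are hypothetical placeholders for illustration, not any product's feature catalog.

```python
# Hypothetical data classes mapped to the services each one requires.
POLICY = {
    "financial_records": {"cdp", "replication", "mirroring"},
    "vm_images": {"thin_provisioning", "deduplication"},
    "scratch": set(),  # no value-add services needed
}


def missing_services(policy, appliance_services):
    """Return, per data class, the required services the appliance lacks.

    Data classes whose requirements are fully met are omitted, so an
    empty result means the appliance covers the whole policy.
    """
    gaps = {}
    for data_class, required in policy.items():
        lacking = required - appliance_services
        if lacking:
            gaps[data_class] = sorted(lacking)
    return gaps
```

For example, an appliance offering only thin provisioning, deduplication and replication would come back with a gap for `financial_records` (no continuous data protection or mirroring) and nothing for the other two classes.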

Not all data is the same and not all data requires the same services. It is desirable, if you want to improve on legacy infrastructure, for the new hyper-converged infrastructure to have a better story to tell with respect to service provisioning. My two centavos.