Before we start our journey through the storage world, I would like to begin with a side note on what hyperconverged infrastructure is and which problems this cool word combination really solves.

Folks who already have a grip on hyperconvergence can skip the first part, where I describe the HCI components and give some backstory on this tech.

Hyperconverged infrastructure (HCI) is a term coined by Steve Chambers and Forrester Research (at least Wiki said so). They came up with this word combination to describe a fully software-defined IT infrastructure that is capable of virtualizing all the components of conventional ‘hardware-defined’ systems.

At a minimum, HCI includes virtualized computing, which we know as a hypervisor (Hyper-V, ESXi, KVM, XenServer, etc.), a virtualized SAN (Software-Defined Storage), and virtualized networking (Software-Defined Networking).

HCI aims to eliminate a wide range of drawbacks that are common to legacy storage. Most legacy storage infrastructures are heterogeneous, which means that the hardware has been purchased from several vendors over the years and for different reasons. It’s really hard to manage each part separately, because whenever trouble comes, you end up calling ten vendors to resolve the issue. The network guys forward you to the storage provider, who, in turn, sends you to hypervisor support, and so on. The idea behind hyperconverged solutions is simplification. Therefore, for the most part, HCI comes as a pre-configured, turnkey solution; I like to call it “plug and play”. All the components are commonly purchased from one vendor, so the rule of “one point of contact” comes into play. This rule eliminates finger-pointing when the datacenter requires assistance.

Well, why has hyperconvergence gotten so popular so quickly? First of all, it’s necessary to keep in mind the other trends that are taking place. One reason is that IT departments are expected to provision resources instantly; another is that, from the value standpoint, Software-Defined Storage promises great efficiency gains, and so on.

It must be noted that the IT world requires agile infrastructures that can react to business needs immediately (legacy servers lacked this). HCI appliances make it easy to roll out infrastructure in response to business needs, or client needs in the case of cloud service providers. When it comes to management efficiency, HCI should be able to provide a “single pane of glass”. By this I mean that the SDS software layer should be managed centrally: all appliances using the SDS vendor’s technology should be manageable from one central console.

Unfortunately, some HCI appliances are following the path of the legacy vendor rigs. For example, VMware supports REST, but it is hidden behind a couple of proprietary API layers, which reduces efficiency and makes the technology look like part of a heterogeneous management solution. Other vendors have created HCI models that can scale only if you deploy their branded nodes, so some smart consumers are wondering how that is any different from the legacy vendors’ gear they are seeking to replace.

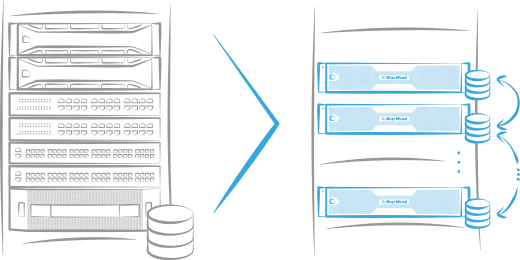

I would like to draw a line here and elaborate a bit on how hyperconvergence has affected market standards. First of all, it should be clear that HCI brought the biggest benefit to SMBs, the businesses that were willing to replace their legacy infrastructure and whose workloads could fit on a few servers. The math isn’t complicated: trading a NAS plus two switches and two compute hosts for two brand new servers looks like a good deal, considering the bills for supporting the old infrastructure. HCI vendors commonly add features like a “single support umbrella”, a.k.a. “one point of contact”, so that an SMB doesn’t have to hire a separate support team. Also, every company cares about its mission-critical data and the VMs that run production. HCI eliminates the single point of failure (a NAS or a single storage server) and adds redundancy by mirroring data between servers. So the cost savings don’t have to come at the expense of performance and reliability.
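
To make the mirroring idea a bit more concrete, here is a tiny Python sketch of my own. It is a toy model and not how any particular HCI product works: the `Node` and `MirroredVolume` names are made up for illustration, the “storage” is just a dictionary in memory, and I assume a simple synchronous mirror where every write lands on all online servers. It only shows why losing one of two servers does not lose the data.

```python
class Node:
    """A hypothetical HCI server holding its own copy of each block."""

    def __init__(self, name):
        self.name = name
        self.online = True
        self.blocks = {}  # block_id -> data, stands in for local disks


class MirroredVolume:
    """Toy synchronous mirror: every write goes to all online nodes.

    Assumption: no resync logic. A node that was offline during a write
    would come back with stale data; real products handle that, this
    sketch does not.
    """

    def __init__(self, nodes):
        self.nodes = list(nodes)

    def write(self, block_id, data):
        targets = [n for n in self.nodes if n.online]
        if not targets:
            raise RuntimeError("no online node to accept the write")
        for node in targets:
            node.blocks[block_id] = data

    def read(self, block_id):
        # Serve the read from the first online node that has the block.
        for node in self.nodes:
            if node.online and block_id in node.blocks:
                return node.blocks[block_id]
        raise RuntimeError("block unavailable on any online node")


if __name__ == "__main__":
    volume = MirroredVolume([Node("node-a"), Node("node-b")])
    volume.write(0, b"vm-disk-data")

    # Simulate losing one server: the data is still readable from its partner.
    volume.nodes[0].online = False
    print(volume.read(0))
```

With a single NAS, the equivalent of taking `node-a` offline would make the data unreachable; with the mirror, the read is simply served by the surviving server.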