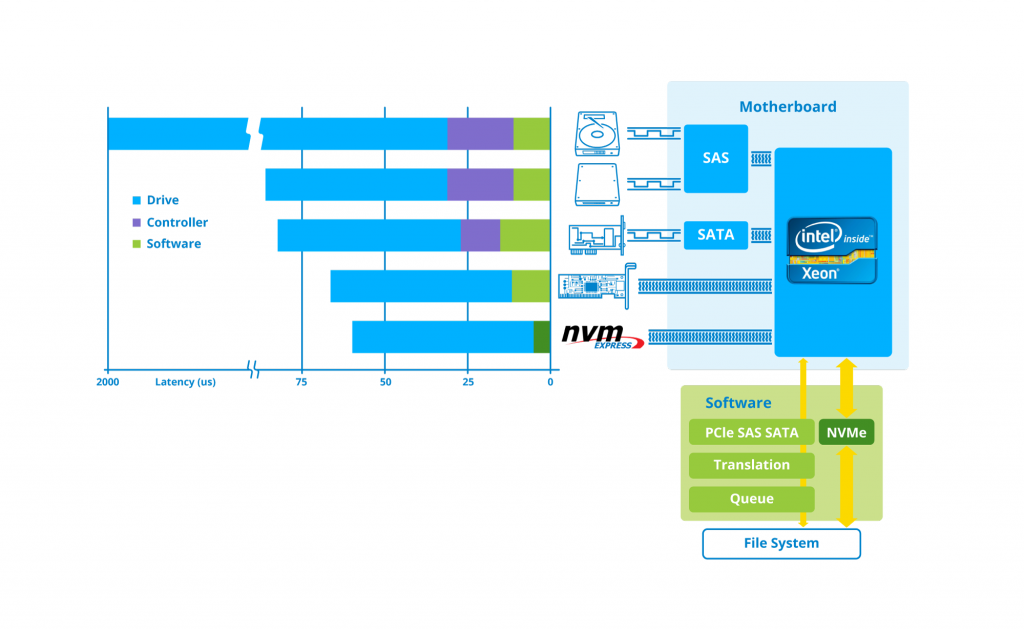

To hear advocates talk about NVMe – a de facto standard created by a group of vendors led by Intel to connect flash memory storage directly to a PCIe bus (that is, without using a SAS/SATA disk controller) – it is the most revolutionary thing that has ever happened in business computing. While the technology provides a more efficient means to access flash memory, without passing I/O through the buffers, queues and locks associated with a SAS/SATA controller, it can be seen as the latest of a long line of bus extension technologies – and perhaps one that is currently in search of a problem to solve.

I am not against faster I/O processing, of course. It would be great if the world finally acknowledged that storage has always been the red-headed stepchild of the Von Neumann machine. Bus speeds and CPU processing speeds have always been capable of driving I/O faster than mechanical storage devices could handle. That is why engineers used lots of memory – as caches ahead of disk storage or as buffers on disk electronics directly – to help mask or spoof the mismatch of speed.

In the case of flash memory, however, the speed of flash – on writes, at least – has never been all that earth-shaking. Write speeds were not that much faster than tape, after all, and tape outperformed flash in the predictability and consistency of its data transfer rates (flash loses half its write speed once data must be written to cells that have already been written, because NAND cells must be erased before they can be rewritten). Flash has always performed much better on reads than on writes, and despite all of the engineering the industry has dedicated to masking poor write performance while making flash cheaper and more capacious, NAND flash may in fact have gotten slower.

The relative write speed of flash and disk never really intrigued me. Truth be told, very few casual users – meaning those running application workloads less demanding than, say, high-performance transaction processing systems – were experiencing much application latency from their SATA-connected flash SSDs in any case. This is especially true in small-to-medium-sized companies with virtual server environments. There, the supposed connection between poor application or virtual machine performance and slow storage has generally been debunked, if only by demonstrating that there is effectively no queue depth on the storage path between the VM and its storage volume. If the queue depth is zero, no I/O commands are waiting in line to be executed, so storage cannot be the bottleneck that starves applications of performance.
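Queue depth can be estimated from figures most monitoring tools already report. The sketch below is a minimal illustration, assuming Little's law (average items in a system = arrival rate × average time in the system) applied to a storage path. The function names, the threshold of one outstanding I/O, and the sample workload are hypothetical, not measurements from any real environment.

```python
# Minimal sketch: estimate average outstanding I/Os on a storage path with
# Little's law (L = lambda * W). Names and sample numbers are hypothetical.

def outstanding_ios(iops: float, avg_latency_ms: float) -> float:
    """Average number of I/Os in flight (queued or being serviced)."""
    return iops * avg_latency_ms / 1000.0

def storage_is_likely_bottleneck(iops: float, avg_latency_ms: float,
                                 threshold: float = 1.0) -> bool:
    """Heuristic (assumed): below ~1 outstanding I/O, commands rarely wait in line."""
    return outstanding_ios(iops, avg_latency_ms) > threshold

if __name__ == "__main__":
    # Hypothetical VM: 500 IOPS at 0.2 ms average latency on a SATA SSD.
    depth = outstanding_ios(500, 0.2)
    print(f"avg outstanding I/Os: {depth:.2f}")
    print("storage bottleneck?", storage_is_likely_bottleneck(500, 0.2))
```

A result well below one means the path sits idle most of the time, which is the situation the paragraph above describes. A sustained value well above one is where faster storage, or a faster interface, would start to pay off.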

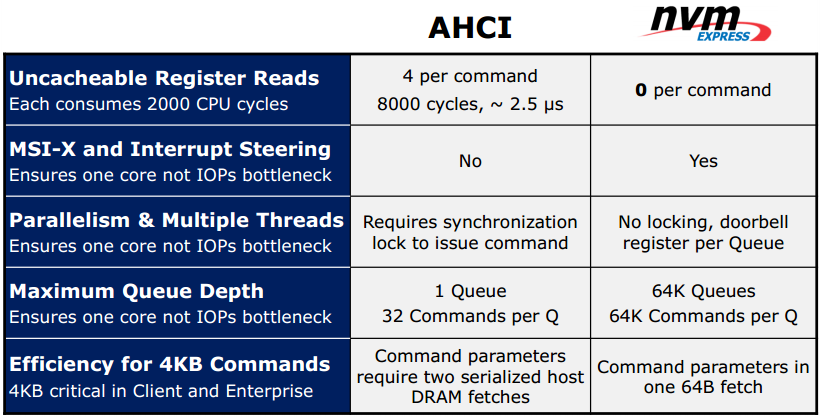

Is the NVMe specification an improvement over SATA controller connections for flash memory? Yes. There are a number of benefits accrued to leveraging native bus speeds and other benefits of NVMe as summarized by NVMexpress.org (see their chart below).

Source: Moving to PCI Express Based Solid State Drive with NVM Express

The question is whether this extra bandwidth, which some estimate at five times the theoretical I/O-handling capability of AHCI (the host interface used with SATA disk controllers), is actually necessary. When asked this question, one industry insider stated that the million-IOPS potential of NVMe flash storage (compared with the 200,000-IOPS potential of flash connected through AHCI and SATA) is really only a “must-have” for 4K video editing at present.
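To put the quoted IOPS figures in perspective, the sketch below converts them to throughput, assuming 4 KiB random I/O (a common benchmark size, chosen here as an assumption). It also lists the nominal queue limits usually cited for each interface: a single queue of 32 command slots for AHCI, and up to 65,535 I/O queues of up to 65,536 entries each for NVMe. The helper names are illustrative.

```python
# Minimal sketch: translate the quoted IOPS figures into throughput,
# assuming 4 KiB I/O (an assumption, not stated in the text).

BLOCK_BYTES = 4 * 1024  # assumed I/O size in bytes

def throughput_gb_per_s(iops: int, block_bytes: int = BLOCK_BYTES) -> float:
    """Throughput in decimal GB/s for a given IOPS rate and I/O size."""
    return iops * block_bytes / 1e9

# Nominal queue limits as commonly cited for each interface.
AHCI_QUEUES, AHCI_DEPTH = 1, 32
NVME_QUEUES, NVME_DEPTH = 65_535, 65_536

if __name__ == "__main__":
    ahci_iops, nvme_iops = 200_000, 1_000_000  # figures quoted in the text
    print(f"AHCI/SATA flash: {throughput_gb_per_s(ahci_iops):.2f} GB/s")
    print(f"NVMe flash:      {throughput_gb_per_s(nvme_iops):.2f} GB/s")
    print(f"ratio: {nvme_iops / ahci_iops:.1f}x")
    print("nominal queue entries, AHCI:", AHCI_QUEUES * AHCI_DEPTH)
    print("nominal queue entries, NVMe:", NVME_QUEUES * NVME_DEPTH)
```

At 4 KiB per I/O the two figures come to roughly 0.82 GB/s and 4.1 GB/s, the same fivefold gap. By Little's law, sustaining a million IOPS with only 32 commands outstanding would require each command to finish in about 32 µs, which is why NVMe's much deeper queueing matters at the high end.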

There are many potential future applications for the improvements NVMe brings to flash storage. One could envision a future hyper-converged infrastructure appliance built entirely on flash and DRAM and specialized to host in-memory databases (IMDBs). IMDBs are on the roadmaps of virtually every database vendor today – not just the analytics database vendors, but also the OLTP old guard. The concept is to move the entire DB, with all of its extents, into memory (flash works well for analytics, which tend to be read-intensive), so that no latency is created by requests to and responses from disk drives. For OLTP, which tends to be chattier, flash will likely need to be augmented with DRAM to support writes.
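The DRAM-plus-flash split described above can be pictured as a write buffer sitting in front of a read-optimized tier. This is a hypothetical illustration: the `TieredStore` class and its flush policy are invented for this example, and Python dictionaries stand in for DRAM and flash. A real design would also have to protect buffered writes against power loss, for example with a log or battery-backed memory.

```python
from typing import Dict, Optional

class TieredStore:
    """Hypothetical sketch: reads come from a flash tier, while writes are
    absorbed by a DRAM buffer and flushed to flash in batches."""

    def __init__(self, flush_threshold: int = 4) -> None:
        self.flash: Dict[str, bytes] = {}        # stands in for the flash tier
        self.dram_buffer: Dict[str, bytes] = {}  # stands in for DRAM
        self.flush_threshold = flush_threshold   # distinct dirty keys before flush
        self.flash_write_batches = 0             # how many batch writes hit flash

    def get(self, key: str) -> Optional[bytes]:
        # The newest version may still be in DRAM, so check it first.
        if key in self.dram_buffer:
            return self.dram_buffer[key]
        return self.flash.get(key)

    def put(self, key: str, value: bytes) -> None:
        # Repeated updates to a hot key overwrite in DRAM instead of hitting flash.
        self.dram_buffer[key] = value
        if len(self.dram_buffer) >= self.flush_threshold:
            self.flush()

    def flush(self) -> None:
        # Write all buffered entries to flash as one batch, then empty DRAM.
        if not self.dram_buffer:
            return
        self.flash.update(self.dram_buffer)
        self.dram_buffer.clear()
        self.flash_write_batches += 1
```

In a chatty OLTP pattern, a hundred updates to the same account record stay in DRAM and reach flash as a single write when the batch is eventually flushed, while read-mostly data is served straight from flash.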

Of course, fitting mammoth legacy DBs into memory will probably exceed the amount of memory a conventional server can attach. So one can well expect that super-capacious interconnects (think 100 GbE or InfiniBand), complemented by PCIe-friendly bus extension protocols (RDMA via iWARP, RoCE, etc.), will provide a means to pool the memory of multiple DRAM/flash appliances into a scalable infrastructure for massive DB hosting.
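Pooling memory across appliances raises two basic questions: how many appliances a database needs, and which appliance holds a given record. The following is a minimal sketch, assuming simple hash partitioning and hypothetical capacity figures. `appliances_needed` and `owner` are illustrative names, not part of any RDMA or fabric API.

```python
import hashlib
import math

def appliances_needed(db_tb: float, usable_tb_per_appliance: float,
                      replicas: int = 1) -> int:
    """Appliances required to hold every copy of the database in memory."""
    return math.ceil(db_tb * replicas / usable_tb_per_appliance)

def owner(key: str, n_appliances: int) -> int:
    """Map a record key to an appliance index using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % n_appliances

if __name__ == "__main__":
    # Hypothetical sizing: 40 TB database, 6 TB usable memory per appliance,
    # and two copies of every record for availability.
    n = appliances_needed(40, 6, replicas=2)
    print("appliances needed:", n)
    for key in ("order:1001", "order:1002", "customer:42"):
        print(key, "->", owner(key, n))
```

Plain modulo placement moves most keys whenever the appliance count changes, so a production system would more likely use consistent hashing or a directory service. The capacity arithmetic stays the same either way.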

But that’s all future-oriented thinking. It is nice to know that NVMe and its latest adjunct specifications, such as NVMe over Fabrics, will be there when we get around to needing them. For now, NVMe does improve one dimension of the flash world: it removes some of the nonsensical volatility created by multiple vendors shipping their own software stacks and device drivers in the hope of differentiating their flash products from their competitors’.
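One practical effect of a standard stack is that on Linux, standards-compliant NVMe drives are normally handled by the same in-kernel `nvme` driver and appear under the same naming scheme (`nvme<controller>n<namespace>`, e.g. `/dev/nvme0n1`), whatever their vendor. The sketch below assumes a Linux host with sysfs mounted at `/sys` and lists NVMe namespaces by name. The helper names are hypothetical.

```python
import os
import re
from typing import List

# Linux names NVMe namespaces nvme<controller>n<namespace>; partitions add p<N>.
_NVME_NAMESPACE = re.compile(r"nvme\d+n\d+")

def is_nvme_namespace(name: str) -> bool:
    """True for whole-namespace block devices such as nvme0n1 (not partitions)."""
    return _NVME_NAMESPACE.fullmatch(name) is not None

def list_nvme_namespaces(sysfs_block: str = "/sys/block") -> List[str]:
    """Return NVMe namespace names found under sysfs, or [] if unavailable."""
    try:
        names = os.listdir(sysfs_block)
    except OSError:
        return []  # not Linux, sysfs not mounted, or no permission
    return sorted(n for n in names if is_nvme_namespace(n))

if __name__ == "__main__":
    found = list_nvme_namespaces()
    if found:
        for name in found:
            print("/dev/" + name)
    else:
        print("no NVMe namespaces found (or not a Linux host)")
```

The same few lines work regardless of which vendor built the drive. That uniformity is the point the paragraph above makes about replacing per-vendor drivers and software stacks.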

Proprietary technology has always been the bane of storage. For decades we have lived with a Wild West of proprietary wares, with vendors using value-add software to jack up the prices of commodity kit and to lock out competitors while locking in consumers. Software-defined storage was supposed to correct for that, though the hypervisor vendors seemed as bent on creating silos of technology as any monolithic array company.

In the case of NVMe, Intel simply took over the game from Fusion-io and other flash start-ups and created a standardized stack that supports its own ideas about server and storage architecture. Intel was able to drive a common approach like NVMe because it remains one of the few companies the industry is willing to trust to create de facto standards. In a way, that makes NVMe a sensible replacement for 20,000 approaches from hair-on-fire start-ups.