Introduction

As you probably already know, NVMe-oF (Non-Volatile Memory Express over Fabrics) is a groundbreaking technology that extends the advantages of NVMe across a network. In short, NVMe-oF provides direct access to remote storage media over high-speed networks while preserving the low-latency, highly parallel architecture of NVMe.

What does that mean in practice? NVMe-oF significantly increases data transfer speeds, reduces latency, and improves overall storage efficiency. Essentially, this technology takes storage performance to a new level and could change the way storage solutions are built altogether.

Purpose

In our previous article, we compared the storage performance of the Linux NVMe-oF Initiator and the StarWind NVMe-oF Initiator for Windows over RDMA and TCP.

That setup is as close to a real business environment as it gets, and the results showed that the two initiators performed on par over both RDMA and TCP.

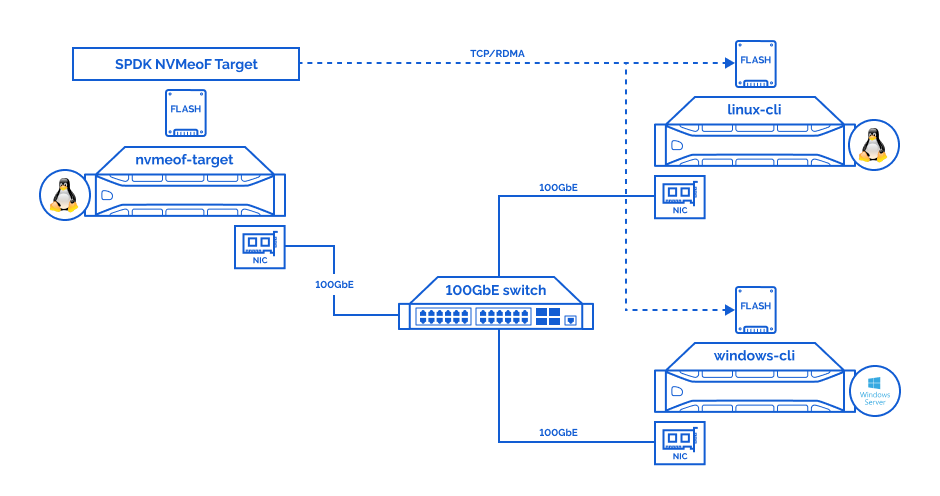

Now, we’re going to try it again with the same setup but different NVMe-oF implementations. Our purpose here is to compare the performance of the Linux NVMe-oF Initiator with the StarWind NVMe-oF Initiator for Windows (versions 1.9.0.567 and 1.9.0.578) over RDMA and TCP. Let’s see how they fare this time!

Benchmarking Methodology, Details & Results

The benchmark was conducted with the help of the fio utility in Linux NVMe-oF Initiator scenarios and diskspd in StarWind NVMe-oF Initiator for Windows scenarios.

Testbed:

Hardware:

| nvmeof-target | Supermicro (SYS-220U-TNR) |
|---|---|
| CPU | Intel® Xeon® Platinum 8352Y @ 2.2GHz |
| Sockets | 2 |
| Cores/Threads | 64/128 |
| RAM | 256 GB |
| Storage | 1x Intel® Optane™ SSD DC P5800X Series (400GB) |
| NICs | 1x ConnectX®-5 EN 100GbE (MCX516A-CDAT) |

Software:

| OS | Ubuntu 20.04.5 (5.15.0-67-generic) |
|---|---|
| SPDK | v22.09 |
| fio | 3.16 |

Hardware:

| linux-cli / windows-cli | Supermicro (2029UZ-TR4+) |
|---|---|
| CPU | 2x Intel® Xeon® Platinum 8268 Processor @ 2.90GHz |
| Sockets | 2 |
| Cores/Threads | 48/96 |
| RAM | 96 GB |
| NIC | 1x ConnectX®-5 EN 100GbE (MCX516A-CDAT) |
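Both the target and the clients use a single 100GbE port. As a rough sanity check, the sketch below converts the nominal line rate to MiB/s and compares it with the highest throughput reported later in this article (7,034 MiB/s, local sequential 1M read on Linux). It deliberately ignores Ethernet, RDMA, and TCP protocol overhead, so a real link carries somewhat less than the computed figure; the constant and variable names are illustrative only.

```python
LINK_GBPS = 100  # nominal line rate of one ConnectX-5 100GbE port
MIB = 1024 * 1024  # bytes per MiB


def line_rate_mib_s(gbps: float) -> float:
    """Convert a nominal line rate in Gbit/s to MiB/s (no protocol overhead)."""
    return gbps * 1e9 / 8 / MIB


link = line_rate_mib_s(LINK_GBPS)
peak_observed = 7_034  # MiB/s, local sequential read 1M (Linux) from the results tables

print(f"100GbE line rate: {link:,.0f} MiB/s")
print(f"Peak observed:    {peak_observed:,} MiB/s ({peak_observed / link:.0%} of line rate)")
```

Even with some overhead, the link leaves headroom above the drive's large-block throughput, which is consistent with the remote 1M results matching the local ones.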

Software linux-cli:

| OS | Ubuntu 20.04.5 (5.4.0-139-generic) |
|---|---|
| fio | 3.16 |
| nvme-cli | 1.9 |

Software windows-cli:

| OS | Windows Server 2019 Standard Edition (Version 1809) |
|---|---|
| diskspd | 2.1.0-dev |
| StarWind NVMe-oF Initiator | 1.9.0.567, 1.9.0.578 |

Test Patterns:

- random read 4k;

- random write 4k;

- random read 64K;

- random write 64K;

- sequential read 1M;

- sequential write 1M.
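The six patterns map onto a small set of fio and diskspd options. The sketch below is a hypothetical helper (not part of the original test harness) that rebuilds command lines in the same shape as the examples that follow; the job/thread count and queue depth stay as parameters because they were varied per test, while the device paths (`/dev/nvme0n1`, `'#2'`) and durations are copied from those examples.

```python
# Hypothetical helper: build fio/diskspd command lines for the six test patterns.

# pattern name -> (fio --rw value, block size, diskspd access flag, diskspd write %)
PATTERNS = {
    "random read 4k": ("randread", "4K", "-r", 0),
    "random write 4k": ("randwrite", "4K", "-r", 100),
    "random read 64K": ("randread", "64K", "-r", 0),
    "random write 64K": ("randwrite", "64K", "-r", 100),
    "sequential read 1M": ("read", "1024K", "-si", 0),
    "sequential write 1M": ("write", "1024K", "-si", 100),
}


def fio_cmd(pattern: str, numjobs: int, iodepth: int) -> str:
    """Return a fio command line in the style of the Linux examples."""
    rw, bs, _, _ = PATTERNS[pattern]
    return (
        f"fio --name=nvme --rw={rw} --numjobs={numjobs} --iodepth={iodepth} "
        f"--blocksize={bs} --direct=1 --ioengine=libaio --time_based=1 "
        f"--group_reporting=1 --filename=/dev/nvme0n1 --runtime=600"
    )


def diskspd_cmd(pattern: str, threads: int, outstanding: int) -> str:
    """Return a diskspd command line in the style of the Windows examples."""
    _, bs, access, write_pct = PATTERNS[pattern]
    return (
        f"diskspd.exe {access} -t{threads} -o{outstanding} -b{bs} "
        f"-d300 -w{write_pct} -Su -L '#2'"
    )


if __name__ == "__main__":
    # Random read 4k with the job/thread counts used in the RDMA comparison.
    print(fio_cmd("random read 4k", numjobs=10, iodepth=4))
    print(diskspd_cmd("random read 4k", threads=24, outstanding=4))
```

Keeping the pattern table in one place makes it harder for the Linux and Windows runs to drift apart in block size or read/write mix.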

Linux – fio parameter examples

#Parameters “numjobs” and “iodepth” can change.

#Random Read 4k:

fio --name=nvme --rw=randread --numjobs=10 --iodepth=4 --blocksize=4K --direct=1 --ioengine=libaio --time_based=1 --group_reporting=1 --filename=/dev/nvme0n1 --runtime=600

#Random Write 4k:

fio --name=nvme --rw=randwrite --numjobs=8 --iodepth=16 --blocksize=4K --direct=1 --ioengine=libaio --time_based=1 --group_reporting=1 --filename=/dev/nvme0n1 --runtime=600

#Random Read 64k:

fio --name=nvme --rw=randread --numjobs=4 --iodepth=2 --blocksize=64K --direct=1 --ioengine=libaio --time_based=1 --group_reporting=1 --filename=/dev/nvme0n1 --runtime=600

#Random Write 64k:

fio --name=nvme --rw=randwrite --numjobs=4 --iodepth=2 --blocksize=64K --direct=1 --ioengine=libaio --time_based=1 --group_reporting=1 --filename=/dev/nvme0n1 --runtime=600

#Read 1024k:

fio --name=nvme --rw=read --numjobs=1 --iodepth=2 --blocksize=1024K --direct=1 --ioengine=libaio --time_based=1 --group_reporting=1 --filename=/dev/nvme0n1 --runtime=600

#Write 1024k:

fio --name=nvme --rw=write --numjobs=1 --iodepth=2 --blocksize=1024K --direct=1 --ioengine=libaio --time_based=1 --group_reporting=1 --filename=/dev/nvme0n1 --runtime=600

Windows – diskspd parameter examples:

#Parameters “-t (threads)” and “-o (number of outstanding I/O requests)” can change.

#Random Read 4k:

diskspd.exe -r -t24 -o4 -b4K -d300 -w0 -Su -L '#2'

#Random Write 4k:

diskspd.exe -r -t20 -o4 -b4K -d300 -w100 -Su -L '#2'

#Random Read 64k:

diskspd.exe -r -t4 -o2 -b64K -d300 -w0 -Su -L '#2'

#Random Write 64k:

diskspd.exe -r -t4 -o2 -b64K -d300 -w100 -Su -L '#2'

#Read 1024k:

diskspd.exe -si -t1 -o2 -b1024K -d300 -w0 -Su -L '#2'

#Write 1024k:

diskspd.exe -si -t1 -o2 -b1024K -d300 -w100 -Su -L '#2'

Performance Comparison: Linux and Windows Storage Stack:

To put the results in context, let's first compare the local performance of a single NVMe drive under Linux and Windows.

| | Linux local 1x Intel® Optane™ SSD DC P5800X Series (400GB) | | | | | | Windows local 1x Intel® Optane™ SSD DC P5800X Series (400GB) | | | | | | Comparison | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| pattern | numjobs | iodepth | IOPS | MiB/s | latency (ms) | CPU usage | numjobs | iodepth | IOPS | MiB/s | latency (ms) | CPU usage | IOPS | MiB/s | latency (ms) | CPU usage |
| random read 4k | 6 | 4 | 1552000 | 6 061 | 0.015 | 4% | 12 | 4 | 1508000 | 5 890 | 0.030 | 17% | 97% | 97% | 200% | 425% |
| random write 4k | 6 | 4 | 1160000 | 4 532 | 0.020 | 3% | 10 | 4 | 1113000 | 4 349 | 0.030 | 14% | 96% | 96% | 150% | 467% |
| random read 64K | 2 | 2 | 109 000 | 6 799 | 0.036 | 1% | 3 | 2 | 111 000 | 6 919 | 0.050 | 2% | 102% | 102% | 139% | 200% |
| random write 64K | 2 | 2 | 75 500 | 4 717 | 0.052 | 1% | 2 | 2 | 73 000 | 4 565 | 0.050 | 1% | 97% | 97% | 96% | 100% |
| read 1M | 1 | 2 | 7 034 | 7 034 | 0.284 | 1% | 1 | 2 | 7 030 | 7 030 | 0.280 | 1% | 100% | 100% | 99% | 100% |
| write 1M | 1 | 2 | 4 790 | 4 790 | 0.417 | 1% | 1 | 2 | 4 767 | 4 767 | 0.420 | 1% | 100% | 100% | 101% | 100% |

As you can see, in the random read/write 4k patterns, Windows needs roughly twice as many jobs (12 vs. 6 for reads, 10 vs. 6 for writes) to reach performance comparable to Linux. This comes at a cost: latency reaches 150-200% of the Linux value (1.5-2 times as high), and CPU usage reaches 425-467% of the Linux value (roughly 4-5 times as high).

The random read 64K pattern shows a similar, though smaller, gap. In the random write 64K, read 1M, and write 1M patterns, performance is virtually identical on both operating systems. This baseline difference between the Linux and Windows storage stacks helps explain the differences between the two NVMe-oF initiators in the results below.
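One way to see why more jobs means higher latency at roughly the same IOPS is Little's law: average latency ≈ outstanding I/Os ÷ IOPS, where outstanding I/Os = numjobs × iodepth. The sketch below applies it to the random read 4k row of the local table. It is a back-of-the-envelope check that assumes every job keeps its queue full; it is not how fio or diskspd actually measure latency.

```python
def littles_law_latency_ms(numjobs: int, iodepth: int, iops: float) -> float:
    """Estimate mean latency (ms) from queue occupancy and throughput (Little's law)."""
    outstanding = numjobs * iodepth  # I/Os in flight, assuming every queue stays full
    return outstanding / iops * 1000.0  # seconds -> milliseconds


# Local random read 4k values from the table above.
linux = littles_law_latency_ms(numjobs=6, iodepth=4, iops=1_552_000)
windows = littles_law_latency_ms(numjobs=12, iodepth=4, iops=1_508_000)

print(f"Linux:   {linux:.4f} ms (measured 0.015 ms)")
print(f"Windows: {windows:.4f} ms (measured 0.030 ms)")
```

Doubling the jobs at the same queue depth doubles the I/Os in flight; since IOPS stays nearly flat, the estimated latency roughly doubles as well, which lines up with the 200% latency ratio in the table.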

Benchmarking Results:

Performance Comparison: Linux NVMe-oF Initiator and StarWind NVMe-oF Initiator for Windows (v1.9.0.567 and v1.9.0.578) over RDMA:

| | Remote (RDMA) Linux NVMe-oF Initiator 1x Intel® Optane™ SSD DC P5800X Series (400GB) | | | | | | Remote (RDMA) Windows StarWind NVMe-oF Initiator (1.9.0.567) 1x Intel® Optane™ SSD DC P5800X Series (400GB) | | | | | | Comparison | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| pattern | numjobs | iodepth | IOPS | MiB/s | latency (ms) | CPU usage | numjobs | iodepth | IOPS | MiB/s | latency (ms) | CPU usage | IOPS | MiB/s | latency (ms) | CPU usage |
| random read 4k | 10 | 4 | 1500000 | 5 861 | 0.026 | 12% | 24 | 4 | 1132414 | 4 423 | 0.073 | 31% | 75% | 75% | 281% | 258% |
| random write 4k | 8 | 4 | 1130000 | 4 413 | 0.027 | 9% | 24 | 4 | 1105729 | 4 319 | 0.087 | 31% | 98% | 98% | 322% | 344% |
| random read 64K | 3 | 2 | 110 000 | 6 850 | 0.054 | 1% | 4 | 2 | 110 328 | 6 895 | 0.072 | 2% | 100% | 101% | 133% | 200% |
| random write 64K | 3 | 2 | 75 400 | 4 712 | 0.079 | 1% | 3 | 2 | 74 819 | 4 676 | 0.080 | 1% | 99% | 99% | 101% | 100% |
| read 1M | 1 | 2 | 7 033 | 7 033 | 0.283 | 1% | 1 | 2 | 7 030 | 7 030 | 0.284 | 1% | 100% | 100% | 100% | 100% |
| write 1M | 1 | 2 | 4 788 | 4 788 | 0.416 | 1% | 1 | 2 | 4 787 | 4 787 | 0.418 | 1% | 100% | 100% | 100% | 100% |

The optimizations in StarWind NVMe-oF Initiator for Windows v1.9.0.578 made a noticeable difference: compared with v1.9.0.567, performance increased by 11% in the random read 4k pattern and by 4% in the random write 4k pattern. As a result, the thread count (‘numjobs’) dropped from 24 in v1.9.0.567 to 16 or 18 in v1.9.0.578, which lowered both latency and CPU usage.

Compared with the Linux NVMe-oF Initiator, StarWind NVMe-oF Initiator for Windows differs noticeably only in the random read 4k pattern (14% lower performance); the results in all other patterns are largely comparable. The higher latency and CPU usage values are explained in the section “Performance Comparison: Linux and Windows Storage Stack.”
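The Comparison columns appear to express the Windows result as a percentage of the Linux result. This is our reading of the tables (it holds for every row we checked), not a formula stated by the authors. A minimal sketch that reproduces the random read 4k row of the RDMA table for v1.9.0.567; the function name is illustrative:

```python
def as_percent_of_linux(windows: float, linux: float) -> int:
    """Windows result as a whole-number percentage of the Linux result."""
    return round(windows / linux * 100)


# Random read 4k over RDMA: (StarWind v1.9.0.567, Linux), values from the table above.
row = {
    "IOPS": (1_132_414, 1_500_000),
    "MiB/s": (4_423, 5_861),
    "latency (ms)": (0.073, 0.026),
    "CPU usage (%)": (31, 12),
}

for metric, (win, lin) in row.items():
    print(f"{metric}: {as_percent_of_linux(win, lin)}%")
```

Read the ratios with the metric in mind: for IOPS and MiB/s, values above 100% favor Windows, while for latency and CPU usage, values above 100% mean Windows is worse.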

Performance Comparison: Linux NVMe-oF Initiator and StarWind NVMe-oF Initiator for Windows (v1.9.0.567 and v1.9.0.578) over TCP:

| | Remote (TCP) Linux NVMe-oF Initiator 1x Intel® Optane™ SSD DC P5800X Series (400GB) | | | | | | Remote (TCP) Windows StarWind NVMe-oF Initiator (1.9.0.567) 1x Intel® Optane™ SSD DC P5800X Series (400GB) | | | | | | Comparison | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| pattern | numjobs | iodepth | IOPS | MiB/s | latency (ms) | CPU usage | numjobs | iodepth | IOPS | MiB/s | latency (ms) | CPU usage | IOPS | MiB/s | latency (ms) | CPU usage |
| random read 4k | 16 | 16 | 1536000 | 6 000 | 0.165 | 18% | 30 | 16 | 1080908 | 4 222 | 0.441 | 23% | 70% | 70% | 267% | 128% |
| random write 4k | 12 | 16 | 1123000 | 4 387 | 0.170 | 12% | 20 | 16 | 1046539 | 4 088 | 0.303 | 22% | 93% | 93% | 178% | 183% |
| random read 64K | 6 | 4 | 110 000 | 6 867 | 0.217 | 4% | 7 | 4 | 105 154 | 6 572 | 0.266 | 5% | 96% | 96% | 123% | 125% |
| random write 64K | 6 | 4 | 75 300 | 4 705 | 0.318 | 3% | 6 | 4 | 74 937 | 4 683 | 0.320 | 3% | 100% | 100% | 101% | 100% |
| read 1M | 3 | 4 | 7 000 | 7 000 | 1.711 | 3% | 3 | 4 | 7 032 | 7 032 | 1.706 | 3% | 100% | 100% | 100% | 100% |
| write 1M | 3 | 2 | 4 435 | 4 435 | 1.351 | 1% | 2 | 2 | 4 786 | 4 786 | 1.253 | 1% | 108% | 108% | 93% | 100% |

| | Remote (TCP) Linux NVMe-oF Initiator 1x Intel® Optane™ SSD DC P5800X Series (400GB) | | | | | | Remote (TCP) Windows StarWind NVMe-oF Initiator (1.9.0.578) 1x Intel® Optane™ SSD DC P5800X Series (400GB) | | | | | | Comparison | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| pattern | numjobs | iodepth | IOPS | MiB/s | latency (ms) | CPU usage | numjobs | iodepth | IOPS | MiB/s | latency (ms) | CPU usage | IOPS | MiB/s | latency (ms) | CPU usage |
| random read 4k | 16 | 16 | 1536000 | 6 000 | 0.165 | 18% | 32 | 16 | 1444087 | 5 640 | 0.352 | 29% | 94% | 94% | 213% | 161% |
| random write 4k | 12 | 16 | 1123000 | 4 387 | 0.170 | 12% | 22 | 16 | 1176025 | 4 593 | 0.298 | 25% | 105% | 105% | 175% | 208% |
| random read 64K | 6 | 4 | 110 000 | 6 867 | 0.217 | 4% | 7 | 4 | 109 877 | 6 867 | 0.254 | 5% | 100% | 100% | 117% | 125% |
| random write 64K | 6 | 4 | 75 300 | 4 705 | 0.318 | 3% | 6 | 4 | 76 096 | 4 756 | 0.315 | 3% | 101% | 101% | 99% | 100% |
| read 1M | 3 | 4 | 7 000 | 7 000 | 1.711 | 3% | 3 | 4 | 7 028 | 7 028 | 1.706 | 3% | 100% | 100% | 100% | 100% |
| write 1M | 3 | 2 | 4 435 | 4 435 | 1.351 | 1% | 2 | 2 | 4 698 | 4 698 | 0.851 | 1% | 106% | 106% | 63% | 100% |
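Comparing the two TCP tables row by row, there are two ways to express how much v1.9.0.578 gained over v1.9.0.567: as the difference between their IOPS Comparison columns (percentage points of the Linux baseline), or as relative growth over the older build. The sketch below computes both for the three patterns that changed; the dictionary and function names are illustrative.

```python
# Two ways to express the v1.9.0.567 -> v1.9.0.578 gain over TCP.
from __future__ import annotations

# IOPS from the two TCP tables above: (Linux, StarWind v1.9.0.567, StarWind v1.9.0.578)
TCP_IOPS = {
    "random read 4k": (1_536_000, 1_080_908, 1_444_087),
    "random write 4k": (1_123_000, 1_046_539, 1_176_025),
    "random read 64K": (110_000, 105_154, 109_877),
}


def gains(pattern: str) -> tuple[int, float]:
    """Return (percentage-point gain vs. the Linux baseline, relative gain in %)."""
    linux, old, new = TCP_IOPS[pattern]
    # Difference between the two Comparison columns (Windows as % of Linux).
    points = round(new / linux * 100) - round(old / linux * 100)
    # Growth of v1.9.0.578 over v1.9.0.567 itself.
    relative = (new / old - 1) * 100
    return points, relative


for pattern in TCP_IOPS:
    points, relative = gains(pattern)
    print(f"{pattern}: +{points} percentage points, +{relative:.1f}% relative")
```

The percentage-point figures come out to 24, 12, and 4, while the relative gain for random read 4k is about 34% (33.6%), so it helps to state which baseline a quoted improvement uses.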

Conclusion

Thanks to these adjustments, StarWind NVMe-oF Initiator for Windows v1.9.0.578 improved on v1.9.0.567 over TCP by 24 percentage points in the random read 4k pattern (from 70% to 94% of Linux IOPS), by 12 points in the random write 4k pattern (from 93% to 105%), and by 4 points in the random read 64K pattern (from 96% to 100%). Not bad at all! Compared with the Linux NVMe-oF Initiator over TCP, v1.9.0.578 falls behind only in the random read 4k pattern (by 6%); in all other patterns, its performance is comparable or higher. I hope you find this information useful!

This material has been prepared in collaboration with Viktor Kushnir, Technical Writer with almost 4 years of experience at StarWind.

- Linux NVMe-oF Initiator and StarWind NVMe-oF Initiator: Performance Comparison Part 1

- Linux NVMe-oF Initiator and StarWind NVMe-oF Initiator: Performance Comparison Part 2

- Real-World Production Scenario: Squeezing all possible performance out of Microsoft SQL Server AGs (Availability Groups). Local NVMe Vs. RDMA-mapped (NVMe-oF) remote NVMe storage. Does the juice worth a squeeze?