Microsoft continues to expand its Azure services in Europe, this time with new Big Data capabilities: Azure Data Lake Analytics and Data Lake Store. These new services are serverless options for companies that already have resources in Azure and hold large amounts of data, allowing them to turn that data into valuable information.

What is Azure Data Lake Analytics?

This service is designed to make Big Data capabilities easier for customers to reach. Azure Data Lake Analytics lets customers write, run, and manage analytics jobs in Azure without worrying about sizing infrastructure or managing VMs. Organizations simply run jobs using their own code and pay only for the time each job is running.

Azure Data Lake Analytics supports multiple languages, such as U-SQL, R, Python, and .NET, and can run over petabytes of data.

Here are the main features provided by Azure Data Lake Analytics:

- Dynamic scaling: Resources are provisioned dynamically for the jobs you need to execute. You do not need to rewrite your code whether you are analyzing gigabytes, terabytes, or even exabytes.

- Develop faster, debug, and optimize smarter using familiar tools: The service integrates closely with Visual Studio, an easy-to-use tool that is common among developers.

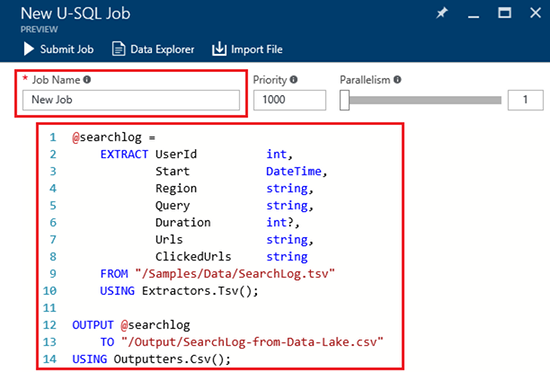

- Supporting U-SQL: A query language that extends the familiar, simple, declarative nature of SQL with the expressive power of C#.

- Affordable and cost-effective: Customers pay on a per-job basis when data is processed. No hardware, licenses, or service-specific support agreements are required.

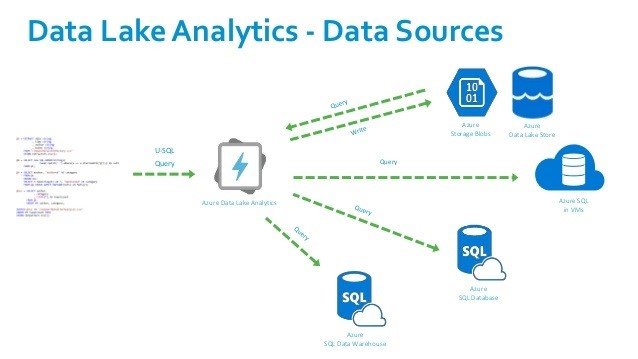

- Works with all Azure data: It can also work with Azure Blob storage and Azure SQL Database.

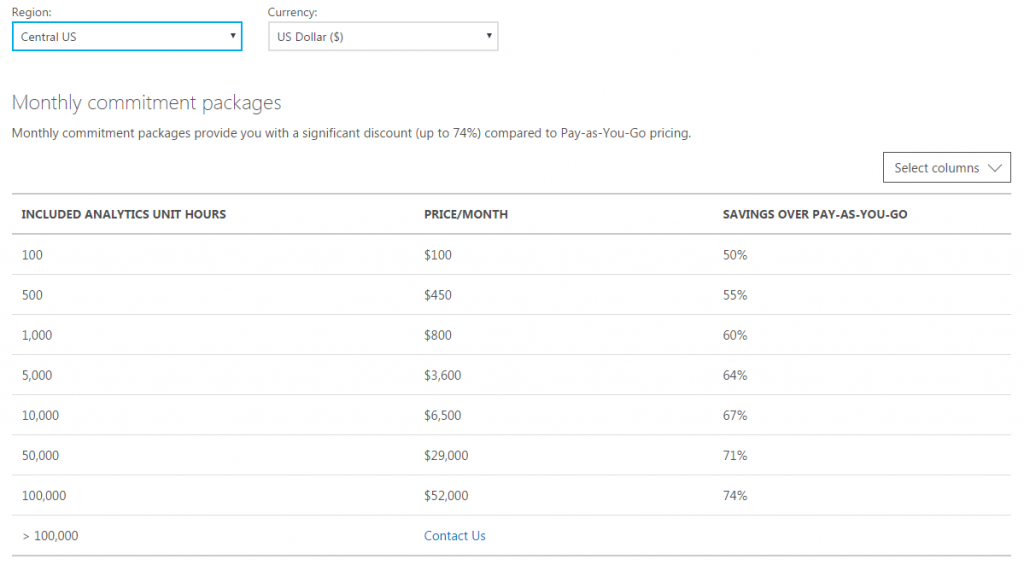

Regarding Azure Data Lake Analytics pricing in Europe, the only option available for now is pay-as-you-go, at a cost of $2/hour. There is also a Monthly Commitment package, available only in the US, which offers considerable savings.
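To get a feel for what pay-as-you-go means in practice, here is a rough sketch of the arithmetic. It assumes billing is strictly proportional to job run time at the $2/hour rate quoted above. Actual billing granularity, and any factor for how much parallelism a job requests, are not covered here. The function name `estimate_job_cost` is purely illustrative and not part of any Azure tooling.

```python
from decimal import Decimal

# Pay-as-you-go rate quoted in this article (USD per hour).
PAY_AS_YOU_GO_RATE_USD_PER_HOUR = Decimal("2")


def estimate_job_cost(run_minutes, rate_per_hour=PAY_AS_YOU_GO_RATE_USD_PER_HOUR):
    """Estimate the cost of one job, assuming cost = hours run * hourly rate.

    This is an illustrative helper, not an Azure API. It ignores billing
    granularity and any other pricing factors.
    """
    if run_minutes < 0:
        raise ValueError("run_minutes must not be negative")
    hours = Decimal(str(run_minutes)) / Decimal(60)
    # Round to whole cents for display.
    return (hours * rate_per_hour).quantize(Decimal("0.01"))


if __name__ == "__main__":
    for minutes in (45, 90):
        print(f"{minutes} min job: ${estimate_job_cost(minutes)}")
```

Under these assumptions, a job that runs for 45 minutes comes to $1.50 and a 90-minute job to $3.00. Check the current Azure pricing page before relying on any estimate.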

Getting Started with Azure Data Lake Analytics

This feature also comes with a free trial option, like any other Azure service. Getting started only requires an active Azure subscription, and the process of creating an account is simple:

- Sign in to the Azure portal.

- Click New, click Intelligence + analytics and then click Data Lake Analytics.

- Type or select the following values:

  - Name: Name the Data Lake Analytics account.

  - Subscription: Choose the Azure subscription used for the Analytics account.

  - Resource Group: Select an existing Azure Resource Group or create a new one. Azure Resource Manager enables you to work with the resources in your application as a group. For more information, see Azure Resource Manager.

  - Location: Select an Azure data center for the Data Lake Analytics account.

  - Data Lake Store: Each Data Lake Analytics account has a dependent Data Lake Store account. The Data Lake Analytics account and the dependent Data Lake Store account must be located in the same Azure data center. Follow the instructions to create a new Data Lake Store account, or select an existing one.

- Click Create. This takes you to the portal home screen, where a new tile labeled “Deploying Azure Data Lake Analytics” is added to the StartBoard. Creating a Data Lake Analytics account takes a few moments. When the account is created, the portal opens it on a new blade.
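Before filling in the form, it can help to check the values you plan to enter, especially the rule that the Analytics account and its Data Lake Store must be in the same data center. The sketch below is a plain Python checklist and does not call Azure. The class `AnalyticsAccountForm`, the function `validate_form`, and the sample values are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class AnalyticsAccountForm:
    """Hypothetical container mirroring the fields of the portal form."""
    name: str
    subscription: str
    resource_group: str
    location: str
    data_lake_store_name: str
    data_lake_store_location: str


def validate_form(form):
    """Return a list of problems; an empty list means the values look consistent."""
    problems = []
    required = ("name", "subscription", "resource_group",
                "location", "data_lake_store_name")
    for field_name in required:
        if not getattr(form, field_name).strip():
            problems.append(f"{field_name} is required")
    # The article states both accounts must be in the same Azure data center.
    if form.location != form.data_lake_store_location:
        problems.append(
            "Data Lake Store must be in the same location as the Analytics account"
        )
    return problems


if __name__ == "__main__":
    # Sample values are made up for the example.
    form = AnalyticsAccountForm(
        name="myanalytics",
        subscription="My Subscription",
        resource_group="my-resource-group",
        location="northeurope",
        data_lake_store_name="mystore",
        data_lake_store_location="westeurope",
    )
    for problem in validate_form(form):
        print("Problem:", problem)
```

A check like this only catches mismatches in your own notes. The portal remains the authority on which names and locations are actually accepted.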