This is the first test in the series dedicated to Microsoft Storage Replica – a new solution introduced in Windows Server 2016. It shows the step-by-step process of building a “Shared Nothing” failover File Server based on the Server Message Block protocol (SMB3). Microsoft Storage Replica is a versatile data replication solution. It performs replication between servers, between volumes in a server, between clusters, etc. The typical use case for Microsoft Storage Replica is Disaster Recovery, which is basically replication to a remote site. It allows easy recovery of data in case the main site operation is disrupted by a force majeure event such as a natural disaster. The test is performed by StarWind engineers with a full report on the results.

1st Test Scenario – We got this Windows Server Technical Preview with the intention of configuring and running Storage Replica. Here’s a full report on what happened and how it all worked for us with the File Server role…

Storage Replica: “Shared Nothing” Hyper-V Guest VM Cluster

Storage Replica: “Shared Nothing” Hyper-V HA VM Cluster

Storage Replica: “Shared Nothing” Scale-out File Server

Introduction

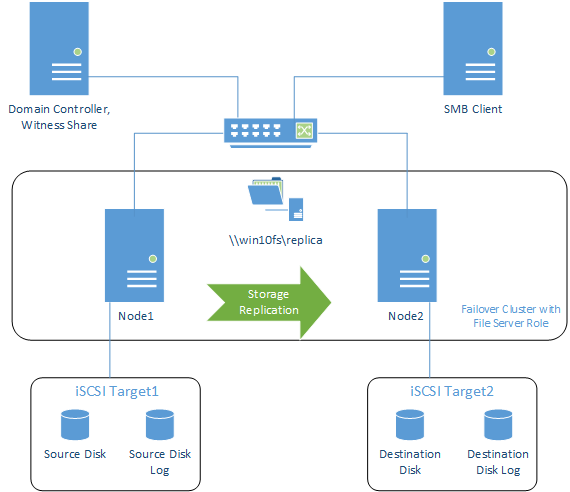

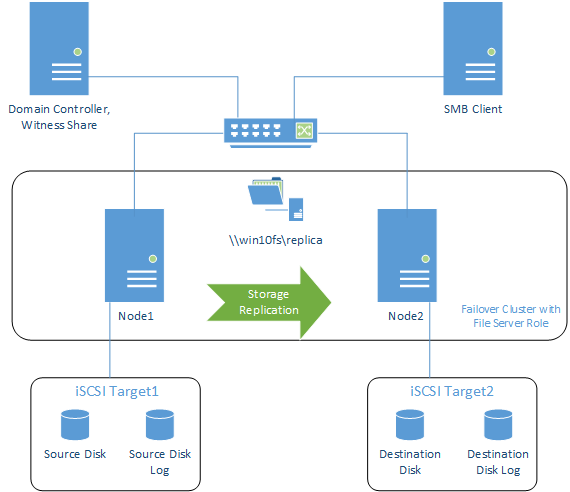

As soon as Microsoft introduced Storage Replica, we decided to check whether it is really that convenient (for detailed info, see these links: http://technet.microsoft.com/en-us/library/dn765475.aspx and http://blogs.technet.com/b/filecab/archive/2014/10/07/storage-replica-guide-released-for-windows-server-technical-preview.aspx). Microsoft claims it can create clusters without any shared storage or SAN. To test this interesting feature, we decided to try Storage Replica with Microsoft Failover Cluster. We chose the File Server role for this example.

Content

All in all, this configuration will use three servers:

- First cluster node for Storage Replica

- Second cluster node for Storage Replica

- The server with the MS iSCSI Target, which provides iSCSI storage (not shared – for the sake of testing the capabilities of Storage Replica) for the cluster nodes. We also have to create an SMB 3.0 share to use as a witness (because Storage Replica cannot fulfill this task).

Cluster nodes are first joined into the domain.

Note: Though the manual says that SSD and SAS disks are supported for Storage Replica, I couldn’t get them connected. The same happened with virtual SAS disks emulated by Microsoft Hyper-V or VMware ESXi, so I tried connecting iSCSI devices created in the MS Target. It seems to be the only option that worked at all.

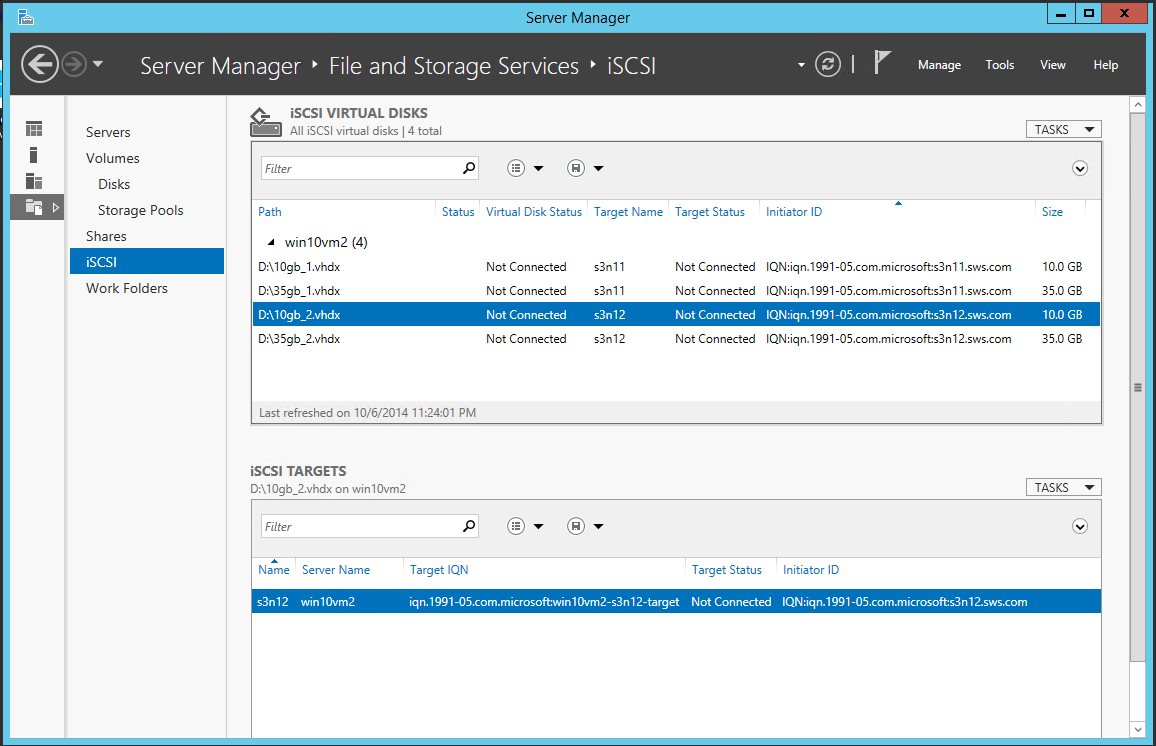

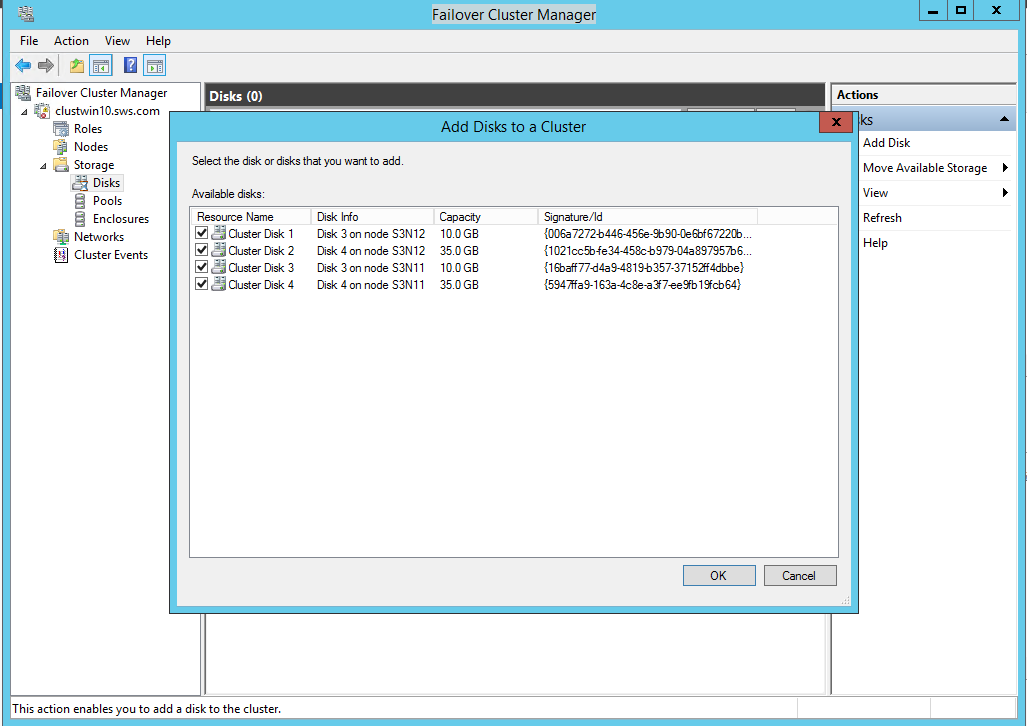

I start the MS iSCSI Target on a separate machine and create 2 disks for each node (4 disks total). As this is not shared storage, the first and second disks are connected to the first node, and the third and fourth to the second node. One disk from the first pair will be used as the replica source and the other as the source log disk. On the second pair, the disks will be the replica destination and the destination log, respectively. The log disk must be at least 2 GB or a minimum of 10% of the source disk.

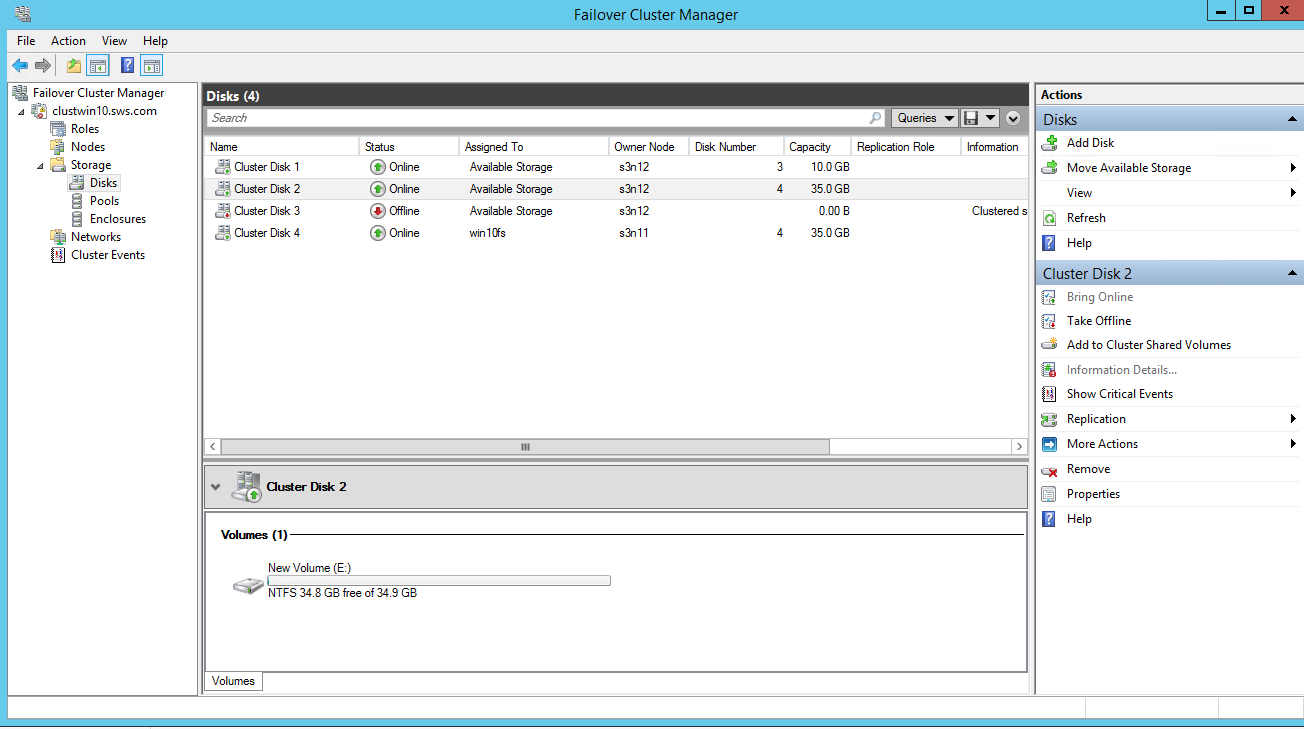

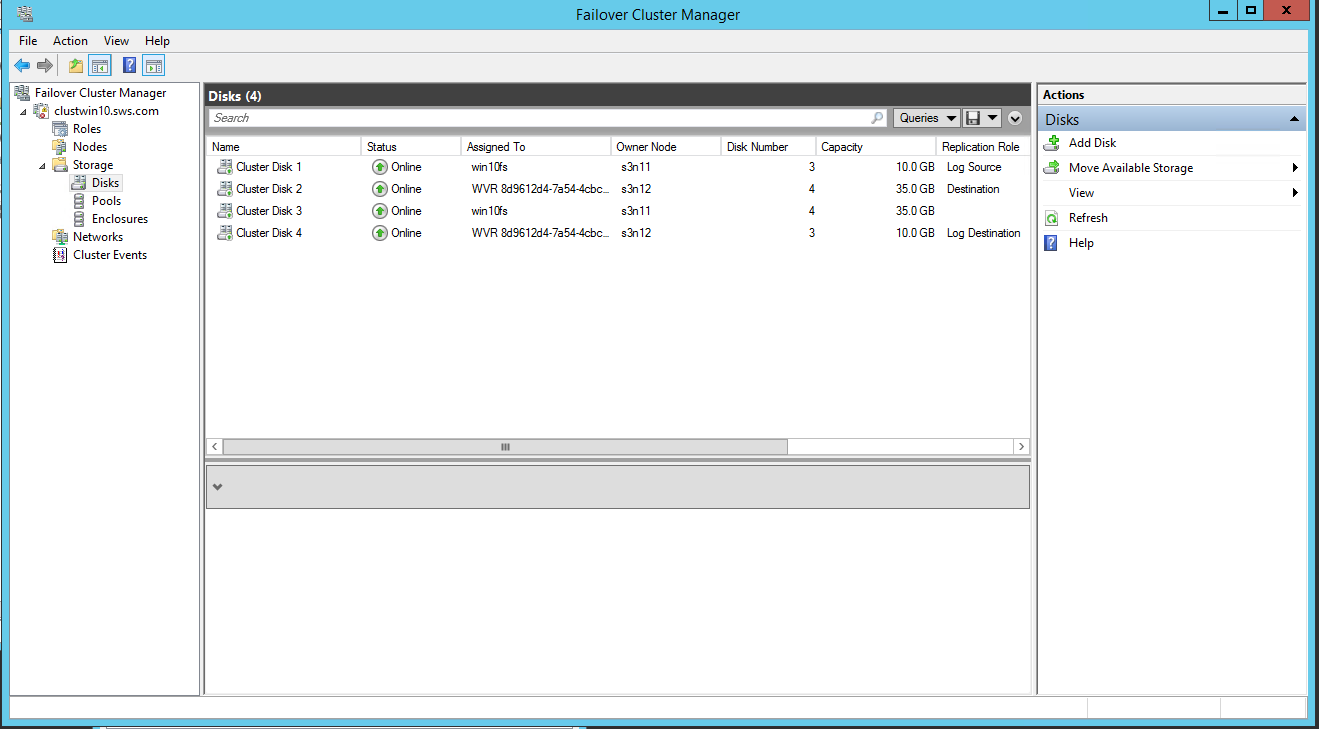

The screenshot shows the two 35 GB disks created for source and destination, as well as two 10 GB disks for the source log and destination log.
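As a quick sanity check of these sizes against the log disk rule quoted earlier, here is a minimal Python sketch. It assumes the rule means "whichever is larger: 2 GB or 10% of the data disk", and it counts a GB as 1024³ bytes; both are my reading, not something the manual confirms. The function names are made up for illustration.

```python
GIB = 1024 ** 3  # assumption: sizes are counted in binary gigabytes


def min_log_size_bytes(data_disk_bytes: int) -> int:
    """Smallest acceptable log disk for a given data disk.

    Assumption: the larger of 2 GB and 10% of the data disk applies.
    """
    floor = 2 * GIB
    ten_percent = -(-data_disk_bytes // 10)  # ceiling division on integers
    return max(floor, ten_percent)


def log_disk_ok(data_disk_bytes: int, log_disk_bytes: int) -> bool:
    """True if the log disk satisfies the assumed sizing rule."""
    return log_disk_bytes >= min_log_size_bytes(data_disk_bytes)


if __name__ == "__main__":
    data = 35 * GIB  # data disk size used in this test
    log = 10 * GIB   # log disk size used in this test
    print(min_log_size_bytes(data) / GIB)  # 3.5
    print(log_disk_ok(data, log))          # True
```

Under that reading, a 35 GB data disk needs at least a 3.5 GB log, so the 10 GB log disks used here leave plenty of headroom.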

Now we’re all set.

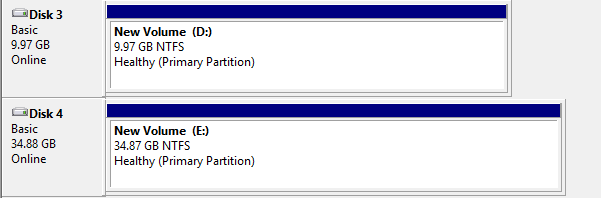

I connect the devices through the initiator on both nodes where I’m going to test Storage Replica, then initialize them, choosing GPT (this is important!), and format the disks. On both nodes I assign the same drive letters (this is important too!).
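The two "important" points above (GPT on every disk, identical drive letters on both nodes) are easy to get wrong by hand, so here is a small Python sketch of a checklist for them. It does not query Windows at all: the `Disk` records, node names and drive letters are hypothetical values you would fill in yourself after formatting.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Disk:
    node: str             # hypothetical node name, e.g. "node1"
    role: str             # "data" or "log"
    letter: str           # drive letter assigned after formatting
    partition_style: str  # "GPT" or "MBR"


def layout_problems(disks: List[Disk]) -> List[str]:
    """Return human-readable problems; an empty list means the layout looks fine."""
    problems = []
    # Every disk must be initialized as GPT.
    for d in disks:
        if d.partition_style.upper() != "GPT":
            problems.append(
                f"{d.node}/{d.role}: partition style is {d.partition_style}, expected GPT"
            )
    # Each role must exist on every node and use the same letter everywhere.
    nodes = sorted({d.node for d in disks})
    for role in ("data", "log"):
        letters = {d.node: d.letter.upper() for d in disks if d.role == role}
        for node in nodes:
            if node not in letters:
                problems.append(f"{node}: no {role} disk defined")
        if len(set(letters.values())) > 1:
            problems.append(f"{role} disks use different letters: {letters}")
    return problems


if __name__ == "__main__":
    # Hypothetical layout: letters E and F are placeholders, not taken from the test.
    layout = [
        Disk("node1", "data", "E", "GPT"),
        Disk("node1", "log", "F", "GPT"),
        Disk("node2", "data", "E", "GPT"),
        Disk("node2", "log", "F", "GPT"),
    ]
    print(layout_problems(layout))
```

An empty list means both conditions hold; anything else is worth fixing before the disks go into the cluster.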

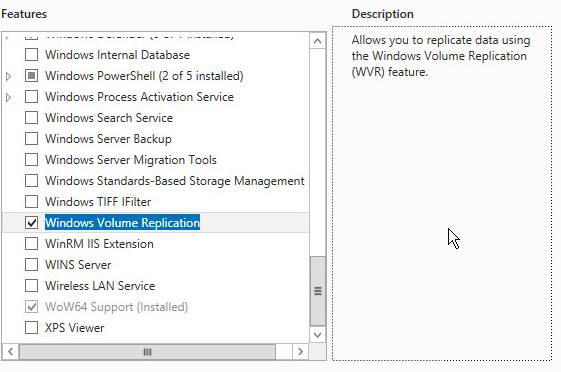

Using the Add Roles and Features wizard, I’ll add Failover Clustering, Multipath I/O and Windows Volume Replication on the nodes.

Reboot. After it’s complete, I will create a cluster (as we mentioned above, an SMB 3.0 share is used as the witness).

Going to Storage -> Disks in the cluster, I add the disks by clicking Add Disk. Here’s what we see in the next window (2 disks on each node):

Adding all of them.

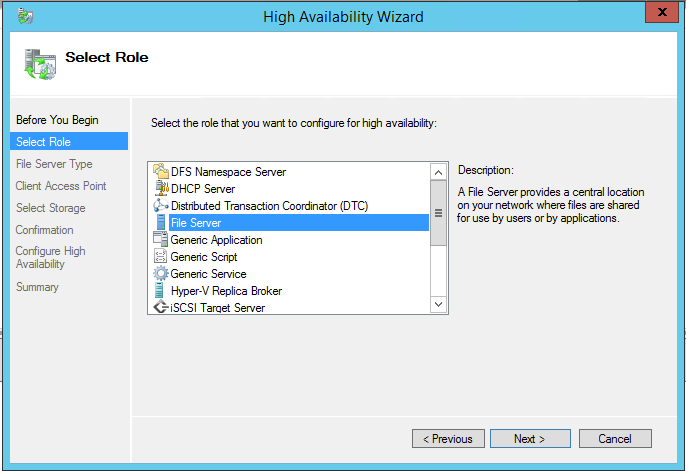

Now I’ll go to Add Roles and choose the file server role.

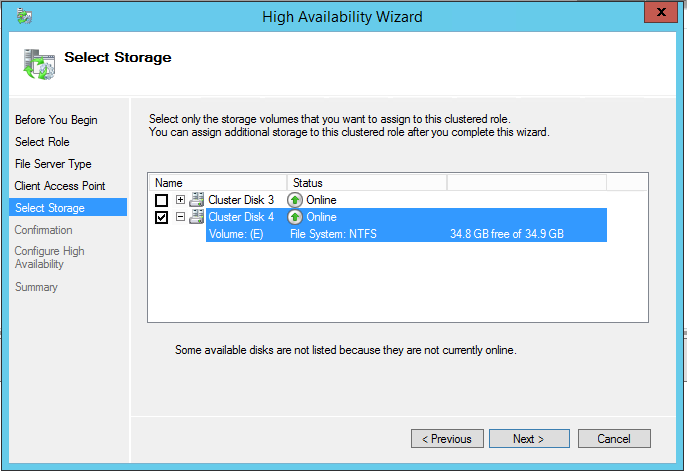

Going through the standard wizard, I see the Select Storage step, where I’m choosing only the data disk, not the disk for logs.

After the wizard finishes successfully, I get the role. Highlight it and choose the Resources tab. Right-click the added cluster disk and choose Replication – Enable.

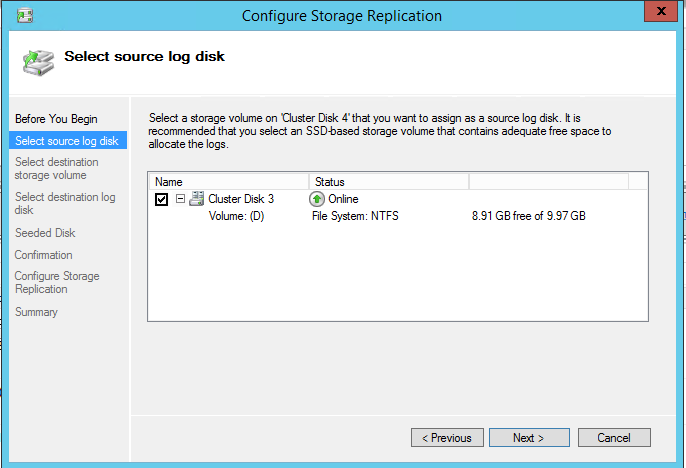

Choose the log disk in the replica creation wizard that appears.

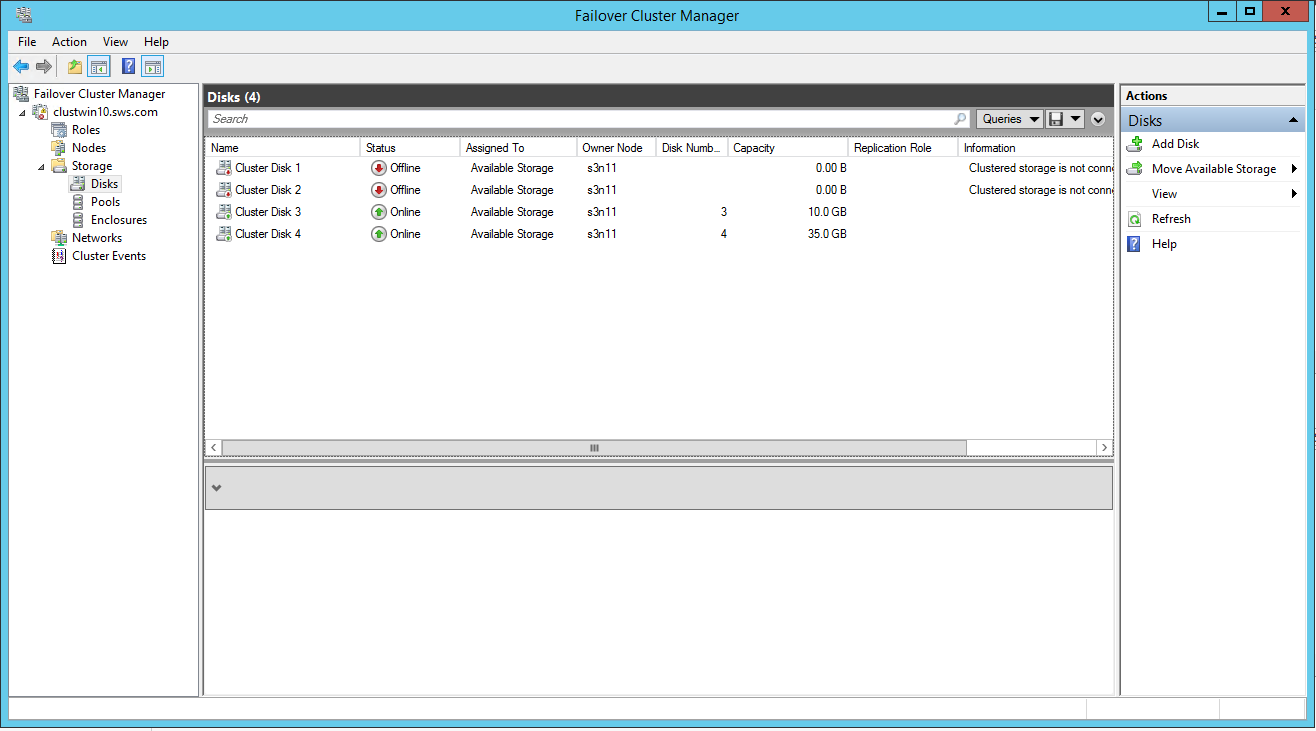

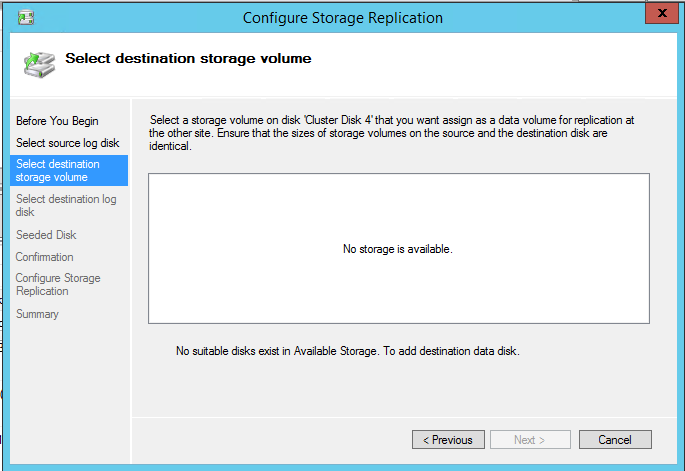

The next step is choosing the replica destination. If you get an empty list (as shown on the next screenshot), return to Storage – Disks.

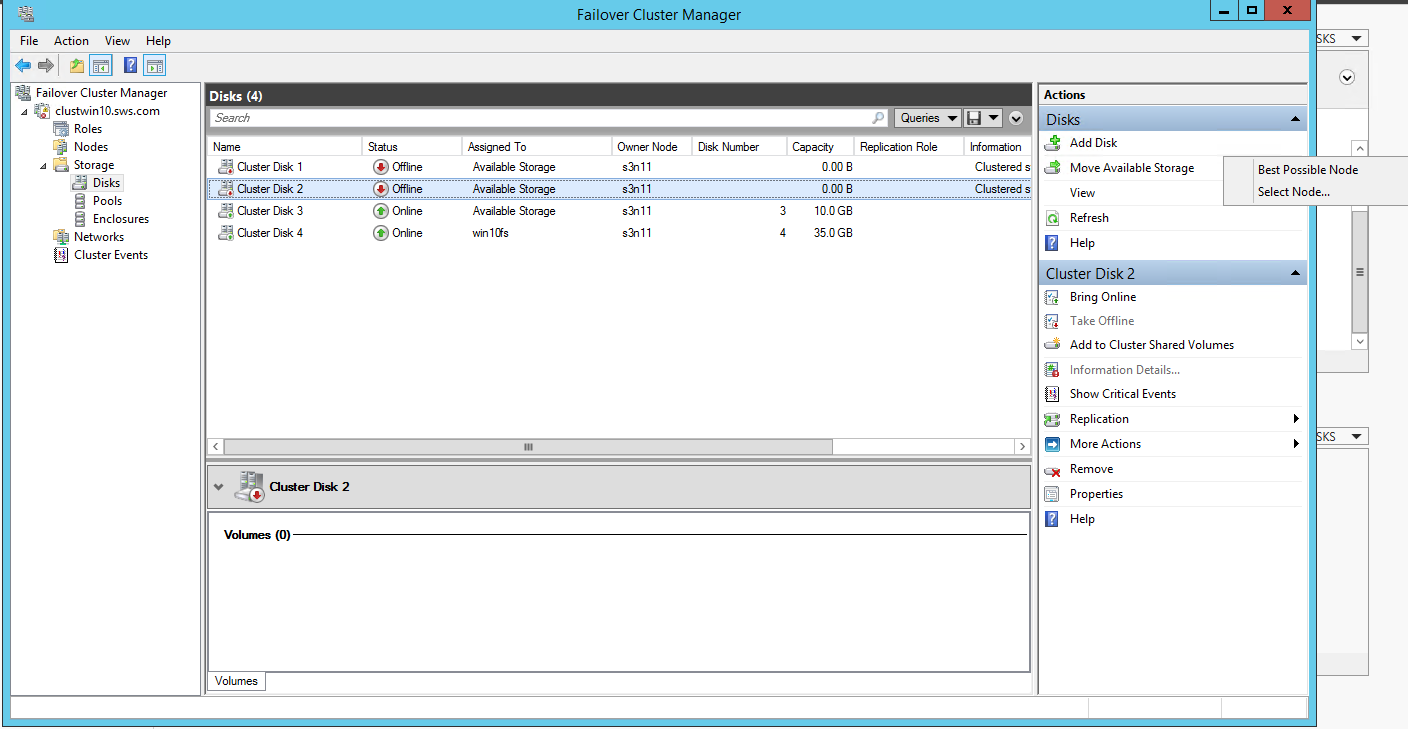

You need to change the owner of all the disks to the replica destination node. This can be done through Move Available Storage – Best Possible Node.

Here’s what I got:

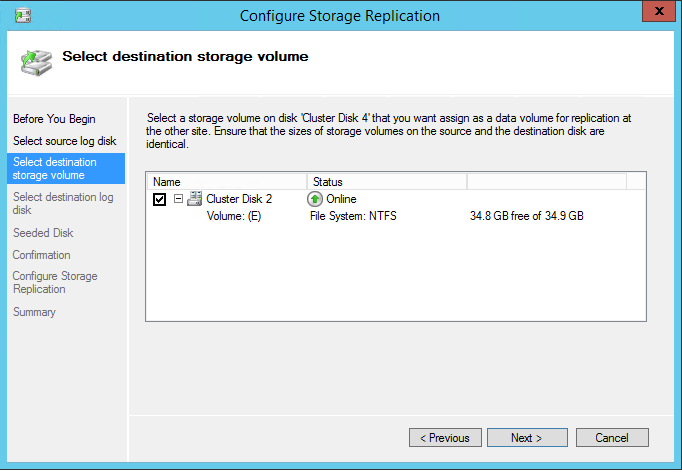

Getting back to the Storage Replica creation wizard – now the disk is available there.

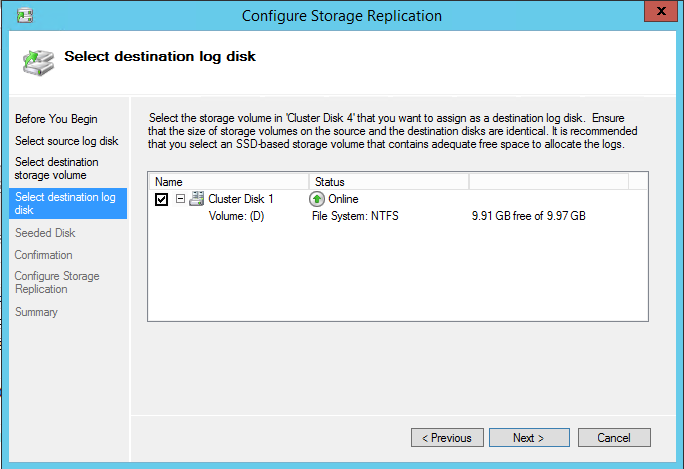

Setting the log disk for the replication.

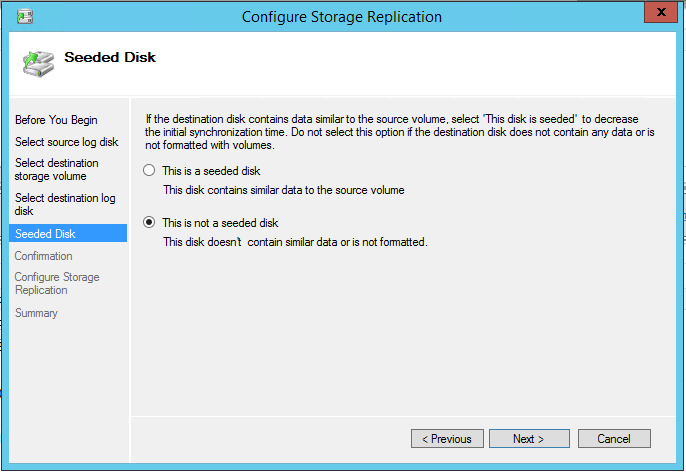

The disks are not synchronized, so I’m choosing the second option.

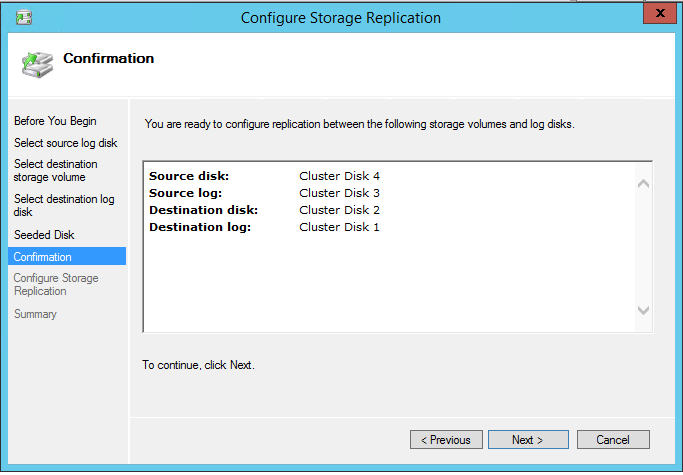

Confirm the creation.

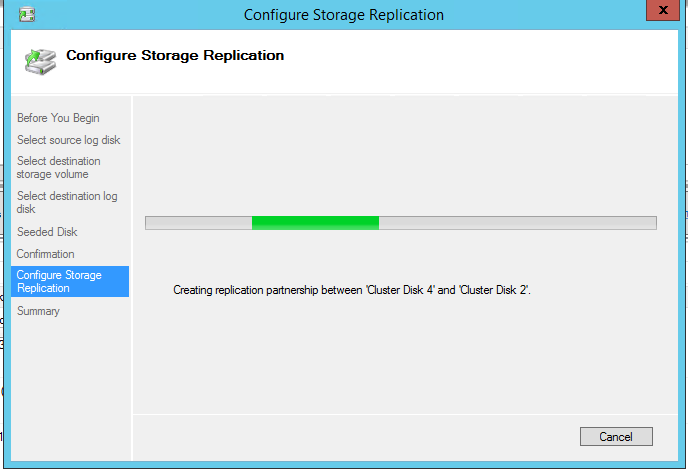

The data is being synchronized.

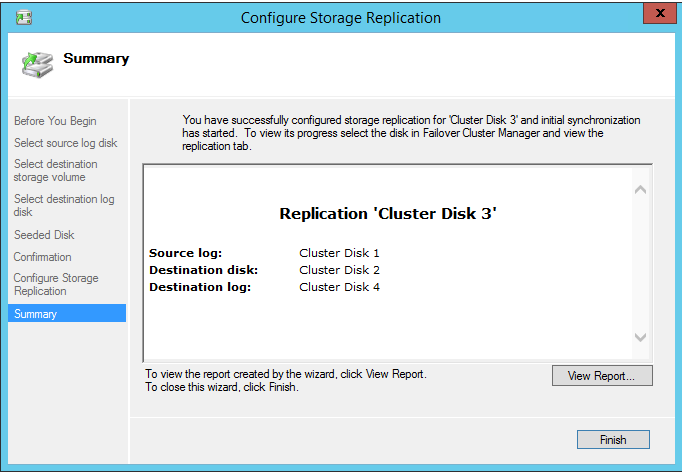

The next screenshot shows my success.

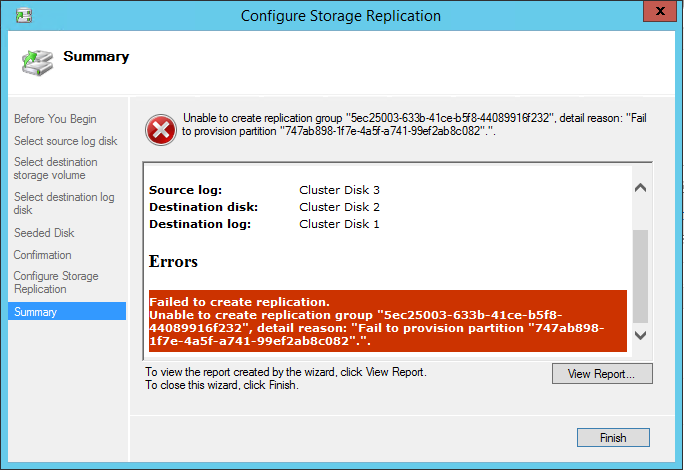

Note: If you’re getting an error like the one on the next screenshot, recreate the devices and connect them again. Otherwise, you’d have a hard time getting the disks to operate normally.

The replica and a slightly changed disk display are the signs of success.

Here on the screenshot, you can see that cluster disk 1 and the others have different sizes from the initial ones. The reason is that I had to create them again, so they were added under new numbers.

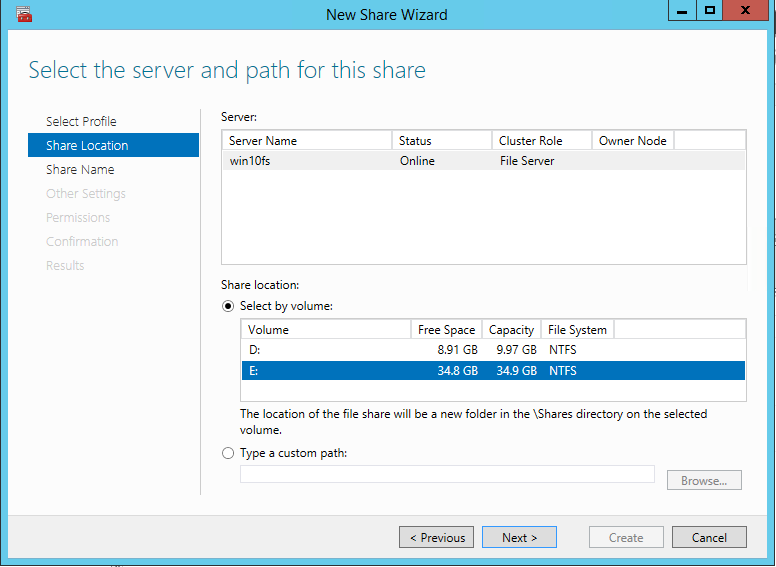

The next step is to create a file share – it’s a standard wizard dialog, so you’ll surely figure it out without my instructions. The only thing I’d like to point out is that the log disk is also offered as a share location. I sure hope Microsoft will fix this issue, because it may cause some trouble for inexperienced users.

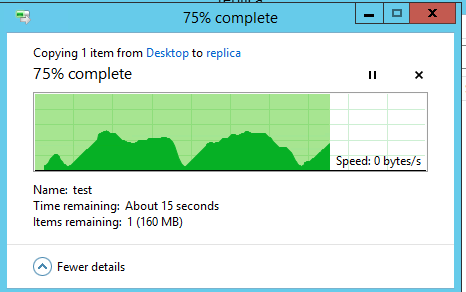

After having created the share, I’ll upload something there and crash the owner node of the cluster during the process.

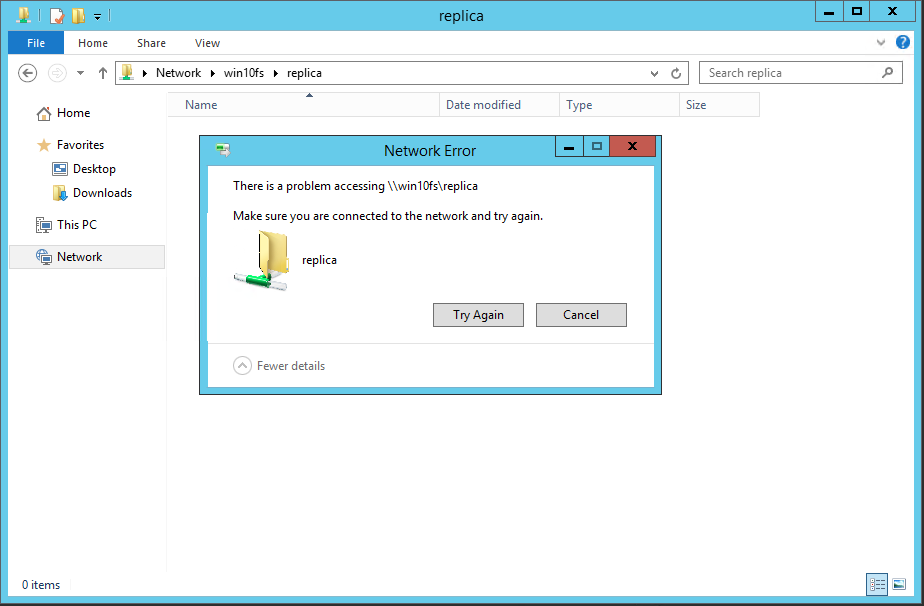

The Continuous Availability option is enabled on the share, so when a node crashes, SMB Transparent Failover should occur. Well, it did not. At the moment of the node crash, the copy speed dropped, and the copy operation then failed with a timeout.
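I ran this test by hand with a plain file copy, but if you want numbers instead of watching the progress dialog, something like the following Python sketch could help. It copies a file chunk by chunk, records the longest time a single write took, and reports the error if the copy fails. The function name is made up, the demo copies between temporary local files so that it runs anywhere, and in a real test you would point the destination at your own share path (which I am not assuming here).

```python
import os
import tempfile
import time


def copy_and_time_chunks(src_path, dst_path, chunk_size=1 << 20):
    """Copy src to dst in chunks.

    Returns (bytes_copied, longest_write_seconds, error), where error is
    None on success or the OSError that interrupted the copy.
    """
    copied = 0
    longest = 0.0
    error = None
    try:
        with open(src_path, "rb") as src, open(dst_path, "wb") as dst:
            while True:
                chunk = src.read(chunk_size)
                if not chunk:
                    break
                started = time.monotonic()
                dst.write(chunk)
                dst.flush()
                os.fsync(dst.fileno())  # push the chunk out before timing it
                longest = max(longest, time.monotonic() - started)
                copied += len(chunk)
    except OSError as exc:
        # A failed write (e.g. a timeout during failover) ends up here.
        error = exc
    return copied, longest, error


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "source.bin")
        dst = os.path.join(tmp, "copy.bin")  # replace with a path on your share
        with open(src, "wb") as f:
            f.write(os.urandom(3 * (1 << 20) + 5))
        copied, longest, error = copy_and_time_chunks(src, dst)
        print(f"copied={copied} bytes, longest write={longest:.3f}s, error={error}")
```

With transparent failover working, I would expect one long write and no error; in a run like mine, the error would be reported along with the number of bytes that made it through.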

Conclusion

As you can clearly see, Failover Cluster in the File Server role works fine with Storage Replica, with only one slight problem. Though we enabled Continuous Availability on the SMB share, the copy operation was disrupted during failover. That doesn’t look like transparent failover at all.