Persistent Memory (PMEM) support was introduced in the recently released VMware vSphere 6.7. This new non-volatile memory type fills the performance gap between random-access memory (RAM) and Flash/SSD. But is PMEM really that fast? In this article, I take a closer look at what persistent memory is and how it performs.

What’s the difference between PMEM and any other memory type?

There are 3 key things about using PMEM:

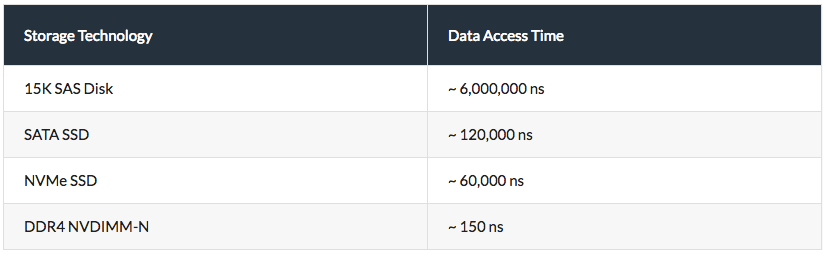

- Latency and bandwidth are close to DRAM. In other words, PMEM is approximately 100 times faster than SSD.

- The CPU reads and writes data using standard byte-addressable load and store instructions.

- PMEM keeps its contents across a device reboot or an unexpected failure, which helps avoid data corruption.

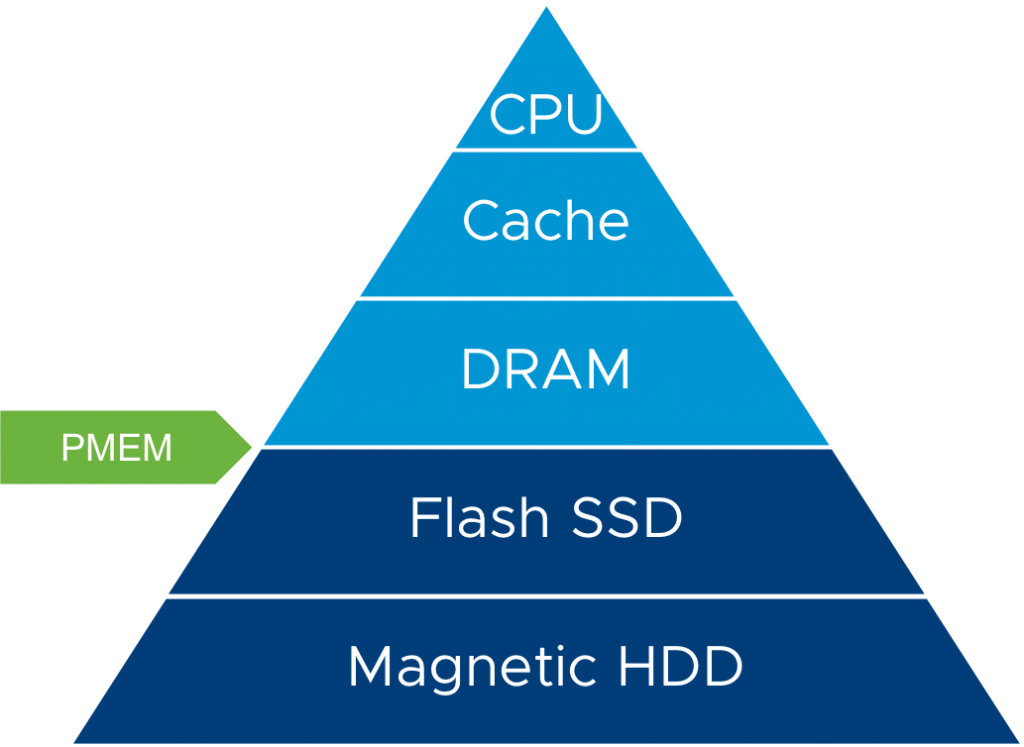

The figure below highlights the convergence of memory and storage. Technology at the top of the pyramid has the shortest latency, so it has the highest performance. Now, look at where PMEM is:

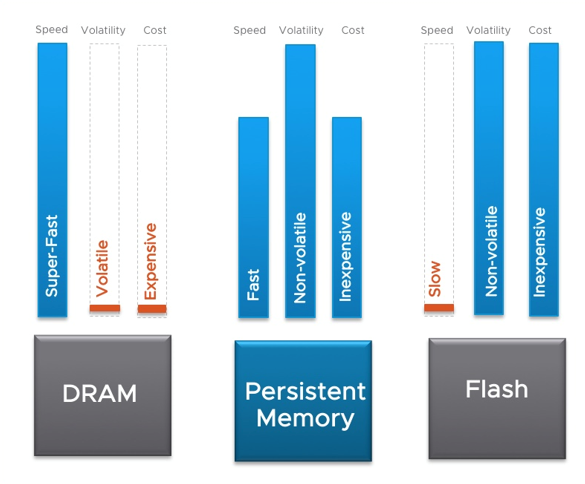

PMEM lies between DRAM and Flash in terms of performance, and it is the true common ground between these two technologies. Just look at how the three technologies compare in the diagram below:

Such properties, together with the ability to prevent data corruption in the event of a failure, make PMEM disks the storage of choice for heavy, performance-hungry applications. For instance, PMEM can potentially be used for heavily loaded databases, real-time computing, and so on.

Currently, vSphere 6.7 supports two PMEM device types available on the market:

- NVDIMM-N (by Dell EMC and HPE) – DIMM devices that have both NAND flash and DRAM modules on a single card. Data is transferred between the two modules during boot, power-off, or any power outage. Such DIMMs are powered from the motherboard to prevent data corruption. Currently, both HPE and Dell EMC manufacture 16 GB NVDIMM-N modules.

- Scalable PMEM (by HPE) – a combination of HPE SmartMemory DIMMs, NVMe SSDs, and batteries that allows creating logical NVDIMMs. In these devices, data is transferred between the DIMMs and the NVMe drives. Scalable PMEM technology can be used in devices with high PMEM capacity.

Just as important, virtualization barely affects PMEM performance: these devices have only 3% virtualization overhead.

Let’s compare the performance of NVMe SSD, NVDIMM-N, and other storage technologies. The table below provides some numbers.

Adding PMEM disks to vSphere virtual machines

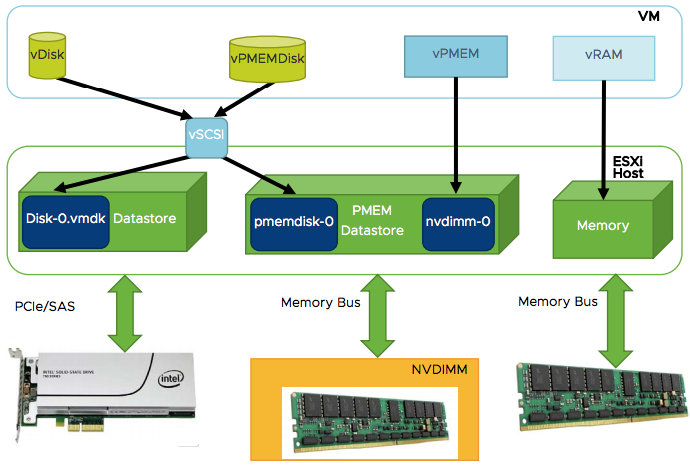

Here’s how PMEM can be presented to VMware vSphere 6.7 virtual machines:

- vPMEMDisk – vSphere presents PMEM to a virtual machine just as if it were a virtual SCSI device. There’s no need to change anything for the guest OS or applications. In this way, vPMEMDisk allows using PMEM in old operating systems and applications.

- vPMEM – vSphere presents PMEM to virtual machines as an NVDIMM device. Most modern operating systems (e.g., Windows Server 2016 and CentOS 7.4) support NVDIMMs and can present them as block devices or byte-addressable devices. Applications can access PMEM through the thin direct-access (DAX) file system layer, or map a part of the device and access it directly with byte-addressable instructions. To use vPMEM, the virtual hardware version should be 14 or higher.
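To make the "map a part of the device" idea more concrete, here is a minimal sketch of memory-mapped access from inside a guest. It assumes the guest has a file system mounted with DAX on the vPMEM device; the path `/mnt/pmem/demo.bin` mentioned in the comments is hypothetical, and the demo uses a temporary file as a stand-in so it runs anywhere. On an ordinary file system the same code goes through the page cache, so it illustrates the programming model rather than PMEM speed.

```python
import mmap
import os
import tempfile


def write_and_read_back(path: str, payload: bytes, size: int = 4096) -> bytes:
    """Map a file into memory, store bytes with plain slice assignment, read them back."""
    with open(path, "r+b") as f:
        # Make sure the backing file is large enough to be mapped.
        f.truncate(size)
        with mmap.mmap(f.fileno(), size) as region:
            # Ordinary memory stores; no read()/write() call per access.
            region[0:len(payload)] = payload
            # Ask the OS to make the stores durable before relying on them.
            region.flush()
            return bytes(region[0:len(payload)])


if __name__ == "__main__":
    # Stand-in for a file on a DAX mount, e.g. the hypothetical /mnt/pmem/demo.bin.
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        demo_path = tmp.name
    try:
        print(write_and_read_back(demo_path, b"hello pmem"))
    finally:
        os.remove(demo_path)
```

Applications that are not PMEM-aware do not need anything like this; with vPMEMDisk they keep using regular file or block I/O.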

To sum up the above, here’s how vPMEM and vPMEMDisk work logically:

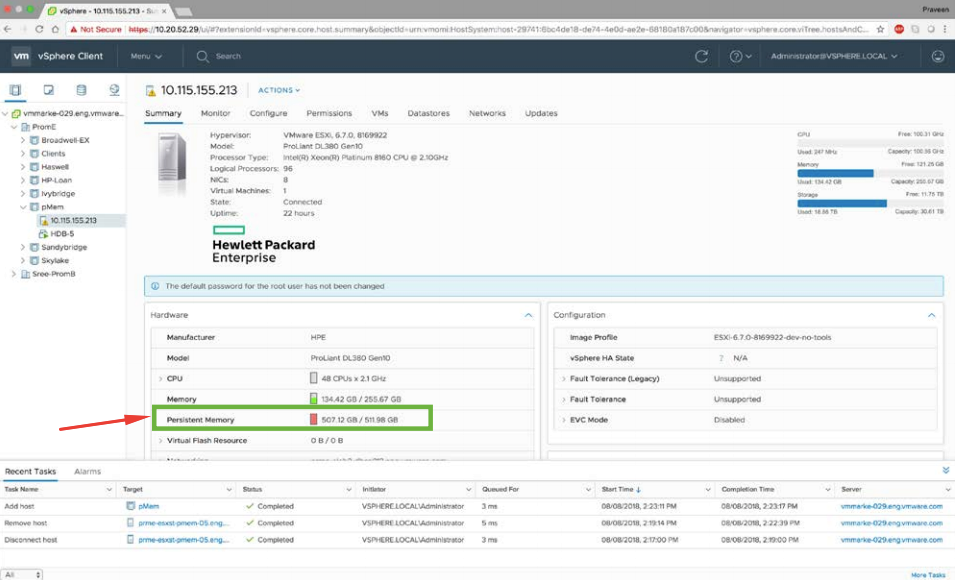

In order to check whether your VMware ESXi host has any PMEM devices on board, go to the Summary tab and check the hardware details. If there are any persistent memory devices, you’ll see their capacity on the list:

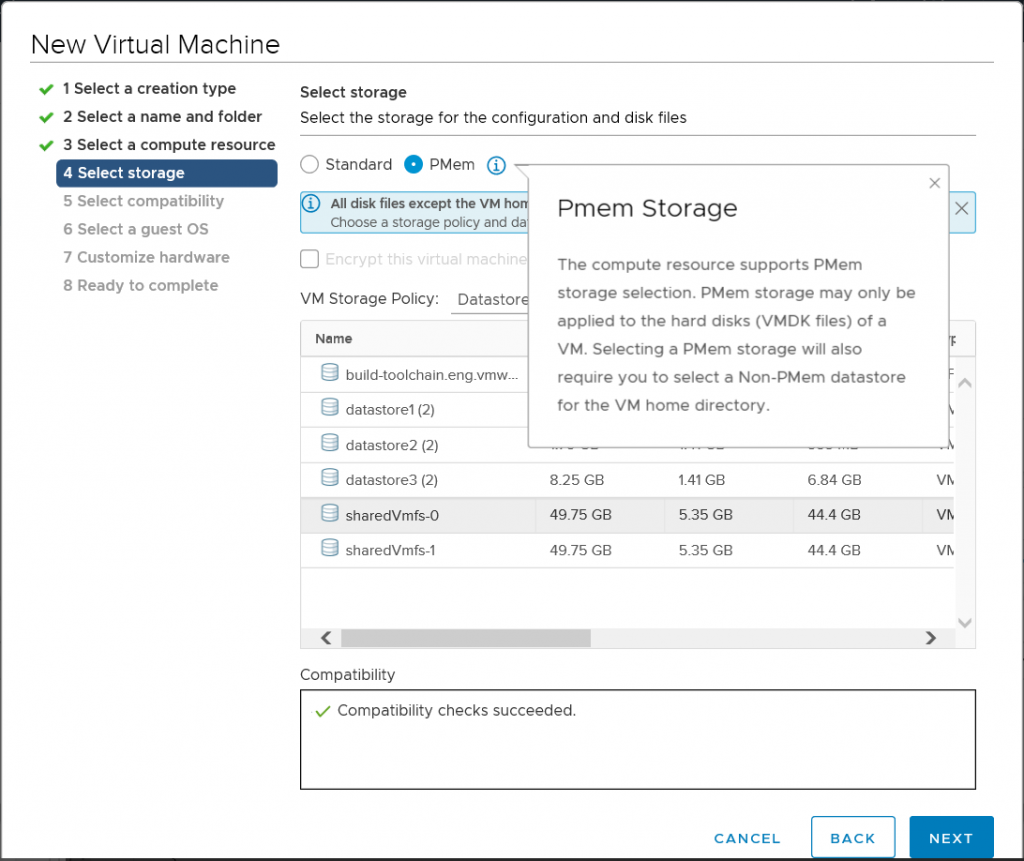

During virtual machine creation, you can choose either the Standard storage or the PMEM one:

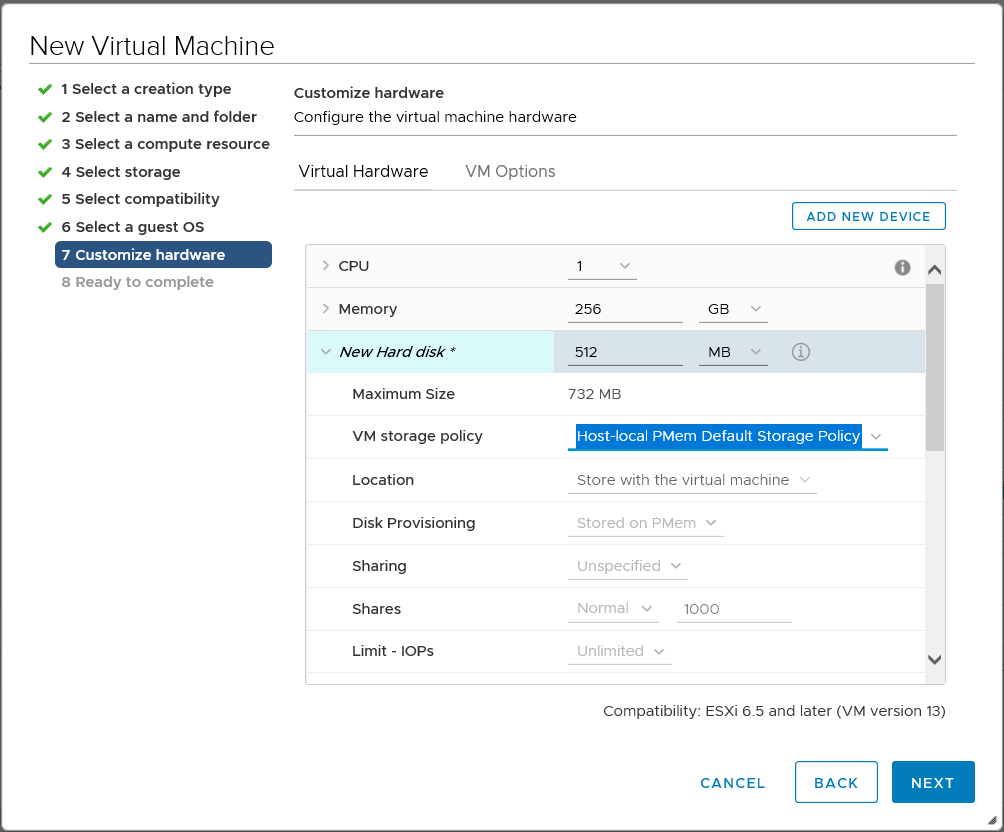

Later, while configuring virtual machine hardware in the Customize hardware tab, you can set the PMEM disk parameters:

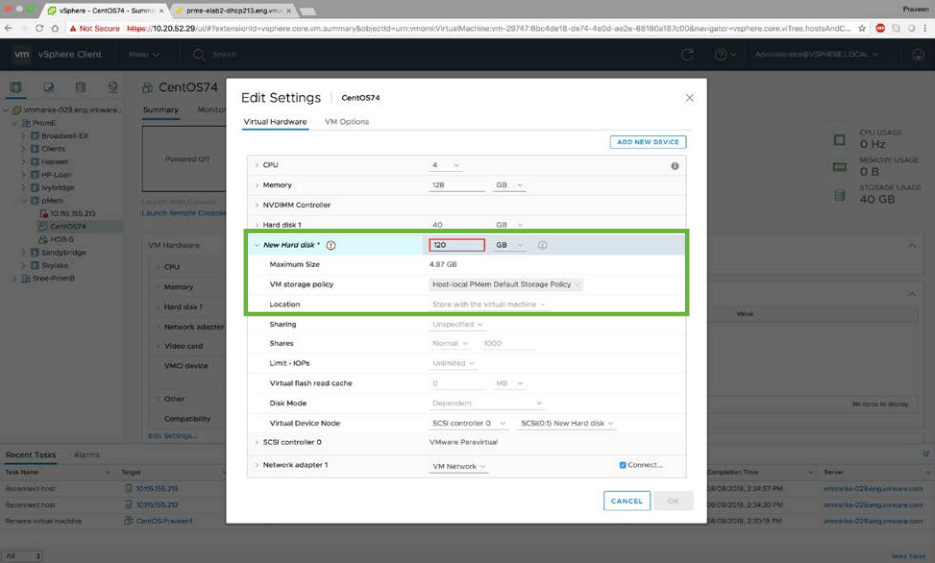

Alternatively, you can just add a vPMEMDisk device to the virtual machine in settings:

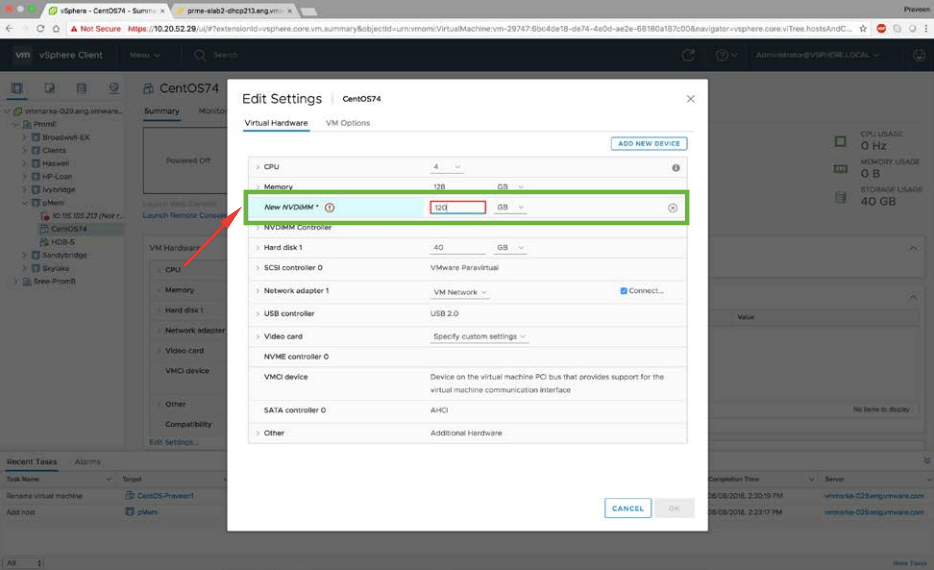

To add a vPMEM disk, just create a new NVDIMM logical storage for a VM. For that purpose, specify the necessary device capacity in the New NVDIMM field:

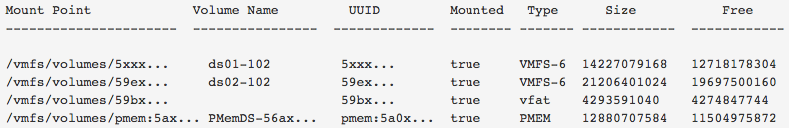

Note that an ESXi host can have only one PMEM datastore. This datastore can hold only NVDIMM storage and virtual machine disks, so you cannot store any other files there (e.g., vmx, log, or iso files). Such a datastore only lets you query information about the devices. Here’s the command that does that:

`# esxcli storage filesystem list`

And here’s what the listing looks like:

Also, note that at this time PMEM datastores are not displayed in the HTML5 vSphere Client at all.
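If you collect the output of `esxcli storage filesystem list` from several hosts, a small script can pick out the PMEM datastore for you. The sketch below is only an illustration: the sample text is made up (it is not a captured listing), the column names follow the usual esxcli header, and the assumption that a PMEM datastore reports `PMEM` in the Type column should be checked against your own host. Splitting on runs of two or more spaces is a simplification that breaks if a field is empty.

```python
import re
from typing import Dict, List

# Made-up sample in the general shape of the esxcli listing (not real output).
SAMPLE_OUTPUT = """\
Mount Point         Volume Name  UUID    Mounted  Type    Size         Free
------------------  -----------  ------  -------  ------  -----------  ----------
/vmfs/volumes/ds1   datastore1   uuid-1  true     VMFS-6  10000000000  5000000000
/vmfs/volumes/pmem  PMemDS-demo  uuid-2  true     PMEM    2000000000   1000000000
"""


def parse_filesystem_list(text: str) -> List[Dict[str, str]]:
    """Turn the table into one dictionary per row, keyed by column name."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    # Drop the ruler line under the header (only dashes and spaces).
    lines = [ln for ln in lines if set(ln) - set("- ")]
    columns = re.split(r"\s{2,}", lines[0])
    return [dict(zip(columns, re.split(r"\s{2,}", ln))) for ln in lines[1:]]


def pmem_datastores(rows: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Keep only the rows whose Type column is PMEM (an assumption, see above)."""
    return [row for row in rows if row.get("Type") == "PMEM"]


if __name__ == "__main__":
    for row in pmem_datastores(parse_filesystem_list(SAMPLE_OUTPUT)):
        print(row["Volume Name"], row["Size"], row["Free"])
```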

Once you power on a VM with PMEM, vSphere reserves some space on that device. This space remains reserved for PMEM regardless of the VM state (powered on or off). You can release the reservation by migrating the VM or deleting it.

As for migrating VMs with PMEM disks, the process depends heavily on the type of device the virtual machine uses. In general, migrating virtual machines with PMEM disks between ESXi hosts involves both vMotion and Storage vMotion. The PMEM device of the target host must have enough space for the virtual machine’s PMEM storage. Note that you can migrate a virtual machine with vPMEMDisk to a host without PMEM devices, but you can’t do that with a VM that uses vPMEM.
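The migration rules above can be written down as a small pre-check. This is only a sketch of the rules as described in this article, not a vSphere API: the `PmemKind`, `TargetHost`, and `can_migrate` names are hypothetical, and the case of a vPMEMDisk VM moving to a host whose PMEM is too small is not covered here, so the sketch conservatively rejects it.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class PmemKind(Enum):
    VPMEM_DISK = "vPMEMDisk"
    VPMEM = "vPMEM"


@dataclass
class TargetHost:
    name: str
    has_pmem: bool = False
    pmem_free_gb: float = 0.0


def can_migrate(kind: PmemKind, vm_pmem_gb: float, target: TargetHost) -> Tuple[bool, str]:
    """Apply the article's rules and return (allowed, reason)."""
    if not target.has_pmem:
        # Only vPMEMDisk VMs can land on a host without PMEM devices.
        if kind is PmemKind.VPMEM_DISK:
            return True, "vPMEMDisk can move to a host without PMEM"
        return False, "vPMEM requires a PMEM device on the target host"
    if target.pmem_free_gb >= vm_pmem_gb:
        return True, "target has enough free PMEM"
    # Not covered by the article for vPMEMDisk; rejected here to stay safe.
    return False, "not enough free PMEM on the target host"


if __name__ == "__main__":
    plain_host = TargetHost("esxi-02")
    pmem_host = TargetHost("esxi-03", has_pmem=True, pmem_free_gb=16)
    print(can_migrate(PmemKind.VPMEM_DISK, 8, plain_host))
    print(can_migrate(PmemKind.VPMEM, 8, plain_host))
    print(can_migrate(PmemKind.VPMEM, 8, pmem_host))
```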

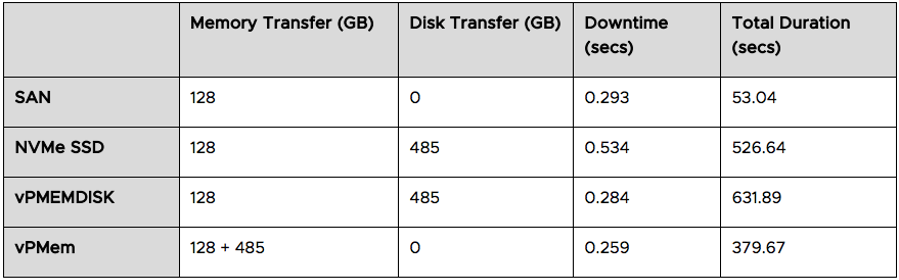

As usual with vMotion, there’s almost no downtime during the migration. In the worst case, a VM with NVMe SSD storage loses connection for half a second while migrating:

VMware DRS and DPM fully support PMEM. Still, the DRS mechanism prevents you from migrating a virtual machine that uses PMEM to a host without a PMEM device.

What about PMEM devices performance?

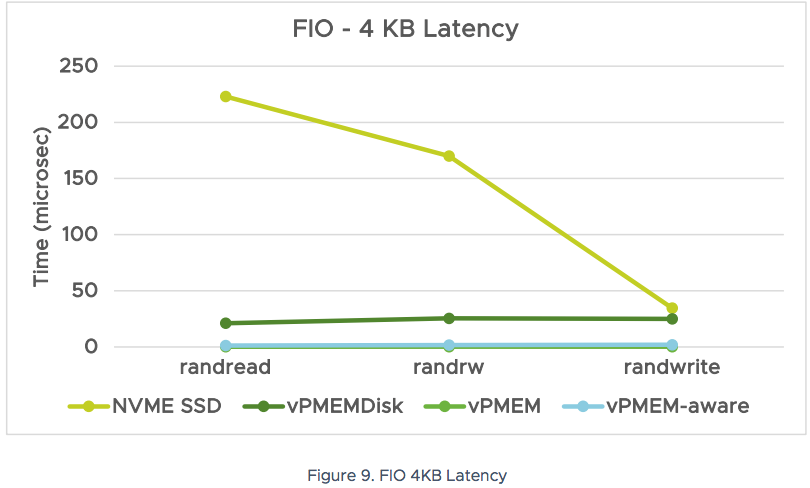

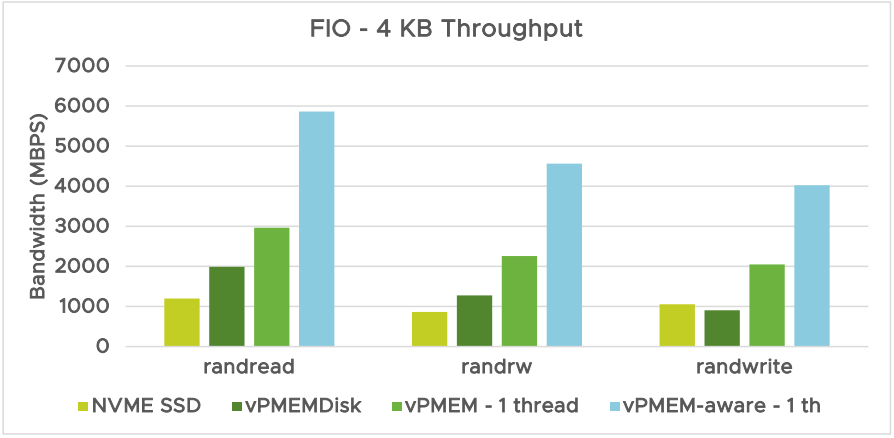

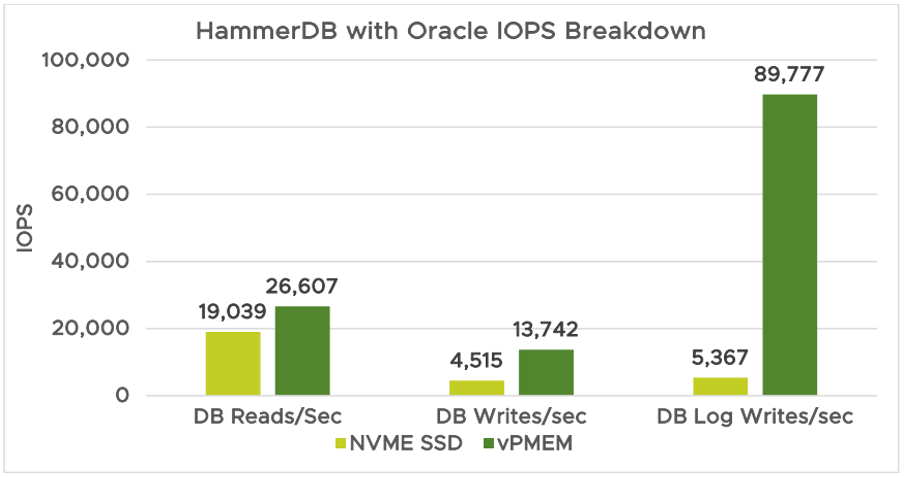

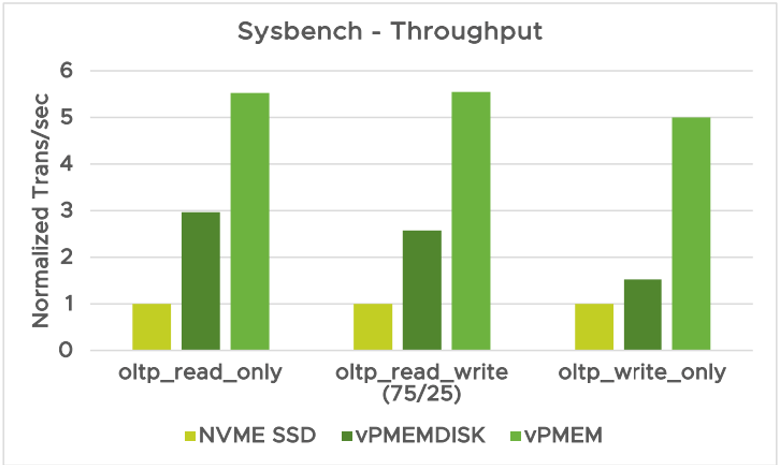

Now, let’s look at how fast PMEM can go! VMware has recently tested PMEM with numerous benchmarks. The results are available in the Persistent Memory Performance on vSphere 6.7 – Performance Study document. Here are some plots from that report.

Benchmarking Latency

vPMEM-aware – the guest OS works with PMEM via direct access.

Measuring the throughput

HammerDB with Oracle IOPS breakdown

Sysbench throughput measurement (in Transactions per second) while working with MySQL database

Conclusion

In this article, I discussed how PMEM works and provided some numbers on its performance. This technology is a true middle ground between fast but volatile DRAM and slower but non-volatile Flash/SSDs. For more details, check out the performance report itself. More details about using Persistent Memory in VMware vSphere 6.7 are available here and here.