Introduction

SMB Direct is a fantastic technology that brings many benefits, and they are too good to leave on the table. Still, some planning on how to use it goes a long way toward optimizing your experience. To plan well you need to understand both the technology and your environment. A great aid in achieving this is a map of your environment. Studying the map helps you determine where to go, what route to take, why, when and how.

Figure 1: Photo by Hendrik Morkel on Unsplash

For some reason, people tend to jump right in without looking at the map, if they even have one. Unless you already know the territory, this can lead to a lot of wasted time and energy. So please study your environment, look at the landscape, map it and get your bearings before acting. Let’s start!

What is SMB Direct?

SMB Direct is SMB 3 traffic over RDMA. So, what is RDMA? DMA stands for Direct Memory Access. This means that an application can read and write a host’s memory directly, without CPU intervention. If you do this between hosts it becomes Remote Direct Memory Access (RDMA).

Fair enough, but why are we interested in this? Well, because of the “need for speed”. You want the great throughput at low latency that RDMA offers. Because of how RDMA achieves this, you also save on CPU cycles and cache.

Figure 2: Photo by Cédric Dhaenens on Unsplash

What’s not to like about all that? So how does RDMA achieve this? When reading about RDMA you’ll notice some terminology used to describe the benefits. Terminology like “Zero Copy”, “Kernel Bypass”, “Protocol Offload” and “Protocol Acceleration”.

How RDMA works

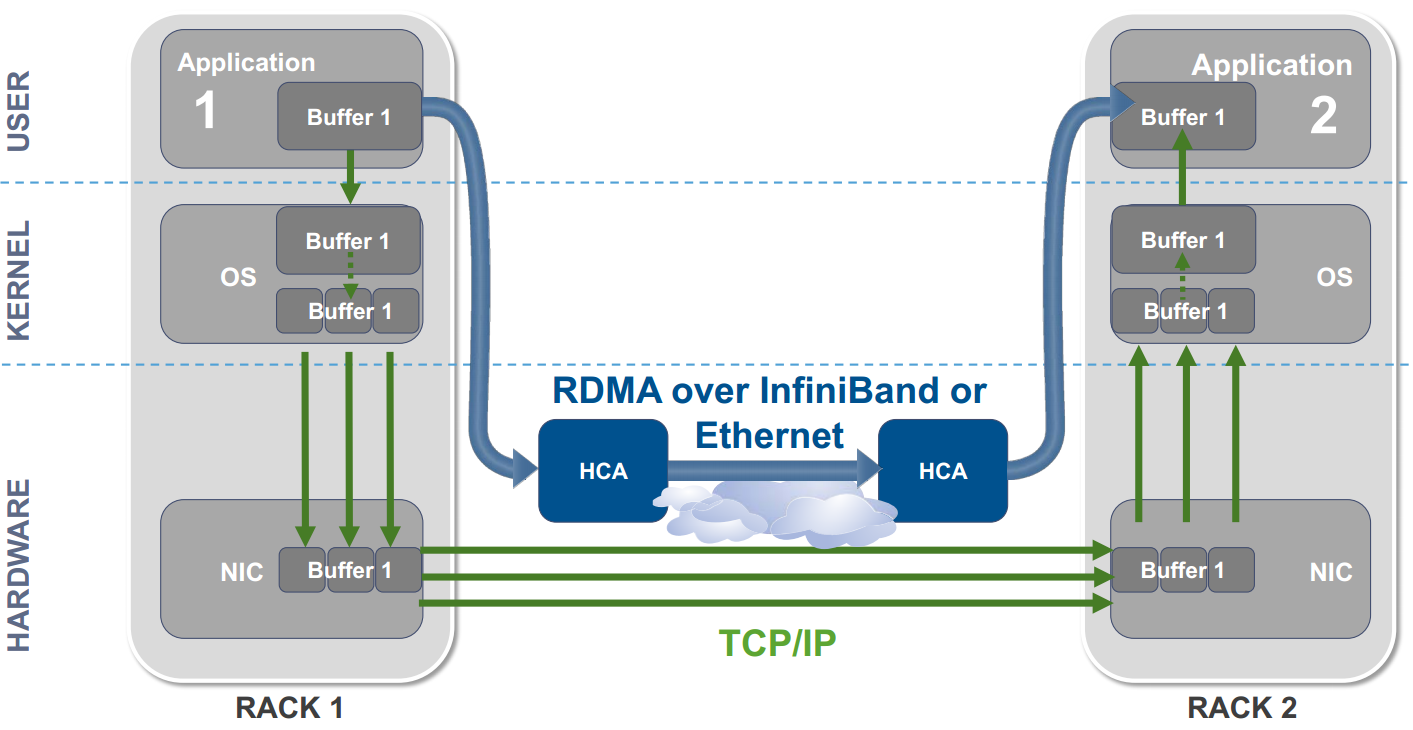

RDMA works by copying data from a user application’s memory on one host directly into the user application’s memory on another host via the hardware path (NIC & network). These are the blue lines in Figure 3. The green lines depict traditional TCP/IP traffic as you have known it for a long time.

Figure 3: RDMA traffic & TCP/IP traffic (image courtesy of Mellanox)

Do note that if the application resides in the kernel space it “only” bypasses the OS Stack and the system drivers, but this still provides a significant benefit.

Zero Copy & Kernel Bypass

These terms refer to the speed gains RDMA offers because, in contrast to normal TCP/IP behavior, the data does not have to be copied multiple times. With TCP/IP, the data is first copied from the application’s memory buffer into kernel memory buffers. From there it is passed along by yet another copy action to the NIC, which sends it over the network to the other host. On that other host the reverse process has to happen: data in the NIC memory buffers is copied to the kernel space, where it gets copied again into the application’s memory buffer in user space.

You notice that there is a lot of copying going on, which is the overhead that RDMA avoids (Zero Copy). By doing so it also avoids the context switches between user space and kernel space (Kernel Bypass). This speeds things up tremendously.
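To make the difference tangible, here is a minimal toy model in Python. It is not RDMA code and it measures nothing. It simply counts the copy actions and context switches as they are described in the text above. All names (`PathCost`, `host_copy`, `nic_transfer`, `tcp_style_transfer`, `rdma_style_transfer`) are hypothetical and made up for this illustration, and the counts follow the simplified description in this article rather than any particular network stack.

```python
from dataclasses import dataclass


@dataclass
class PathCost:
    host_copies: int = 0       # buffer-to-buffer copies made on the hosts
    context_switches: int = 0  # user space <-> kernel space transitions


def host_copy(src, dst, cost):
    """Copy src into dst on the host and count it as one copy action."""
    dst[:len(src)] = src
    cost.host_copies += 1


def nic_transfer(src, dst):
    """Model the NIC-to-NIC hop over the network; not a host copy."""
    dst[:len(src)] = src


def tcp_style_transfer(app_data, remote_app_buf):
    """Follow the traditional path described in the text (simplified)."""
    cost = PathCost()
    n = len(app_data)
    kernel_tx, nic_tx = bytearray(n), bytearray(n)
    nic_rx, kernel_rx = bytearray(n), bytearray(n)

    cost.context_switches += 1                   # sender enters the kernel
    host_copy(app_data, kernel_tx, cost)         # application -> kernel
    host_copy(kernel_tx, nic_tx, cost)           # kernel -> NIC
    nic_transfer(nic_tx, nic_rx)                 # over the network
    host_copy(nic_rx, kernel_rx, cost)           # NIC -> kernel
    host_copy(kernel_rx, remote_app_buf, cost)   # kernel -> application
    cost.context_switches += 1                   # receiver back to user space
    return cost


def rdma_style_transfer(app_data, remote_app_buf):
    """The NIC reads the application buffer in place and the remote NIC
    writes straight into the remote application buffer."""
    cost = PathCost()
    nic_transfer(memoryview(app_data), remote_app_buf)
    return cost


message = b"block of file data"
tcp_dst = bytearray(len(message))
rdma_dst = bytearray(len(message))
tcp_cost = tcp_style_transfer(message, tcp_dst)
rdma_cost = rdma_style_transfer(message, rdma_dst)
print("TCP/IP style:", tcp_cost)
print("RDMA style:  ", rdma_cost)
```

In this model both paths deliver the same bytes. The TCP/IP style path tallies four copy actions and two context switches, while the RDMA style path tallies none. Real stacks differ in the details, but the direction of the difference is the point.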

CPU Offload / Bypass

But that’s not all. The work is actually offloaded to the NIC, so it bypasses the CPU of the hosts (CPU Offload or Bypass). This has two advantages:

Applications can access (remote) memory without kernel and user space processes consuming any host CPU cycles for reading and writing.

The caches in the CPU(s) won’t be filled with the accessed memory content.

The CPU cycles and cache can be used for the actual application workload and not for moving data around. The benefit normally ranges from a 20 to 25% reduction in CPU overhead. Excellent!

Transport Protocol Acceleration

Finally, RDMA can do something called protocol acceleration. Message-based transactions and the ability to do gather/scatter, where multiple buffers are read and sent as one message and then written to multiple receiving buffers, speed up the actual data movement. There are many details to this. Presentations and publications from SNIA (Storage Networking Industry Association) and academic researchers are readily available on the internet and can become quite technical.
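The gather/scatter idea itself is not unique to RDMA, so it can be shown with ordinary sockets. The sketch below is an analogy only: it uses Python’s standard `socket.sendmsg()` and `socket.recvmsg_into()` over a local datagram socket pair, so the data still goes through the kernel and nothing is offloaded to a NIC. It assumes a Unix-like system (these calls are not available on Windows), and the function name `gather_send_scatter_recv` and the sample buffers are made up for the illustration.

```python
import socket


def gather_send_scatter_recv(parts, recv_sizes):
    """Send several buffers as one message, then receive that message
    into several pre-allocated buffers."""
    # A connected pair of datagram sockets: each sendmsg() is one message.
    sender, receiver = socket.socketpair(socket.AF_UNIX, socket.SOCK_DGRAM)
    try:
        # Gather: the separate buffers go out as a single message without
        # first being joined into one contiguous buffer by the application.
        sent = sender.sendmsg(parts)

        # Scatter: the incoming message is spread over the target buffers,
        # filling each one in turn.
        targets = [bytearray(size) for size in recv_sizes]
        received, _ancdata, _flags, _addr = receiver.recvmsg_into(targets)
        return sent, received, targets
    finally:
        sender.close()
        receiver.close()


header = b"HDR:"
payload = b"payload-bytes"
trailer = b":END"

sent, received, targets = gather_send_scatter_recv(
    [header, payload, trailer],
    [len(header), len(payload), len(trailer)],
)
print(sent, received, [bytes(t) for t in targets])
```

Three buffers leave as one message and arrive back in three buffers, with no manual joining or splitting in the application. RDMA capable NICs do this kind of work in hardware, which is where the acceleration comes from.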

I could have dived into this subject a bit more, but I’m not a network-level developer and I simplify the complexities as I try to understand and communicate them. To truly understand the nitty-gritty details you must put in some effort and study up.

The takeaway message is that RDMA protocols are inherently fast.

Why do we need it?

Outside of high-performance computing, we have seen a large and continuing rise in all kinds of East-West traffic over the past decade. This started with virtualization which, together with virtual machine mobility, poses a performance & scalability challenge for many resources (network, storage, compute). On top of that, we see trends like Hyper-Converged Infrastructure (HCI), storage replication and other use cases consuming ever more bandwidth. Meanwhile, the need for ultra-low latency increases as we get faster storage options (NVMe, various types of NVDIMM (N, F, P) or Intel’s 3D XPoint), which leads to new architectures around their capabilities.

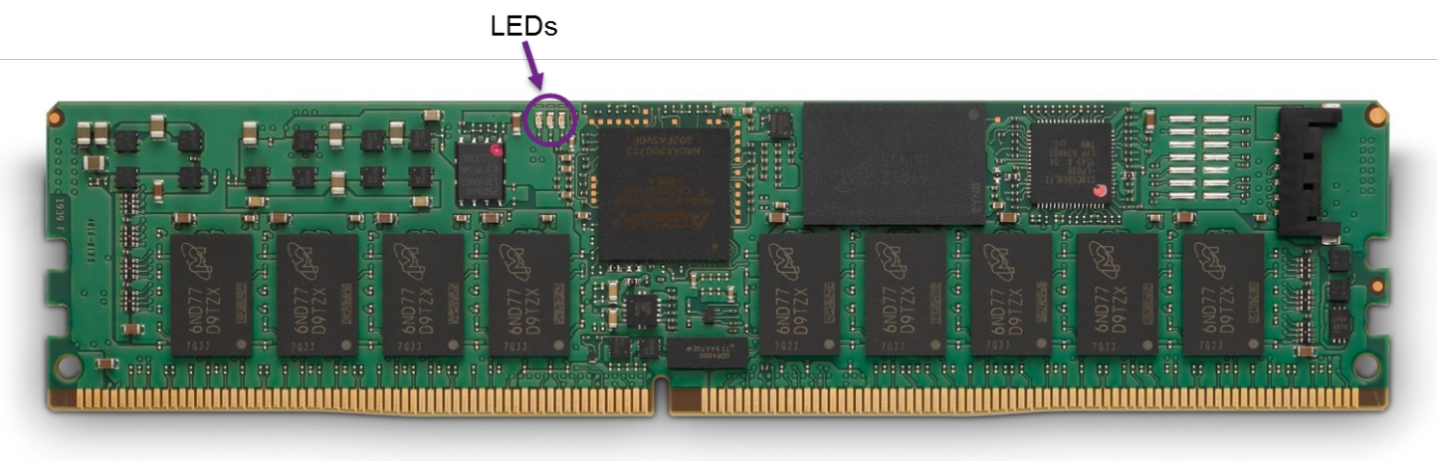

Figure 4: Make no mistake, Non-Volatile DIMM (in all its variations) will drive the need for RDMA even further (image courtesy of DELL)

In the Microsoft ecosystem this is addressed by ever more types of traffic leveraging SMB Direct. Think about Cluster Shared Volumes (CSV) redirection, various types of Live Migration, S2D storage traffic, Storage Replica and backups to file shares. Storage over file system shares is also highly demanding and has become more popular.

When you look at the features of RDMA it’s clear why SMB Direct matters so much today to Microsoft and why RDMA generally is becoming ever more important to the industry.

By using RDMA we avoid being slowed down, perhaps even to the point where SMB network traffic without RDMA would burden the CPU too much to be feasible. We also prevent latency from becoming too high, or throughput from dropping too low, for modern storage architectures to function well. It also ensures that we don’t waste the money we spent on our very capable commodity hardware (switches, NICs, storage and servers) and that we leverage the full capabilities of our investment in Windows.

We have looked at what SMB Direct is and explained the benefits of RDMA. In Part II we’ll take a first look at the various flavors of RDMA, the relationship between RoCE RDMA and DCB, and why that matters. In Part III we will dive deeper into the flavors of RDMA that SMB Direct can leverage and what the current landscape looks like.