Flavors of RDMA

In Part I we looked at SMB Direct, what it has to do with RDMA and why it matters. In Part II we will look at which flavors of RDMA are available for use with SMB Direct. On the whole, it’s safe to say that there are three RDMA options available today for use with SMB Direct in Windows Server 2012 (R2), 2016 and 2019 (in preview at the time of writing). Those options are InfiniBand, RoCE and iWARP.

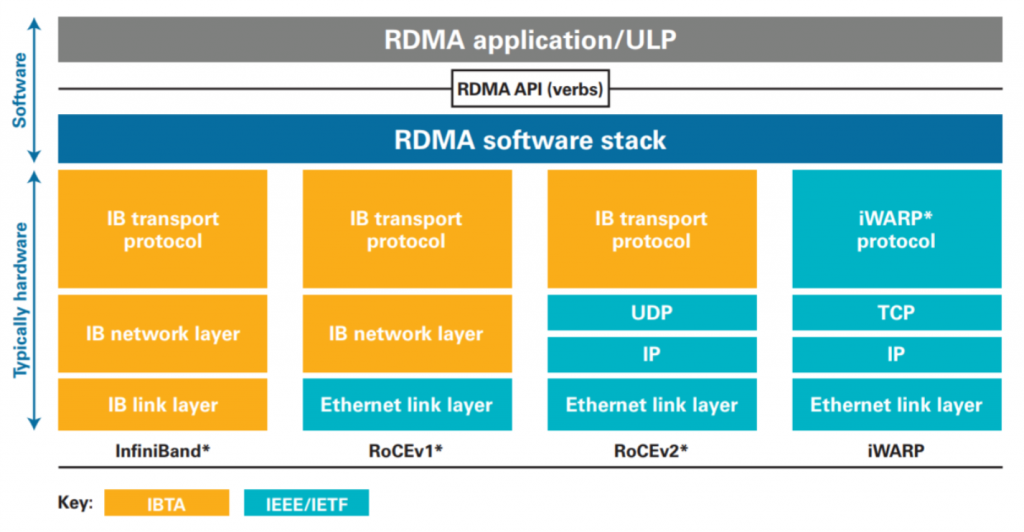

Figure 1: RDMA options – Image courtesy of SNIA

In a nutshell:

- InfiniBand is the odd one out, not being Ethernet

- Both iWARP and RoCE provide RDMA over Ethernet

- RoCEv1 is basically InfiniBand transport & network over Ethernet

- RoCEv2 enhances RoCEv1 and uses a UDP/IP header (not TCP/IP), which brings layer 3 routability, but it still uses InfiniBand transport on top of Ethernet

- iWARP is layered on top of TCP/IP => Offloaded TCP/IP flow control and management

- Both iWARP and RoCE (and InfiniBand) support verbs. Verbs allow applications to leverage any type of RDMA.
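The layering described in the list above can be written down as a small lookup table. This is only a simplified sketch: the layer names and the helper functions `runs_on_ethernet` and `ip_routable` are my own illustrative labels, not anything taken from a specification or a vendor API.

```python
# Simplified header stacks per RDMA flavor, outermost layer first.
# The layer names are illustrative labels, not exact header names.
STACKS = {
    "InfiniBand": ["IB link", "IB network", "IB transport"],
    "RoCEv1": ["Ethernet", "IB network", "IB transport"],
    "RoCEv2": ["Ethernet", "IP", "UDP", "IB transport"],
    "iWARP": ["Ethernet", "IP", "TCP", "iWARP protocols"],
}


def runs_on_ethernet(flavor: str) -> bool:
    """True when the flavor uses Ethernet as its link layer."""
    return STACKS[flavor][0] == "Ethernet"


def ip_routable(flavor: str) -> bool:
    """True when the stack carries an IP header and can cross layer 3 hops."""
    return "IP" in STACKS[flavor]


for flavor in STACKS:
    print(f"{flavor:<10} ethernet={runs_on_ethernet(flavor)} "
          f"ip_routable={ip_routable(flavor)}")
```

Reading the table this way makes the difference between RoCEv1 and RoCEv2 obvious: the transport on top stays the same, only the network layer underneath changes.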

Next to InfiniBand, iWARP and RoCE, there is also Intel Omni-Path. This is an evolution of a technology (TrueScale) Intel bought from QLogic. For now, it seems to be competing mainly with InfiniBand and is focused on High-Performance Computing (HPC). It’s still very new, and for the Wintel community interested in S2D, HCI, Hyper-V and other Microsoft deployments it’s not yet available, so it’s not on the radar screen that much. As far as Intel goes, their renewed investment in iWARP is the more interesting one with regard to SMB Direct right now, especially after they had been out of the game since their legacy NetEffect (which they acquired) NE20 cards went extinct in 2014.

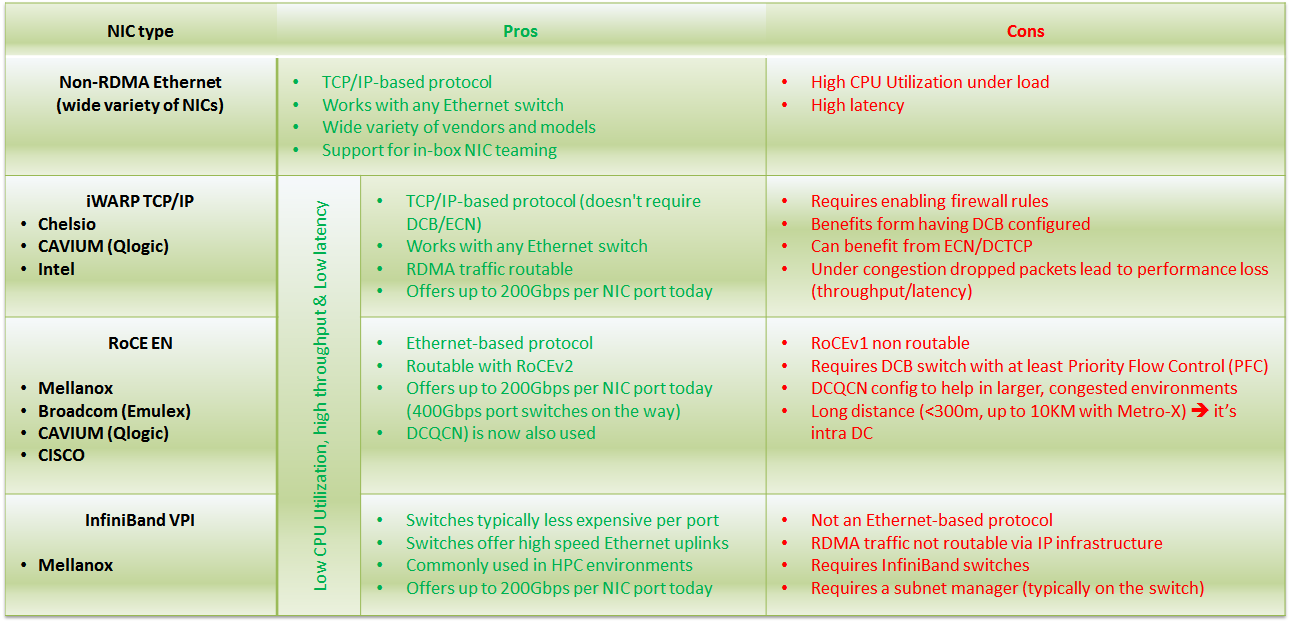

So, let’s look a bit closer at the three flavors of RDMA for use with SMB Direct in a handy table listing some pros and cons. Some are real, some are perceived.

Figure 2: RDMA options for use with SMB Direct

Whatever the flavor, I’ll be very clear about one thing. High throughput and low latency are two goals that are at odds with each other. RDMA strives to optimize both and achieves this marvel of engineering through CPU offload, kernel bypass, zero copy and by avoiding packet drops as much as possible (a “lossless” network). The reality is that latency can and will rise under congestion and, as a result, throughput will drop. RDMA rocks but it’s not magic and won’t save you from bad network designs with bottlenecks and congestion. To deal with the ever-growing needs, switches become ever more capable, as do the NICs. 10Gbps has become a LOM option nowadays and a 100Gbps NIC is something you buy as a commodity, as are the switches. But no matter how capable the hardware is and how many resources are available, proper design is paramount to avoid getting into trouble. In a way, it reminds me of the days when the first 10Gbps switches made it into the networks (2010-2011) and how we designed for successful implementations as well as for dealing with the concerns some network experts had with them.

Reality Check: PCIe 3 and moving beyond dual port 25Gbps NICs

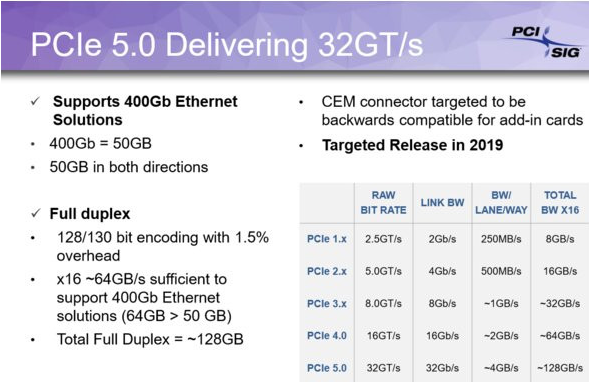

When we look at what PCIe 3 can achieve, it’s clear we need a breakthrough to keep up with the rapid pace at which NIC and switch port speeds evolve. PCIe 4 was supposed to help with that, but it’s arriving very late to the party. PCIe 5 is already actively being discussed while we still have to see our first servers with PCIe 4 arrive.

Figure 3: Image courtesy of PCI SIG

The PCIe 3 x8 slots can handle a dual 25Gbps NIC but when using 40Gbps or above you’d better stick to 1 port per slot. In the near future and with an eye on 100Gbps and bigger the lure of PCIe 5 might make PCIe 4 short-lived.
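A quick back-of-the-envelope calculation shows where that limit comes from. The sketch below assumes the PCIe 3.0 signaling rate of 8 GT/s per lane with 128b/130b encoding and ignores packet and protocol overhead, so real-world throughput will be somewhat lower. The function names are mine and purely illustrative.

```python
# PCIe 3.0 signals at 8 GT/s per lane and uses 128b/130b line encoding.
GT_PER_LANE = 8.0
ENCODING_EFFICIENCY = 128 / 130


def pcie3_gbps(lanes: int) -> float:
    """Approximate raw bandwidth per direction in Gbps (no protocol overhead)."""
    return GT_PER_LANE * ENCODING_EFFICIENCY * lanes


def fits(lanes: int, ports: int, port_gbps: float) -> bool:
    """True when all ports running at line rate fit in the slot's bandwidth."""
    return ports * port_gbps <= pcie3_gbps(lanes)


for lanes, ports, speed in [(8, 2, 25), (8, 1, 40), (8, 2, 40), (16, 1, 100)]:
    verdict = "fits" if fits(lanes, ports, speed) else "does not fit"
    print(f"x{lanes}: {ports} x {speed}Gbps {verdict} "
          f"in {pcie3_gbps(lanes):.1f} Gbps")
```

An x8 slot comes out at roughly 63 Gbps per direction, which is why a dual 25Gbps NIC is fine but a dual 40Gbps NIC cannot run both ports at line rate.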

If we leave InfiniBand out of the discussion for a moment, you’ll find that the big discussion between RoCE and iWARP revolves around needing a lossless network or not. When a lossless network is required or beneficial, it becomes a discussion about the complexity of achieving that. DCB (Data Center Bridging) came to life for converged networking and is a major component in all this. To understand the discussion around DCB we need to know what it is. So, let’s get that out of the way first.

What is DCB?

DCB is a suite of protocol standards (IEEE) focused around enabling converged networks in the datacenter. Configuration needs to be done on the endpoints (OS), the NICs and the switches.

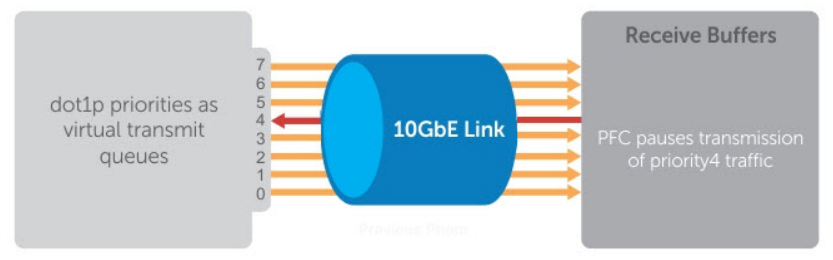

Priority-based Flow Control (PFC)

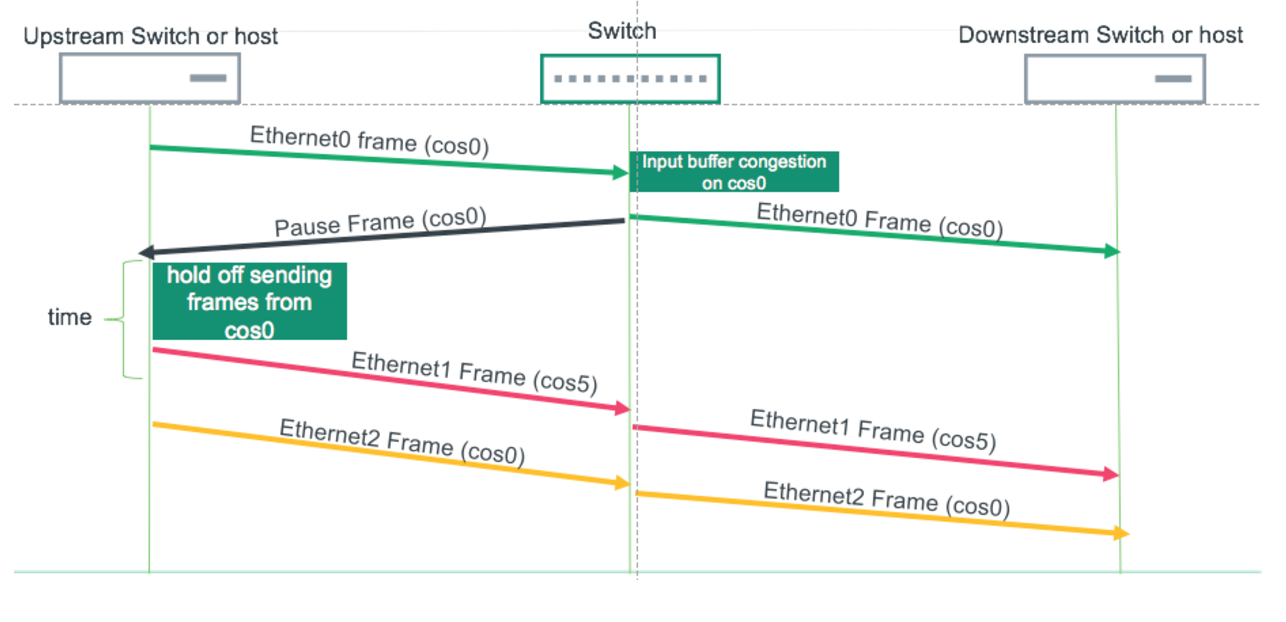

PFC provides a link-level, flow-control mechanism that can be independently controlled for each priority to ensure zero-loss due to converged-network congestion.

Figure 3: Image courtesy of DELL Technologies

Figure 4: PFC. Image courtesy of Cumulus Networking Inc. Note that PFC works hop to hop and not end to end.

This means that with PFC only the traffic configured and tagged for the lossless priority will be paused when needed. This is a better approach than pausing all the network traffic, as is the case with Global Pause, because not all traffic needs to be lossless.
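The difference between PFC and Global Pause can be shown with a toy model of a sending port. This is a minimal sketch, not how a real NIC or switch is implemented: the `EgressPort` class and its methods are hypothetical, and using priority 3 for SMB Direct is just an assumption for the example.

```python
from collections import deque

NUM_PRIORITIES = 8  # 802.1p defines eight priority values, 0 through 7


class EgressPort:
    """Toy sender with one queue per priority (hypothetical model)."""

    def __init__(self):
        self.queues = {p: deque() for p in range(NUM_PRIORITIES)}
        self.paused = set()

    def enqueue(self, priority, frame):
        self.queues[priority].append(frame)

    def receive_pfc_pause(self, priorities):
        # PFC: pause only the listed priorities.
        self.paused.update(priorities)

    def receive_global_pause(self):
        # Global Pause: everything on the link stops.
        self.paused.update(range(NUM_PRIORITIES))

    def resume(self, priorities):
        self.paused.difference_update(priorities)

    def transmit(self):
        """Send every queued frame whose priority is not paused."""
        sent = []
        for p in range(NUM_PRIORITIES):
            if p in self.paused:
                continue
            while self.queues[p]:
                sent.append(self.queues[p].popleft())
        return sent


port = EgressPort()
port.enqueue(3, "smb-direct-frame")  # assumed lossless priority
port.enqueue(0, "web-frame")         # best-effort traffic
port.receive_pfc_pause({3})
print(port.transmit())  # the best-effort frame still leaves
port.resume({3})
print(port.transmit())  # the held SMB Direct frame leaves now
```

With `receive_global_pause()` instead of `receive_pfc_pause({3})`, the first `transmit()` would send nothing at all, which is exactly the behavior PFC avoids.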

Enhanced Transmission Selection (ETS)

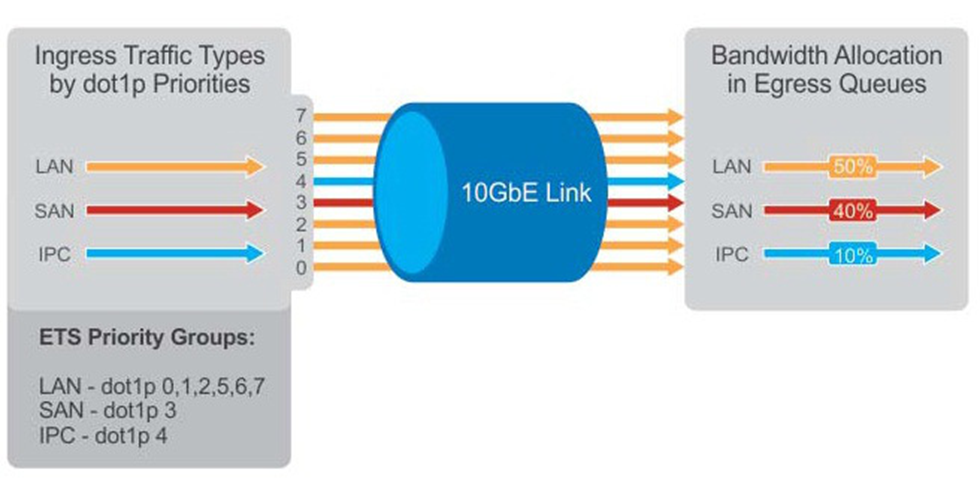

ETS provides a common management framework for bandwidth assignment to traffic classes, so it’s QoS management.

Figure 5: Image courtesy of DELL Technologies

With ETS we can leverage the priority tag to implement Quality of Service by mapping priorities to classes to which we assign a minimum bandwidth. When congestion occurs, minimum bandwidth is guaranteed as configured making sure the workloads get the share they need as defined by the users.
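To make the idea of a guaranteed minimum concrete, here is a simplified sketch of how bandwidth could be divided among traffic classes under congestion. It is an illustrative model only. Real schedulers in NICs and switches work differently, and the function name `ets_allocate`, the class names and the percentages are all assumptions for the example.

```python
def ets_allocate(link_gbps, shares, demand):
    """Divide link bandwidth among traffic classes.

    shares: class name -> configured minimum bandwidth percentage
    demand: class name -> offered load in Gbps
    Bandwidth a class does not use is handed to classes that still want more.
    """
    alloc = {c: 0.0 for c in shares}
    active = {c for c in shares if demand.get(c, 0) > 0}
    remaining = float(link_gbps)
    while active and remaining > 1e-9:
        total_weight = sum(shares[c] for c in active)
        if total_weight == 0:
            break
        satisfied = set()
        granted = 0.0
        for c in active:
            offer = remaining * shares[c] / total_weight
            grant = min(offer, demand[c] - alloc[c])
            alloc[c] += grant
            granted += grant
            if alloc[c] >= demand[c] - 1e-9:
                satisfied.add(c)
        remaining -= granted
        if not satisfied:
            break  # everyone is capped by their share, nothing left to hand out
        active -= satisfied
    return alloc


shares = {"smb_direct": 50, "default": 50}
# Both classes want more than their share: each is held to its minimum.
print(ets_allocate(25, shares, {"smb_direct": 20, "default": 20}))
# SMB Direct is quiet: the default class may borrow the unused bandwidth.
print(ets_allocate(25, shares, {"smb_direct": 5, "default": 30}))
```

The point is that the configured percentage is a floor under congestion, not a cap. When a class does not use its share, the others can take it.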

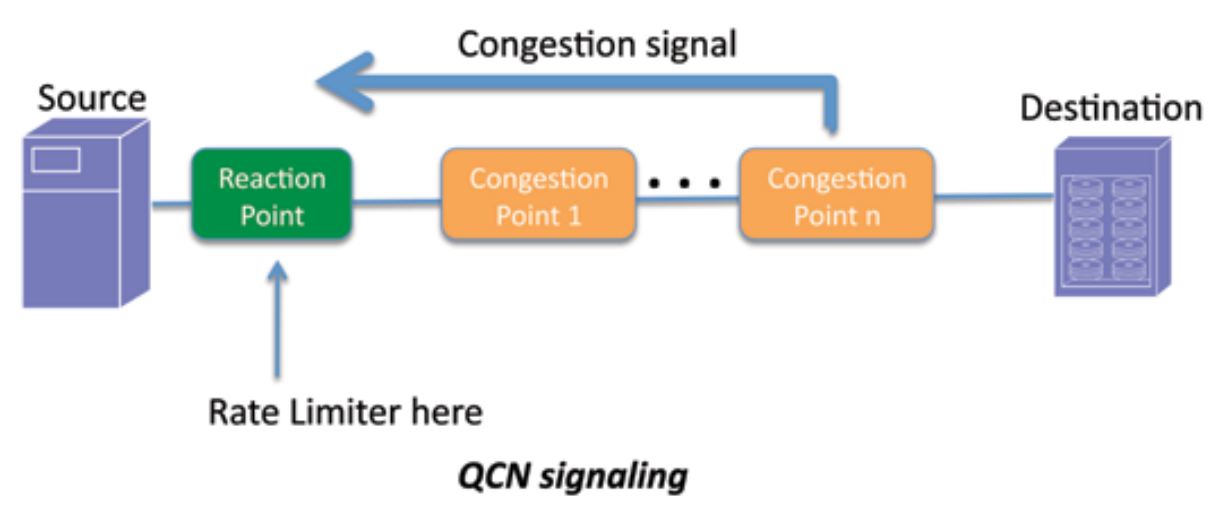

Quantified Congestion Notification (QCN)

QCN provides end to end congestion management for protocols without built-in congestion-control mechanisms. It’s also expected to benefit protocols with existing congestion management by providing more timely reactions to network congestion. QCN is layer 2 only, so not routable.

Figure 6: Image source unknown

If you look at this conceptually, you should find that it matches what ECN does, except that ECN works over layer 3 with TCP/IP. Also note that QCN isn’t very widespread in implementation; some would call it a unicorn.

Data Center Bridging Exchange Protocol (DCBx)

DCBx is a discovery and capability exchange protocol used to convey capabilities and configurations of the other three DCB features between neighbours to ensure consistent configuration across the network. It also detects and communicates mismatches between peers. DCBx is an extension of LLDP, so LLDP needs to be enabled.
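Conceptually, the mismatch detection boils down to comparing what each side of a link has configured. The sketch below shows that comparison on plain dictionaries. It does not parse real LLDP or DCBx TLVs, and the dictionary keys, the function name `find_mismatches` and the example values are all hypothetical.

```python
def find_mismatches(local, peer):
    """Compare two simplified DCB configurations and describe the differences.

    Each config is a dict with:
      "pfc_priorities": set of priorities that have PFC enabled
      "ets_percent":    traffic class number -> bandwidth percentage
    """
    problems = []
    local_pfc = set(local["pfc_priorities"])
    peer_pfc = set(peer["pfc_priorities"])
    if local_pfc != peer_pfc:
        problems.append(
            f"PFC priorities differ: local {sorted(local_pfc)} "
            f"vs peer {sorted(peer_pfc)}"
        )
    classes = set(local["ets_percent"]) | set(peer["ets_percent"])
    for tc in sorted(classes):
        mine = local["ets_percent"].get(tc)
        theirs = peer["ets_percent"].get(tc)
        if mine != theirs:
            problems.append(f"ETS class {tc}: local {mine}% vs peer {theirs}%")
    return problems


host = {"pfc_priorities": {3}, "ets_percent": {0: 50, 1: 50}}
switch = {"pfc_priorities": {3, 4}, "ets_percent": {0: 40, 1: 60}}
for problem in find_mismatches(host, switch):
    print(problem)
```

An empty result means both ends agree. Anything else is the kind of inconsistency that, left undetected, leads to lossless traffic being dropped on one side of a hop.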

The challenge DCB tries to address

Basically, the challenge is convergence. This is when more types of network traffic, such as storage and data traffic, run on the same fabric. The challenge has been around for a long time (ever since convergence became a thing) and it always comes down to how to serve all workloads at the throughput and latencies they require when they run on the same network links. As the push for convergence and hyper-convergence grew with evolving technologies, the need to manage this grew as well, and with it the number of ways to do so.

In the end it’s about more capacity (bigger pipes), more capable network gear and better capabilities to make convergence work. Bigger pipes always seem to beat QoS in ease of use, because QoS is only about dividing the available bandwidth fairly when needed. If you don’t have enough bandwidth, QoS won’t help sufficiently anymore. Congestion control is the other issue and, depending on the workload, it is more or less problematic. When it comes to storage, congestion is a big issue as it causes latency to increase and bandwidth to decrease. The effects of high latency can be disastrous (see Latency kills). What helps best in fighting congestion is having more resources available; this always beats managing limited resources. But even then, congestion can occur and you need to handle it as elegantly as possible to make sure things keep working well. When your protocol needs a lossless network, managing congestion when it does occur is crucial. That’s where DCB plays its role. The challenge is to configure it right and make it scale, which has been a discussion point for many years, and everyone in that discussion has their own agenda and needs. We’ll take a look at that discussion in Part III.

Beyond DCB into the alphabet soup of congestion control

Congestion control is not something limited to RDMA or SMB Direct. Whether one uses RoCEv1 with IB, RoCEv2 with UDP/IP, iWARP with TCP/IP or non-RDMA TCP/IP traffic, congestion control is a thing. When you need it, you really need it, and there is no escaping this by hoping things will be fine.

So, in Part III, we’ll also touch very lightly on ECN, DCTCP, DCQCN, L3QCN etc. Configuring these is not a walk in the park either. I’ll show you that this is a complex area of endeavour: the concepts of RDMA and congestion control are simple, but that doesn’t mean they are easy to implement.

Again, more on this in Part III.

- SMB Direct – The State of RDMA for use with SMB 3 traffic (Part I)

- SMB Direct – The State of RDMA for use with SMB 3 traffic (Part III)