Introduction

In hyperconverged infrastructure (HCI), where compute, network, and storage performance must work hand-in-hand, the choice of storage solution plays a critical role in determining the resulting infrastructure efficiency. This is another article in our “HCI Performance Benchmarking” series, where we compare StarWind Virtual SAN (VSAN) with other well-established software-defined storage products.

This time, we want to add a twist to keep things intriguing. We won’t reveal the name of the vendor or its product, leaving you to guess it yourself! We called it “Mysterious Software-Defined Storage (SDS)” in this article’s title, and for simplicity, we will continue referring to it as “Mysterious”.

In this installment, we evaluate “Mysterious” in a 2-node HCI configuration, focusing on specific storage patterns and their impact on workload performance. In case you missed our earlier articles, the first explored TCP-based configurations of StarWind Virtual SAN and Microsoft Storage Spaces Direct (S2D), available here, and the second focused on their RDMA-based configurations, available here. These articles provide foundational context on TCP and RDMA’s influence on performance in HCI environments.

Our goal now is to examine three unique configurations: “Mysterious” over TCP and two StarWind Virtual SAN setups, using NVMe over TCP (NVMe/TCP) and RDMA (NVMe/RDMA).

To compare the solutions on equal terms, we configured a 2-node Hyperconverged Infrastructure (HCI) vSphere cluster using identical settings: full host mirroring (essentially ‘network RAID1’) combined with RAID5 for local NVMe pool protection, resulting in an overall RAID51 configuration.
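To make the RAID51 layout concrete, here is a minimal capacity sketch. It assumes the five 3.2TB NVMe drives per node listed in the testbed section, and it ignores metadata, spare, and formatting overhead; the function name and the returned fields are illustrative only.

```python
def raid51_usable_tb(drives_per_node: int, drive_tb: float, nodes: int = 2) -> dict:
    """Capacity of RAID5 inside each node, mirrored across nodes (RAID51)."""
    if drives_per_node < 3:
        raise ValueError("RAID5 needs at least three drives")
    raw_tb = nodes * drives_per_node * drive_tb
    # RAID5 spends one drive's worth of capacity per node on parity.
    per_node_tb = (drives_per_node - 1) * drive_tb
    # Mirroring keeps a full copy on every node, so the usable space
    # equals the capacity of a single node's RAID5 array.
    usable_tb = per_node_tb
    return {
        "raw_tb": raw_tb,
        "usable_tb": usable_tb,
        "efficiency": usable_tb / raw_tb,
    }


layout = raid51_usable_tb(drives_per_node=5, drive_tb=3.2)
print(f"raw {layout['raw_tb']:.1f} TB, usable {layout['usable_tb']:.1f} TB, "
      f"efficiency {layout['efficiency']:.0%}")
```

Under these assumptions, roughly 40% of the raw flash is usable; the rest pays for surviving a drive failure inside a node and the loss of an entire node.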

Through our detailed test environment and benchmarking methodology, we’ll walk through each scenario’s strengths and limitations. For further insights into similar testing on Proxmox VE, check out our related analysis comparing DRBD/Linstor, Ceph, and StarWind VSAN NVMe/TCP, available here.

Here are the configurations analyzed in this article:

“Mysterious” over TCP

StarWind Virtual SAN NVMe/RDMA

StarWind Virtual SAN NVMe/TCP

Note on “Mysterious” over RDMA: we were unable to configure an RDMA-based setup at this time, but we plan to do it sometime in the future.

Read on as we explore how each setup stacks up in terms of latency, IOPS, and overall performance across key workload patterns. Whether you’re an IT professional or a system architect, this article will help illuminate the best storage strategies for an optimized HCI setup.

Solutions overview

“Mysterious” SDS, TCP:

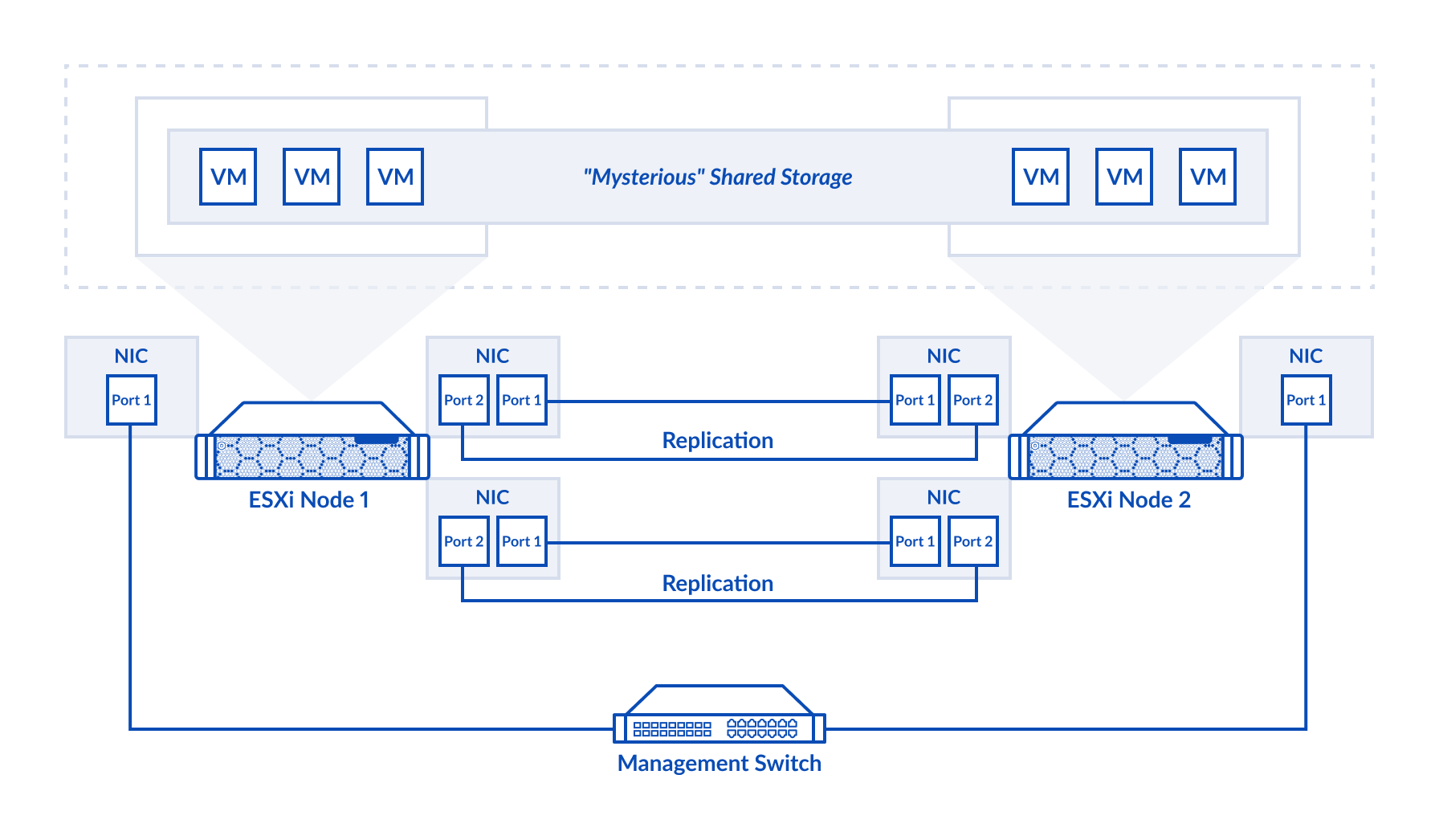

Note: The above diagram is simplified for clarity. We are not showing additional details such as the quorum witness since we are focusing purely on performance this time.

In this setup, the focus is on a 2-node HCI deployment using “Mysterious” over TCP, as shown in the diagram. Each hypervisor node is equipped with two network interface cards (NICs), each featuring two ports. These NICs handle both storage replication between the nodes and management traffic through the management virtual switch.

StarWind Virtual SAN, NVMe/RDMA

As demonstrated in the diagram, the StarWind VSAN NVMe/RDMA setup is powered by Mellanox NICs that are configured for Remote Direct Memory Access (RDMA) using SR-IOV (Single Root I/O Virtualization), which allows NIC Virtual Functions to be passed directly to the StarWind Controller Virtual Machine (CVM).

Each hypervisor node is equipped with 5 NVMe drives. These drives are passed through directly to the StarWind CVM on each node, where they are assembled into a RAID5 array.

On top of the RAID5 array, two StarWind HA devices are created. These High Availability devices are designed to replicate data across both nodes, ensuring that in the event of node failure, the system remains operational with zero downtime.

StarWind Virtual SAN, NVMe/TCP

In this scenario, StarWind VSAN is deployed using NVMe/TCP as the transport protocol. Here, the key difference lies in the network configuration. The core architecture remains the same – featuring the StarWind CVM handling NVMe devices and ensuring data redundancy through StarWind HA, but in this case, SR-IOV was not used for Mellanox NICs.

Instead of dedicating NIC virtual functions for RDMA communication, the StarWind CVM uses standard virtual network adapters provided by the hypervisor. This simplifies the setup, as you don’t need to configure SR-IOV on Mellanox NICs. It’s a familiar and straightforward design that still maintains the performance edge of NVMe storage.

Testbed overview

In this section, we’ll take a closer look at the hardware and software setup driving the performance of “Mysterious” and StarWind VSAN within the NVMe/TCP scenario. The testbed leverages Intel® Xeon® Platinum processors, 100GbE Mellanox network adapters, and Micron NVMe storage, all working together under StarWind VSAN Version 8 to push the boundaries of data throughput and efficiency.

Hardware:

| Component | Specification |
|---|---|
| Server model | Supermicro SYS-220U-TNR |
| CPU | Intel(R) Xeon(R) Platinum 8352Y @2.2GHz |
| Sockets | 2 |
| Cores/Threads | 64/128 |
| RAM | 256GB |
| NIC | 2x ConnectX®-5 EN 100GbE (MCX516A-CDAT) |
| Storage | 5x NVMe Micron 7450 MAX: U.3 3.2TB |

Software:

| Component | Version |
|---|---|
| StarWind VSAN | Version V8 (build 15260, CVM 20231016) |

StarWind CVM parameters:

| Parameter | Value |
|---|---|
| CPU | 16 vCPU |
| RAM | 16GB |
| NICs | 1x virtual network adapter for management; 4x Mellanox ConnectX-5 Virtual Function network adapter (SR-IOV) for the NVMe/RDMA scenario; 4x virtual network adapter for the NVMe/TCP scenario |
| Storage | RAID5 (5x NVMe Micron 7450 MAX: U.3 3.2TB) |

Testing methodology

To ensure accurate and reliable results, the performance benchmark was carried out using the FIO utility in a client/server mode. We created a series of virtual machines (VMs) to simulate real-world conditions, scaling the setup from 10 to 40 VMs, each configured with 4 vCPUs and 4GB of RAM. For benchmarking purposes, each VM was equipped with a single 100GB virtual disk, filled with random data ahead of the testing to reflect actual storage scenarios.

Several critical performance patterns were tested, including:

- 4K random read

- 4K random read/write 70/30

- 4K random write

- 64K random read

- 64K random write

- 1M read

- 1M write

To strike an optimal balance between performance and latency, the FIO parameters for numjobs and iodepth were carefully selected. This allowed us to simulate demanding workloads without compromising the system’s responsiveness.

Before diving into the main testing, we implemented a “warm-up” phase to ensure disks were adequately prepared. For example, the 4K random write pattern was run for 4 hours prior to testing 4K random read/write 70/30 and 4K random write benchmarks, while the 64K random write pattern was run for 2 hours ahead of its respective tests.

Each test was repeated three times to minimize variance, and the final results are based on the average of these runs. The read tests lasted for 600 seconds each, while the write tests were conducted over 1800 seconds, providing an in-depth analysis of how the infrastructure handled different types of data loads.
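The pattern list and run lengths above can be sketched as an FIO job file. The snippet below is only an illustration, not the job file used in these tests: the numjobs and iodepth values are taken from the “Mysterious” result tables, while the I/O engine, direct I/O, the target device path, and treating the mixed 70/30 pattern as a 1800-second "write" test are all assumptions.

```python
# (section name, rw mode, block size, numjobs, iodepth, extra fio options)
PATTERNS = [
    ("4k-randread", "randread", "4k", 4, 4, {}),
    ("4k-randrw-70-30", "randrw", "4k", 4, 4, {"rwmixread": 70}),
    ("4k-randwrite", "randwrite", "4k", 4, 2, {}),
    ("64k-randread", "randread", "64k", 2, 8, {}),
    ("64k-randwrite", "randwrite", "64k", 2, 8, {}),
    ("1m-read", "read", "1m", 1, 4, {}),
    ("1m-write", "write", "1m", 1, 2, {}),
]

READ_RUNTIME_S = 600    # read tests, per the methodology
WRITE_RUNTIME_S = 1800  # write tests; applied to the mixed pattern by assumption


def fio_job_file(target: str = "/dev/sdb") -> str:
    """Build job-file text; `target` is a hypothetical test disk inside the VM."""
    lines = [
        "[global]",
        "ioengine=libaio",   # assumption: Linux guest with libaio
        "direct=1",          # assumption: bypass the guest page cache
        f"filename={target}",
        "time_based=1",
        "group_reporting=1",
        "",
    ]
    for name, rw, bs, numjobs, iodepth, extra in PATTERNS:
        runtime = READ_RUNTIME_S if rw in ("randread", "read") else WRITE_RUNTIME_S
        lines += [
            f"[{name}]",
            "stonewall",     # run the sections one after another
            f"rw={rw}",
            f"bs={bs}",
            f"numjobs={numjobs}",
            f"iodepth={iodepth}",
            f"runtime={runtime}",
        ]
        lines += [f"{key}={value}" for key, value in extra.items()]
        lines.append("")
    return "\n".join(lines)


job_text = fio_job_file()
print(job_text)
```

Generating the job file from a table keeps the per-pattern queue settings in one place, which helps when the same patterns are rerun with different depths for another storage configuration.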

Tables with benchmark results

“Mysterious”, TCP

The tables highlight “Mysterious” performance over TCP as VM counts scale up from 10 to 40. At a lower VM count, “Mysterious” delivers solid IOPS for 4k random reads, starting at 650,183 IOPS for 10 VMs and climbing to 790,537 IOPS at 20 VMs. In mixed 4k read/write (70/30) workloads, IOPS also scale up smoothly, reaching 430,940 IOPS with 20 VMs.

As VM counts reach higher values, such as 30 and 40, “Mysterious” effectively manages larger I/O demands, maintaining 624,764 IOPS for 4k random reads at 30 VMs, though some latency increase is observed with mixed read/write patterns as expected with higher load. Node CPU usage for 4k random read/write workloads increases sharply from 50% at 10 VMs to a substantial 95% at 40 VMs, suggesting high CPU demand for processing intense read/write operations.

Notably, with 64k and 1024k read patterns, “Mysterious” attains up to 25,589 MiB/s and 35,933 MiB/s respectively due to reading data locally, thus bypassing the network stack. At the same time, CPU utilization reaches as high as 70% even with fewer job threads and queue depths, indicating that processing power becomes a critical resource constraint in achieving higher throughput and lower latency.

| VM count | Pattern | Numjobs | IOdepth | IOPS | MiB/s | Latency (ms) | Node CPU usage (%) |
|---|---|---|---|---|---|---|---|
| 10 | 4k random read | 4 | 4 | 650,183 | 2,539 | 0.247 | 52.00% |
| 10 | 4k random read/write (70%/30%) | 4 | 4 | 380,062 | 1,484 | 0.508 | 50.00% |
| 10 | 4k random write | 4 | 2 | 135,946 | 530 | 0.590 | 28.00% |
| 10 | 64k random read | 2 | 8 | 302,182 | 18,886 | 0.537 | 42.00% |
| 10 | 64k random write | 2 | 8 | 40,932 | 2,558 | 4.030 | 29.00% |
| 10 | 1024k read | 1 | 4 | 21,693 | 21,693 | 1.847 | 33.00% |
| 10 | 1024k write | 1 | 2 | 3,642 | 3,642 | 5.503 | 30.00% |
| 20 | 4k random read | 4 | 4 | 790,537 | 3,087 | 0.410 | 67.00% |
| 20 | 4k random read/write (70%/30%) | 4 | 4 | 430,940 | 1,683 | 0.897 | 74.00% |
| 20 | 4k random write | 4 | 2 | 167,416 | 653 | 0.960 | 43.00% |
| 20 | 64k random read | 2 | 8 | 409,428 | 25,589 | 0.813 | 57.00% |
| 20 | 64k random write | 2 | 8 | 42,614 | 2,663 | 8.210 | 37.00% |
| 20 | 1024k read | 1 | 4 | 30,836 | 30,835 | 2.893 | 51.00% |
| 20 | 1024k write | 1 | 2 | 3,653 | 3,652 | 10.990 | 47.00% |
| 30 | 4k random read | 4 | 4 | 624,764 | 2,440 | 0.840 | 73.00% |
| 30 | 4k random read/write (70%/30%) | 4 | 4 | 386,413 | 1,509 | 1.545 | 83.00% |
| 30 | 4k random write | 4 | 2 | 168,606 | 658 | 1.433 | 55.00% |
| 30 | 64k random read | 2 | 8 | 406,941 | 25,433 | 1.227 | 57.00% |
| 30 | 64k random write | 2 | 8 | 49,783 | 3,111 | 10.430 | 54.00% |
| 30 | 1024k read | 1 | 4 | 33,181 | 33,180 | 4.150 | 56.00% |
| 30 | 1024k write | 1 | 2 | 3,799 | 3,798 | 15.900 | 58.00% |
| 40 | 4k random read | 4 | 4 | 545,998 | 2,132 | 1.250 | 76.00% |
| 40 | 4k random read/write (70%/30%) | 4 | 4 | 382,059 | 1,492 | 1.982 | 95.00% |
| 40 | 4k random write | 4 | 2 | 171,808 | 670 | 1.870 | 63.00% |
| 40 | 64k random read | 2 | 8 | 357,878 | 22,367 | 2.000 | 57.00% |
| 40 | 64k random write | 2 | 8 | 46,881 | 2,929 | 13.827 | 65.00% |
| 40 | 1024k read | 1 | 4 | 35,934 | 35,933 | 4.760 | 70.00% |
| 40 | 1024k write | 1 | 2 | 3,917 | 3,916 | 20.610 | 62.00% |
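The IOPS and MiB/s columns describe the same measurement at a given block size, so one can be derived from the other. The short sketch below shows the conversion for three rows of the 10-VM “Mysterious” results; the helper name is ours, and small differences from the reported values are expected because the published figures are averages of three runs and appear to be rounded down.

```python
def iops_to_mib_s(iops: int, block_kib: int) -> float:
    """Throughput in MiB/s for a given IOPS figure and block size in KiB."""
    return iops * block_kib / 1024


# (pattern, IOPS, block size in KiB, MiB/s reported in the table)
ROWS = [
    ("4k random read", 650_183, 4, 2_539),
    ("64k random read", 302_182, 64, 18_886),
    ("1024k read", 21_693, 1024, 21_693),
]

for pattern, iops, block_kib, reported in ROWS:
    computed = iops_to_mib_s(iops, block_kib)
    print(f"{pattern}: computed {computed:,.1f} MiB/s, reported {reported:,} MiB/s")
```

This is also why the 1024k rows show nearly identical IOPS and MiB/s values: each operation moves exactly one MiB.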

StarWind VSAN NVMe over RDMA scenario

The tables showcase StarWind VSAN’s performance using NVMe-oF across various VM counts and workload patterns. For 4k random reads, IOPS reaches a peak of over 1.5 million at 40 VMs. As expected, 4k random read/write and write patterns show relatively lower performance, though they still deliver impressive IOPS and throughput, particularly at higher VM counts.

Latency remains impressively low, especially for small-block read operations. Even with increasing VM counts, latency for 4k random reads stays under 1 ms, demonstrating the high efficiency of the RDMA protocol in maintaining quick response times under load. However, larger-block operations such as 1024k reads and writes experience slightly higher latencies, with 1024k write latency reaching over 9 ms at 40 VMs.

CPU utilization scales predictably with VM count and workload intensity, reaching a peak of 60% for 4k random read at 40 VMs, showing a balanced resource usage.

| VM count | Pattern | Numjobs | IOdepth | IOPS | MiB/s | Latency (ms) | Node CPU usage (%) |
|---|---|---|---|---|---|---|---|
| 10 | 4k random read | 4 | 4 | 1,030,832 | 4,026 | 0.155 | 41.00% |
| 10 | 4k random read/write (70%/30%) | 4 | 4 | 554,083 | 2,164 | 0.358 | 32.00% |
| 10 | 4k random write | 4 | 2 | 171,289 | 669 | 0.470 | 21.00% |
| 10 | 64k random read | 2 | 4 | 225,770 | 14,110 | 0.350 | 21.00% |
| 10 | 64k random write | 2 | 2 | 57,774 | 3,611 | 0.690 | 18.00% |
| 10 | 1024k read | 1 | 2 | 15,199 | 15,199 | 1.320 | 15.00% |
| 10 | 1024k write | 1 | 1 | 4,173 | 4,173 | 2.400 | 17.00% |
| 20 | 4k random read | 4 | 4 | 1,473,245 | 5,754 | 0.215 | 53.00% |
| 20 | 4k random read/write (70%/30%) | 4 | 4 | 730,326 | 2,852 | 0.544 | 39.00% |
| 20 | 4k random write | 4 | 2 | 255,798 | 998 | 0.625 | 25.00% |
| 20 | 64k random read | 2 | 4 | 270,478 | 16,904 | 0.593 | 23.00% |
| 20 | 64k random write | 2 | 2 | 67,422 | 4,214 | 1.185 | 19.00% |
| 20 | 1024k read | 1 | 2 | 17,186 | 17,186 | 2.333 | 16.00% |
| 20 | 1024k write | 1 | 1 | 4,380 | 4,380 | 4.563 | 17.00% |
| 30 | 4k random read | 4 | 4 | 1,559,821 | 6,092 | 0.305 | 57.00% |
| 30 | 4k random read/write (70%/30%) | 4 | 4 | 795,173 | 3,105 | 0.741 | 42.00% |
| 30 | 4k random write | 4 | 2 | 301,524 | 1,177 | 0.795 | 27.00% |
| 30 | 64k random read | 2 | 4 | 277,462 | 17,340 | 0.865 | 24.00% |
| 30 | 64k random write | 2 | 2 | 71,981 | 4,498 | 1.665 | 20.00% |
| 30 | 1024k read | 1 | 2 | 17,421 | 17,421 | 3.450 | 16.00% |
| 30 | 1024k write | 1 | 1 | 4,372 | 4,372 | 6.858 | 18.00% |
| 40 | 4k random read | 4 | 4 | 1,568,856 | 6,127 | 0.408 | 60.00% |
| 40 | 4k random read/write (70%/30%) | 4 | 4 | 838,880 | 3,276 | 0.933 | 46.00% |
| 40 | 4k random write | 4 | 2 | 327,063 | 1,276 | 0.978 | 30.00% |
| 40 | 64k random read | 2 | 4 | 276,578 | 17,285 | 1.158 | 24.00% |
| 40 | 64k random write | 2 | 2 | 74,322 | 4,644 | 2.153 | 21.00% |
| 40 | 1024k read | 1 | 2 | 17,380 | 17,380 | 4.610 | 17.00% |
| 40 | 1024k write | 1 | 1 | 4,423 | 4,423 | 9.040 | 18.00% |

StarWind VSAN NVMe over TCP scenario

The tables illustrate StarWind VSAN’s performance using NVMe over TCP under varying VM counts and workload configurations. For 4k random read tasks, StarWind VSAN exhibits robust results, achieving 635,695 IOPS at 10 VMs and rising to 974,973 IOPS at 20 VMs. In mixed 4k read/write (70/30) patterns, the IOPS scale smoothly, reaching 513,804 at 20 VMs, highlighting StarWind VSAN’s capability in managing diverse I/O operations.

As VM counts increase, StarWind VSAN consistently delivers strong throughput for larger data blocks, reaching 15,216 MiB/s for 1024k reads at 20 VMs and 16,687 MiB/s at 40 VMs. Additionally, for 64k random read, StarWind VSAN NVMe/TCP sustains 14,000 MiB/s at 20 VMs and climbs to 15,737 MiB/s at 40 VMs, reflecting its efficiency under larger data transfers even at higher VM densities.

Latency generally rises with the VM count, reflecting the increased load. For instance, the latency for 4k random read/write (70%/30%) grows from 0.905 ms at 10 VMs to 2.42 ms at 40 VMs, suggesting the impact of increased read/write operations on response time. Notably, 1024k write operations experience the steepest latency increase, reaching up to 13.405 ms with 40 VMs.

CPU utilization increases in line with the VM count and workload intensity, peaking at 64% with 40 VMs under 4k random read patterns. The higher CPU usage during read-intensive tasks, especially with small block sizes like 4k, demonstrates the system’s efficiency in processing high-throughput read requests. Conversely, CPU usage remains lower in write operations with larger block sizes, reflecting the different processing demands for these tasks.

| VM count | Pattern | Numjobs | IOdepth | IOPS | MiB/s | Latency (ms) | Node CPU usage (%) |
|---|---|---|---|---|---|---|---|
| 10 | 4k random read | 4 | 8 | 635,695 | 2,483 | 0.505 | 36.00% |
| 10 | 4k random read/write (70%/30%) | 4 | 8 | 414,061 | 1,617 | 0.905 | 32.00% |
| 10 | 4k random write | 4 | 4 | 148,238 | 579 | 1.080 | 24.00% |
| 10 | 64k random read | 2 | 8 | 147,698 | 9,231 | 1.090 | 30.00% |
| 10 | 64k random write | 2 | 2 | 30,683 | 1,917 | 1.300 | 20.00% |
| 10 | 1024k read | 1 | 2 | 9,358 | 9,358 | 2.140 | 25.00% |
| 10 | 1024k write | 1 | 1 | 2,423 | 2,423 | 4.130 | 20.00% |
| 20 | 4k random read | 4 | 8 | 974,973 | 3,808 | 0.660 | 51.00% |
| 20 | 4k random read/write (70%/30%) | 4 | 8 | 513,804 | 2,007 | 1.463 | 29.00% |
| 20 | 4k random write | 4 | 4 | 197,670 | 772 | 1.620 | 28.00% |
| 20 | 64k random read | 2 | 8 | 224,001 | 14,000 | 1.430 | 40.00% |
| 20 | 64k random write | 2 | 2 | 37,243 | 2,327 | 2.150 | 22.00% |
| 20 | 1024k read | 1 | 2 | 15,217 | 15,216 | 2.630 | 33.00% |
| 20 | 1024k write | 1 | 1 | 2,835 | 2,835 | 7.140 | 21.00% |
| 30 | 4k random read | 4 | 8 | 1,177,015 | 4,598 | 0.820 | 58.00% |
| 30 | 4k random read/write (70%/30%) | 4 | 8 | 577,997 | 2,258 | 1.940 | 42.00% |
| 30 | 4k random write | 4 | 4 | 229,052 | 894 | 2.098 | 31.00% |
| 30 | 64k random read | 2 | 8 | 242,938 | 15,183 | 1.980 | 42.00% |
| 30 | 64k random write | 2 | 2 | 38,862 | 2,429 | 3.090 | 23.00% |
| 30 | 1024k read | 1 | 2 | 16,359 | 16,359 | 3.670 | 35.00% |
| 30 | 1024k write | 1 | 1 | 3,052 | 3,052 | 9.995 | 22.00% |
| 40 | 4k random read | 4 | 8 | 1,285,263 | 5,020 | 1.000 | 64.00% |
| 40 | 4k random read/write (70%/30%) | 4 | 8 | 608,703 | 2,377 | 2.420 | 44.00% |
| 40 | 4k random write | 4 | 4 | 256,770 | 1,003 | 2.490 | 33.00% |
| 40 | 64k random read | 2 | 8 | 251,799 | 15,737 | 2.545 | 44.00% |
| 40 | 64k random write | 2 | 2 | 40,296 | 2,518 | 3.980 | 23.00% |
| 40 | 1024k read | 1 | 2 | 16,687 | 16,687 | 4.795 | 35.00% |
| 40 | 1024k write | 1 | 1 | 3,067 | 3,067 | 13.405 | 22.00% |

Benchmarking results in graphs

In this section, we’ll delve into a comprehensive analysis of the benchmark results for each test scenario, where various storage solutions, protocols, and metrics such as Input/Output Operations Per Second (IOPS), latency, throughput, and CPU usage were evaluated. Each figure provides insight into how well each storage solution handles different workloads under various VM counts, offering a nuanced view of performance, efficiency, and scalability.

Random Read 4k:

Let’s start with the 4K random read test, where Figure 1 demonstrates the performance in IOPS.

With a configuration of numjobs=4 and an I/O depth of 4 (8 for StarWind VSAN NVMe/TCP, per the tables above), we see StarWind VSAN NVMe/RDMA takes the lead, handling up to 1,030,832 IOPS with 10 VMs, a figure that rises to 1,568,856 IOPS as the VM count reaches 40.

In comparison, “Mysterious” performs at 650,183 IOPS with 10 VMs, trailing behind StarWind NVMe/RDMA by approximately 37%. StarWind VSAN NVMe/TCP maintains close competitiveness with 635,695 IOPS at 10 VMs, although it remains slightly behind “Mysterious”.

As the workload scales to 40 VMs, StarWind NVMe/RDMA retains a performance lead, highlighting its consistent capability to manage high IOPS requirements using RDMA transport.

Latency directly affects system responsiveness. In Figure 2, the 4K random read test results highlight the performance differences among the storage configurations as the queue depth and VM count increase. Across the board, latency increases with a higher VM load, but each solution handles this differently.

StarWind VSAN NVMe/RDMA delivers exceptional performance, starting at just 0.155 ms with 10 VMs and gradually increasing to only 0.408 ms at 40 VMs. This highlights RDMA’s ability to maintain responsiveness even under heavy workloads, demonstrating a 67% latency advantage over “Mysterious” at maximum load.

Meanwhile, “Mysterious” starts with a latency of 0.247 ms at 10 VMs, which gradually rises to 1.250 ms at 40 VMs — a fivefold increase.

StarWind VSAN NVMe/TCP sits between these two, beginning with a higher latency of 0.505 ms with 10 VMs, roughly matching “Mysterious” at 30 VMs (0.820 ms versus 0.840 ms), and outperforming it at 40 VMs, where it rises to only 1 ms compared to 1.250 ms for “Mysterious”. This solution balances moderate latency growth with increasing VM counts.
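A quick way to sanity-check these latency figures is Little's law: in a closed-loop test, average latency is roughly the number of outstanding I/Os divided by IOPS. The sketch below applies it to the 4k random read rows from the tables, assuming every VM keeps numjobs × iodepth requests in flight for the whole run; the function name is ours and the estimate is an approximation, not part of the original methodology.

```python
def expected_latency_ms(vms: int, numjobs: int, iodepth: int, iops: int) -> float:
    """Little's law estimate: outstanding I/Os divided by throughput."""
    outstanding = vms * numjobs * iodepth
    return outstanding / iops * 1000.0


# (configuration, VMs, numjobs, iodepth, IOPS, reported latency in ms)
SAMPLES = [
    ("StarWind NVMe/RDMA", 10, 4, 4, 1_030_832, 0.155),
    ("Mysterious TCP", 10, 4, 4, 650_183, 0.247),
    ("StarWind NVMe/TCP", 10, 4, 8, 635_695, 0.505),
    ("StarWind NVMe/RDMA", 40, 4, 4, 1_568_856, 0.408),
    ("Mysterious TCP", 40, 4, 4, 545_998, 1.250),
    ("StarWind NVMe/TCP", 40, 4, 8, 1_285_263, 1.000),
]

for name, vms, numjobs, iodepth, iops, reported in SAMPLES:
    estimate = expected_latency_ms(vms, numjobs, iodepth, iops)
    print(f"{name:20s} {vms:2d} VMs: estimated {estimate:.3f} ms, reported {reported:.3f} ms")
```

The estimates land within a few percent of the reported values. They also suggest that part of the higher starting latency of StarWind VSAN NVMe/TCP comes from its deeper queue (I/O depth 8 versus 4): at 10 VMs it delivers nearly the same IOPS as “Mysterious” with twice as many requests in flight.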

Efficient CPU usage is foundational for scalable, high-performing virtualization, and Figure 3 presents a clear comparison of node CPU consumption across storage configurations under increasing workloads.

StarWind VSAN NVMe/RDMA emerges as a leader in efficiency, showcasing a CPU usage of just 60% at 40 VMs, allowing it to handle demanding read operations without significant resource strain. This low CPU footprint illustrates RDMA’s capability to offload processing demands effectively.

On the other hand, “Mysterious” demonstrates greater resource demand, reaching up to 76% CPU usage with 40 VMs, which represents a 27% increase in resource consumption compared to StarWind VSAN NVMe/RDMA at the same workload.

StarWind VSAN NVMe/TCP balances CPU demands, capping usage at 64% with 40 VMs, which is approximately 7% higher than StarWind over RDMA but about 16% lower than “Mysterious”.
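CPU usage is easier to compare when it is normalized by the work delivered. The sketch below computes IOPS per percentage point of node CPU for the 4k random read results at 40 VMs. This ratio is a derived metric of our own, not one reported in the benchmark, and it assumes the node CPU figure captures the cost of serving the I/O (it may also include the load generated by the test VMs themselves).

```python
# 4k random read at 40 VMs: (IOPS, node CPU usage in percent) from the tables
RESULTS_40_VMS = {
    "StarWind NVMe/RDMA": (1_568_856, 60.0),
    "StarWind NVMe/TCP": (1_285_263, 64.0),
    "Mysterious TCP": (545_998, 76.0),
}


def iops_per_cpu_point(iops: int, cpu_percent: float) -> float:
    """IOPS delivered per percentage point of node CPU usage."""
    return iops / cpu_percent


efficiency = {
    name: iops_per_cpu_point(iops, cpu)
    for name, (iops, cpu) in RESULTS_40_VMS.items()
}

# Print from most to least efficient.
for name, value in sorted(efficiency.items(), key=lambda item: item[1], reverse=True):
    print(f"{name:20s} {value:10,.0f} IOPS per CPU %")
```

Seen this way, the gap is wider than the raw CPU percentages suggest, because “Mysterious” uses more CPU while delivering fewer IOPS at this VM count.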

Random Read/Write (70%/30%) 4k:

Figure 4 delves into the mixed 4K random read/write 70%/30% workload. It’s essential for assessing storage performance in real-world virtualized environments. A high-performing storage solution must handle this mixed workload efficiently, as it represents the backbone of many daily operations.

In this test, StarWind VSAN NVMe/RDMA demonstrated a commanding lead, achieving 554,083 IOPS at 10 VMs and scaling to 838,880 IOPS at 40 VMs.

In contrast, “Mysterious” showed lower performance, starting at 380,062 IOPS at 10 VMs, peaking at 430,940 IOPS at 20 VMs, and dropping to 382,059 IOPS at 40 VMs, marking a substantial 55% gap compared to StarWind NVMe/RDMA at the highest VM count. Interestingly, “Mysterious” exhibited a performance decline starting at 30 VMs, underscoring scalability challenges in handling intensive mixed workloads.

StarWind VSAN NVMe/TCP, while not matching RDMA performance levels, maintained consistent performance as VM counts increased, peaking at 608,703 IOPS at 40 VMs — nearly 60% faster than “Mysterious” in the same test.
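The comparisons in this article switch between "X% lower" and "Y% faster", which use different baselines and are not interchangeable. The sketch below shows both calculations on the 40-VM mixed-workload IOPS from the tables; the helper names are ours.

```python
def pct_lower(value: float, reference: float) -> float:
    """How far `value` falls below `reference`, as a percentage of `reference`."""
    return (reference - value) / reference * 100.0


def pct_higher(value: float, reference: float) -> float:
    """How far `value` exceeds `reference`, as a percentage of `reference`."""
    return (value - reference) / reference * 100.0


# 4k random read/write (70%/30%) IOPS at 40 VMs
RDMA_IOPS = 838_880
TCP_IOPS = 608_703
MYSTERIOUS_IOPS = 382_059

print(f"Mysterious vs RDMA: {pct_lower(MYSTERIOUS_IOPS, RDMA_IOPS):.1f}% lower")
print(f"RDMA vs Mysterious: {pct_higher(RDMA_IOPS, MYSTERIOUS_IOPS):.1f}% higher")
print(f"TCP vs Mysterious:  {pct_higher(TCP_IOPS, MYSTERIOUS_IOPS):.1f}% higher")
```

The same pair of numbers yields about 54.5% when “Mysterious” is measured against RDMA and about 119.6% when RDMA is measured against “Mysterious”, so it matters which solution serves as the reference in each statement.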

Figure 5 addresses latency for the 4K random read/write mix.

Predictably, StarWind VSAN NVMe over RDMA continues to impress with latency as low as 0.358 ms with 10 VMs, which modestly increases to 0.933 ms at 40 VMs. This gradual and controlled increase in latency demonstrates the robustness of RDMA in handling high-VM densities without significant impact on response times, which is critical for maintaining a responsive environment as workloads scale.

Meanwhile, “Mysterious”, although unable to match RDMA, still delivers remarkable results at a 10-VM workload, starting with a comparatively low latency of 0.508 ms. However, as VM density grows, latency gradually increases, reaching 1.982 ms at 40 VMs.

StarWind VSAN NVMe/TCP exhibits a higher latency compared to the competition, starting at 0.905 ms and rising to 2.420 ms at the maximum VM count.

Figure 6 offers insights into CPU usage under the demanding 4K random read/write (70%/30%) mixed workload scenario.

“Mysterious” exhibits the highest CPU utilization across configurations, indicating substantial processing demands under a mixed read/write scenario. Beginning with a 50% CPU load at 10 VMs, it continues to scale steeply with the workload, peaking at an intense 95% CPU usage at 40 VMs. This is more than double the CPU load of StarWind VSAN at the maximum VM count in both the RDMA (46%) and TCP (44%) configurations.

StarWind VSAN NVMe/RDMA demonstrates much better CPU efficiency. It starts with only 32% CPU usage at 10 VMs and reaches a modest peak of 46% at 40 VMs. This efficient scaling demonstrates RDMA’s ability to support heavy workloads with significantly less resource strain, consuming 52% less CPU than “Mysterious” at a peak load.

StarWind VSAN NVMe/TCP also maintains a balanced CPU profile. Starting at 32% CPU usage with 10 VMs, it closely mirrors RDMA’s efficiency, peaking at a modest 44% at the highest workload. This balance, 54% lower than “Mysterious” at 40 VMs, indicates that StarWind VSAN NVMe/TCP can manage mixed workloads effectively without placing excessive strain on the CPU.

Random Write 4k:

When it comes to 4K random write performance, StarWind VSAN NVMe/RDMA continues to demonstrate impressive results, particularly at low queue depth (QD=2; StarWind VSAN NVMe/TCP was run at QD=4, per the tables above) with four concurrent jobs (numjobs=4). As seen in Figure 7, StarWind VSAN NVMe/RDMA pushes through to a peak of 327,063 IOPS with a load of 40 VMs, setting the bar for performance in write-intensive scenarios.

Meanwhile, “Mysterious” struggles to keep up, peaking at 171,808 IOPS under the same conditions – a considerable 48% lower than StarWind VSAN NVMe/RDMA.

StarWind VSAN NVMe/TCP lands between the two, peaking at 256,770 IOPS. This balanced performance presents it as a solid middle ground.

In Figure 8, latency metrics for 4K random writes reveal StarWind VSAN NVMe/RDMA’s stability, maintaining latency under 1 ms even with higher VM counts.

“Mysterious” begins with remarkably low latency at 0.590 ms with 10 VMs but escalates to 1.870 ms with 40 VMs, indicating lower efficiency under heavier loads. Compared to StarWind NVMe/RDMA, which stays consistently below 1 ms (0.978 ms at 40 VMs), the latency of “Mysterious” is about 91% higher at 40 VMs.

Meanwhile, StarWind VSAN NVMe/TCP starts at 1.080 ms with 10 VMs, already higher than both StarWind NVMe/RDMA and “Mysterious”. As the VM count grows to 40, latency rises to 2.490 ms, marking a 131% increase from its initial latency.

In Figure 9, CPU usage during the 4K random write test uncovers notable differences in efficiency between the storage solutions, with StarWind VSAN NVMe/RDMA emerging as the leader in resource efficiency.

StarWind VSAN NVMe/RDMA starts with a modest 21% CPU usage at 10 VMs, gradually increasing to 30% at 40 VMs. This controlled increase, just 9 percentage points across all VM counts, illustrates RDMA’s ability to handle write-heavy loads with minimal impact on CPU resources, a valuable trait in performance-driven environments.

“Mysterious” paints a different picture. Starting with 28% CPU usage at 10 VMs, it climbs sharply to 63% by 40 VMs. This represents a 125% increase in CPU demand, indicating that “Mysterious” becomes progressively more CPU-intensive under heavier loads. In direct comparison with StarWind NVMe/RDMA at 40 VMs, “Mysterious” requires 110% more CPU resources, making RDMA the clear winner in terms of resource efficiency.

StarWind VSAN NVMe/TCP strikes a middle ground in this comparison, starting at 24% CPU usage with 10 VMs and capping out at 33% with 40 VMs. With a 38% increase in CPU usage, it maintains a steady performance curve, particularly when compared to the significant CPU consumption of “Mysterious”. At peak, StarWind VSAN NVMe/TCP consumes only 3 percentage points (10% in relative terms) more CPU than RDMA while remaining a substantial 48% lower than “Mysterious”, highlighting its balanced CPU efficiency.

Random Read 64k:

Figure 10 illustrates throughput results for the 64K random read test with numjobs=2 and an I/O depth of 8 (4 for StarWind VSAN NVMe/RDMA, per the tables above).

“Mysterious” achieves the highest throughput, peaking at 25,589 MiB/s with 20 VMs and maintaining 25,433 MiB/s with 30 VMs. However, throughput slightly declines to 22,367 MiB/s at 40 VMs. This performance advantage is primarily driven by the local read optimization in “Mysterious”, which bypasses network processing, effectively boosting throughput.

StarWind VSAN NVMe/RDMA, while trailing in this case, achieves a robust 17,340 MiB/s at 30 VMs and 17,285 MiB/s at 40 VMs. While RDMA’s throughput is 32% lower than “Mysterious” at peak, its stability under load reflects RDMA’s capacity for reliable performance under demanding workloads.

StarWind VSAN NVMe/TCP starts about 50% slower than “Mysterious” at 9,231 MiB/s and reaches its maximum of 15,737 MiB/s at 40 VMs. Despite being 38.5% lower than “Mysterious” at peak and 9.2% below RDMA’s highest throughput, StarWind VSAN NVMe/TCP maintains consistent performance without significant drop-offs as VM counts increase.

The latency analysis in Figure 11 reveals that StarWind VSAN NVMe/RDMA effectively maintains low latency, even as VM counts increase. It begins at 0.350 ms with 10 VMs, escalating to 1.158 ms at 40 VMs. This steady and lower latency showcases RDMA’s efficiency in handling 64K random read requests with minimal delay.

While having higher throughput in this pattern due to local reading, “Mysterious” exhibits a similar latency growth pattern as in previous tests. Starting at 0.537 ms with 10 VMs, it increases up to 2 ms at 40 VMs, a 272% increase from its baseline.

StarWind VSAN NVMe/TCP experiences the highest latency among the solutions, beginning at 1.090 ms with 10 VMs and peaking at 2.545 ms with 40 VMs. Compared to StarWind NVMe/RDMA, StarWind VSAN NVMe/TCP shows 120% higher latency at maximum VM counts and is 27% slower than “Mysterious” under the same conditions.

Figure 12 presents CPU usage for the 64K random read test.

Here, StarWind VSAN NVMe/RDMA demonstrates exceptional efficiency in CPU usage, staying within 21-24% across all VM counts, including peak loads. This consistency, where CPU usage increases only minimally with higher VM demands, highlights RDMA’s optimized resource utilization.

“Mysterious”, however, shows a substantial escalation in CPU consumption as the number of VMs increases. Starting at 42%, CPU usage climbs to 57% by 20 VMs and remains at this peak level even at 40 VMs. This represents approximately 138% higher CPU consumption compared to StarWind NVMe/RDMA at maximum load (57% versus 24%), indicating a significantly higher strain on system resources as VM density increases.

StarWind VSAN NVMe/TCP offers a middle ground, beginning at 30% CPU usage with 10 VMs and gradually reaching 44% at 40 VMs. While 83% higher than StarWind NVMe/RDMA at maximum VMs, it is still 23% more efficient in CPU consumption than “Mysterious”.

Random Write 64k:

Now, let’s move on to the 64K random write throughput analysis in Figure 13, where each solution’s performance is measured in MiB/s with numjobs=2. Per the tables above, “Mysterious” was run with IOdepth=8, while both StarWind VSAN configurations were run with IOdepth=2.

StarWind VSAN NVMe/RDMA takes the lead here, delivering the highest throughput across the tested VMs. Starting at 3,611 MiB/s with 10 VMs, RDMA’s throughput continues to scale efficiently, reaching 4,644 MiB/s at 40 VMs. This result illustrates RDMA’s impressive ability to handle larger block sizes and heavy random write operations, consistently outperforming the other solutions.

Comparatively, “Mysterious” offers good throughput values, though at a lower scale. Beginning at 2,558 MiB/s with 10 VMs, it reaches its peak throughput of 3,111 MiB/s at 30 VMs before slightly decreasing to 2,929 MiB/s at 40 VMs.

Meanwhile, StarWind VSAN NVMe/TCP shows lower throughput levels, peaking at 2,518 MiB/s at 40 VMs, suggesting potential limitations when processing larger data blocks.

Latency is important for evaluating how well storage systems manage delays during intensive write operations.

Figure 14 breaks down the latency for 64K random writes, showing that RDMA again has an advantage, with its latency increasing modestly as VM count rises. Starting at 0.690 ms with 10 VMs, RDMA’s latency extends to 2.153 ms at 40 VMs, maintaining a relatively low delay despite the workload.

StarWind VSAN NVMe/TCP, however, sees a steeper increase in latency, beginning at 1.300 ms with 10 VMs and reaching 3.980 ms at 40 VMs.

“Mysterious”, in comparison, faces the highest latency levels, suggesting a greater challenge in efficiently managing the 64K random write pattern. Starting at 4.030 ms with 10 VMs, its latency climbs to 13.827 ms at 40 VMs, more than six times the latency recorded with RDMA at the same VM count.

Turning our attention to CPU usage, as illustrated in Figure 15, we see RDMA maintaining its efficiency with CPU load ranging from 18% at 10 VMs to 21% at 40 VMs.

StarWind VSAN NVMe/TCP operates within a moderate range, with CPU usage rising from 20% to 23% as VM counts increase. While 10% higher than RDMA at peak loads, it still shows effective resource management.

In contrast, “Mysterious” exhibits a steep increase in CPU load as VM counts rise. Starting at 29% with 10 VMs, CPU usage escalates sharply to 65% at 40 VMs. This 125% rise in CPU demand from the initial to the maximum VM count translates to a 209% higher load than StarWind NVMe/RDMA at 40 VMs and 182% higher than StarWind VSAN NVMe over TCP.
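
The relative figures in this comparison can be reproduced with a few lines of arithmetic. The snippet below is a minimal sketch that uses only the CPU percentages quoted above; the helper name `percent_higher` is illustrative and not part of any benchmarking tool.

```python
def percent_higher(value: float, baseline: float) -> float:
    """Return how much higher `value` is than `baseline`, in percent."""
    return (value / baseline - 1.0) * 100.0


# CPU usage (%) for 64K random writes, as quoted in the text.
mysterious_10_vms = 29
cpu_40_vms = {"rdma": 21, "tcp": 23, "mysterious": 65}

# Growth of "Mysterious" CPU load from 10 to 40 VMs.
growth = percent_higher(cpu_40_vms["mysterious"], mysterious_10_vms)
# "Mysterious" compared with each StarWind configuration at 40 VMs.
vs_rdma = percent_higher(cpu_40_vms["mysterious"], cpu_40_vms["rdma"])
vs_tcp = percent_higher(cpu_40_vms["mysterious"], cpu_40_vms["tcp"])
# StarWind NVMe/TCP compared with StarWind NVMe/RDMA at 40 VMs.
tcp_vs_rdma = percent_higher(cpu_40_vms["tcp"], cpu_40_vms["rdma"])

print(f"Mysterious growth 10 -> 40 VMs: {growth:.1f}%")
print(f"Mysterious vs RDMA at 40 VMs:   {vs_rdma:.1f}%")
print(f"Mysterious vs TCP at 40 VMs:    {vs_tcp:.1f}%")
print(f"TCP vs RDMA at 40 VMs:          {tcp_vs_rdma:.1f}%")
```

With these inputs the results come out at roughly 124%, 209.5%, 182.6%, and 9.5%, in line with the percentages quoted in the text.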

1M Read:

Moving to sequential 1M read operations, Figure 16 showcases the throughput performance with numjobs=1 and IOdepth=4.

“Mysterious” stands out in sequential reads, peaking at a remarkable 35,933 MiB/s with 40 VMs. This result is roughly 107% higher than StarWind VSAN NVMe/RDMA at the same VM count (more than double), underscoring the edge “Mysterious” gains when local data reads bypass the network stack. By reading locally stored data directly, “Mysterious” maximizes throughput in sequential read tasks.

Meanwhile, StarWind VSAN NVMe/RDMA reaches 17,421 MiB/s at 30 VMs and achieves 17,380 MiB/s at 40 VMs, maintaining consistent throughput under increased loads. However, with a 52% lower throughput than “Mysterious” at the 40 VM mark, StarWind NVMe/RDMA’s results highlight the limitations imposed by the longer data path compared to “Mysterious”.

StarWind VSAN NVMe/TCP exhibits the lowest peak throughput of the three, achieving 16,687 MiB/s at 40 VMs, which is about 54% lower than “Mysterious” and 4% behind StarWind NVMe/RDMA.
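
Because "X% higher" and "X% lower" use different baselines, the same pair of numbers produces two different percentages. The following sketch works through the 40-VM throughput figures quoted above; the function names are illustrative only.

```python
def percent_higher(value: float, baseline: float) -> float:
    """How much higher `value` is than `baseline`, in percent."""
    return (value / baseline - 1.0) * 100.0


def percent_lower(value: float, baseline: float) -> float:
    """How much lower `value` is than `baseline`, in percent."""
    return (1.0 - value / baseline) * 100.0


# 1M sequential read throughput at 40 VMs (MiB/s), as quoted in the text.
throughput = {"mysterious": 35_933, "rdma": 17_380, "tcp": 16_687}

# The same gap, expressed against each of the two possible baselines.
mysterious_over_rdma = percent_higher(throughput["mysterious"], throughput["rdma"])
rdma_below_mysterious = percent_lower(throughput["rdma"], throughput["mysterious"])

tcp_below_mysterious = percent_lower(throughput["tcp"], throughput["mysterious"])
tcp_below_rdma = percent_lower(throughput["tcp"], throughput["rdma"])

print(f"Mysterious is {mysterious_over_rdma:.1f}% higher than RDMA")
print(f"RDMA is {rdma_below_mysterious:.1f}% lower than Mysterious")
print(f"TCP is {tcp_below_mysterious:.1f}% lower than Mysterious")
print(f"TCP is {tcp_below_rdma:.1f}% lower than RDMA")
```

Run against these figures, RDMA is about 52% lower than “Mysterious”, which is the same gap as “Mysterious” being about 107% higher than RDMA.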

Despite the previous throughput numbers, the latency for 1M reads, as observed in Figure 17, once again demonstrates the advantage of using RDMA in StarWind VSAN, which starts at 1.320 ms with 10 VMs and reaches 4.610 ms with 40 VMs.

StarWind VSAN NVMe/TCP, however, encounters higher latency that begins at 2.140 ms at 10 VMs and climbs to 4.795 ms at 40 VMs, showing a slight lag.

“Mysterious” achieves notable throughput gains but sees a latency climb, starting at 2.450 ms with 10 VMs and reaching 4.760 ms at 40 VMs. This result places it 3% behind StarWind’s NVMe over RDMA and nearly on par with NVMe/TCP at peak load.

In Figure 18, we see the CPU usage results for 1M read operations.

StarWind VSAN NVMe/RDMA again proves efficient, peaking at only 17% CPU utilization. StarWind VSAN NVMe/TCP shows a moderate increase, ranging from 25% to 35% as VM counts grow. Although its 35% peak is roughly double RDMA’s 17% (about 106% higher), StarWind over TCP still delivers commendable CPU efficiency.

In contrast, “Mysterious” shows a steep increase in CPU usage as VM counts rise, highlighting a potential trade-off for its high throughput. Starting at 33% CPU usage with 10 VMs, its resource consumption escalates to 70% at 40 VMs, roughly 312% higher than RDMA and 100% higher than StarWind over TCP. This stark contrast suggests that while “Mysterious” achieves exceptional sequential read throughput, it does so at a significantly higher CPU cost.
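
One way to put a number on this trade-off is to divide throughput by CPU usage. The sketch below is illustrative only: "MiB/s per CPU percentage point" is not a metric reported by the tests, and it assumes that the 17% (RDMA) and 35% (TCP) peaks quoted above were recorded at 40 VMs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    name: str
    throughput_mib_s: float  # 1M sequential read throughput at 40 VMs
    cpu_percent: float       # CPU usage, assumed to be the 40-VM value

    @property
    def mib_s_per_cpu_point(self) -> float:
        """Throughput delivered per percentage point of CPU usage."""
        return self.throughput_mib_s / self.cpu_percent


results = [
    Result("Mysterious", 35_933, 70),
    Result("StarWind VSAN NVMe/RDMA", 17_380, 17),
    Result("StarWind VSAN NVMe/TCP", 16_687, 35),
]

# Rank configurations from most to least throughput per CPU point.
ranked = sorted(results, key=lambda r: r.mib_s_per_cpu_point, reverse=True)
for r in ranked:
    print(f"{r.name}: {r.mib_s_per_cpu_point:.0f} MiB/s per CPU point")
```

Under these assumptions, RDMA delivers roughly twice as much throughput per CPU point as either TCP-based configuration, even though “Mysterious” has the highest absolute throughput.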

1M Write:

In Figure 19, we turn to 1M sequential write throughput with numjobs=1 and IOdepth=2.

StarWind VSAN NVMe/RDMA stands out, hitting the top throughput of 4,423 MiB/s at 40 VMs.

“Mysterious” follows, delivering 3,916 MiB/s at the same VM count, approximately 12% lower than StarWind VSAN NVMe/RDMA.

At the other end, StarWind VSAN NVMe/TCP reaches 3,067 MiB/s at its peak, around 31% lower than RDMA and 22% below “Mysterious”. This gap underscores RDMA’s efficiency in leveraging network infrastructure for write-heavy workloads, particularly under the increased pressure of 40 VMs. As a result, RDMA not only leads in throughput but demonstrates optimal performance for operations requiring both speed and resource efficiency.

Latency results for 1M sequential writes, as shown in Figure 20, confirm RDMA’s efficiency in managing delay even under high VM counts.

StarWind VSAN NVMe/RDMA starts at 2.400 ms latency with 10 VMs, rising to 9.040 ms as VM counts reach 40. StarWind VSAN NVMe/TCP shows higher latency, starting at 4.130 ms with 10 VMs and reaching 13.405 ms at 40 VMs, which is about 48% higher than RDMA at peak load.

“Mysterious” faces the most substantial latency increase, beginning at 5.503 ms with 10 VMs and escalating to 20.610 ms with 40 VMs. At this level, “Mysterious” latency is approximately 54% higher than StarWind TCP and 128% higher than RDMA under maximum load.

Finally, Figure 21 focuses on CPU usage during 1M sequential write operations.

StarWind VSAN NVMe/RDMA leads the pack with impressive CPU efficiency, maintaining a low, stable 18% CPU usage even under heavy loads at 30 and 40 VMs. This consistency underscores RDMA’s optimized design for sequential write tasks, keeping CPU demand minimal and predictable across high VM densities.

StarWind VSAN NVMe/TCP also manages CPU usage effectively, registering 22% CPU usage at both 30 and 40 VMs, which positions it as a resource-friendly solution for larger sequential write operations. This shows StarWind TCP’s ability to balance workload demands without straining the CPU.

In contrast, “Mysterious” exhibits a more intensive CPU demand, beginning at 30% with 10 VMs and climbing sharply to 62% at 40 VMs. At that load, this is roughly 244% higher than RDMA’s 18% and approximately 182% higher than StarWind TCP’s 22%, indicating a notable increase in resource consumption. The sharp rise in “Mysterious” CPU usage suggests a design that, while delivering performance, requires significantly more resources to handle heavy sequential write workloads.

Additional benchmarking: 1 VM, numjobs=1, IOdepth=1

To gain a deeper understanding of how StarWind Virtual SAN and “Mysterious” perform under specific synthetic conditions, we conducted additional benchmarks focusing on a single-thread scenario, with one job (thread) and a queue depth of 1. Because only one I/O is in flight at any time, this setup measures storage access latency in an ideal, controlled environment. The benchmarks focus on 4K random read and write patterns.
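
For readers who want to try a similar single-job, queue-depth-1 pattern, the sketch below builds a command line for fio. It assumes the tests were driven by fio, which the numjobs and iodepth parameters suggest. The I/O engine, direct I/O flag, runtime, and target device `/dev/sdb` are placeholder assumptions and not the settings used in this article.

```python
import shlex


def fio_command(pattern: str, block_size: str, numjobs: int, iodepth: int,
                target: str = "/dev/sdb", runtime_s: int = 60) -> list[str]:
    """Build an fio command line for one test pattern.

    `target` and `runtime_s` are hypothetical defaults; writing to a raw
    device destroys its contents, so point `target` at a scratch disk.
    """
    return [
        "fio",
        f"--name={pattern}-{block_size}",
        f"--filename={target}",
        f"--rw={pattern}",          # e.g. randread, randwrite, read, write
        f"--bs={block_size}",       # e.g. 4k, 64k, 1M
        f"--numjobs={numjobs}",
        f"--iodepth={iodepth}",
        "--ioengine=libaio",        # assumed engine for a Linux guest
        "--direct=1",               # bypass the guest page cache
        "--time_based",
        f"--runtime={runtime_s}",
        "--group_reporting",
    ]


# Single job, queue depth 1, 4K random read.
cmd = fio_command("randread", "4k", numjobs=1, iodepth=1)
print(shlex.join(cmd))
```

The same helper covers the multi-VM patterns discussed earlier by changing the arguments, for example `fio_command("randwrite", "64k", numjobs=2, iodepth=8)`.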

Benchmark results in a table

“Mysterious” over TCP – Host mirroring + RAID5 (1 VM)

| Pattern | Numjobs | IOdepth | IOPS | MiB/s | Latency (ms) |
|---|---|---|---|---|---|
| 4k random read | 1 | 1 | 6,341 | 25 | 0.160 |
| 4k random write | 1 | 1 | 2,413 | 9 | 0.410 |

StarWind VSAN NVMe over RDMA – Host mirroring + RAID5 (1 VM)

| Pattern | Numjobs | IOdepth | IOPS | MiB/s | Latency (ms) |
|---|---|---|---|---|---|
| 4k random read | 1 | 1 | 8,464 | 33 | 0.120 |
| 4k random write | 1 | 1 | 5,416 | 21 | 0.180 |

StarWind VSAN NVMe over TCP – Host mirroring + RAID5 (1 VM)

| Pattern | Numjobs | IOdepth | IOPS | MiB/s | Latency (ms) |
|---|---|---|---|---|---|
| 4k random read | 1 | 1 | 6,039 | 23 | 0.170 |
| 4k random write | 1 | 1 | 3,273 | 12 | 0.310 |
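
With a single job and a queue depth of 1, only one I/O is outstanding at a time, so average latency is approximately the inverse of IOPS, and throughput is IOPS multiplied by the block size. The sketch below checks the tabulated values against these two relationships; it is a sanity check on the reported numbers, not part of the test tooling.

```python
KIB_PER_MIB = 1024

# (configuration, pattern, reported IOPS, reported latency in ms)
rows = [
    ("Mysterious over TCP", "4k random read", 6_341, 0.160),
    ("Mysterious over TCP", "4k random write", 2_413, 0.410),
    ("StarWind NVMe/RDMA", "4k random read", 8_464, 0.120),
    ("StarWind NVMe/RDMA", "4k random write", 5_416, 0.180),
    ("StarWind NVMe/TCP", "4k random read", 6_039, 0.170),
    ("StarWind NVMe/TCP", "4k random write", 3_273, 0.310),
]


def qd1_latency_ms(iops: float) -> float:
    """Expected average latency when exactly one I/O is in flight."""
    return 1000.0 / iops


def throughput_mib_s(iops: float, block_kib: int = 4) -> float:
    """Throughput implied by an IOPS figure at a given block size."""
    return iops * block_kib / KIB_PER_MIB


for config, pattern, iops, reported_ms in rows:
    print(f"{config:20} {pattern:16} "
          f"expected {qd1_latency_ms(iops):.3f} ms, reported {reported_ms:.3f} ms, "
          f"{throughput_mib_s(iops):.1f} MiB/s")
```

For every row, the latency derived from IOPS lands within 0.01 ms of the reported value, which is what a queue-depth-1 test should show.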

Benchmark results in graphs

This section presents visual comparisons of the performance and latency metrics across the storage configurations under test.

4k random read:

Figure 1 highlights the 4K random read performance in IOPS delivered by each storage solution with numjobs=1 and IOdepth=1.

StarWind VSAN NVMe/RDMA achieves the highest IOPS with 8,464, showcasing a 33% performance advantage over “Mysterious”, which reaches 6,341 IOPS.

StarWind VSAN NVMe/TCP, though competitive, records a slightly lower 6,039 IOPS, which is about 4.8% below “Mysterious” and 28.7% lower than StarWind NVMe/RDMA.

StarWind VSAN NVMe/RDMA proves its robust capability in handling read-intensive, single-threaded operations, where high IOPS can be critical for applications needing quick data retrieval.

Latency, a critical metric for real-time read operations, is analyzed in Figure 2.

StarWind VSAN NVMe/RDMA demonstrates an impressively low latency of 0.120 ms for the 4K random read, positioning itself as the clear leader. This represents a 25% latency reduction compared to “Mysterious”, which registers 0.160 ms, and a 29.4% advantage over StarWind VSAN NVMe/TCP’s 0.170 ms.

4k random write:

In the 4K random write test, Figure 3 reveals the IOPS levels each storage solution can achieve.

StarWind VSAN NVMe/RDMA continues to lead with 5,416 IOPS, a result that outpaces “Mysterious” by a considerable 124%, as the latter manages only 2,413 IOPS.

StarWind VSAN NVMe/TCP falls in the middle, achieving 3,273 IOPS, a 35.6% performance increase over “Mysterious”.

Lastly, Figure 4 focuses on 4K random write latency, where low latency remains crucial for efficient write operations.

StarWind VSAN NVMe/RDMA once again excels, keeping latency at 0.180 ms, the lowest among the three configurations. In comparison, “Mysterious” lags with a latency of 0.410 ms, which is 128% higher than StarWind NVMe/RDMA.

StarWind VSAN NVMe over TCP achieves a more balanced middle ground, with a latency of 0.310 ms, placing it 72% higher than RDMA but still offering a 24% reduction compared to “Mysterious”.

These results underscore StarWind NVMe/RDMA’s strong capability for low-latency writes. StarWind TCP provides a balanced alternative, while “Mysterious”, though functional, lags behind, showing a clear gap in latency-sensitive operations compared to StarWind’s high-performance offerings.

Conclusion

When comparing these storage solutions across various workloads, each configuration reveals distinct strengths and trade-offs in throughput, latency, and CPU efficiency – tailored for different priorities.

“Mysterious” really shines in read-heavy tasks, benefiting especially from local-read capabilities in 64K random read and 1M read scenarios. This advantage makes it highly effective for read-intensive and sequential workloads, though it comes with a trade-off: higher CPU usage and latency, especially in dense VM environments. This suggests that while “Mysterious” performs exceptionally well in specific read-heavy tasks, maintaining that performance under heavy loads may demand a higher CPU investment.

In contrast, StarWind VSAN NVMe/RDMA runs within a Linux-based Controller Virtual Machine, so its local read data path routes local traffic through the network, the same way it handles reads from the remote partner. This approach is optimized for deployment simplicity and isolation of critical components.

StarWind VSAN NVMe/RDMA strikes a balance, delivering great performance across both random and sequential workloads. With impressively low latency and minimal CPU consumption, RDMA’s architecture holds steady even as VM counts rise, making it a solid choice for environments that demand low-latency performance without significant resource strain, such as databases, real-time analytics, VDI, and more.

Meanwhile, StarWind VSAN NVMe/TCP keeps a steady, balanced profile, maintaining dependable throughput and moderate CPU usage across various scenarios. Although it does not always reach StarWind VSAN RDMA’s peak performance or match “Mysterious” in high-throughput reads, its balanced output and moderate resource utilization make it a strong, versatile contender for cases when RDMA is not available.

If you have any comments or questions about the article, feel free to reach out via: info@starwind.com