Introduction

In Windows Server 2016 Microsoft introduced storage Quality of Service (QoS) policies. Previously, in Windows Server 2012 R2, we could set minimum and maximum IOPS individually per virtual hard disk, but this was limited even if you could automate it with PowerShell. The maximum was enforced but the minimum was not. That only logged a warning if the minimum could not be delivered, and it took automation that went beyond what was practical for many administrators when it needed to be done at scale. While it was helpful and I used it in certain scenarios, it needed to mature to deliver real value and offer storage QoS in environments that use cost-effective, highly available storage, which often doesn’t include native QoS capabilities for use with Hyper-V.

This was a missing component that became more evident as virtualization environments grew and ever more mixed workloads were put together, and with that came the need to prioritize IO for certain workloads. That was also true in environments where predictability was a hard requirement, which means minimums need to be enforced, something Windows Server 2012 R2 cannot do.

If you are interested in more information on storage QoS in Windows Server 2012 R2 please read my blogs on it.

- Storage Quality of Service (QoS) In Windows Server 2012 R2

- How To Measure IOPS Of A Virtual Machine With Resource Metering And MeasureVM

- How To Monitor Storage QoS Minimum IOPS & Identify VM & The Virtual Hard Disk In Windows Server 2012 R2 Hyper-V

- Where Does Storage QoS Live In Windows Server 2012 R2 Hyper-V

But rest assured, in the Hyper-V production environments under my control I have moved to Windows Server 2016 as fast as possible for many reasons and one of them is storage QoS policies.

With storage QoS, you can create different types of policies, assign these policies to virtual hard disks to apply them, and monitor the storage performance. Within the minimum and maximum boundaries set in a policy, storage performance is adjusted based on the actual storage load (IOPS, bandwidth). Changing the policy settings provides easy centralized management.

What do I need to get storage QoS policies in Windows Server 2016?

Requirements

First of all, you need Windows Server 2016 on all the hosts involved (not the guests).

Storage QoS is dependent on failover clustering and as such can leverage 2 scenarios: Scale-Out File Server (SOFS) or CSVs on a Hyper-V cluster.

This works with any storage array (SAN, shared RAID, Storage Spaces) and protocol (iSCSI, FC, SMB3). This is important. While Storage Spaces and Storage QoS benefit from each other when combined, you don’t need S2D to leverage storage QoS.

Storage QoS on Hyper-V using SOFS

- The servers providing the SOFS shares need to be running failover clustering.

- The Hyper-V hosts consuming the SOFS share can be a Hyper-V failover cluster or a non-clustered Hyper-V Host.

Storage QoS on Hyper-V using Cluster Shared Volumes

- You need a Hyper-V cluster with the Hyper-V role enabled on the nodes.

- Hyper-V needs to use Cluster Shared Volumes (CSV) for storage.

These requirements are met by most Hyper-V clusters out there.

Do note that this means that local or clustered storage that is not CSV is not supported. This also means that virtual iSCSI or FC or even SMB3 shares to the guest are not supported unless it’s a guest cluster playing by the same rules and then only within the guest cluster itself. If you use VHD Sets for guest clusters in accordance with the above requirements then Storage QoS does work.

How does it work?

As we have seen in the requirements, storage QoS is built into Microsoft failover clustering with SOFS and CSVs and into Hyper-V. When you leverage SOFS for storage, this exposes file shares to Hyper-V nodes over SMB3. In Windows Server 2016, SOFS leverages a newly added policy manager which provides the central storage performance monitoring for the VMs running on the SOFS shares. The policy manager communicates the storage QoS policy settings and metrics back to the Hyper-V host. The Hyper-V hosts adjust the storage IO consumption of the virtual machines as needed.

This is an ongoing process that ensures that any change made to policy settings or to the storage IO requirements of the VMs is communicated to the Hyper-V hosts in a timely manner.

With CSV instead of SOFS as storage on the Hyper-V cluster, the storage QoS Resource Manager, the rate limiter and the IO scheduler all run on the Hyper-V nodes that are members of the same cluster. That’s the biggest difference with the SOFS deployment, where the policy manager lives on the SOFS cluster, which is why a non-clustered Hyper-V node leveraging SOFS shares can still have storage QoS.

How to set up Storage QoS

While you had to enable the feature yourself back during the technical previews of Windows Server 2016, this is no longer the case. You will have it automatically at your disposal when you install a new Hyper-V cluster with CSVs or a SOFS cluster, or when you perform a Cluster OS rolling upgrade of an existing failover cluster running Hyper-V with CSVs or Scale-Out File Server to Windows Server 2016. There is nothing for you to do or manage on the cluster Storage QoS Resource; it’s all handled for you.

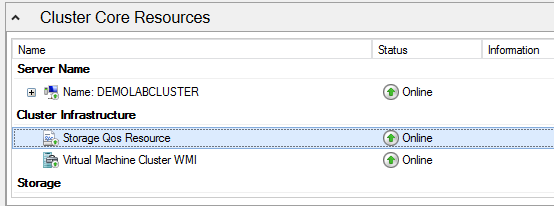

You can see the cluster Storage QoS Resource in the GUI.

Or a query for it via PowerShell:

Get-ClusterResource -Name "Storage Qos Resource"

Important notes about storage QoS Policies

It’s important to note a few things:

- Both minimum and maximum IOPS/Bandwidth are enforced.

- Minimum IOPS are guaranteed but not reserved unless needed. This means that if the IOPS are not needed by the virtual hard disks they are assigned to, they are available to other workloads.

- Minimum IOPS guarantees can only be met as long as the storage volume or storage array can deliver. If the real demand exceeds the total of minimum IOPS configured for one or more VMs and those minimums cannot be met because the demand exceeds what the storage array/volume can deliver, there is contention. That means the status of a flow (file handle to a virtual disk on a VM) or a volume will change from “OK” to “InsufficientThroughput”. You’ll see that status change on that volume or on all volumes, and potentially on one, some or all VMs, depending on the policy settings.

- There are 2 types of storage QoS Policies: dedicated and aggregated. You can have both of them in the same environment and even mix them to achieve your goals. In total, you can have up to 10,000 policies in one failover cluster which should suffice for all your needs.
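The contention scenario in the list above comes down to simple arithmetic. The following is a minimal sketch, not part of the Storage QoS cmdlets: `Test-MinimumIopsFit` is a hypothetical helper, and the deliverable IOPS figure is an assumption you would have to measure for your own volume. It only models the worst case where every disk demands its full minimum at the same time.

```powershell
# Hypothetical helper (not a Storage QoS cmdlet): compares the sum of the
# configured minimum IOPS with an assumed deliverable IOPS figure for a volume.
function Test-MinimumIopsFit {
    param(
        [Parameter(Mandatory)] [int[]] $MinimumIops,     # one entry per virtual hard disk (flow)
        [Parameter(Mandatory)] [int]   $DeliverableIops  # assumed capacity of the volume
    )
    $total = ($MinimumIops | Measure-Object -Sum).Sum
    [pscustomobject]@{
        TotalMinimumIops = [int]$total
        DeliverableIops  = $DeliverableIops
        CanMeetMinimums  = ($total -le $DeliverableIops)
    }
}

# Four disks with a 5,000 IOPS minimum each, on a volume assumed to deliver 15,000 IOPS
$result = Test-MinimumIopsFit -MinimumIops 5000, 5000, 5000, 5000 -DeliverableIops 15000
$result
```

When `CanMeetMinimums` is false under real load, that is the situation in which you would expect flows to report InsufficientThroughput.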

What are dedicated storage QoS policies?

With a dedicated policy, you define a minimum number of IOPS and a maximum number of IOPS and/or bandwidth. The maximum works on a whatever-is-reached-first basis, the IOPS or the bandwidth. The dedicated policy is applied individually to each and every virtual hard disk assigned to that policy.

Let’s look at what that means. If you have a single VM with 3 virtual disks in a single dedicated policy, each disk will get its own allocation of the minimum and maximum settings as defined by the policy. So, if the maximum is 2000 IOPS, each disk can do 2000 IOPS, which combined leads to a 6000 IOPS maximum for the entire VM. This rule is the same when you have multiple VMs with one or more virtual disks. As long as the disks are in the same dedicated policy, each disk gets that same minimum and maximum for itself when possible. As said, you can have different dedicated policies assigned to different virtual hard disks within the same VM or across multiple VMs.
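To make the per-disk arithmetic concrete, here is a small sketch. `Get-DedicatedCeilingPerVm` and the sample disk objects are hypothetical and only illustrate the calculation; they do not query Hyper-V or the cluster.

```powershell
# Hypothetical helper: with a dedicated policy every disk gets the full maximum,
# so the ceiling for a VM is (number of disks) x (policy maximum).
function Get-DedicatedCeilingPerVm {
    param(
        [Parameter(Mandatory)] [object[]] $Disk,
        [Parameter(Mandatory)] [int]      $PolicyMaximumIops
    )
    $Disk | Group-Object -Property VMName | ForEach-Object {
        [pscustomobject]@{
            VMName      = $_.Name
            DiskCount   = $_.Count
            MaximumIops = $_.Count * $PolicyMaximumIops
        }
    }
}

# Made-up inventory: one VM with 3 virtual disks, one VM with a single disk
$disks = @(
    [pscustomobject]@{ VMName = 'VM-A'; Path = 'os.vhdx' }
    [pscustomobject]@{ VMName = 'VM-A'; Path = 'data.vhdx' }
    [pscustomobject]@{ VMName = 'VM-A'; Path = 'log.vhdx' }
    [pscustomobject]@{ VMName = 'VM-B'; Path = 'os.vhdx' }
)

$ceilings = Get-DedicatedCeilingPerVm -Disk $disks -PolicyMaximumIops 2000
$ceilings | Format-Table -AutoSize
```

With a 2000 IOPS maximum, VM-A ends up with a combined ceiling of 6000 IOPS and VM-B with 2000 IOPS, matching the example in the text.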

Use cases for dedicated policies

If you have groups of virtual hard disks or virtual machines where all the hard disks need to meet the same storage IO needs you can set a single dedicated policy for that.

VDI deployments are a prime example of this. You want to ensure each VM gets an adequate minimum IOPS but you also don’t want people to go overboard on their personal VM, ruining the experience for others. On top of that, this provides consistency, which is great because end users tend to complain when they notice a significant difference. At 07:00 AM the early bird knowledge worker might be very happy with her 20,000 IOPS for her reporting tool, but by 09:30 when everyone else is also at work she might call the support desk to find out why her reports run so much slower. This is an excellent example of a use case for dedicated storage QoS policies.

Another example of this would be a SQL Server farm where the servers need the same minimum IOPS and perhaps must be stopped from consuming too much, so they each get the same maximum IOPS. For individual virtual hard disks that have an extra IOPS requirement, you could create a second dedicated policy. That way you’d have one for the data file and one for the log file virtual disks. Perhaps you can have a third dedicated policy for the OS with SQL Server files virtual disks.

What are aggregated storage QoS policies?

An aggregated policy applies the maximum and minimum to the combination of the virtual hard disks it has been assigned to. This means these virtual hard disks share the specified minimum IOPS and maximum IOPS and/or bandwidth in the aggregated policy.

For example, let’s look at an aggregated policy with a minimum of 1000 IOPS and a maximum of 5000 IOPS. When you apply this policy to 3 different virtual hard disks, you are giving those 3 disks together (combined) a minimum of 1000 IOPS and a maximum of 5000 IOPS, as long as the storage system can deliver that. That means that all 3 virtual hard disks will get 1/3 of that 1000 minimum IOPS or 1/3 of that 5000 maximum IOPS if they all have the same workload. But if one hard disk is completely idle, the IOPS will be divided between the other two active virtual hard disks assigned to that policy. This remains the same whether all the virtual hard disks are assigned to a single VM or across VMs.

Use cases for aggregated policies

Aggregated policies come in handy when you want multiple virtual hard disks to share a single policy’s minimum IOPS and maximum IOPS/bandwidth settings. There are multiple use cases for this:
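The division described above can be sketched as follows. This is a simplified model, assuming every active disk has the same workload and therefore gets an equal share; the real distribution follows actual demand. `Get-AggregatedShare` is a hypothetical helper, not a Storage QoS cmdlet.

```powershell
# Hypothetical helper: splits the aggregated minimum and maximum evenly over
# the disks that are currently active (equal-workload assumption).
function Get-AggregatedShare {
    param(
        [Parameter(Mandatory)] [int] $PolicyMinimumIops,
        [Parameter(Mandatory)] [int] $PolicyMaximumIops,
        [Parameter(Mandatory)] [int] $ActiveDiskCount
    )
    if ($ActiveDiskCount -lt 1) { throw 'At least one active disk is required.' }
    [pscustomobject]@{
        ActiveDiskCount    = $ActiveDiskCount
        MinimumIopsPerDisk = [int][math]::Floor([double]$PolicyMinimumIops / $ActiveDiskCount)
        MaximumIopsPerDisk = [int][math]::Floor([double]$PolicyMaximumIops / $ActiveDiskCount)
    }
}

# All 3 disks busy: each gets roughly a third of the minimum and the maximum
$threeActive = Get-AggregatedShare -PolicyMinimumIops 1000 -PolicyMaximumIops 5000 -ActiveDiskCount 3

# One disk idle: the same totals are now divided over the 2 remaining disks
$twoActive = Get-AggregatedShare -PolicyMinimumIops 1000 -PolicyMaximumIops 5000 -ActiveDiskCount 2

$threeActive, $twoActive | Format-Table -AutoSize
```

Whatever the number of active disks, the sum of the per-disk values never exceeds the policy totals, which is the point of an aggregated policy.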

A development team can get an aggregated policy for their test and development VMs. The IT Ops guys can get one for their lab needs if that shares storage with production. That means that the minimum /maximum settings will be divided between them based on demand. All disks will be guaranteed their combined minimum while they will not exceed their specified maximum IOPs or bandwidth. The same goes for tenants in multi-tenant scenarios and you can provide different SLA level policies.

You could define an aggregated policy for all the hard disks of VMs that are part of a service or multi-tier application. In that respect, you could give a policy to an application end to end instead of teams or tenants.

Mixing dedicated and aggregated policies

You can mix and match. Perhaps you can use an aggregated policy for all the virtual disks in a SQL Server farm but use a dedicated policy for the log file virtual disk that is very IO intensive.

What do you achieve by implementing Storage QoS?

Storage QoS Policies in Windows Server 2016 help us achieve 3 important goals. Reporting and monitoring help us gain insight. We prevent excessive IO consumption that might disrupt our services. And we maintain a predictable and easily managed storage IO environment. All included in the box.

Reporting & Monitoring

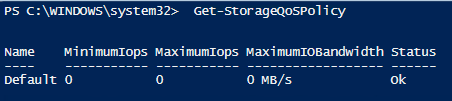

We gain a better picture of storage IO consumption across our Hyper-V deployments at the volume level as well as at the flow level, which comes down to the virtual hard disk level and as such the VM level. Just having storage QoS starts giving us a basic idea even without configuring any storage QoS policy yourself. So you get this with just the default policy, which is easily verified with Get-StorageQoSPolicy.

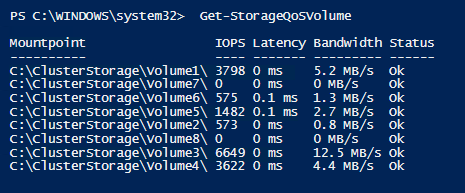

At the volume level with Get-StorageQoSVolume, you’ll see how your CSV / LUNs are doing storage performance wise. You get metric and the status at the volume level. Below you see the standard output of a Hyper-V cluster with CSV before I even created and assigned any storage QoS policies.

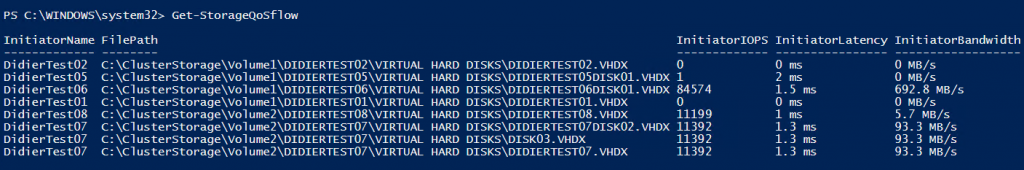

At the flow level with Get-StorageQoSFlow, you get more insight into the flow (*) level. That comes down to what’s happening at the virtual hard disks and as such when you combine that info, the VMs are in regards to storage performance. Below you see the standard output of a Hyper-V cluster with CSV before I even created and assigned any storage QoS policies. Play with PowerShell to find more details. A good one is the status of the flow.

(*) A flow equals each file handle a Hyper-V server opens to a virtual hard disk. If a virtual machine has two virtual hard disks attached, it will have 1 flow to the SOFS or the CSV per file. When a virtual hard disk is shared between multiple VMs in a guest cluster, it will have 1 flow per virtual machine.

Please note that the metrics are gathered for you on all nodes in the SOFS cluster or Hyper-V cluster using CSVs. You can point Get-StorageQoSVolume and Get-StorageQoSFlow at any cluster node and get the same collective output.

You get all this, out of the box, before you have even configured a single policy yourself. Pretty cool, right? And it’s right there at your fingertips without having to enable resource metering on all the VMs and use Measure-VM.

Now when we do implement our own policies we can easily gain a better picture of storage IO consumption across our Hyper-V deployments at the volume level as well as at the flow level, which comes down to the virtual hard disk level and as such the VM level. So, we’ll look at how to do this a bit later.

Perhaps a final word on status:

OK means that the policy settings can be met

InsufficientThroughput means that the minimum IOPs cannot be delivered. This happens when one or more virtual hard disks of one or more VMs have minimum IOPS set in policies that the storage system cannot deliver.

UnknownPolicyId means that a policy was assigned to the virtual machine’s virtual hard disk but is missing. You can remove this assignment or create a policy with that ID. The policy may have been deleted or the VM/virtual hard disk files may have been moved to another cluster that doesn’t have a policy with that ID. Keep your policies and IDs the same across clusters if you have that kind of mobility and need to maintain policies. In this condition, the virtual hard disk / VM is fully functional, but the disk behaves as if no policy has been assigned.
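A quick way to act on these statuses is to filter out everything that is not OK. The sketch below uses made-up sample objects that mimic a few properties of the Get-StorageQoSFlow output, so it runs anywhere; `Select-ProblemFlow` is a hypothetical helper name, not a built-in cmdlet.

```powershell
# Hypothetical filter: passes through only the flows whose status is not OK.
function Select-ProblemFlow {
    param(
        [Parameter(Mandatory, ValueFromPipeline)] $Flow
    )
    process {
        # -ne is case-insensitive, so 'OK' and 'Ok' are treated the same
        if ($Flow.Status -ne 'Ok') { $Flow }
    }
}

# Made-up sample data standing in for Get-StorageQoSFlow output
$sampleFlows = @(
    [pscustomobject]@{ InitiatorName = 'VM01'; FilePath = 'C:\ClusterStorage\Volume1\VM01\os.vhdx';   Status = 'Ok' }
    [pscustomobject]@{ InitiatorName = 'VM02'; FilePath = 'C:\ClusterStorage\Volume1\VM02\data.vhdx'; Status = 'InsufficientThroughput' }
    [pscustomobject]@{ InitiatorName = 'VM03'; FilePath = 'C:\ClusterStorage\Volume1\VM03\os.vhdx';   Status = 'UnknownPolicyId' }
)

$problems = @($sampleFlows | Select-ProblemFlow)
$problems | Format-Table InitiatorName, Status, FilePath -AutoSize
```

On a cluster node you would pipe the real cmdlet into the same filter, for example `Get-StorageQoSFlow | Select-ProblemFlow`.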

More information on these storage QoS policy commands can be found here.

Muting noisy neighbors

When you apply even a basic policy with maximum bandwidth or IOPS, you can put a limit on what storage IO hungry VMs can take. A minimum IOPS setting will allow other VMs to get at least their minimum IOPS even when some VMs are going all out. This prevents one or a few noisy neighbors from hogging all the storage IO. Note that storage QoS is assigned at the virtual hard disk level, which gives you fine granularity in controlling storage IO while still preventing a single VM from being dominant.

Predictable & managed storage IO consumption

By leveraging storage QoS policies we manage storage IO consumption and can make it more predictable. That allows for SLAs and avoids disruptive IO patterns. Aggregated and dedicated storage QoS policies allow for flexibility in addressing the needs of your organization. Policies also deliver an easier way of managing the settings for a larger environment. They help to ensure that excessive storage IO is capped where and when needed while they still allow for higher IO consumption where and when required.

Creating, altering and monitoring storage QoS policies

You should first of all note that there is no practical difference in creating, monitoring and altering dedicated or aggregated storage QoS policies. The only constraint is that you define the type at creation and that cannot be changed afterward.

Storage QoS policies are defined and managed either on the SOFS cluster when leveraging SMB 3 shares or on the Hyper-V cluster itself when working with CSVs on that cluster.

Note that a single cluster can have a maximum of 10,000 storage QoS policies.

I will now walk you through a lab where I create both types of policies and assign them to the virtual hard disks of some lab VMs. We then look at the results and alter the policies to see the effect.

Creating storage QoS Policies

#Remove any Storage QoS policy assigned to our demo VM's virtual hard disks

Get-VM -Name DidierTest07 -ComputerName DEMOLABCLUSTER | Get-VMHardDiskDrive | Set-VMHardDiskDrive -QoSPolicyID $null

Get-VM -Name DidierTest08 -ComputerName DEMOLABCLUSTER | Get-VMHardDiskDrive | Set-VMHardDiskDrive -QoSPolicyID $null

#Get rid of any existing Storage QoS policies

Remove-StorageQosPolicy -Name * -Confirm:$false -ErrorAction Ignore

#Show them that by default there is "only" the default policy

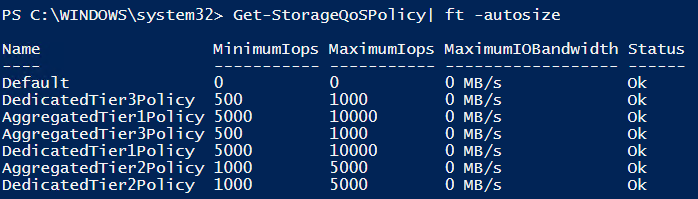

Get-StorageQoSPolicy | ft -autosize

#Create aggregated storage QoS policies. These have the IOPS/Bandwidth settings divided amongst all the VMs' virtual hard disks that have the policy assigned.

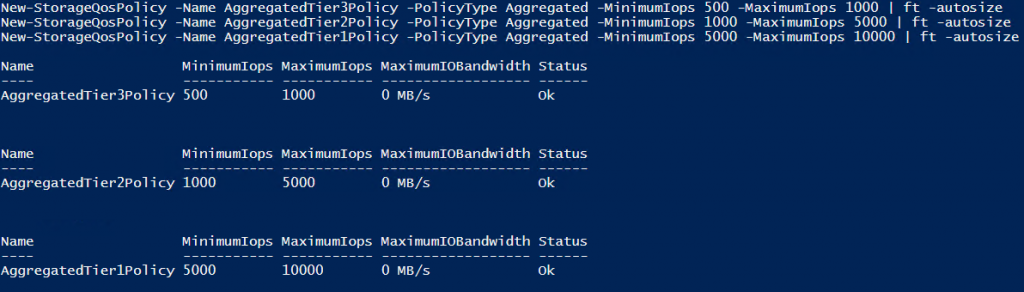

New-StorageQosPolicy -Name AggregatedTier3Policy -PolicyType Aggregated -MinimumIops 500 -MaximumIops 1000 | ft -autosize

New-StorageQosPolicy -Name AggregatedTier2Policy -PolicyType Aggregated -MinimumIops 1000 -MaximumIops 5000 | ft -autosize

New-StorageQosPolicy -Name AggregatedTier1Policy -PolicyType Aggregated -MinimumIops 5000 -MaximumIops 10000 | ft -autosize

#Create dedicated storage QoS policies. These have the IOPS/Bandwidth settings dedicated to each of the VMs' virtual hard disks.

New-StorageQosPolicy -Name DedicatedTier3Policy -PolicyType Dedicated -MinimumIops 500 -MaximumIops 1000 | ft -autosize

New-StorageQosPolicy -Name DedicatedTier2Policy -PolicyType Dedicated -MinimumIops 1000 -MaximumIops 5000 | ft -autosize

New-StorageQosPolicy -Name DedicatedTier1Policy -PolicyType Dedicated -MinimumIops 5000 -MaximumIops 10000 | ft -autosize

#Show the storage QoS policies again

Get-StorageQoSPolicy | ft -autosize

Altering storage QoS policies

If you want to alter a storage QoS policy, that’s easily done with the Set-StorageQosPolicy command:

Set-StorageQosPolicy -Name DedicatedTier1Policy -MinimumIops 1000 -MaximumIops 10000

Or

Set-StorageQosPolicy -Name DedicatedTier1Policy -MinimumIops 1000 -MaximumIops 5000

You get the idea. That’s all there is to it and it takes effect very fast.

Don’t forget you cannot change the policy type. You’ll need to destroy and recreate the policy with the same policy ID to do that. To do so we grab the policy ID and use that to create a new one with that ID after we deleted the original one.

#Grab the ID of the existing policy

$SavePolicyID = (Get-StorageQosPolicy -Name DedicatedTier1Policy).PolicyId

#Remove the existing policy

Remove-StorageQosPolicy -Name DedicatedTier1Policy -Confirm:$false -ErrorAction Ignore

#Create the new policy of a different type but with the same ID as before. The virtual hard disks assigned to that policy will now be subject to the new one

New-StorageQosPolicy -PolicyId $SavePolicyID -PolicyType Aggregated -Name DifferentTypeOfPolicyWithRecuperatedID -MinimumIops 1000 -MaximumIops 5000

Applying storage QoS Policies & monitoring them

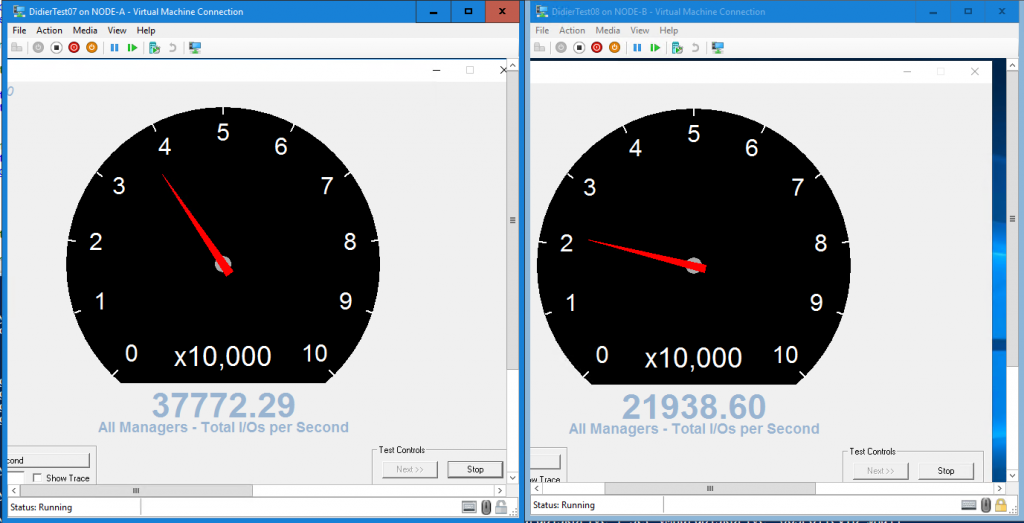

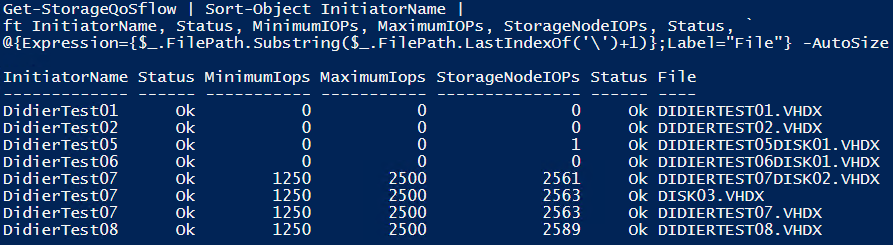

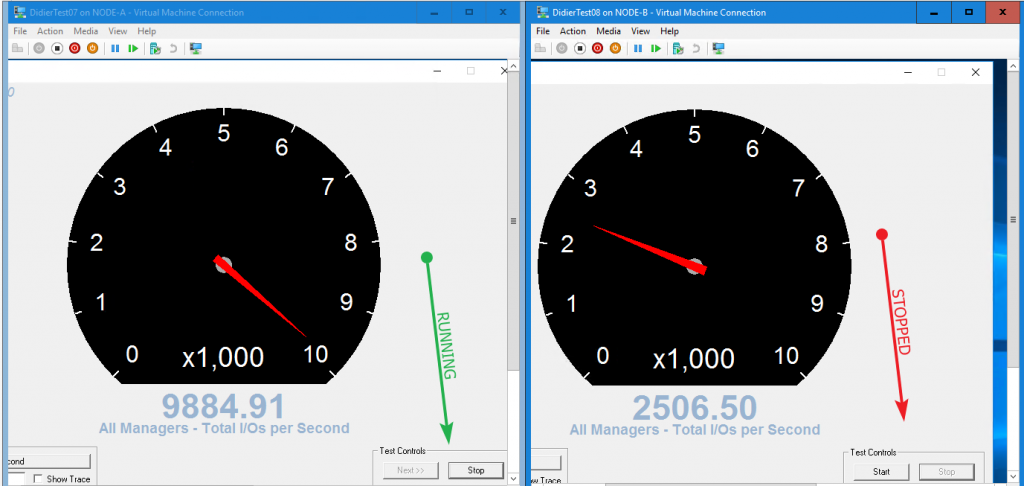

We have not assigned any policies to the virtual hard disks of the VMs yet but we have 2 VMs running with IOMeter blasting away just generating all the IOPS it can. VM DidierTest07 has 3 virtual disks we’ll assign to a policy and VM DidierTest08 has only one. They reside on the same CSV LUN. They are competing for IOPS as they have no restrictions set.

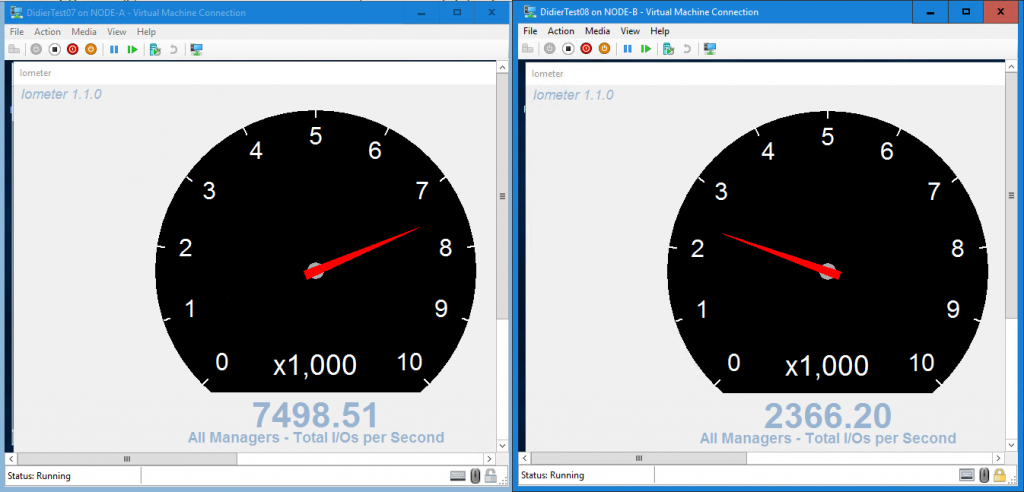

#We put both VMs in the AggregatedTier1Policy

Get-VM -Name DidierTest07 -ComputerName DEMOLABCLUSTER | Get-VMHardDiskDrive | Set-VMHardDiskDrive -QoSPolicyID (get-StorageQosPolicy -Name AggregatedTier1Policy).PolicyId

Get-VM -Name DidierTest08 -ComputerName DEMOLABCLUSTER | Get-VMHardDiskDrive | Set-VMHardDiskDrive -QoSPolicyID (get-StorageQosPolicy -Name AggregatedTier1Policy).PolicyId

The results are seen almost immediately, as we’d expect from an aggregated policy that has 4 virtual hard disks over 2 virtual machines. Each gets its fair share of the maximum because the storage array can deliver that. The maximum IOPS for the policy was 10,000. Divided over 4 virtual hard disks, that’s 2,500 IOPS per VHDX. Remember that DidierTest07 has 3 virtual hard disks (3*2,500 IOPS=7,500 IOPS) and DidierTest08 only 1 (2,500 IOPS).

We see the same when we play a bit with Get-StorageQoSFlow:

Get-StorageQoSFlow | Sort-Object InitiatorName | ft InitiatorName, Status, MinimumIOPs, MaximumIOPs, StorageNodeIOPs, @{Expression={$_.FilePath.Substring($_.FilePath.LastIndexOf('\')+1)};Label="File"} -AutoSize

The minimum and maximum IOPS are nicely divided across the 4 virtual hard disks on our 2 VMs.

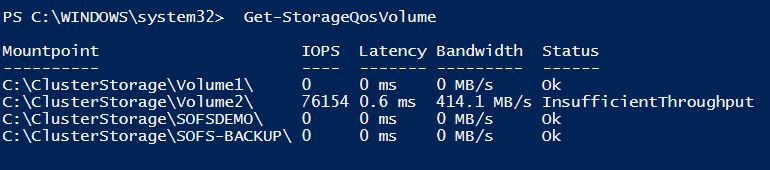

With Get-StorageQosVolume we see that our 2 VMs (the only ones on CSV C:\ClusterStorage\Volume2\ ) are indeed getting the maximum the aggregated storage QoS policy allows, as they generate 10,000 IOPS.

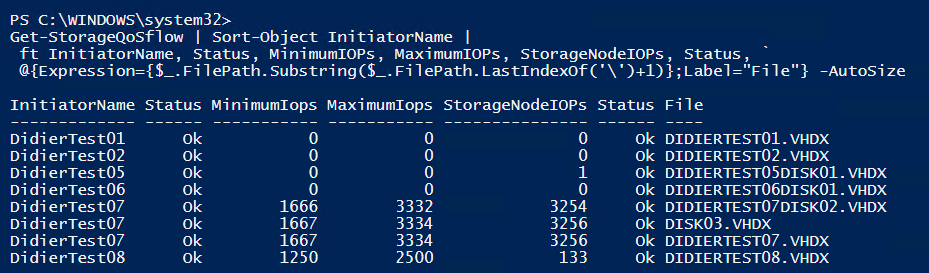

All virtual hard disks will have their MinimumIOPs, MaximumIOPs and MaximumIOBandwidth values adjusted based on their storage IO load. This way the total of the minimum and maximum settings used by all disks assigned to this aggregated policy stays within the defined boundaries. If I stop IOMeter on DidierTest08 we see this happen, but we need to wait a bit; it’s not instantaneous. DidierTest07 with its 3 VHDX files will get 3*3,333 IOPS = 10,000 IOPS, the maximum set by the aggregated policy.

If I start IOMeter again on DidierTest08 you’ll see that the situation returns to the original one. Neat!

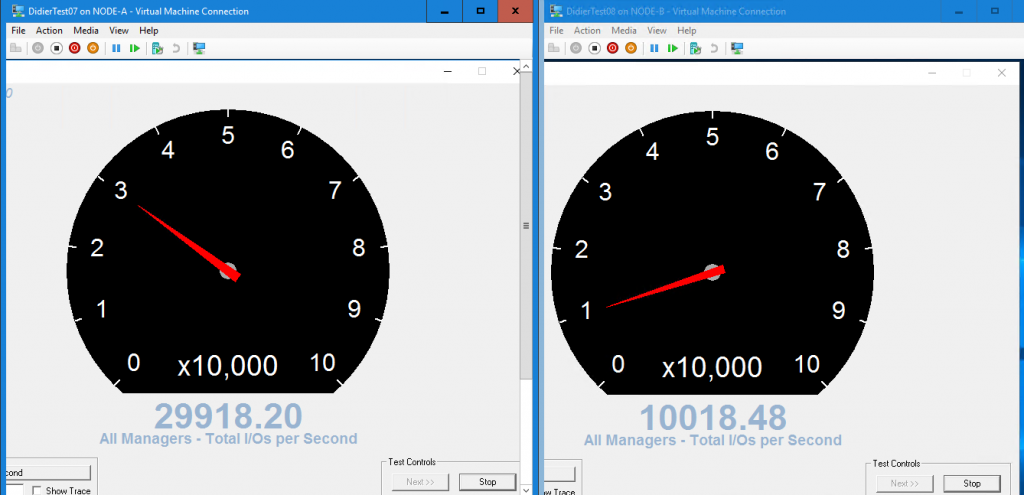

So now we’ll play a bit with the dedicated storage QoS policy and assign that one to the virtual hard disks of our 2 test VMs.

# Put both VMs in the DedicatedTier1Policy

Get-VM -Name DidierTest07 -ComputerName DEMOLABCLUSTER| Get-VMHardDiskDrive | Set-VMHardDiskDrive -QoSPolicyID (get-StorageQosPolicy -Name DedicatedTier1Policy).PolicyId

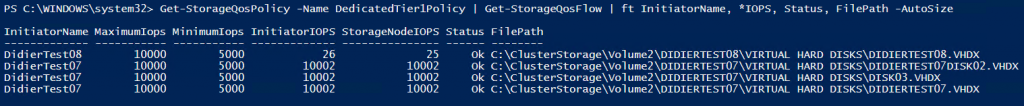

Get-VM -Name DidierTest08 -ComputerName DEMOLABCLUSTER | Get-VMHardDiskDrive | Set-VMHardDiskDrive -QoSPolicyID (get-StorageQosPolicy -Name DedicatedTier1Policy).PolicyId

That gives us this nice picture. As the storage array can deliver the IOPS, each and every individual VHDX assigned to DedicatedTier1Policy gets the maximum IOPS setting defined in that policy: 10,000 IOPS. For DidierTest07 that’s 3 * 10,000 IOPS = 30,000 IOPS and for DidierTest08 that’s 1 * 10,000 IOPS = 10,000 IOPS.

Playing with PowerShell we can confirm this.

Note that stopping IOMeter on DidierTest08 has no impact on DidierTest07, the 3 VHDX files get their maximum but nothing more or less. For them, nothing has changed. The freed up IOPS will be available where and when needed on the cluster VMs or volumes.

Get-StorageQosPolicy -Name DedicatedTier1Policy | Get-StorageQosFlow | ft InitiatorName, *IOPS, Status, FilePath -AutoSize

As the last experiment, I create congestion by hammering that CSV and setting the required minimum to a ridiculous unachievable value, just to show you a status change.

Conclusion

Having storage QoS available without needing to get it from your storage vendors is a really good thing. With Windows Server, it’s included in the operating system, so it’s not an extra cost and provides a valuable capability. It allows you to focus on getting the capacity, latency and IOPS you need by selecting the storage solution you want for that purpose as cost-effectively as possible. For a VDI solution on a hyper-converged system that helps achieve a great TCO and ROI, and it will do the same for multitenant environments, SLAs, prioritization of workloads, etc. It also allows for monitoring your storage performance even if you have no direct need for minimum or maximum storage QoS right now. When you do, you can keep noisy neighbors under control, enforce maximums and guarantee minimums, and dish these out to SLA tiers, to complete (multi-tier) application solutions or to teams/product owners. On top of that, if your storage can’t deliver what’s requested by the policies, you’ll know. I like it a lot as it helps me deliver and manage expectations.