“From Synology’s Active-Passive Architecture Drawbacks to StarWind’s Active-Active Voodoo Magic”

Introduction

The DiskStation DS916+ is an evolution of the DS415+ model. Its storage capacity can be scaled with a DX513 expansion unit, for a total of nine 3.5-inch disk bays. Given the relatively small form factor and impressive capacity potential, such a configuration can be a great solution for small businesses and enthusiasts.

After reviewing the DS916+ specifications and doing some rough calculations, it becomes clear that this unit is fully capable of serving as primary backup storage or a file server.

User-friendly and feature-rich, Synology’s proprietary OS (DSM) can turn the DS916+ into an SMB/NFS NAS or an iSCSI SAN in a few minutes. It also offers many features useful for storage administrators: the “active-passive” DSM HA Cluster, detailed storage performance statistics, a network performance monitor, a hardware status monitor, and many others.

In our experience, an “active-passive” HA configuration is not the best choice for production scenarios. In a two-node cluster, one of the nodes stays on standby, so only the resources of a single node are used. Compared to an “active-active” configuration, this means half the potential throughput, worse read performance, and longer failover times.

To verify this, we got ourselves a pair of Synology DS916+ units and benchmarked them. The main objective of our experiments is to determine whether mid-range Synology units can handle the light production workloads typical for small businesses. We will also deploy VSAN from StarWind Free on top of our Synology boxes and check how it performs.

In today’s article, we will run a set of benchmarks to measure the performance of the DS916+ in different scenarios.

Specifications

Each DS916+ unit has the following specifications:

CPU: 1x Quad Core Intel Pentium N3710 with up to 2.56 GHz burst frequency

RAM: 2 GB DDR3

Storage: 4x WD RE 3 TB Enterprise Hard Drives: 3.5 Inch, 7200 RPM, SATA III, 64 MB Cache

Network: 2x RJ-45 1GbE LAN Ports

OS: DSM 6.0.2-8451 Update 4

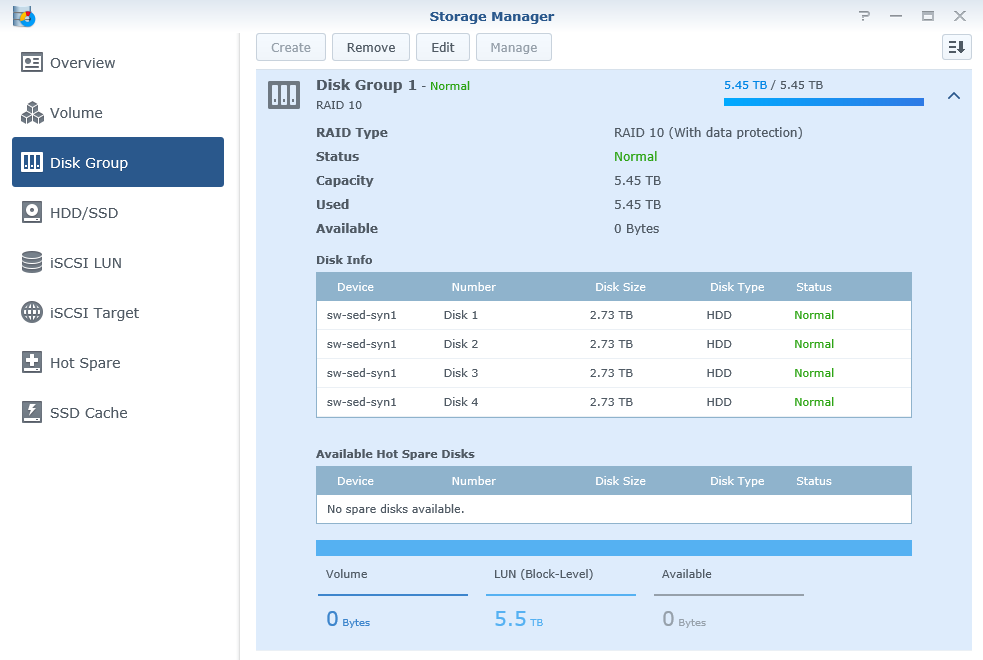

We configured RAID 10 to balance performance and redundancy. We do not recommend RAID 5/6 on spindle arrays that will be used as virtual machine storage.
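To make the RAID trade-off concrete, here is a small sketch using textbook values: usable capacity per RAID level and the write penalty (back-end disk I/Os per front-end random write: 2 for RAID 10, 4 for RAID 5, 6 for RAID 6). These are generic figures, not measurements from our DS916+ units, and real behavior also depends on caching and on the RAID implementation.

```python
# Minimum disk counts and textbook write penalties (back-end disk I/Os per random write).
MIN_DISKS = {"RAID 10": 4, "RAID 5": 3, "RAID 6": 4}
WRITE_PENALTY = {"RAID 10": 2, "RAID 5": 4, "RAID 6": 6}


def usable_tb(level: str, disks: int, disk_tb: float) -> float:
    """Usable capacity in TB, ignoring filesystem and system-partition overhead."""
    if disks < MIN_DISKS[level]:
        raise ValueError(f"{level} needs at least {MIN_DISKS[level]} disks")
    if level == "RAID 10":
        if disks % 2:
            raise ValueError("RAID 10 needs an even number of disks")
        return disks / 2 * disk_tb   # every block is stored twice
    if level == "RAID 5":
        return (disks - 1) * disk_tb  # one disk's worth of parity
    return (disks - 2) * disk_tb      # RAID 6: two disks' worth of parity


# Four 3 TB drives per unit, as in our DS916+ boxes.
for level, penalty in WRITE_PENALTY.items():
    print(f"{level}: {usable_tb(level, 4, 3.0):.0f} TB usable, "
          f"{penalty} disk I/Os per random write")
```

With only four drives, RAID 6 yields the same 6 TB as RAID 10 while tripling the cost of every random write, which is why RAID 10 is the more sensible choice for VM storage on spinning disks.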

DSM Storage Manager “Disk Group” view:

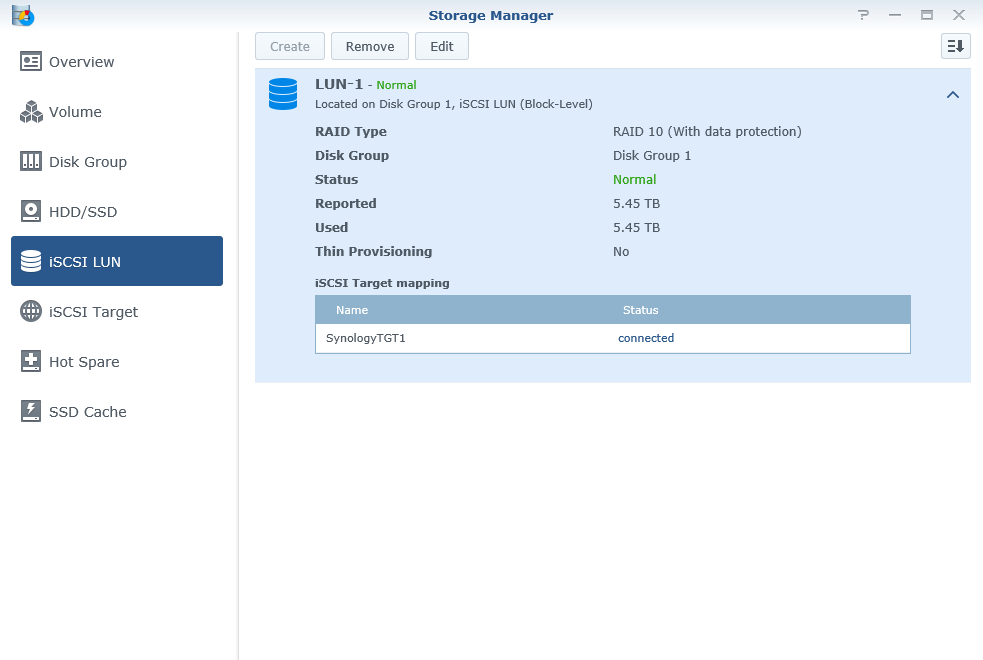

An iSCSI LUN and the corresponding iSCSI target were created on top of the RAID 10 disk group.

DSM Storage Manager “iSCSI LUN” view:

Performance benchmark

All the tests below were performed with the Microsoft DiskSpd tool. Our benchmark includes several access patterns that show how the storage performs under particular workload types.

We picked the following patterns for our benchmark:

- 4K 100% Random Read

- 4K 100% Random Write

- 8K 67% Random Read 33% Random Write

- 8K 67% Sequential Read 33% Sequential Write

- 64K 100% Sequential Read

- 64K 100% Sequential Write

- 256K 100% Sequential Read

- 256K 100% Sequential Write

As you can see, we cover the “industry standard” 4K, “production-like” mixed 8K, larger sequential 64K, and “backup-ready” 256K patterns.
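The eight patterns map onto a handful of DiskSpd flags: `-b` sets the block size, `-w` the write percentage, and `-r` (random) or `-si` (sequential, with one offset shared across threads) the access type. The sketch below builds the command lines used in this article from a pattern table. DiskSpd also expects a target file as its last argument; the commands in this article omit it, so `T:\testfile.dat` is a hypothetical placeholder.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pattern:
    block: str      # DiskSpd -b: block size, e.g. "4K"
    write_pct: int  # DiskSpd -w: percentage of write operations
    random: bool    # True -> -r (random); False -> -si (interlocked sequential)


PATTERNS = {
    "4K 100% Random Read": Pattern("4K", 0, True),
    "4K 100% Random Write": Pattern("4K", 100, True),
    "8K 67% Random Read 33% Random Write": Pattern("8K", 33, True),
    "8K 67% Sequential Read 33% Sequential Write": Pattern("8K", 33, False),
    "64K 100% Sequential Read": Pattern("64K", 0, False),
    "64K 100% Sequential Write": Pattern("64K", 100, False),
    "256K 100% Sequential Read": Pattern("256K", 0, False),
    "256K 100% Sequential Write": Pattern("256K", 100, False),
}

TARGET = r"T:\testfile.dat"  # hypothetical test file on the iSCSI volume


def diskspd_args(p: Pattern, target: str, threads: int = 8, outstanding: int = 8,
                 duration_s: int = 60, file_size: str = "1000G") -> list[str]:
    """Build a DiskSpd argument list with the flags used in this article."""
    return [
        "diskspd.exe",
        f"-t{threads}",               # threads per target
        f"-o{outstanding}",           # outstanding I/Os per thread
        "-r" if p.random else "-si",  # random or interlocked sequential access
        f"-b{p.block}",               # block size
        f"-w{p.write_pct}",           # write percentage
        f"-d{duration_s}",            # test duration in seconds
        "-h",                         # disable software and hardware write caching
        "-L",                         # collect latency statistics
        f"-c{file_size}",             # create the test file with this size
        target,
    ]


for name, pattern in PATTERNS.items():
    cmd = " ".join(diskspd_args(pattern, TARGET))
    print(f"{name}:\n  {cmd}")
```

Keeping the patterns in one table makes it harder for a single command to drift out of sync when the same suite is rerun against another configuration.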

We tested different DiskSpd thread/queue combinations to squeeze the maximum performance out of our setup while keeping latency at acceptable levels. We settled on 8 threads and 8 outstanding I/Os per thread.
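Little’s law (throughput = concurrency / latency) helps explain why the queue depth has to be bounded. DiskSpd’s `-o` applies per thread, so 8 threads × 8 outstanding I/Os keep 64 I/Os in flight. Once the array cannot complete I/Os any faster, adding more outstanding I/Os only increases latency. The latencies below are hypothetical inputs, not our measurements.

```python
def in_flight(threads: int, outstanding_per_thread: int, targets: int = 1) -> int:
    """Total I/Os DiskSpd keeps in flight: -o applies per thread and per target."""
    return threads * outstanding_per_thread * targets


def iops_from_littles_law(in_flight_ios: int, avg_latency_ms: float) -> float:
    """Little's law for a closed loop: throughput = concurrency / response time."""
    return in_flight_ios / (avg_latency_ms / 1000)


queue = in_flight(threads=8, outstanding_per_thread=8)  # 64 I/Os in flight
for latency_ms in (5, 20, 80):  # hypothetical average latencies
    print(f"{queue} in flight at {latency_ms} ms -> "
          f"~{iops_from_littles_law(queue, latency_ms):,.0f} IOPS")
```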

Measuring the iSCSI performance of a standalone Synology DS916+ unit

In this test, a single DS916+ box is connected to the Supermicro server via two 1GbE networks. The Supermicro server runs Windows Server 2016 with the MPIO feature installed and configured in “Round Robin” mode.
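Conceptually, a Round Robin multipath policy sends each new I/O to the next available path in turn, so both 1GbE links carry traffic at once. The toy model below illustrates only that rotation. The path names are hypothetical, and the actual Windows MPIO implementation is more involved (path health checks, failover, and so on).

```python
from collections import Counter
from itertools import cycle


class RoundRobinPolicy:
    """Toy round-robin multipath policy: each I/O goes to the next path in turn."""

    def __init__(self, paths: list[str]):
        if not paths:
            raise ValueError("at least one path is required")
        self._paths = cycle(paths)

    def next_path(self) -> str:
        return next(self._paths)


# Hypothetical names for the two 1GbE iSCSI sessions to the DS916+.
policy = RoundRobinPolicy(["1GbE path A", "1GbE path B"])
counts = Counter(policy.next_path() for _ in range(1000))
print(counts)
```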

- Test duration: 60 seconds

- Test file size: 1TB

- Threads: 8

- Outstanding I/Os: 8

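Corresponding DiskSpd parameters (the path to the test file on the iSCSI volume, which DiskSpd expects as the last argument, is omitted from each command):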

4K 100% Random Read:

```
diskspd.exe -t8 -o8 -r -b4K -w0 -d60 -h -L -c1000G
```

4K 100% Random Write:

```
diskspd.exe -t8 -o8 -r -b4K -w100 -d60 -h -L -c1000G
```

8K 67% Sequential Read 33% Sequential Write:

```
diskspd.exe -t8 -o8 -si -b8K -w33 -d60 -h -L -c1000G
```

8K 67% Random Read 33% Random Write:

```
diskspd.exe -t8 -o8 -r -b8K -w33 -d60 -h -L -c1000G
```

64K 100% Sequential Read:

```
diskspd.exe -t8 -o8 -si -b64K -w0 -d60 -h -L -c1000G
```

64K 100% Sequential Write:

```
diskspd.exe -t8 -o8 -si -b64K -w100 -d60 -h -L -c1000G
```

256K 100% Sequential Read:

```
diskspd.exe -t8 -o8 -si -b256K -w0 -d60 -h -L -c1000G
```

256K 100% Sequential Write:

```
diskspd.exe -t8 -o8 -si -b256K -w100 -d60 -h -L -c1000G
```

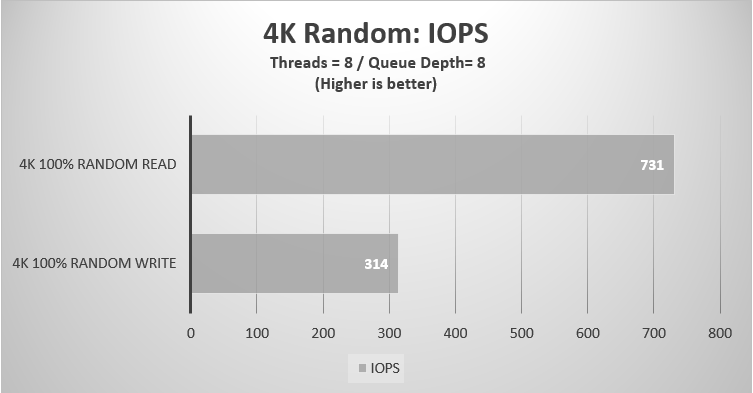

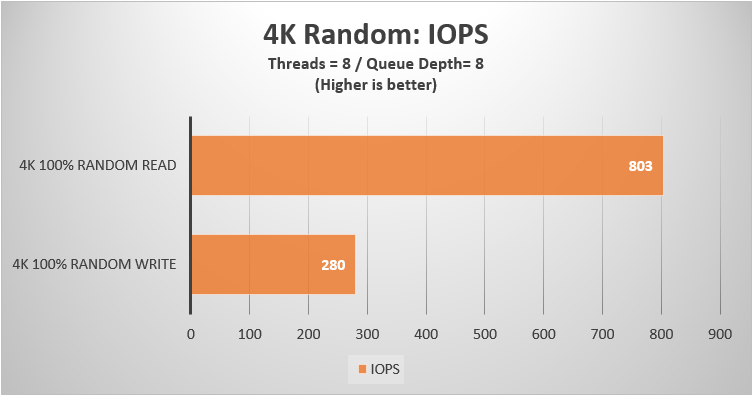

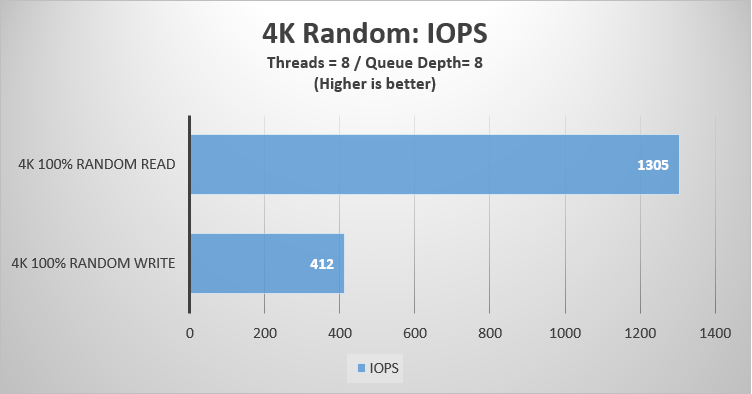

As a starting point, we measured random 4K performance in 100% read and 100% write workloads:

The results match our expectations quite precisely. Write operations struggled a little, which is understandable since Synology systems use software RAID.

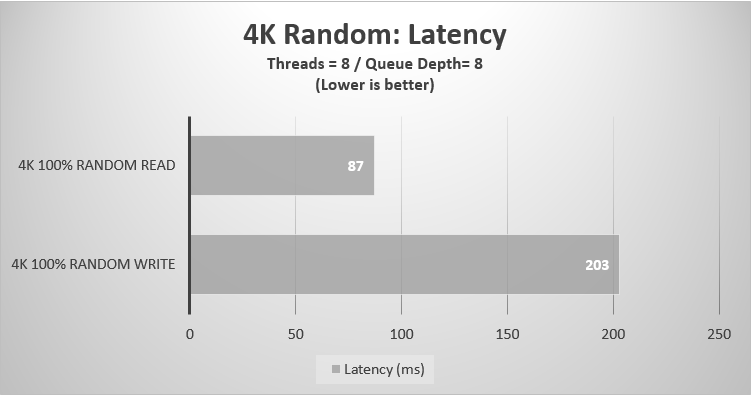

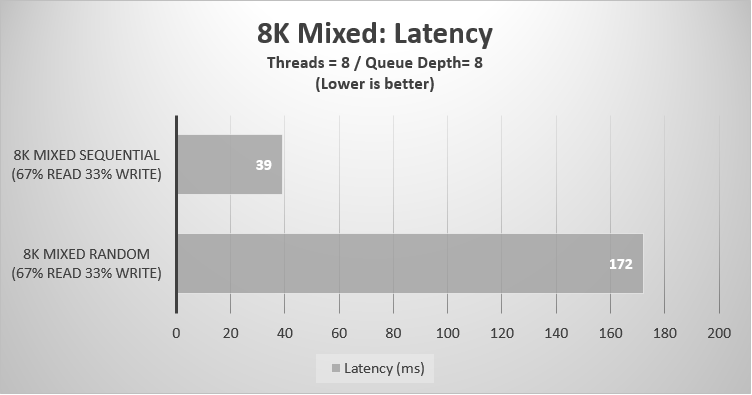

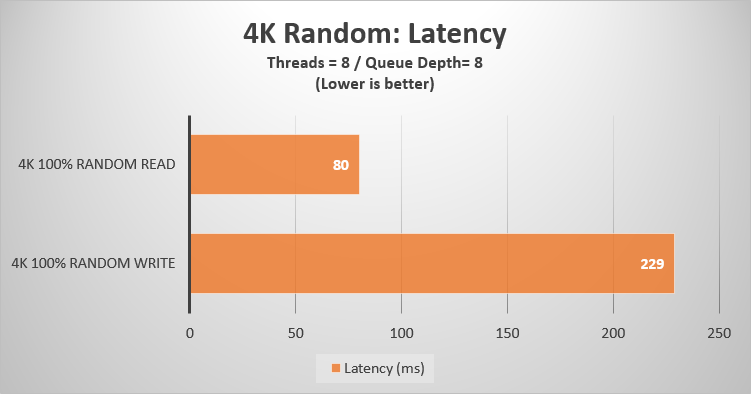

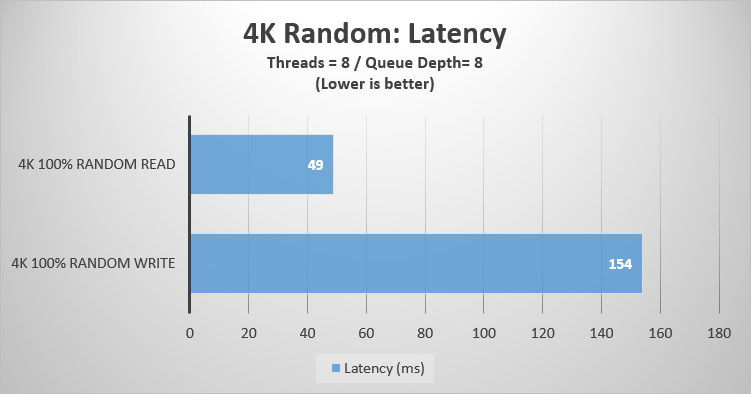

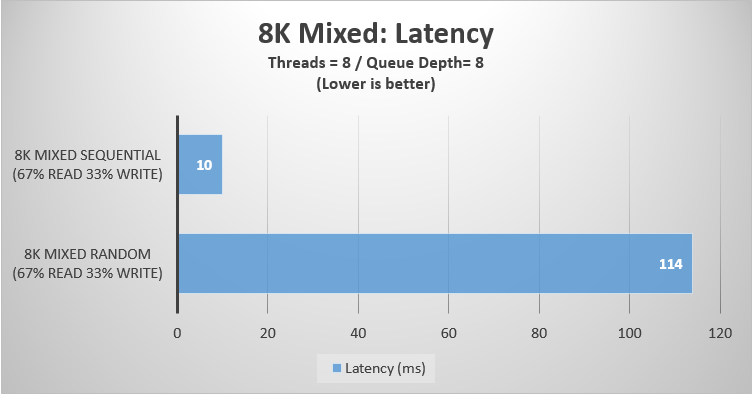

Given the workload complexity, the latency values we got are acceptable:

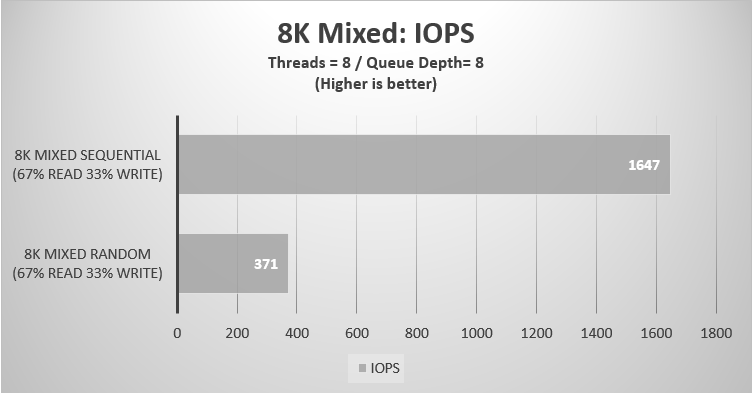

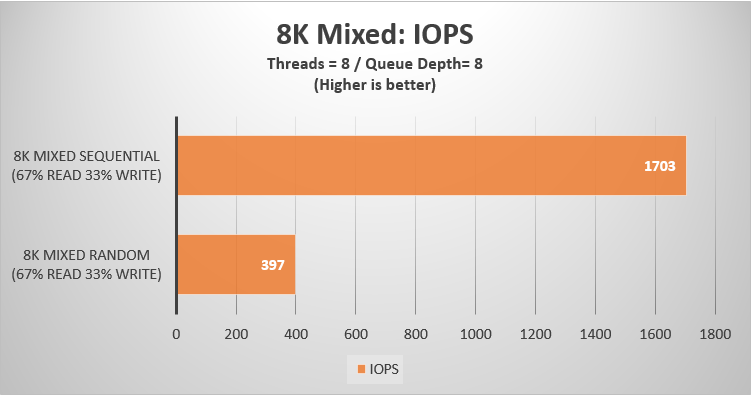

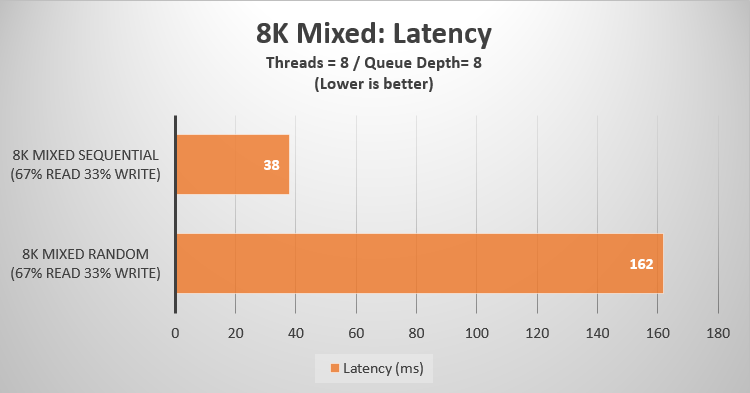

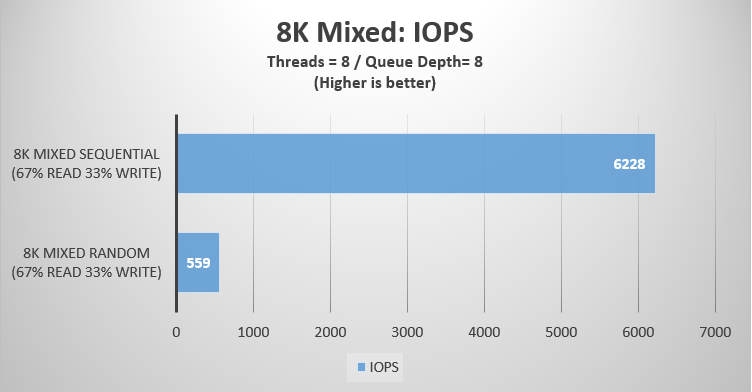

Moving on, we tried some more realistic access patterns. Mixed 8K transfers (67% read, 33% write) produce less synthetic results and help interpret the achieved values.
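For random I/O that misses the cache, the classic write-penalty model gives a rough feel for how a read/write mix loads the disks: each front-end read costs one back-end I/O, and each front-end write costs two on RAID 10 (one per mirror copy). The 80 IOPS per 7200 RPM drive used below is an assumed ballpark, not a measured value, and caching can shift real results considerably.

```python
def frontend_iops(backend_iops: float, read_fraction: float, write_penalty: int) -> float:
    """Front-end IOPS a RAID set can sustain for a given read/write mix.

    Each front-end read costs one back-end I/O; each write costs `write_penalty` I/Os.
    """
    if not 0.0 <= read_fraction <= 1.0:
        raise ValueError("read_fraction must be between 0 and 1")
    write_fraction = 1.0 - read_fraction
    return backend_iops / (read_fraction + write_fraction * write_penalty)


backend = 4 * 80  # four 7200 RPM drives at an assumed 80 random IOPS each
for label, reads in (("100% read", 1.0), ("67/33 mix", 0.67), ("100% write", 0.0)):
    print(f"RAID 10, {label}: ~{frontend_iops(backend, reads, 2):.0f} IOPS")
```

The mixed pattern lands between the pure-read and pure-write extremes, which is why it is a more representative single number for VM workloads than either synthetic case.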

As clearly indicated in the result charts, our WD RE spinners prefer sequential patterns, which is not surprising at all.

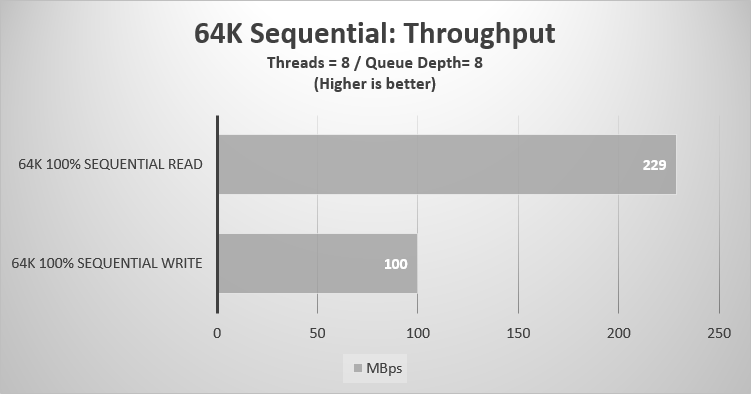

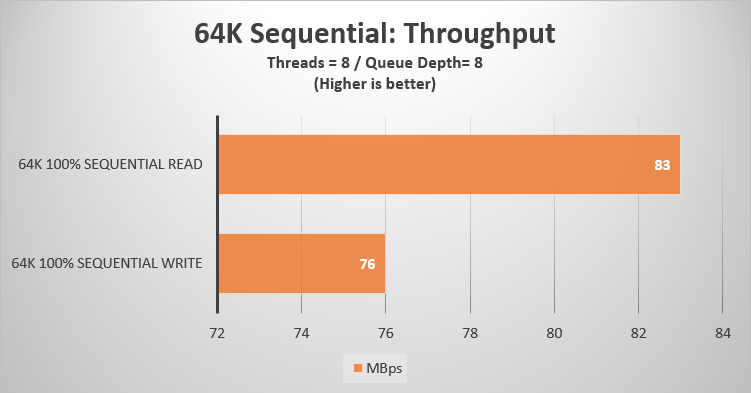

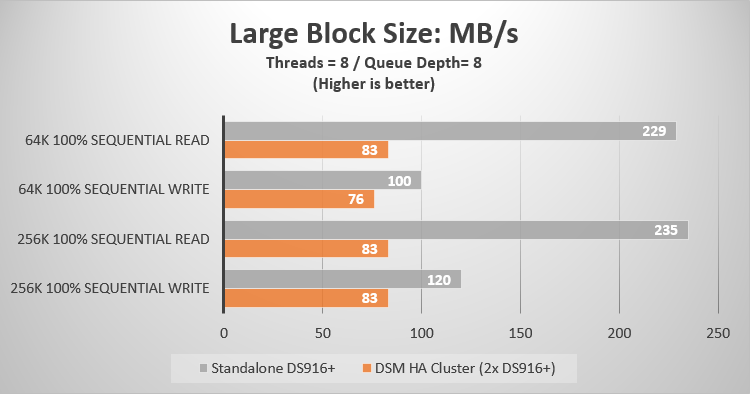

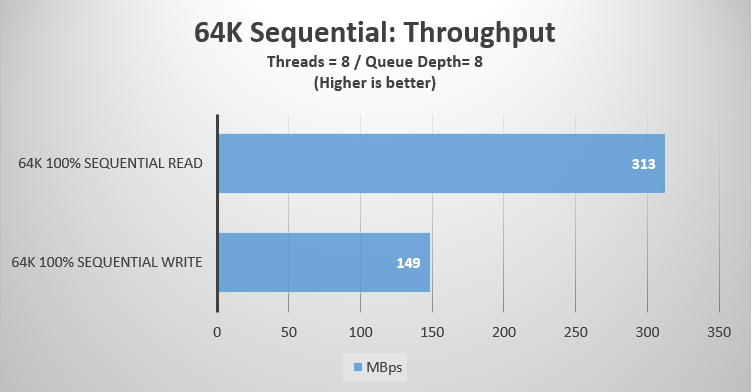

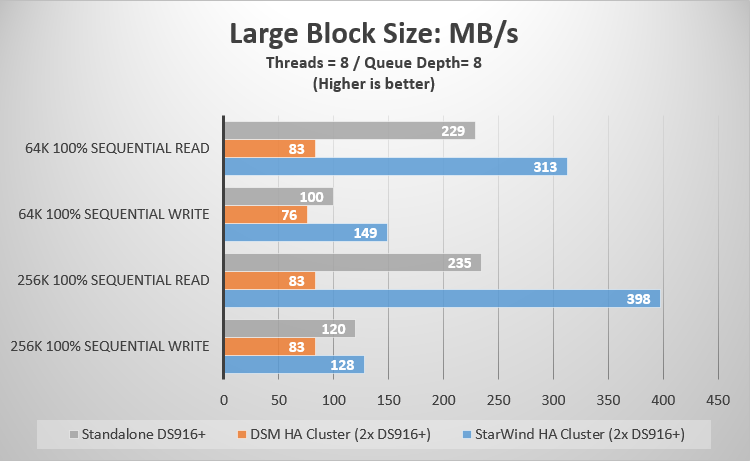

Continuing our tests, we measured the performance of the larger 64K and 256K sequential patterns. Such workloads are typical of backup jobs and file server operations. With bigger blocks, throughput is a more relevant metric than IOPS.
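The reason throughput matters more than IOPS for large blocks is simple arithmetic: throughput = IOPS × block size. The sketch below shows how many I/Os per second it takes to move the same hypothetical 200 MB/s at different block sizes (MB here means 10^6 bytes).

```python
def mb_per_s(iops: float, block_bytes: int) -> float:
    """Throughput in MB/s (10**6 bytes per second) for an IOPS rate and block size."""
    return iops * block_bytes / 1_000_000


def iops_needed(mb_s: float, block_bytes: int) -> float:
    """IOPS required to sustain a given throughput at a given block size."""
    return mb_s * 1_000_000 / block_bytes


for kib in (4, 64, 256):
    print(f"{kib}K blocks: 200 MB/s needs ~{iops_needed(200, kib * 1024):,.0f} IOPS")
```

At 4K the same bandwidth would require tens of thousands of IOPS, far beyond a handful of 7200 RPM drives, while at 256K fewer than a thousand large I/Os per second suffice, so the network link becomes the limit instead.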

229 MB/s is a good result for sequential reads, given that we are hitting the network throughput limit here. Network utilization was around 1.8 Gbps out of a possible 2 Gbps (remember that we have 2x 1GbE networks with MPIO enabled).
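The conversion behind the 1.8 Gbps figure is shown below: multiply MB/s by 8 to get megabits, then compare against the raw line rate of the active links. The sketch ignores Ethernet, TCP, and iSCSI protocol overhead, so the usable ceiling is somewhat lower than the raw figure.

```python
def mb_s_to_gbps(mb_s: float) -> float:
    """Convert MB/s (10**6 bytes/s) to Gbit/s (10**9 bits/s)."""
    return mb_s * 8 / 1000


def link_ceiling_mb_s(links: int, link_gbps: float = 1.0) -> float:
    """Raw aggregate line rate in MB/s, before protocol overhead."""
    return links * link_gbps * 1000 / 8


measured = 229  # MB/s, sequential read on the standalone DS916+
print(f"{mb_s_to_gbps(measured):.2f} Gbps on 2x 1GbE "
      f"({measured / link_ceiling_mb_s(2):.0%} of the raw line rate)")
```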

Sequential writes look different. We achieved 100 MB/s, which, surprisingly, is just half of the throughput declared for the DS916+ model.

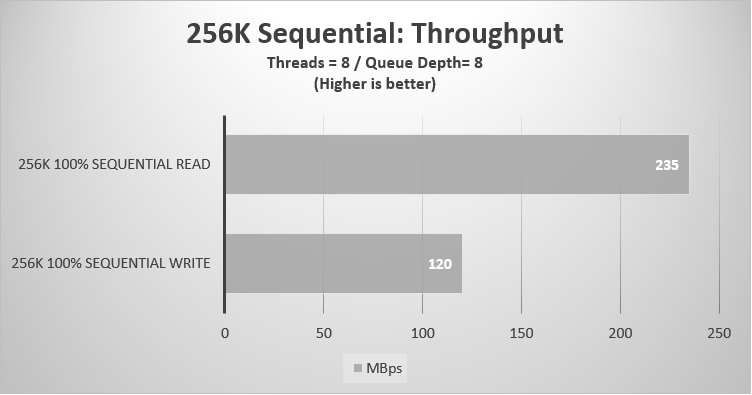

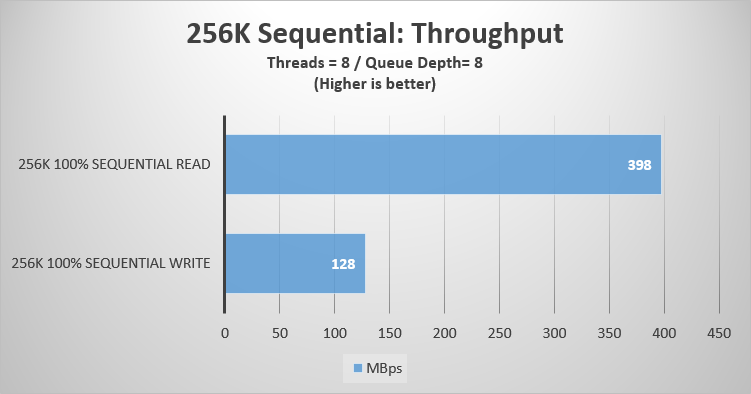

Next, we tested 256K sequential access:

With the largest sequential block size, we achieved what we believe is the maximum array throughput.

To sum up, a single Synology unit is indeed capable of running 5-8 lightweight VMs. Moreover, its sequential performance is sufficient for routine backup and file server tasks, which are the main purpose of most NAS units on the market.

Measuring the iSCSI performance of 2x Synology DS916+ in a DSM HA Cluster setup

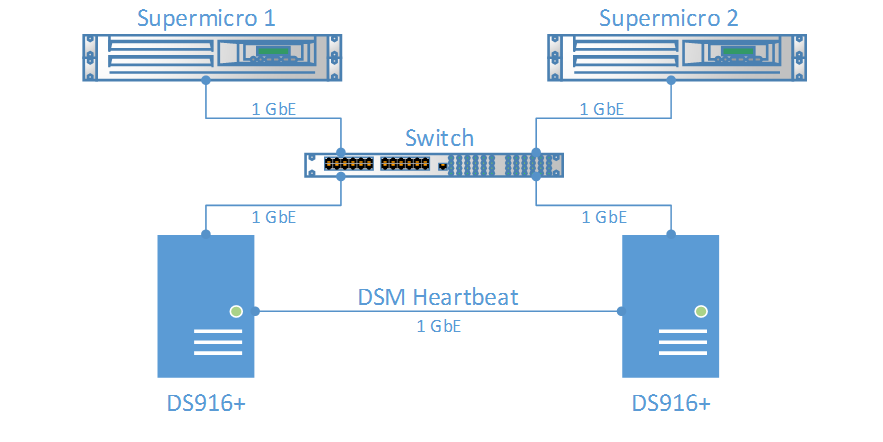

As costs decrease year by year, HA solutions are becoming more affordable for small and medium businesses. Users have been able to take advantage of basic HA features on NAS devices for a while now, and our Synology model is no exception. Thus, we decided to test a pair of our DS916+ units connected to a pair of Supermicro servers in a DSM HA Cluster configuration.

DSM clustering works in “active-passive” mode, and multipathing is no longer an option, since one 1GbE port on each unit is used for replication and heartbeat traffic.

Network diagram:

We keep the same DiskSpd settings throughout the benchmark:

- Test duration: 60 seconds

- Test file size: 1TB

- Threads: 8

- Outstanding I/Os: 8

The corresponding DiskSpd parameters are the same as well:

4K 100% Random Read:

```
diskspd.exe -t8 -o8 -r -b4K -w0 -d60 -h -L -c1000G
```

4K 100% Random Write:

```
diskspd.exe -t8 -o8 -r -b4K -w100 -d60 -h -L -c1000G
```

8K 67% Sequential Read 33% Sequential Write:

```
diskspd.exe -t8 -o8 -si -b8K -w33 -d60 -h -L -c1000G
```

8K 67% Random Read 33% Random Write:

```
diskspd.exe -t8 -o8 -r -b8K -w33 -d60 -h -L -c1000G
```

64K 100% Sequential Read:

```
diskspd.exe -t8 -o8 -si -b64K -w0 -d60 -h -L -c1000G
```

64K 100% Sequential Write:

```
diskspd.exe -t8 -o8 -si -b64K -w100 -d60 -h -L -c1000G
```

256K 100% Sequential Read:

```
diskspd.exe -t8 -o8 -si -b256K -w0 -d60 -h -L -c1000G
```

256K 100% Sequential Write:

```
diskspd.exe -t8 -o8 -si -b256K -w100 -d60 -h -L -c1000G
```

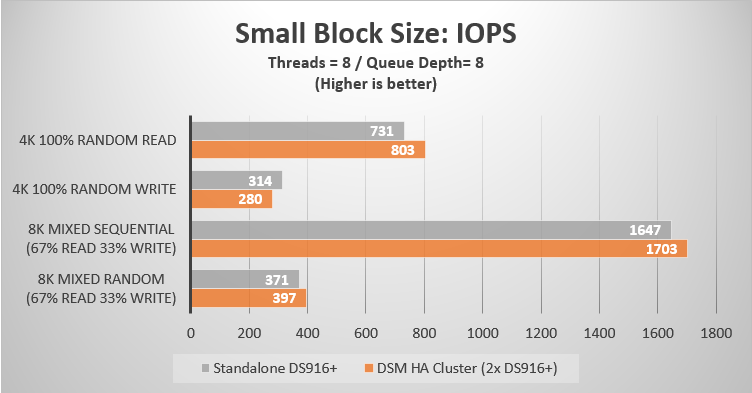

We repeated our set of tests for this scenario, starting with the 4K random pattern:

Compared to the single Synology unit, read performance improved, while write performance suffered a bit.

The latency chart reflects the same trend:

It is time to benchmark the “real world” patterns:

This time, the results are almost the same as those of the single unit.

Latency stays at a similar level as well:

Next, we tested the large sequential patterns. These access specifications are more informative here, since they reveal the actual network saturation:

In the DSM HA Cluster configuration, we were left with just one active 1GbE port on a single “active” unit, compared to two active 1GbE ports in the standalone configuration. The network throughput limitation clearly affects large-block workloads: the DSM HA Cluster results are significantly lower than those of the single DS916+ unit.

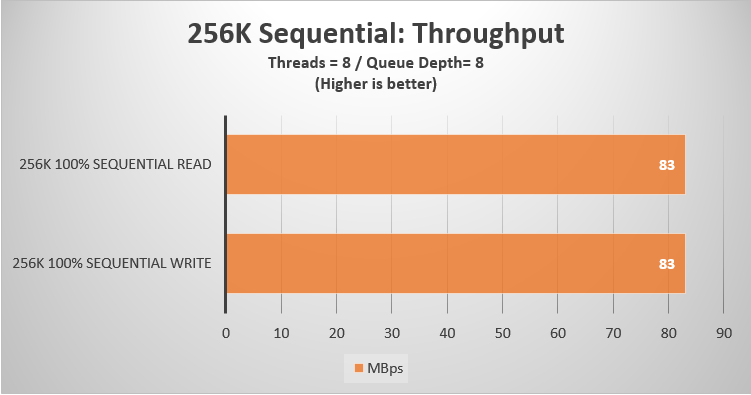

We see a similar picture with the larger block size:

It seems that 83 MB/s is the highest throughput we can achieve in the DSM HA configuration. Surprisingly, we were not even close to saturating a single 1GbE port (125 MB/s) in the DSM HA Cluster configuration.

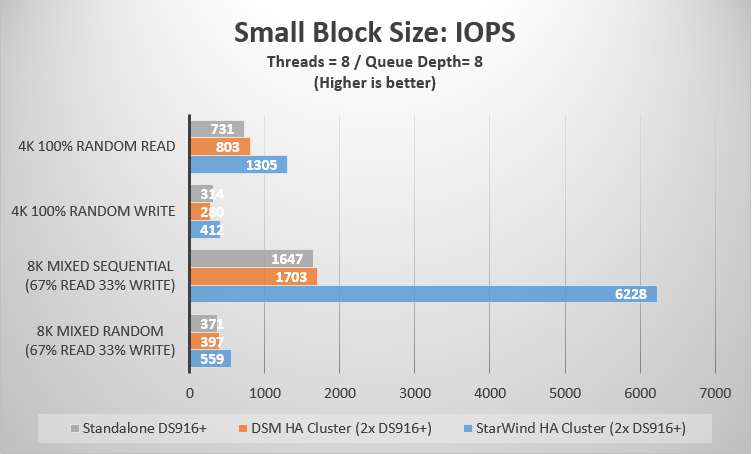

The charts below compare the benchmark results from the previously tested scenarios:

As we can see, the DSM HA Cluster slightly outperforms a single Synology box in smaller-block workloads, except for the “4K 100% Random Write” pattern, where the single Synology unit performed better by a small margin.

The 64K and 256K results make it clear that a standalone Synology box performs much better than the DSM HA Cluster, thanks to its ability to use Multipath I/O in Round Robin mode. This basically translates into 1 Gbps vs 2 Gbps of maximum possible throughput.

Measuring the iSCSI performance of VSAN from StarWind Free deployed over 2x Synology DS916+

After reviewing the DSM HA Cluster benchmark results, we concluded that our setup had plenty of room for improvement, especially in 64K to 256K sequential performance. Large-block workloads are quite crucial in any production environment, even if they make up a relatively small portion of the total workload.

With these thoughts in mind, we decided to rebuild our setup and replace the Synology DSM HA Cluster with VSAN from StarWind Free to handle the data replication.

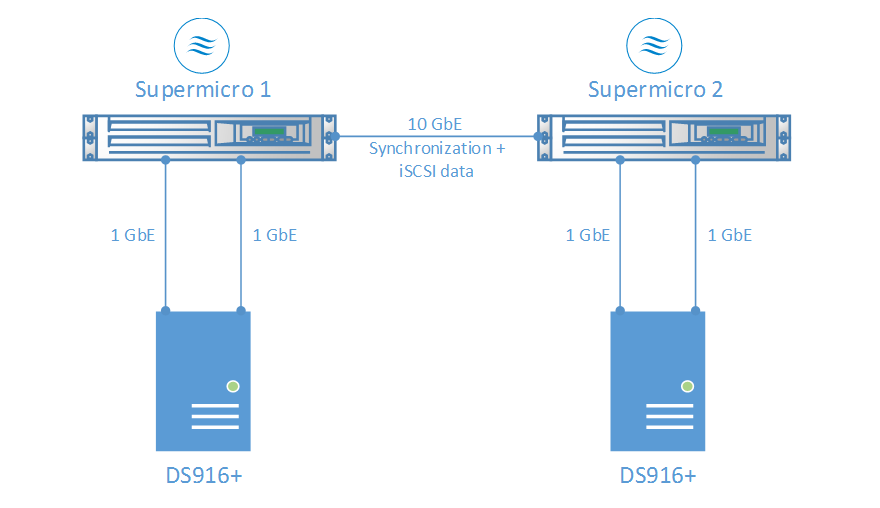

In our new setup, we use 2x Synology DS916+ units, each connected to its Supermicro server via 2x 1GbE networks. The Supermicro servers are connected to each other via a single 10GbE network that carries both StarWind synchronization and iSCSI traffic. We use 10GbE NICs to eliminate a possible network bottleneck.

Network diagram:

StarWind device settings:

- Type: Imagefile

- Size: 1TB

- L1 cache: no cache

- L2 cache: no cache

Once again, we are keeping the same DiskSpd settings for this test sequence:

- Test duration: 60 seconds

- Test file size: 1TB

- Threads: 8

- Outstanding I/Os: 8

The corresponding DiskSpd parameters are the same as well:

4K 100% Random Read:

```
diskspd.exe -t8 -o8 -r -b4K -w0 -d60 -h -L -c1000G
```

4K 100% Random Write:

```
diskspd.exe -t8 -o8 -r -b4K -w100 -d60 -h -L -c1000G
```

8K 67% Sequential Read 33% Sequential Write:

```
diskspd.exe -t8 -o8 -si -b8K -w33 -d60 -h -L -c1000G
```

8K 67% Random Read 33% Random Write:

```
diskspd.exe -t8 -o8 -r -b8K -w33 -d60 -h -L -c1000G
```

64K 100% Sequential Read:

```
diskspd.exe -t8 -o8 -si -b64K -w0 -d60 -h -L -c1000G
```

64K 100% Sequential Write:

```
diskspd.exe -t8 -o8 -si -b64K -w100 -d60 -h -L -c1000G
```

256K 100% Sequential Read:

```
diskspd.exe -t8 -o8 -si -b256K -w0 -d60 -h -L -c1000G
```

256K 100% Sequential Write:

```
diskspd.exe -t8 -o8 -si -b256K -w100 -d60 -h -L -c1000G
```

The first step is to measure system performance using the fully random access pattern:

This is where the benefits of Multipath I/O in an active-active HA configuration become clear. With the ability to use 4x active 1GbE iSCSI paths, the StarWind device shows significantly better random-read performance and a slight increase in random-write performance.

Latency decreased accordingly:

Switching to the mixed workloads, we achieved great results as well:

Here we saw a dramatic performance increase in the mixed sequential workload, as well as a significant increase in the mixed random workload. Once again, this is thanks to the ability to use all available networks simultaneously.

Latency decreased significantly as well:

It is time to benchmark the throughput during large-block sequential access.

As expected from this type of setup, we achieved great results on 64K pattern:

Network saturation gets even better with the 256K block size:

We achieved 80% network saturation (398 MB/s out of 500 MB/s) on sequential reads, which is a really good result. Moreover, in sequential read workloads, saturation increases with larger block sizes and reaches 88.5% with the 512K workload.
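To put the saturation figures side by side, here is a small calculation using the best sequential numbers quoted in this article (229 MB/s standalone, 83 MB/s DSM HA Cluster, 398 MB/s StarWind) against the raw 1GbE line rate of the active iSCSI paths (2, 1, and 4 respectively). It ignores protocol overhead, and the three figures do not all come from the same block size, so treat the percentages as rough.

```python
LINK_MB_S = 125.0  # raw 1GbE line rate in MB/s, before Ethernet/TCP/iSCSI overhead

# (configuration, active 1GbE iSCSI paths, best sequential MB/s quoted in the article)
RESULTS = [
    ("Standalone DS916+ (MPIO Round Robin)", 2, 229),
    ("2x DS916+, DSM HA Cluster (active-passive)", 1, 83),
    ("2x DS916+, StarWind HA (active-active)", 4, 398),
]


def utilization(active_paths: int, mb_s: float) -> float:
    """Measured throughput as a fraction of the raw aggregate link capacity."""
    return mb_s / (active_paths * LINK_MB_S)


for name, paths, mb_s in RESULTS:
    print(f"{name}: {mb_s} of {paths * LINK_MB_S:.0f} MB/s "
          f"({utilization(paths, mb_s):.0%})")
```

The active-passive cluster is limited twice over: it has the lowest ceiling (one path) and also uses the smallest share of it.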

To sum up, we combined all of the above results into two charts comparing three configurations:

- Standalone DS916+ unit

- 2x DS916+ units in the “active-passive” DSM HA Cluster configuration

- 2x DS916+ units in the “active-active” StarWind HA configuration

Conclusion

The Synology DS916+ offers an impressive set of features, making it a great NAS solution for small businesses and enthusiasts. From the iSCSI SAN standpoint, a single spindle-based DS916+ unit configured in RAID 10 can provide enough redundancy and performance to run a couple of lightweight virtual machines.

However, after adding a second NAS device and configuring the native DSM HA Cluster, it became clear that such a configuration introduces a network bottleneck, because it cannot use more than one active 1GbE iSCSI path. Moreover, the DSM HA Cluster’s “active-passive” configuration means that only a single Synology unit is involved in data transfer operations. In other words, only the capabilities of a single RAID array are utilized. The performance impact is clearly visible in the sequential workload results. Such limitations can affect the performance of file transfer operations, live migrations, VM cloning, and backup jobs.

To eliminate the network bottleneck and increase performance, we decided to change the “HA storage provider”. Naturally, we chose VSAN from StarWind Free for this purpose, since it can easily turn our configuration into “active-active”. Synchronous data replication in “active-active” mode allows iSCSI traffic to use all physical networks on our NAS devices simultaneously.
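What synchronous active-active replication means for the initiator can be sketched in a few lines: any node accepts I/O, and a write is acknowledged only after every replica has stored it, so a later read through any path returns the same data. This is a conceptual toy with hypothetical class and node names, not StarWind’s implementation, which also has to handle failure detection, resynchronization, and caching.

```python
class Node:
    """Hypothetical in-memory storage node: maps block addresses to data."""

    def __init__(self, name: str):
        self.name = name
        self.blocks: dict[int, bytes] = {}

    def store(self, lba: int, data: bytes) -> None:
        self.blocks[lba] = data


class SyncMirror:
    """Conceptual active-active synchronous mirror across several nodes."""

    def __init__(self, nodes: list[Node]):
        self.nodes = nodes

    def write(self, via: int, lba: int, data: bytes) -> str:
        self.nodes[via].store(lba, data)      # node that received the I/O stores it
        for i, partner in enumerate(self.nodes):
            if i != via:
                partner.store(lba, data)      # synchronous copy to each partner
        return "ack"                          # acknowledged only after all copies exist

    def read(self, via: int, lba: int) -> bytes:
        return self.nodes[via].blocks[lba]    # any node can serve reads


mirror = SyncMirror([Node("node-1"), Node("node-2")])
mirror.write(via=0, lba=42, data=b"vm block")  # I/O arrives on node 1
print(mirror.read(via=1, lba=42))              # read back through node 2
```

Because both copies are always identical, the multipath layer can spread I/O across paths to both nodes, which is what allows all four 1GbE links to be active at once.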

After running the benchmarks for each configuration type, we saw that StarWind significantly outperforms both a single Synology DS916+ unit and 2x DS916+ units in the DSM HA Cluster configuration. The most significant performance boost was achieved in the 4K 100% Random Read, 8K mixed sequential, and 64K and 256K sequential patterns.

We are satisfied with the results and can recommend VSAN from StarWind Free + Windows Failover Cluster as a good alternative to the native Synology DSM HA Cluster setup.

It is also worth noting that we did not enable iSER on the StarWind targets during today’s benchmarks. We will definitely test our setup using VSAN from StarWind with RDMA-enabled networking in the near future.

In upcoming articles, we will also test the iSCSI failover duration of the Synology DSM HA Cluster and VSAN from StarWind Free, since it is another important aspect of HA clustering.