Introduction

VMware ESXi is an industry-leading hypervisor that is installed directly on a physical server. It is a reliable and secure solution with a small hardware footprint, and its architecture is easy to manage, patch, and update.

Mellanox recently released iSER 1.0.0.1, a stable iSER driver build for ESXi. iSER (iSCSI Extensions for RDMA) transfers data directly into and out of SCSI memory buffers without any intermediate data copies. Here, we study the driver's stability and performance to understand how the protocol benefits ESXi environments.

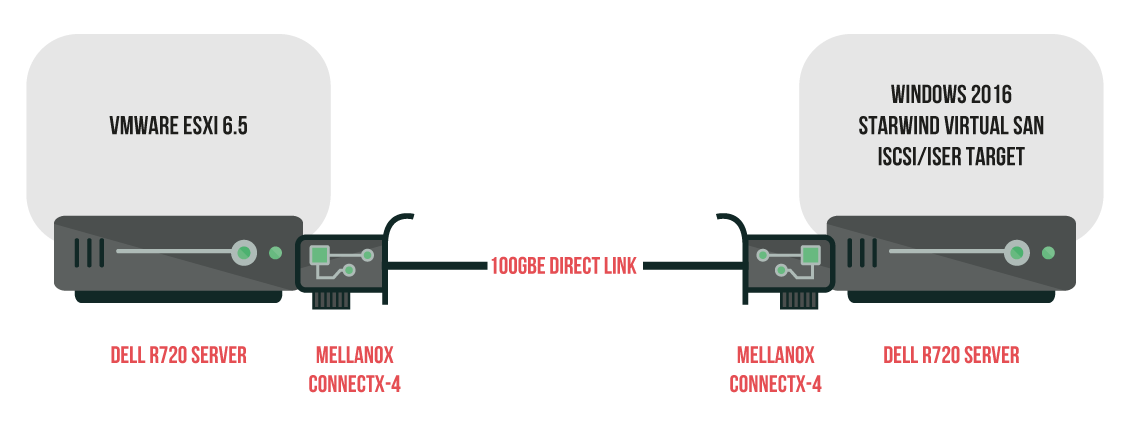

Test setup

The test setup is configured as follows:

- Two Dell R720 servers

- Two Intel Xeon E5-2660 processors

- 128 GB DDR3 RAM

- Mellanox ConnectX-4 100GbE NIC, direct link

- VMware ESXi 6.5.0, build 6765664

- Windows Server 2016

- StarWind Virtual SAN V8.0, build 11660

Testing steps

- Install drivers and configure the setup.

- Check whether iSER initiator works.

- Run tests on the RAM disk.

Installing the driver and setting up the iSER adapter for ESXi

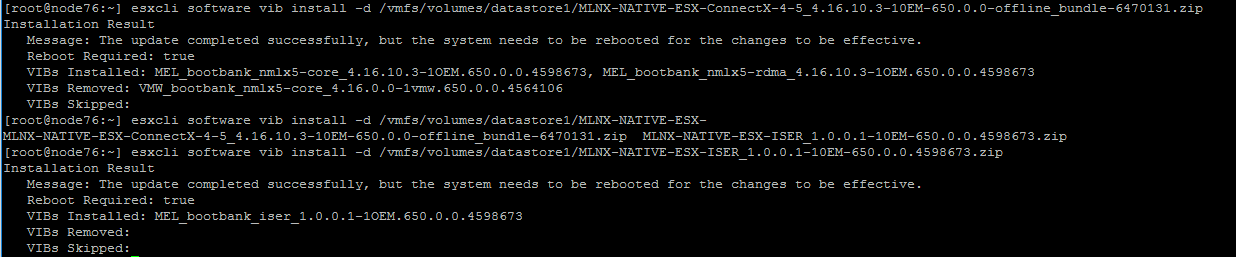

First, update the Mellanox network adapter drivers to ensure that the iSER module functions reliably. Download the most recent driver version for ESXi (4.16.10.3) or Windows (1.70.0.1) from the vendor's website.

Next, install the drivers and the module:

Once the installation is complete, reboot the host.

Configure the network on the Mellanox adapter ports. Then create two virtual switches and set their MTU to 9000.

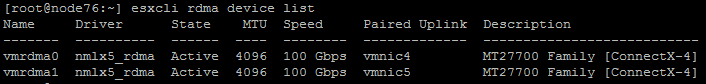

Check RDMA adapters:

To enable the iSER module, run the command below:

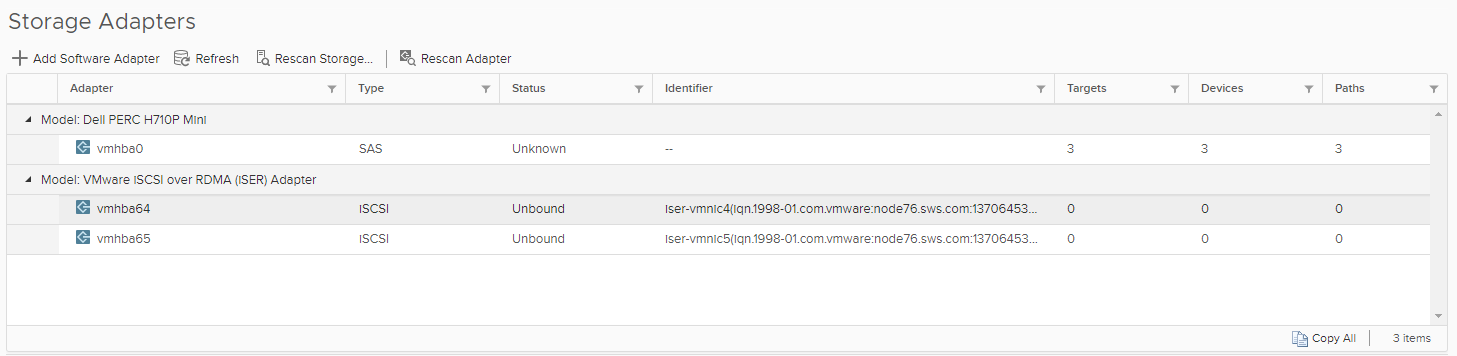

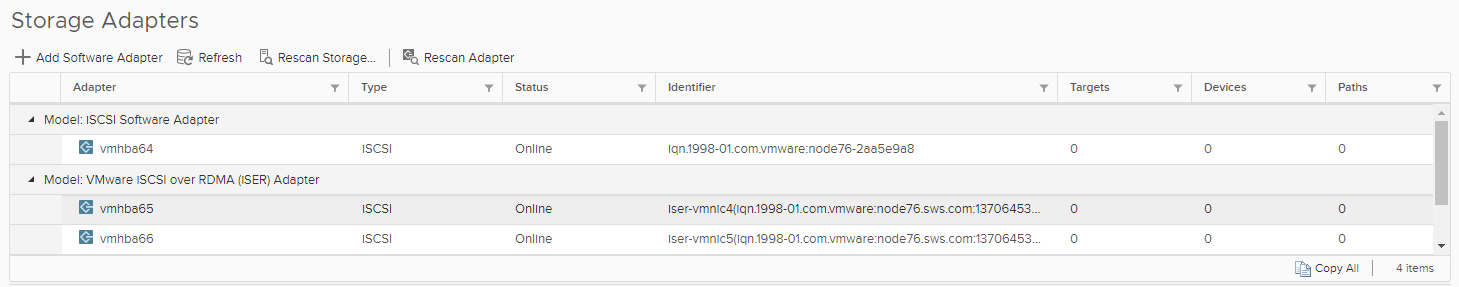

esxcli rdma iser add

Eventually, the iSER adapter is displayed in the interface, as shown in the screenshot below:

Note that if the ESXi hosts are rebooted at this point, the iSER adapters will disappear. To avoid this, add the commands below to /etc/rc.local.d/local.sh so that they run at system startup:

esxcli rdma iser add

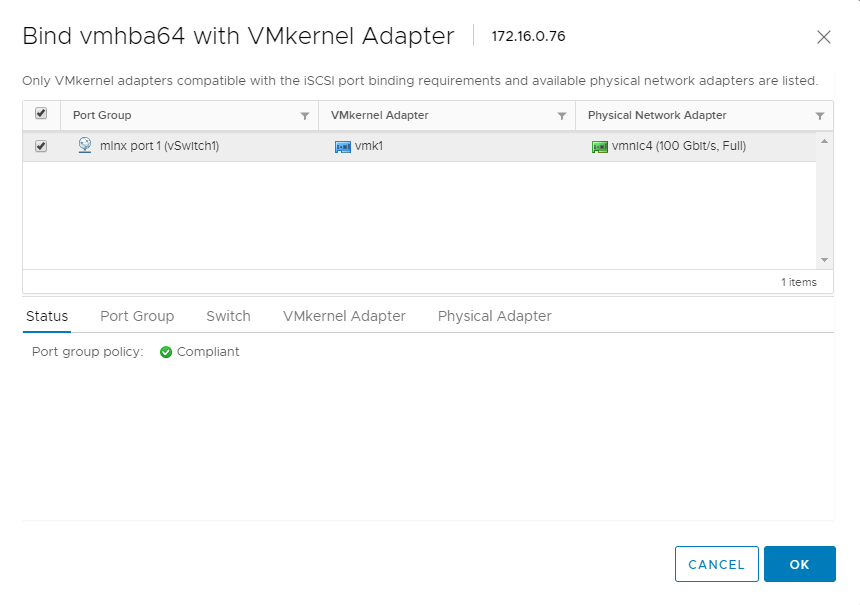

esxcli storage core adapter rescan --all

For proper functioning, the iSER adapter must be assigned to a network port:

Once the iSER adapter is assigned to a network port, it is ready to be used.
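The persistence step described above, adding the two esxcli commands to /etc/rc.local.d/local.sh, can be scripted. The snippet below is a minimal sketch, not a vendor-provided tool: `add_startup_command` is a hypothetical helper, and it assumes that the startup script ends with an `exit 0` line and that `grep` and `awk` are available in the host shell. The demo works on a scratch file rather than on the real script.

```shell
#!/bin/sh
# Hypothetical helper: insert a command line before the first "exit 0" in a
# startup script, unless that exact line is already present.
add_startup_command() {
    script="$1"
    command_line="$2"

    # Skip if the exact line already exists (keeps the edit idempotent).
    if grep -qxF -- "$command_line" "$script"; then
        return 0
    fi

    tmp="${script}.tmp"
    # Copy the script, emitting the new command just before "exit 0";
    # if no "exit 0" line is found, append the command at the end.
    awk -v cmd="$command_line" '
        $0 == "exit 0" && !done { print cmd; done = 1 }
        { print }
        END { if (!done) print cmd }
    ' "$script" > "$tmp" || return 1

    # Write back in place so the permissions of the original file are kept.
    cat "$tmp" > "$script" && rm -f "$tmp"
}

# Demo on a scratch copy (assumed sample content); on a host the target
# would be /etc/rc.local.d/local.sh.
demo="$(mktemp)"
printf '#!/bin/sh\n# local configuration\n\nexit 0\n' > "$demo"
add_startup_command "$demo" "esxcli rdma iser add"
add_startup_command "$demo" "esxcli storage core adapter rescan --all"
cat "$demo"
```

Running the helper twice with the same command leaves the file unchanged, so it is safe to re-run after a driver update.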

For testing, we used a 32-GB StarWind virtual disk located on a RAM disk. Using a RAM disk eliminates the impact of storage performance on the test results.

Here, we use StarWind RAM Disk, a free utility available at https://www.starwindsoftware.com/high-performance-ram-disk-emulator.

Configuring StarWind target for testing

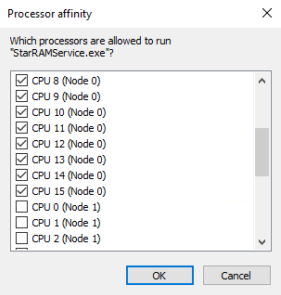

Install StarWind RAM Disk and launch it. To optimize performance, assign the StarRAMService.exe process to NUMA node 0, as shown below:

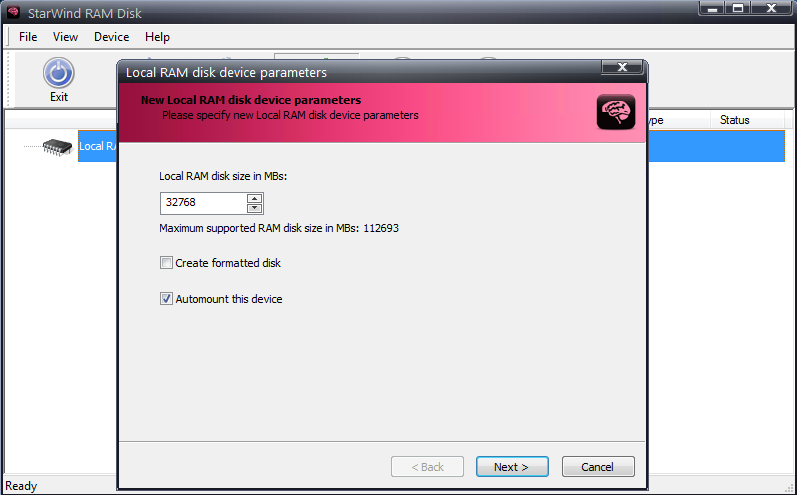

Create a 32-GB RAM disk.

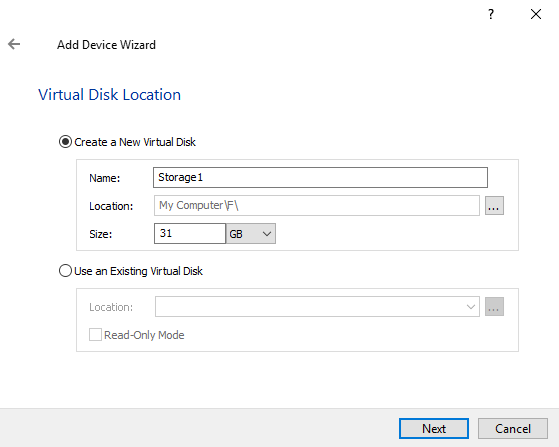

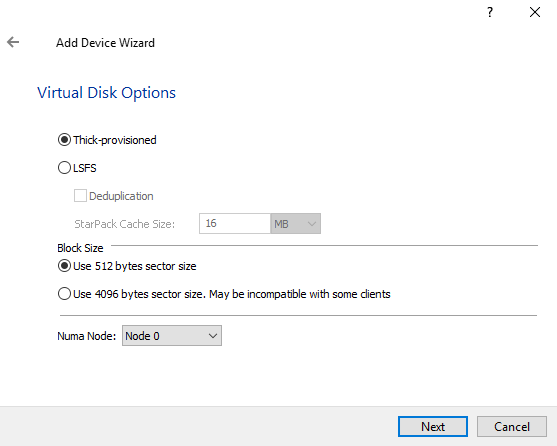

Next, install StarWind Virtual SAN and create a virtual disk on the newly created RAM disk.

Device parameters: thick-provisioned, 512-byte sector size, assigned to NUMA node 0.

In two-processor environments, it is recommended to distribute the virtual disk and iSCSI target workloads between the physical processors to get the best StarWind performance. For this purpose, open Starwind.cfg in the folder where StarWind is installed and add node="1" to the <targets> section.

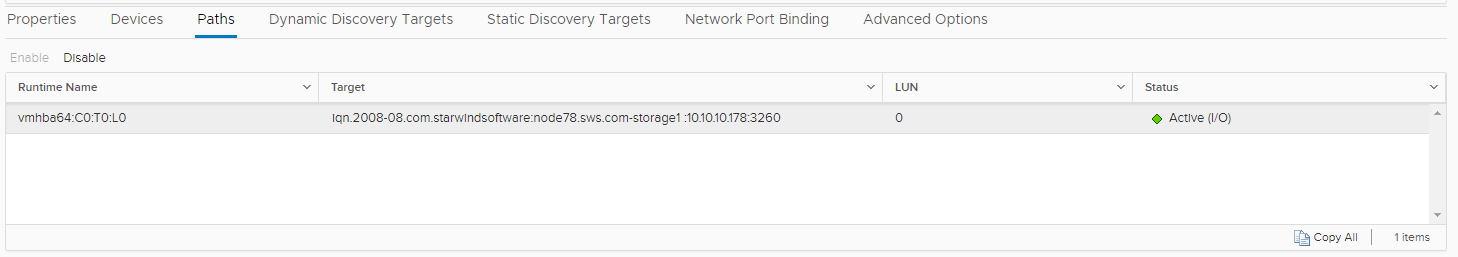

Next, add the target’s IP address to Dynamic Discovery Targets and press Rescan.

The connected targets are listed as shown below:

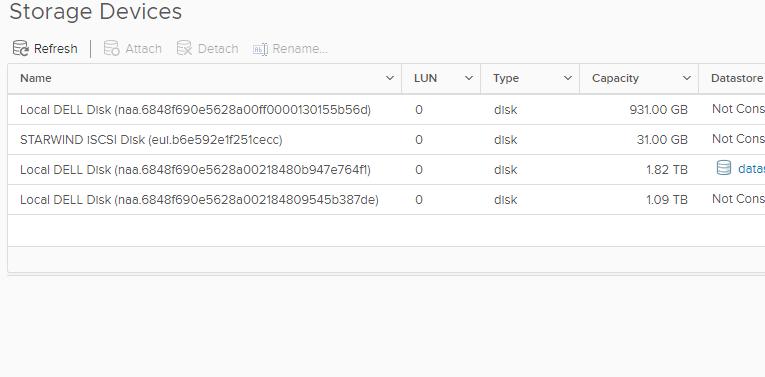

The StarWind disk is displayed on the Storage Devices tab:

Testing the setup

Performance is tested in two ways: with a VMDK on a VMFS datastore attached to a virtual machine, and with the entire disk connected to the VM as an RDM.

All tests are run with fio, using the following load patterns:

- Random reads and writes with 4-KB blocks (to assess the maximum number of IOPS);

- Sequential reads and writes with 64-KB blocks (to measure the maximum throughput).

We used eight fio threads, each with a queue depth of 16.
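For reference, the four load patterns above can be expressed as fio command lines. This is only a sketch of how such runs might look, not the exact job definitions used in the study: the target path `/dev/sdX`, the 60-second runtime, and the `libaio` I/O engine (which assumes a Linux guest) are placeholders chosen for illustration. The script prints the commands instead of running them, because the write tests overwrite the target device.

```shell
#!/bin/sh
# Assumptions (not from the article): target device, runtime, and I/O engine.
TARGET="${TARGET:-/dev/sdX}"      # placeholder test disk inside the VM
IOENGINE="${IOENGINE:-libaio}"    # asynchronous engine for a Linux guest
RUNTIME="${RUNTIME:-60}"          # seconds per run

# Print one fio command line: eight jobs, queue depth 16, direct I/O.
fio_cmd() {
    name="$1"; rw="$2"; bs="$3"
    echo "fio --name=$name --filename=$TARGET --ioengine=$IOENGINE --direct=1" \
         "--rw=$rw --bs=$bs --numjobs=8 --iodepth=16" \
         "--time_based --runtime=$RUNTIME --group_reporting"
}

fio_cmd rand-read-4k  randread  4k
fio_cmd rand-write-4k randwrite 4k
fio_cmd seq-read-64k  read      64k
fio_cmd seq-write-64k write     64k
```

An asynchronous engine is specified because fio's queue depth setting has little effect with synchronous engines.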

First, we measured the performance of the RAM disk and of the RDMA connection between the target and the initiator.

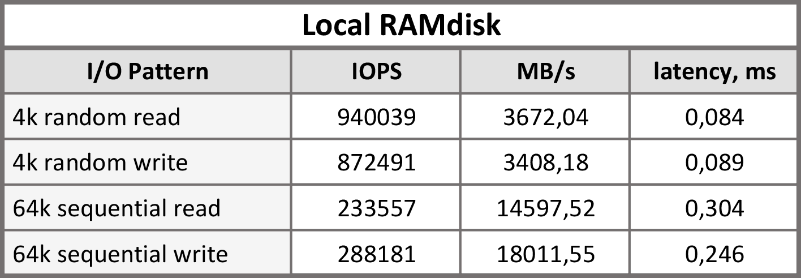

The table below highlights the RAM disk test results:

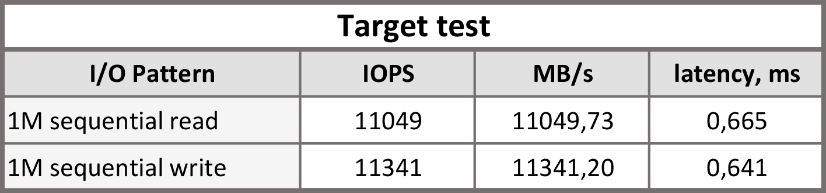

We also assessed the peak performance of the RAM disk when it fits entirely in the processor's cache. For this test, we created a 2-MB disk and tested reads and writes with 1-MB blocks. The data transfer rate reached 23 GB/s. Unfortunately, we did not find any ESXi-compatible utility with rping-like functionality to test the RDMA channel directly. For this reason, we used the newly created 2-MB disk to test the channel: we connected it to the virtual machine via iSER as an RDM. The table below shows the test results.

The maximum theoretical throughput of a 100-Gb/s interface is 12,500 MB/s. Working together, the target and the initiator can deliver throughput close to the interface line rate.
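The line-rate figure follows from simple unit conversion: 100 Gb/s divided by 8 bits per byte. The sketch below reproduces that arithmetic and also estimates how many I/O operations per second would be needed to fill the link at a given block size. The function names are made up for illustration, decimal units are used throughout, protocol overhead is ignored, and 64-bit shell arithmetic is assumed.

```shell
#!/bin/sh
# Line rate in MB/s for a link speed given in Gb/s
# (decimal units, protocol overhead ignored).
line_rate_mb() {
    echo $(( $1 * 1000 / 8 ))
}

# I/O operations per second needed to fill the link at a given block size
# in bytes (integer division, rounded down).
iops_to_fill_link() {
    echo $(( $1 * 1000000000 / 8 / $2 ))
}

line_rate_mb 100              # MB/s for a 100-Gb/s link
iops_to_fill_link 100 4096    # IOPS needed with 4-KB blocks
iops_to_fill_link 100 65536   # IOPS needed with 64-KB blocks
```

With 4-KB blocks, filling the link would take roughly three million IOPS, while 64-KB blocks need about sixteen times fewer, which is why throughput is measured with the larger block size.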

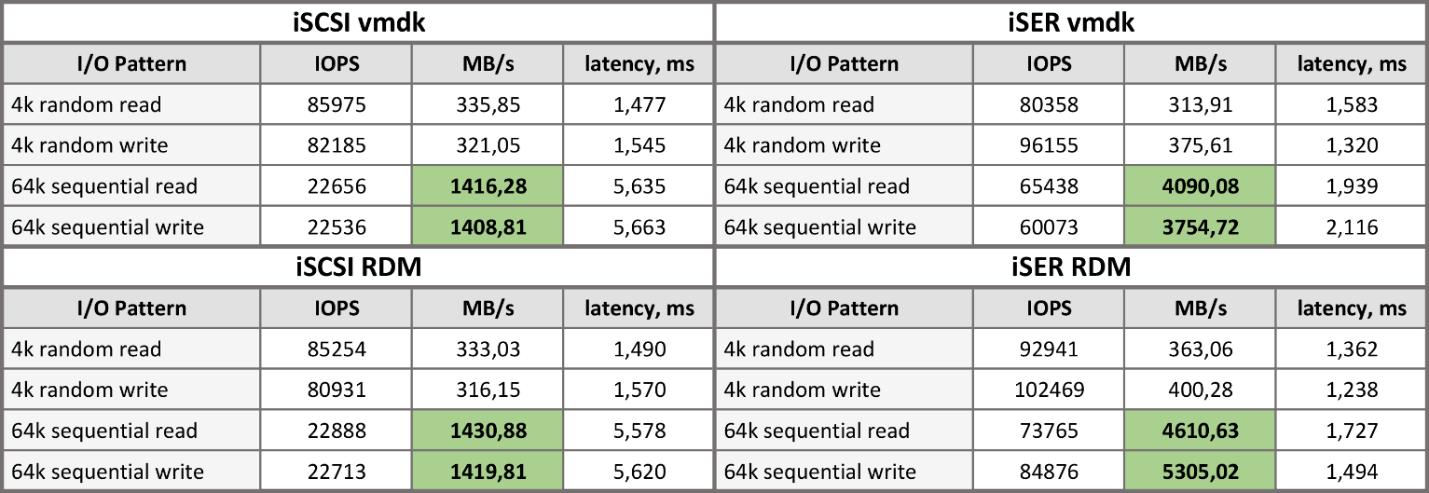

The table below compares the metrics obtained with the Software iSCSI Adapter and with RDMA iSER:

According to the table, there is no significant difference in performance with small blocks. As the block size increases, the gap grows to roughly threefold.

Below, we discuss the possible reasons for this phenomenon.

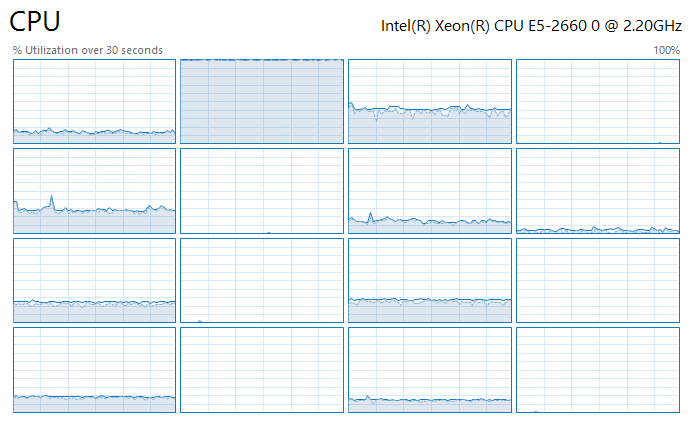

First, consider the processor load. The plots below show the VM's processor load during testing with 4-KB blocks:

The processor utilization pattern during reads and writes looks similar in all cases. Eight processor cores are loaded equally by fio. One core is fully utilized, presumably by the I/O subsystem that works with the virtual SCSI adapter.

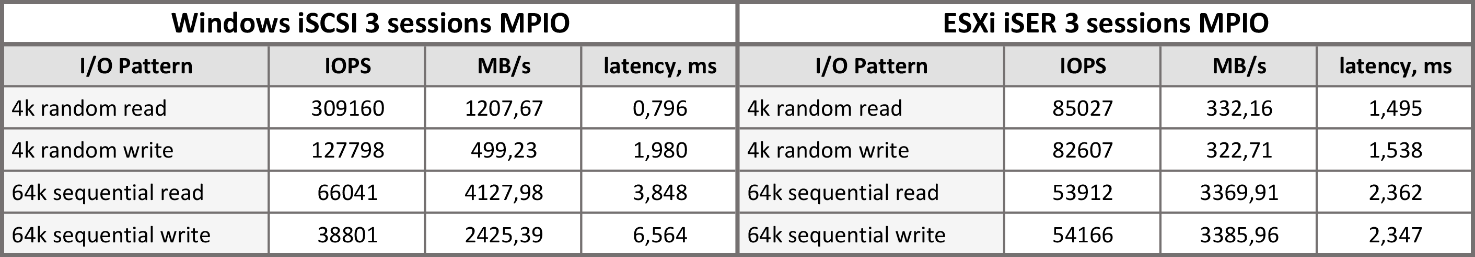

Neither the iSCSI nor the iSER initiator for ESXi can run sessions in parallel the way the Windows initiator does. As a result, Multipath I/O cannot be used to increase performance. The table below shows the results of testing the Windows iSCSI and ESXi iSER initiators with MPIO (three sessions). On ESXi, all sessions run on a single processor, while on Windows each session is assigned to a separate processor.

According to the table above, iSCSI MPIO performs better with small blocks, which can be explained by the distribution of sessions among processor cores.

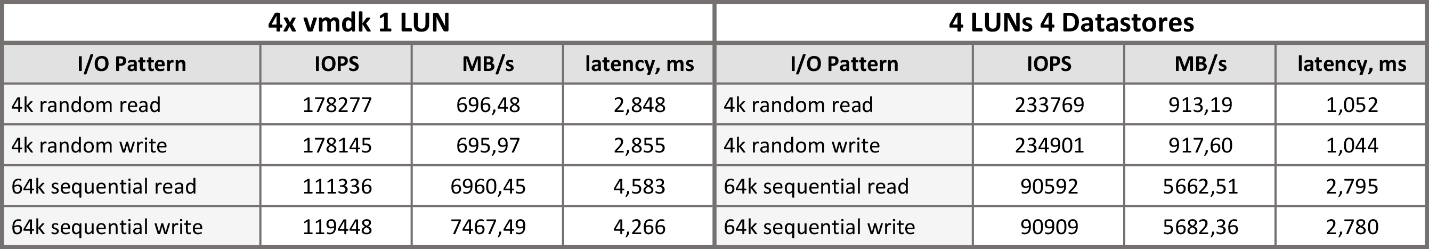

Deploying several VMs on a single LUN in an ESXi environment has its own specifics. In particular, ESXi uses an adaptive algorithm to manage VM queue depth. The algorithm levels the system load by reducing the queue depth of all VMs on the LUN. As a result, the combined performance of four VMs turns out lower than that of a single VM.

The test results below support this claim:

The table on the left shows the performance of four VMDK disks located on a single datastore on one LUN connected via iSER. The table on the right shows the performance of four VMDK disks located on different datastores on different LUNs and targets.

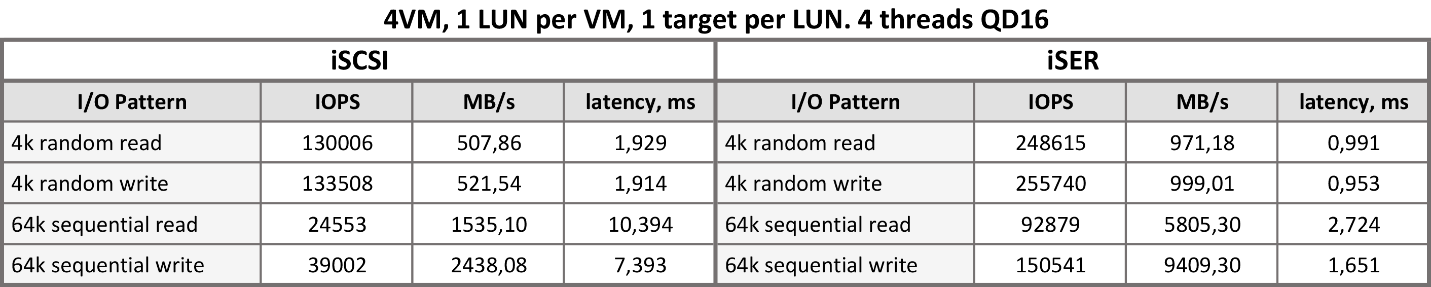

The test patterns are the same. The table below shows that dedicated devices and targets exhibit low latency. In addition, performance increases when the disk is connected as an RDM.

The table below compares iSCSI and iSER:

Conclusion

In general, iSER provides higher performance and throughput than iSCSI. The difference is minor with small blocks but grows to roughly threefold as the block size increases. The processor utilization pattern during reads and writes looks similar in all cases. It should also be noted that Multipath I/O does not improve ESXi performance because neither the iSCSI nor the iSER initiator for ESXi can run sessions in parallel.