This topic aims to explain the Quorum configuration in a Failover Clustering. As part of my job, I work with Hyper-V Clusters where the Quorum is not well configured and so my customers have not the expected behavior when an outage occurs. I work especially on Hyper-V clusters but the following topic applies to most of Failover Cluster configuration.

What’s a Failover Cluster Quorum

A Failover Cluster Quorum configuration specifies the number of failures that a cluster can support in order to keep working. Once the threshold limit is reached, the cluster stops working. The most common failures in a cluster are nodes that stop working or nodes that can’t communicate anymore.

Imagine that quorum doesn’t exist, and you have two-nodes cluster. Now there is a network problem, and the two nodes can’t communicate. If there is no Quorum, what prevents both nodes to operate independently and take disks ownership on each side? This situation is called Split-Brain. Quorum exists to avoid Split-Brain and prevents corruption on disks.

The Quorum is based on a voting algorithm. Each node in the cluster has a vote. The cluster keeps working while more than half of the voters are online. This is the quorum (or the majority of votes). When there are too many of failures and not enough online voters to constitute a quorum, the cluster stop working.

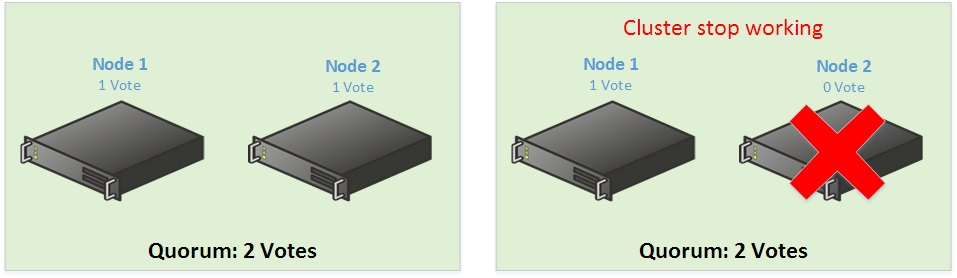

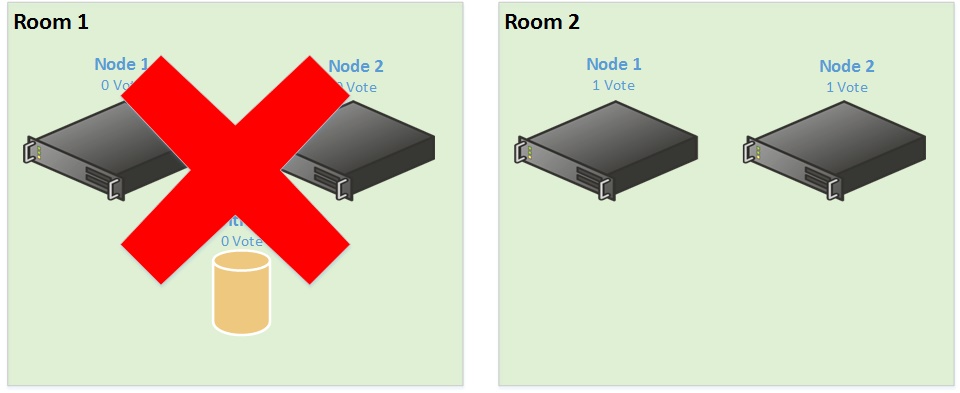

Below is a two nodes cluster configuration:

The majority of vote is 2 votes. So a two nodes cluster as above is not really resilient because if you lose a node, the cluster is down.

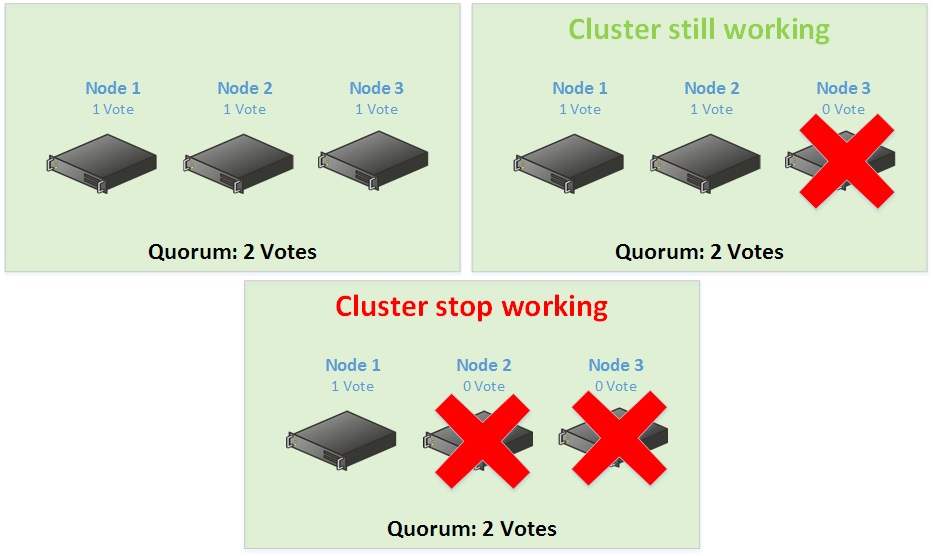

Below a three-node cluster configuration:

Now you add a node in your cluster. So, you are in a three-node cluster. The majority of vote is still 2 votes. But because there are three nodes, you can lose a node and the cluster keep working.

Below a four-node cluster configuration:

Despite its four nodes, this cluster can support one node failure before losing the quorum. The majority of vote is 3 votes so you can lose only one node.

On a five-node cluster the majority of votes is still 3 votes so you can lose two nodes before the cluster stop working and so on. As you can see, the majority of nodes must remain online in order to the cluster keeps working and this is why it is recommended to have an odd majority of votes. But sometimes we want only a two-node cluster for some applications that don’t require more nodes (as Virtual Machine Manager, SQL AlwaysOn and so on). In this case we add a disk witness, a file witness or in Windows Server 2016, a cloud Witness.

Failover Cluster Quorum Witness

As said before, it is recommended to have an odd majority of votes. But sometimes we don’t want an odd number of nodes. In this case, a disk witness, a file witness or a cloud witness can be added to the cluster. This witness too has a vote. So when there are an even number of nodes, the witness enables to have an odd majority of vote. Below, the requirements and recommendations of each Witness type (except Cloud Witness):

| Witness type | Description | Requirements and recommendations |

| Disk witness |

|

|

| File share witness |

|

The following are additional considerations for a file server that hosts the file share witness:

|

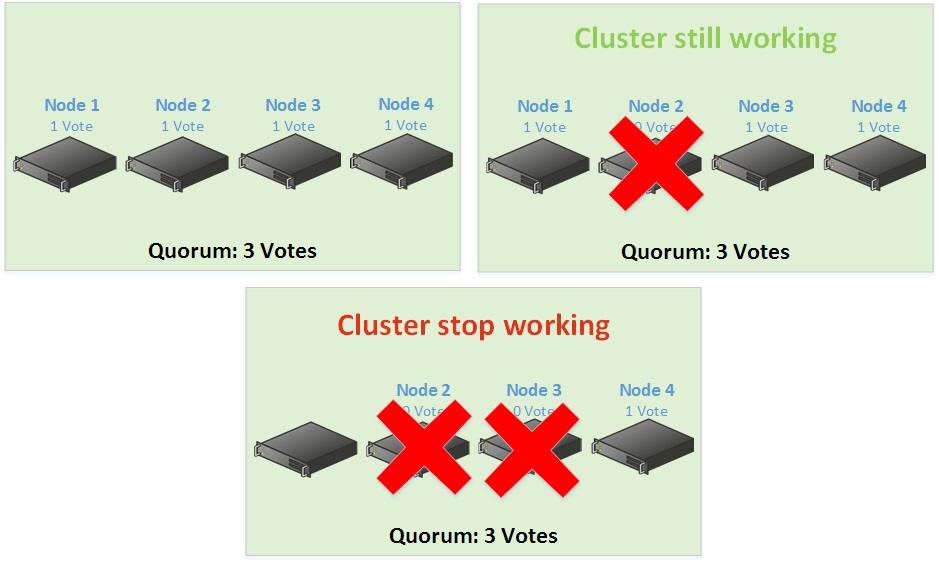

So below you can find again a two-nodes Cluster with a witness:

Now there is a witness, you can lose a node and keep the quorum. Even if a node is down, the cluster still working. So when you have an even number of nodes, the quorum witness is required. But to keep an odd majority of votes, when you have an odd number of nodes, you should not implement a quorum witness.

Quorum configuration

Below you can find the four possible cluster configuration (taken from this link):

- Node Majority (recommended for clusters with an odd number of nodes)

Can sustain failures of half the nodes (rounding up) minus one. For example, a seven node cluster can sustain three node failures.

- Node and Disk Majority (recommended for clusters with an even number of nodes).

Can sustain failures of half the nodes (rounding up) if the disk witness remains online. For example, a six node cluster in which the disk witness is online could sustain three node failures.

Can sustain failures of half the nodes (rounding up) minus one if the disk witness goes offline or fails. For example, a six node cluster with a failed disk witness could sustain two (3-1=2) node failures.

- Node and File Share Majority (for clusters with special configurations)

Works in a similar way to Node and Disk Majority, but instead of a disk witness, this cluster uses a file share witness.

Note that if you use Node and File Share Majority, at least one of the available cluster nodes must contain a current copy of the cluster configuration before you can start the cluster. Otherwise, you must force the starting of the cluster through a particular node. For more information, see “Additional considerations” in Start or Stop the Cluster Service on a Cluster Node.

- No Majority: Disk Only (not recommended)

Can sustain failures of all nodes except one (if the disk is online). However, this configuration is not recommended because the disk might be a single point of failure.

Stretch Cluster Scenario

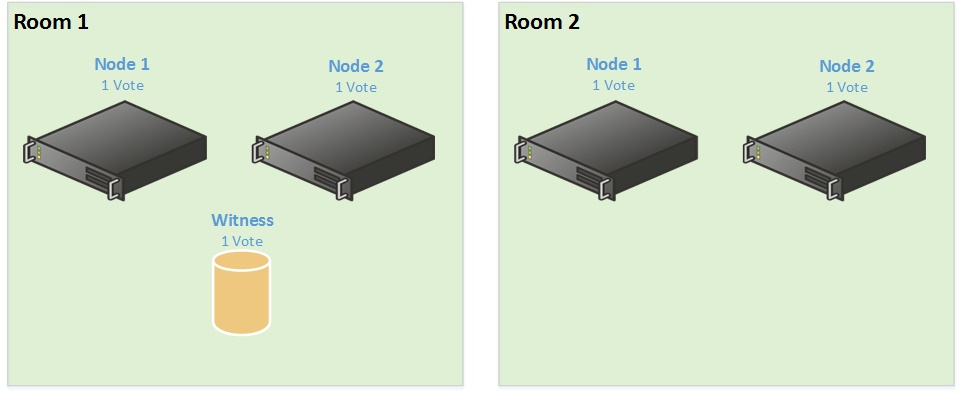

Unfortunately (I don’t like stretch cluster in Hyper-V scenario), some customers have stretch cluster between two datacenters. And the most common mistake I see to save money is the below scenario:

So the customer tells me: Ok I’ve followed the recommendation because I have four nodes in my cluster but I have added a witness to obtain an odd majority of votes. So let’s start the production. The cluster is running for a while and then one day the room 1 is underwater. So you lose Room 1:

In this scenario you should have also stretch storage and so if you have implemented a disk witness it should move to room 2. But in the above case you have lost the majority of votes and so the cluster stop working (sometimes with some luck, the cluster is still working because the disk witness has time to failover but it is lucky). So when you implement a stretch cluster, I recommend the below scenario:

In this scenario, even if you lose a room, the cluster still works. Yes I know, three rooms are expensive but I have not recommended you to make a stretch cluster (Hyper-V case). Fortunately, in Windows Server 2016, the quorum witness can be hosted in Microsoft Azure (Cloud Witness).

Dynamic Quorum (from Windows Server 2012 feature)

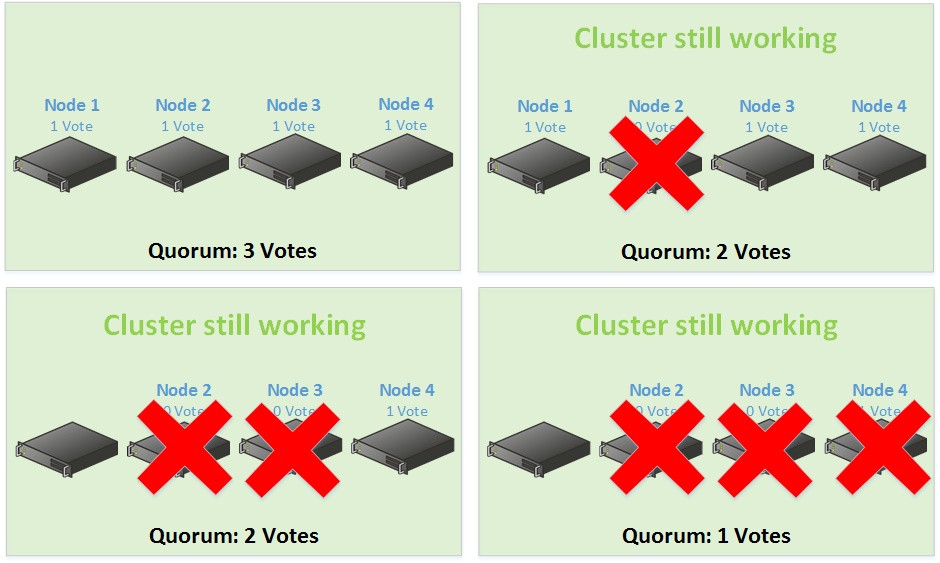

Dynamic Quorum enables to assign vote to node dynamically to avoid losing the majority of votes and so the cluster can run with one node (known as last-man standing). Let’s take the above example with four-node cluster without quorum witness. I said that the Quorum is 3 votes so without dynamic quorum, if you lose two nodes, the cluster is down.

Now I enable the Dynamic Quorum. The majority of votes is computed automatically related to running nodes. Let’s take again the Four-Node example:

So, why implementing a witness, especially for stretched cluster? Because Dynamic Quorum works great when the failure is sequential and not simultaneous. So for the stretched cluster scenario, if you lose a room, the failure is simultaneous and the dynamic quorum has not the time to recalculate the majority of votes. Moreover I have seen strange behavior with dynamic quorum especially with two-node cluster. This is why in Windows Server 2012, I always disabled the dynamic quorum when I didn’t use a quorum witness.

The dynamic quorum has been enhanced in Windows Server 2012 R2. Now there is Dynamic Witness implemented. This feature calculates if the Quorum Witness has a vote. There are two cases:

- If there is an even number of nodes in the cluster with the dynamic quorum enabled, the Dynamic Witness is enabled on the Quorum Witness and so the witness has vote.

- If there is an odd number of nodes in the cluster with the dynamic quorum enabled, the Dynamic Witness is enabled on the Quorum Witness and so the witness has not vote.

So since Windows Server 2012 R2, Microsoft recommends to always implement a witness in a cluster and let the dynamic quorum to decide for you.

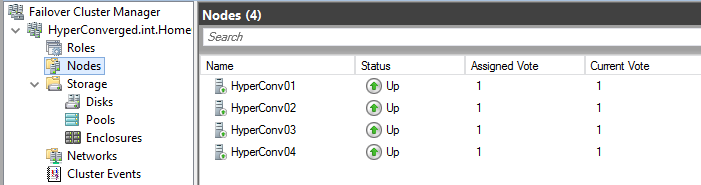

The Dynamic Quorum is enabled by default since Windows Server 2012. In the below example, there is a four-node cluster on Windows Server 2016. But it is the same behavior.

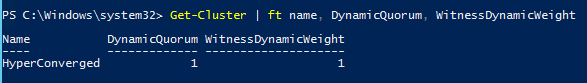

I verify if the dynamic quorum is enabled and also the dynamic witness:

The Dynamic Quorum and the Dynamic Witness are well enabled. Because I have four nodes, the Witness has a vote and this is why the Dynamic Witness is enabled. If you want to disable the Dynamic Quorum you can run this command:

(Get-Cluster).DynamicQuorum = 0

Cloud Quorum Witness (from Windows Server 2016 feature)

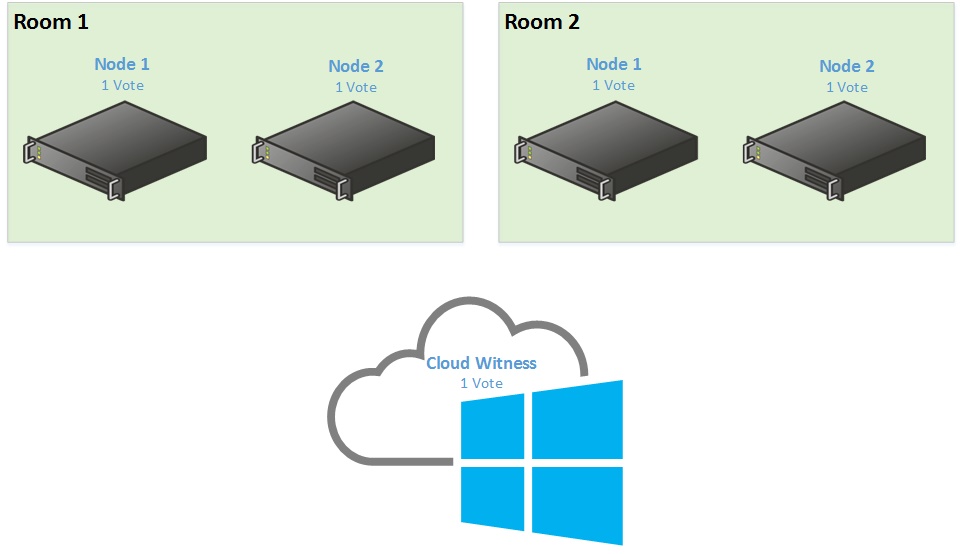

By implementing a Cloud Quorum Witness, you avoid to spending money on the third room in case of stretch cluster. Below is the scenario:

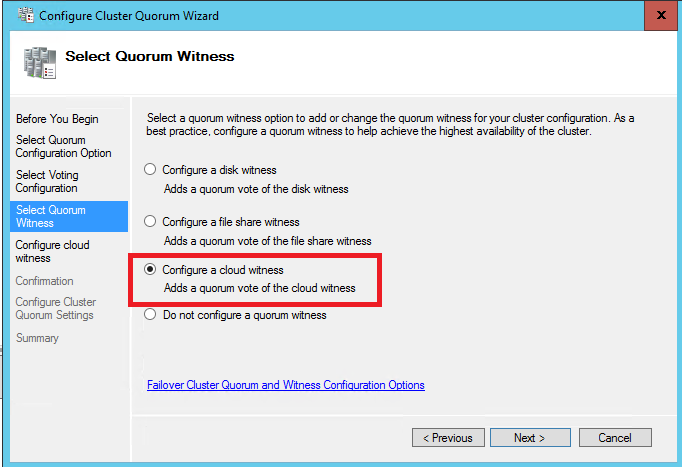

The Cloud Witness, hosted in Microsoft Azure, has also one vote. In this way you have also an odd majority of votes. For that you need an existing storage account in Microsoft Azure. You also need an access key. Now you have just to configure the quorum as a standard witness. Select Configure a Cloud Witness when it is asked.

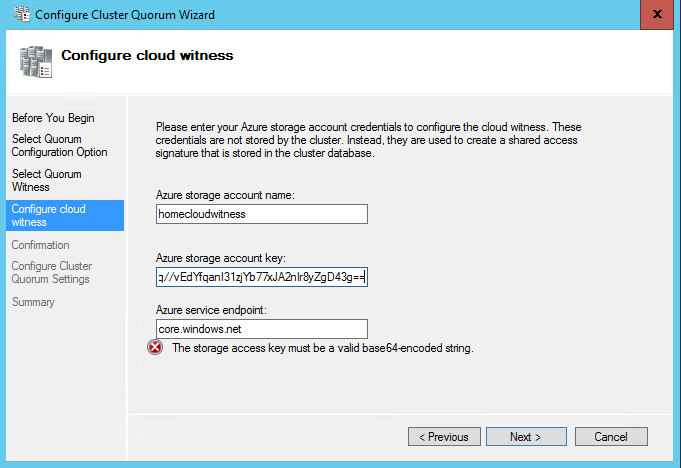

Then specify the Azure Storage Account and a storage key.

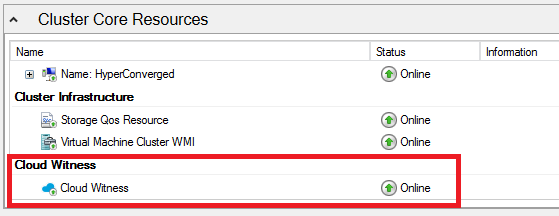

At the end of the configuration, the Cloud Witness should be online.

Conclusion

In conclusion, I recommend this when you are configuring a Quorum in a failover cluster:

- Prior to Windows Server 2012 R2, always keep an odd majority of vote

– In case of an even number of nodes, implement a witness

– In case of an odd number of nodes, do not implement a witness

- Since Windows Server 2012 R2, Always implement a quorum witness

– Dynamic Quorum manage the assigned vote to the nodes

– Dynamic Witness manage the assigned vote to the Quorum Witness

- In case of stretch cluster, implement the witness in a third room or use Microsoft Azure.