Introduction

As the title of this article hints, I’m going to answer those questions. Colleagues who are new to virtualization often wonder which virtual disk format they should choose. I have touched on this topic here and there before, so it’s time to cover it properly.

What do we call a virtual disk?

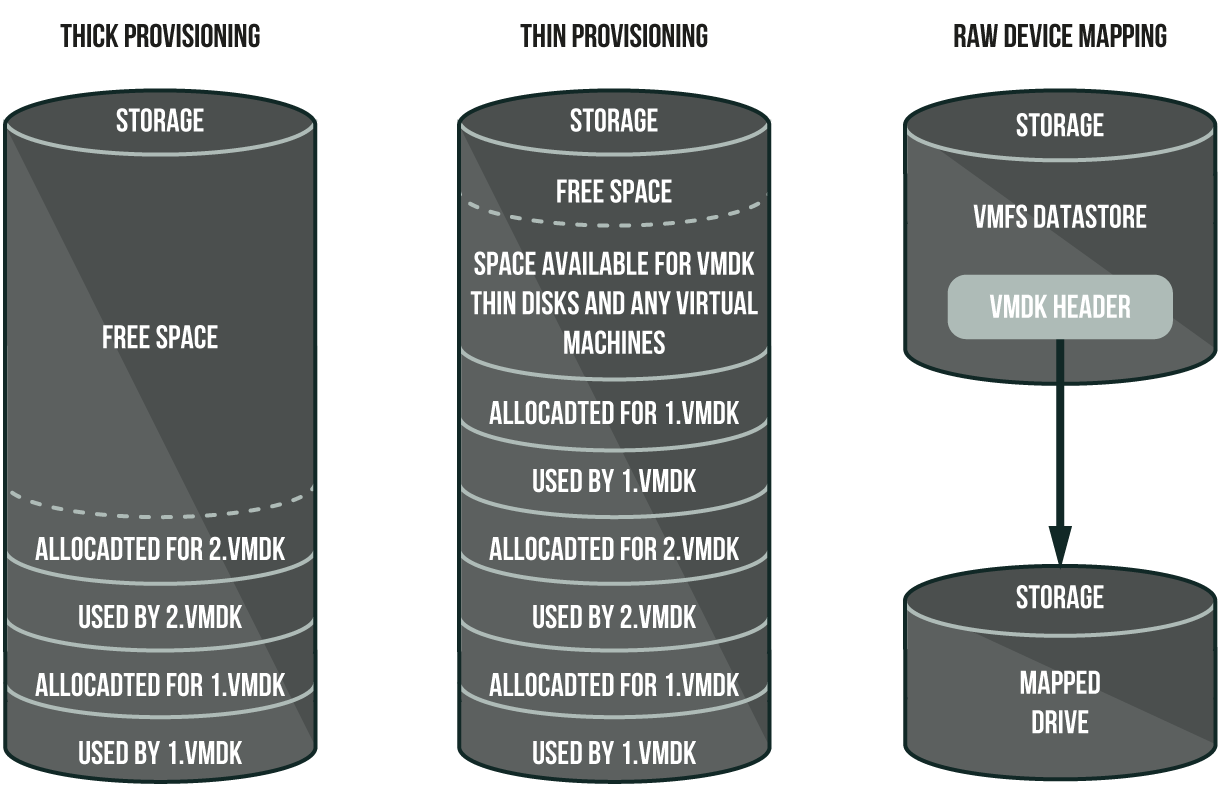

A virtual disk for a VMware VM is essentially the same thing as in other hypervisors: a file on a datastore. The OS inside the VM accesses the virtual disk through specific device drivers. In other cases, such as RDM, the virtual disk is a header file for a physical disk, although it works in almost exactly the same way.

Physical structure of different virtual machine disk formats from VMware

Let’s quickly recall the key differences.

Thick Provision Lazy Zeroed creates a new virtual disk in the default thick format. All the space designated for the disk is reserved in full at creation, but there is a caveat: old data left on the underlying storage is not cleared at that point. A block is zeroed out only when the VM writes to it for the first time, and only within the region needed for that write. When you overwrite data that has already been written (that is, use the allocated space a second time), no further zeroing takes place. Performance-wise, this format is not the best choice for a VM with high-performance requirements, but, on the other hand, you can create and attach such a disk in mere seconds.

Thick Provision Eager Zeroed is similar, but with the opposite trade-off between creation speed and performance. It also creates a new disk in the default thick format with all the designated space reserved in full, but, unlike Lazy Zeroed, it zeroes out the allocated space in advance. As a result, when the VM writes data for the very first time, there is no delay caused by zeroing. Creating and attaching such a disk takes much longer than with Lazy Zeroed, and the time depends heavily on the size of the disk and the speed of the storage, but in exchange the disk delivers higher performance.
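The difference between the two thick formats comes down to when the zeroing happens. A toy model in Python can make this concrete. It assumes a disk made of numbered blocks; the class name, block granularity, and counters are illustrative and are not part of any VMware API.

```python
from dataclasses import dataclass, field


@dataclass
class ThickDisk:
    """Toy model of a thick-provisioned disk (all names are hypothetical)."""
    blocks: int                      # space is reserved in full in both formats
    eager: bool                      # True = Eager Zeroed, False = Lazy Zeroed
    zeroed: set = field(default_factory=set)
    zero_ops_at_create: int = 0      # zeroing work paid when the disk is created
    zero_ops_at_write: int = 0       # zeroing work paid during first writes

    def __post_init__(self) -> None:
        if self.eager:
            # Eager Zeroed: every block is zeroed up front, so creation is slow.
            self.zeroed = set(range(self.blocks))
            self.zero_ops_at_create = self.blocks

    def write(self, block: int) -> bool:
        """Write one block; return True if it had to be zeroed first."""
        if not 0 <= block < self.blocks:
            raise IndexError("block outside the provisioned disk")
        if block in self.zeroed:
            return False             # rewrite, or eager disk: no extra work
        self.zeroed.add(block)       # Lazy Zeroed: zero on first touch
        self.zero_ops_at_write += 1
        return True


lazy = ThickDisk(blocks=8, eager=False)
eager = ThickDisk(blocks=8, eager=True)
first_lazy = lazy.write(3)     # first write pays for zeroing
second_lazy = lazy.write(3)    # rewrite does not
first_eager = eager.write(3)   # eager disk never zeroes at write time
```

In this sketch the Lazy Zeroed disk pays the zeroing cost once per block at write time, while the Eager Zeroed disk pays it for all blocks at creation.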

With Thin Provision, a newly created virtual disk reserves no space on the datastore: its initial size is 0 B. It then grows on demand, up to the provisioned size. Allocating more space, just as with Lazy Zeroed, requires zeroing out the space before new data is written to it, so writing new data for the first time takes longer than rewriting it. As you might expect, this format isn’t a good fit for VMs with high-performance requirements either. Its advantage is that it does not reserve the full space up front. That advantage is double-edged, though: if you provision carelessly, the free space on the datastore can “disappear”, which leads to resource contention between VMs. Don’t forget that the rest of the datastore space, including the space that thin disks have yet to grow into, is available to all VMs.
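The overcommitment risk can be illustrated with a small Python sketch. It assumes a datastore that tracks only gigabytes; the `Datastore` and `ThinDisk` classes and the sizes used are hypothetical and exist only for this example.

```python
class Datastore:
    """Toy datastore that only tracks capacity and the disks placed on it."""

    def __init__(self, capacity_gb: int) -> None:
        self.capacity_gb = capacity_gb
        self.disks = []

    @property
    def allocated_gb(self) -> int:
        # Space actually consumed by data written so far.
        return sum(disk.allocated_gb for disk in self.disks)

    @property
    def provisioned_gb(self) -> int:
        # Space promised to the VMs, whether or not it is used yet.
        return sum(disk.provisioned_gb for disk in self.disks)

    def overcommit_ratio(self) -> float:
        return self.provisioned_gb / self.capacity_gb


class ThinDisk:
    """Toy thin disk: starts at 0 GB and grows only when new data is written."""

    def __init__(self, datastore: Datastore, provisioned_gb: int) -> None:
        self.datastore = datastore
        self.provisioned_gb = provisioned_gb
        self.allocated_gb = 0
        datastore.disks.append(self)

    def write_new(self, gb: int) -> None:
        if self.allocated_gb + gb > self.provisioned_gb:
            raise ValueError("write exceeds the provisioned disk size")
        if self.datastore.allocated_gb + gb > self.datastore.capacity_gb:
            raise RuntimeError("datastore is out of space")
        self.allocated_gb += gb


store = Datastore(capacity_gb=100)
disk_a = ThinDisk(store, provisioned_gb=80)
disk_b = ThinDisk(store, provisioned_gb=80)
ratio = store.overcommit_ratio()   # more space promised than the datastore has
disk_a.write_new(70)               # fine: 70 GB of 100 GB used
try:
    disk_b.write_new(40)           # within disk_b's own limit, but no room left
    ran_out = False
except RuntimeError:
    ran_out = True
```

Both disks stay within their own provisioned size, yet the second write fails because the datastore itself has run out of space.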

Raw device mapping (RDM) is also supported by the VMFS file system. RDM lets you connect a storage LUN, for example on a Fibre Channel or iSCSI storage device, directly to a VM. You need such a direct connection if the storage area network (SAN) uses snapshot software as a backup solution for VMs. RDM disks also come in handy when using Microsoft Clustering Services (MSCS), for both “virtual to virtual” and “physical to virtual” clusters. An RDM is accessed through a symbolic link in a VMFS volume that references the raw device. The mapping files are referenced in the VM configuration like virtual disk header files and appear as files on the VMFS volume in the working directory of the VM. When an RDM volume is opened for writing, the VMFS file system resolves the RDM file to the physical device and handles file locking and access control. After that, all read and write operations go directly to the RDM volume, bypassing the mapping file.

The RDM files contain metadata used to manage and redirect disk accesses to the physical device. RDM gives you the advantages of direct access to disks while keeping some advantages of a virtual disk in the VMFS file system.

Before any input/output takes place, the VM opens the RDM volume through the header file. The VMFS file system then resolves the sector addresses of the physical device, after which the VM reads from and writes to the physical device.

RDM allows you to:

- migrate a VM that uses RDM volumes with VMotion;

- add new RDM volumes with VI Client;

- use file system features, such as distributed file locking, permissions, and naming.

There are two compatibility modes for RDM:

- Virtual compatibility mode makes the mapped device behave like a virtual disk, including the ability to create snapshots. In this scenario, you have to choose the VMFS volume where the mapping file will be stored and the volume where the VM configuration will be.

- Physical compatibility mode, in turn, allows direct access to the SCSI device with minimal virtualization. A mapping file is still used to locate the device, but I/O does not pass through it.

VMotion, DRS, and HA are supported in both compatibility modes.

Analysis of speed and delays on the first and subsequent data writes

The main sticking point when choosing a format is speed. As I have already mentioned, two of the four options perform poorly during the first writes to a new location. To make this perfectly clear, I ran some tests on a single SSD using two patterns (4k random write and 64k sequential write). I used the FIO 3.13 utility, plus the DD utility to perform the first write so that I could then test data rewrites. I won’t spend much time describing the testing method, since it was covered in previous materials, so let’s just go over the key points:

- I chose two patterns for testing so I could make sure that low speed isn’t caused by the pattern itself;

- Devices were recreated before each new test to avoid the influence of the previous result;

- After the first-write test, the disk was overwritten with random data using the DD utility. For Thin Provision, this expands the disk to its full size; for Thick Provision Lazy Zeroed, it rules out any zeroing during the subsequent write, so neither effect can skew the rewrite results. After this procedure, disks of different formats showed more or less similar scores;

- Tests were run on bare-metal ESXi 6.7 Update 1, in a VM running Windows Server 2016 (version 5.1.14393.1884) configured according to the official recommendations from VMware;

- The test disk, an Intel SSD DC S3510, was installed in the server and presented to ESXi as a single-disk RAID 0. The first run was conducted with disk caching turned on, and the results were close to those specified by the vendor. Next, disk caching was turned off to keep it from influencing the results. Performance then turned out to be several times lower than the vendor’s figures, but the behavior of the different virtual disk formats was no longer masked by caching, so the results are consistent and comparable.
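The overwrite step from the list above was done with the DD utility. For readers who want to see what that step amounts to, here is a minimal Python sketch of the same idea. It is an assumption-laden stand-in, not the command used in the tests: it fills a regular file rather than a raw device, and the function name and chunk size are made up for illustration.

```python
import os


def prefill(path: str, total_bytes: int, chunk_bytes: int = 1024 * 1024) -> int:
    """Fill `path` with `total_bytes` of random data; return the bytes written.

    Random data is used so that every block is really written once, which
    takes first-write zeroing and thin-disk growth out of later measurements.
    """
    written = 0
    with open(path, "wb") as target:
        while written < total_bytes:
            size = min(chunk_bytes, total_bytes - written)
            target.write(os.urandom(size))
            written += size
        target.flush()
        os.fsync(target.fileno())   # make sure the data reaches the storage
    return written
```

Calling `prefill("testfile.bin", 64 * 1024 * 1024)` would write 64 MiB of random data to a hypothetical `testfile.bin`; any write benchmark run on that file afterwards measures rewrites only.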

Virtual machine configuration:

- 24 x Intel(R) Xeon(R) CPU E5-2690 v3 @ 2.60GHz (CPU);

- 1 x 48 GB (RAM);

- 1 x 20 GB (HDD for System);

- 1 x 445 GB (SSD for Test);

- 1 x LSI Logic SAS (SCSI controller for HDD);

- 1 x VMware Paravirtual (SCSI controller for SSD);

- 1 x 1 Gb/s (VMXNET 3 LAN).

FIO configuration files:

4k random write (random writes in 4k blocks across the whole disk):

[global]

time_based

ramp_time=60

runtime=360

direct=1

ioengine=windowsaio

cpus_allowed=0,2,4,6,8,10,12,14,16,18

filename=\\.\physicaldrive1

numjobs=10

iodepth=1

[4k random write]

rw=randwrite

bs=4k

stonewall

64k sequential write (sequential writes in 64k blocks across the whole disk):

[global]

time_based

ramp_time=60

runtime=360

direct=1

ioengine=windowsaio

cpus_allowed=0,2,4,6,8,10,12,14,16,18

filename=\\.\physicaldrive1

numjobs=10

iodepth=1

[64k sequential write]

rw=write

bs=64k

As you can see, the data speaks for itself. Both assumptions are confirmed: Thick Provision Lazy Zeroed and Thin Provision perform poorly on the first write, and the results change on rewrite.
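Because both job files use `numjobs=10`, FIO reports each job separately unless told otherwise. If FIO is run with `--output-format=json` (an assumption: the article does not say how the results were collected), the per-job numbers can be added up with a short Python helper. The function names and the report file name below are illustrative.

```python
import json


def summarize_writes(fio_report: dict) -> dict:
    """Add up write IOPS and bandwidth over all jobs in a parsed FIO JSON report."""
    jobs = fio_report["jobs"]
    total_iops = sum(job["write"]["iops"] for job in jobs)
    # FIO reports the "bw" field in KiB/s; convert the total to MiB/s.
    total_bw_kib = sum(job["write"]["bw"] for job in jobs)
    return {
        "jobs": len(jobs),
        "iops": total_iops,
        "bw_mib_s": total_bw_kib / 1024,
    }


def load_report(path: str) -> dict:
    """Read a JSON report that FIO wrote to `path` (hypothetical file name)."""
    with open(path, "r", encoding="utf-8") as handle:
        return json.load(handle)
```

For example, `summarize_writes(load_report("4k-randwrite.json"))` would return the combined IOPS and throughput of all ten jobs, which makes the first-write and rewrite runs easy to compare side by side.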

Disks comparison

To sum up, I decided to present these results in a more concise form.

| Disk format | Disk creation speed | Space reservation on the datastore | Zeroing of free space | First-write speed on a data block | Rewrite speed on a data block | Possible use |
|---|---|---|---|---|---|---|
| Thick Provision Lazy Zeroed | Fast | Reserved in full at creation | Before the first write | The lowest | High | VMs not dependent on high performance |
| Thick Provision Eager Zeroed | Slow, depends on the disk size | Reserved in full at creation | While creating the disk, requires a lot of time | High | High | VMs dependent on high performance |
| Thin Provision | Fast | Reserved as needed when data is written. The initial size is 0 B. | When a new block is allocated for new data | Low | High | VMs with limited resources, not dependent on high performance |
| Raw Device Mapping | Fast | Reserved in full, matching the size of the physical storage device | Before the first write | High | High | VMs dependent on high performance |
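The “Possible use” column can be read as a simple decision rule. The Python sketch below encodes one reading of it; the function name, its parameters, and the order of the checks are my own assumptions rather than an official VMware guideline.

```python
def suggest_disk_format(
    needs_fast_first_write: bool,
    needs_direct_lun_access: bool = False,
    datastore_space_is_tight: bool = False,
) -> str:
    """Suggest a disk format based on the comparison table (illustrative only)."""
    if needs_direct_lun_access:
        # SAN snapshot software or MSCS clusters call for a raw LUN.
        return "Raw Device Mapping"
    if needs_fast_first_write:
        # Zeroing is paid at creation time, so first writes are not delayed.
        return "Thick Provision Eager Zeroed"
    if datastore_space_is_tight:
        # Space is taken only as data is written.
        return "Thin Provision"
    # Quick to create, space reserved in full, slower first writes.
    return "Thick Provision Lazy Zeroed"
```

For instance, `suggest_disk_format(needs_fast_first_write=True)` returns `"Thick Provision Eager Zeroed"`. Real deployments will have more criteria than these three flags.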

Conclusion

As usual, I hope this material proves useful, and that it was simple and reliable enough.