Introduction

Many of you have probably already heard about VMware Project Pacific, which was announced at VMworld 2019. It transforms VMware vSphere into a Kubernetes-native platform. It is currently in technology preview and is expected to ship in an upcoming vSphere release.

Project Pacific is part of the VMware Tanzu portfolio of products and services, along with Tanzu Mission Control. Project Pacific provides a platform that orchestrates both containers and VMs, while Mission Control provides lifecycle management for Kubernetes clusters from a single point of control.

What does it do?

Project Pacific was one of the main draws of the latest VMworld conference, and VMware made sure people noticed it. That is not surprising: VMware treats Kubernetes as far more than just another commodity in its portfolio.

To narrow it down to a single point: many customers are now planning to deploy containerized apps directly on physical infrastructure, because this saves on licensing and hypervisor costs while still delivering fast, highly available apps.

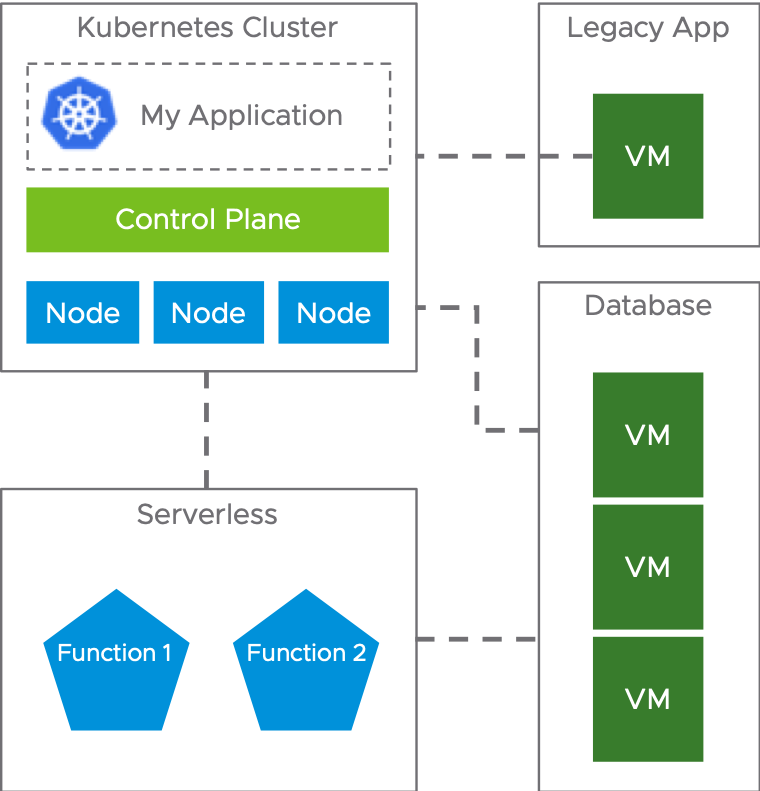

However, even when this works, these apps still need to communicate with corporate services such as databases or directory services. The result is an awkward mix of physical and virtual environments, maintained by several different software development and DevOps teams:

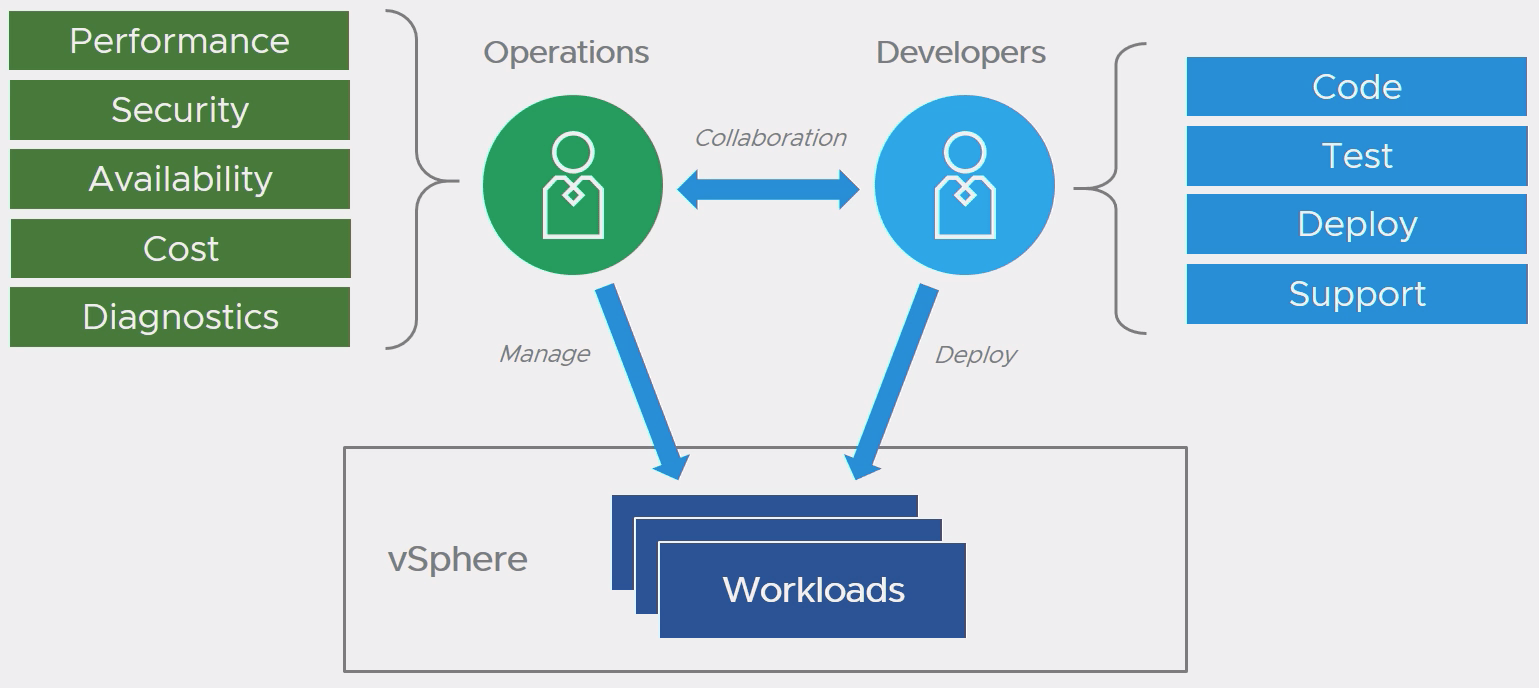

That is why Project Pacific has two primary goals:

- Attracting Kubernetes users to the vSphere platform, with its fault tolerance, load balancing, and other features that are invaluable for VMs running containerized apps. Interestingly, VMware even goes as far as promising NSX, vSAN, and VVols support for application containers.

- Uniting software development teams, DevOps teams, and vSphere admins in one workflow, with the tooling needed to deploy and maintain containerized apps on the vSphere platform.

How does it work?

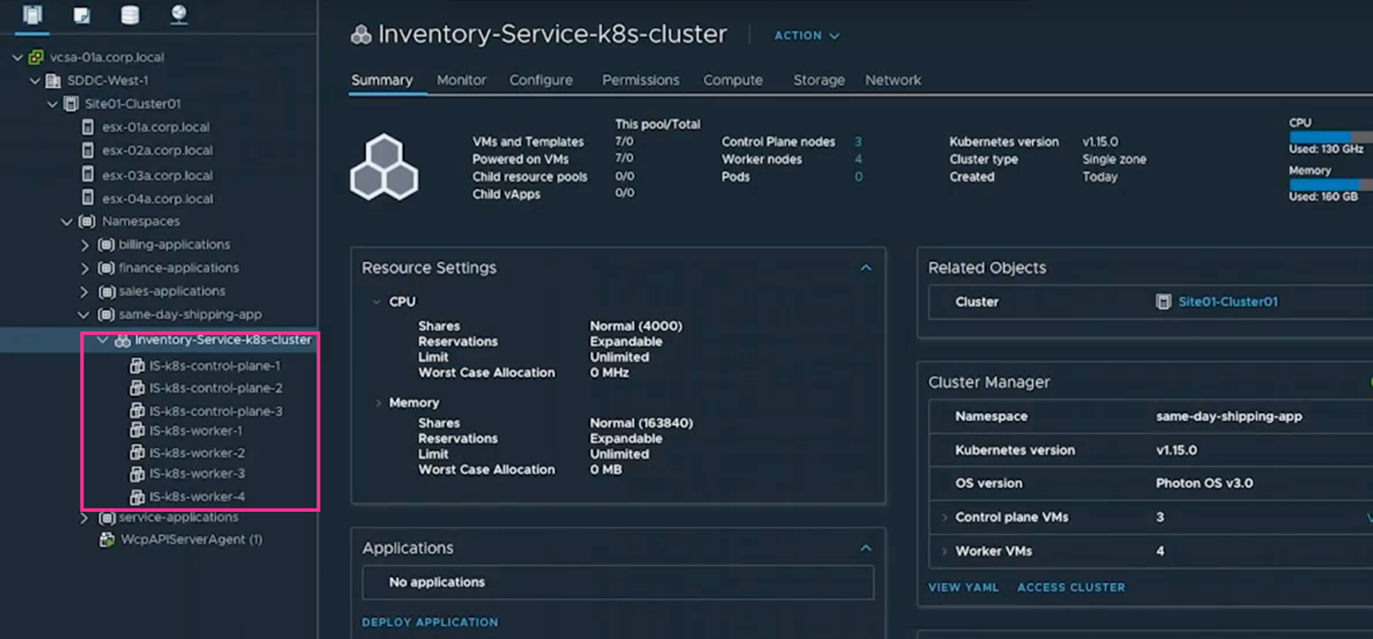

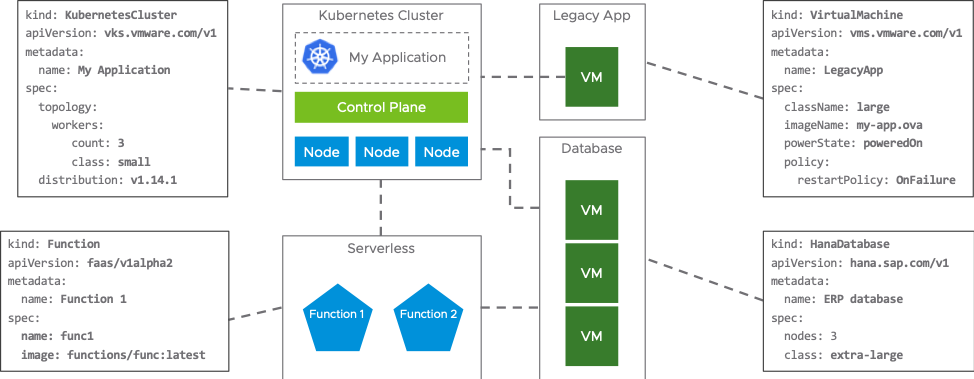

The most important aspect of VMware Project Pacific is its adoption of the declarative paradigm. It gives customers, both UI and API users, the means to describe the state the application orchestration platform should reach, rather than the individual steps to get there. Developers can keep using the native Kubernetes API, with its declarative syntax, to manage cloud resources such as VMs, storage, and networks. Meanwhile, a vSphere admin can manage the infrastructure of a containerized app (Kubernetes pods) in vCenter as a single unit made up of several VMs.
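To make the declarative idea concrete, here is a minimal Python sketch of the reconcile pattern that Kubernetes controllers follow: the user states the desired state, and a controller compares it with the observed state and computes the actions needed to converge. The `reconcile` function, the resource names, and the "name to replica count" model are hypothetical illustrations, not a Project Pacific or Kubernetes API.

```python
from typing import Dict, List, Tuple

# Hypothetical resource model: resource name -> desired replica count.
State = Dict[str, int]
Action = Tuple[str, str, int]  # (verb, resource name, replica count)


def reconcile(desired: State, observed: State) -> List[Action]:
    """Compute the actions that move `observed` toward `desired`.

    The user declares *what* should exist; this function works out *how*
    to get there. Illustrative sketch only.
    """
    actions: List[Action] = []
    # Create missing resources and rescale ones that drifted from the spec.
    for name in sorted(desired):
        want = desired[name]
        if name not in observed:
            actions.append(("create", name, want))
        elif observed[name] != want:
            actions.append(("scale", name, want))
    # Delete resources that exist but are no longer declared.
    for name in sorted(observed):
        if name not in desired:
            actions.append(("delete", name, 0))
    return actions


if __name__ == "__main__":
    desired = {"web": 3, "db": 1}
    observed = {"web": 1, "cache": 2}
    for action in reconcile(desired, observed):
        print(action)
```

Once the computed actions have been applied, calling `reconcile` again returns an empty list. That idempotence is what lets a controller re-run the loop safely at any time.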

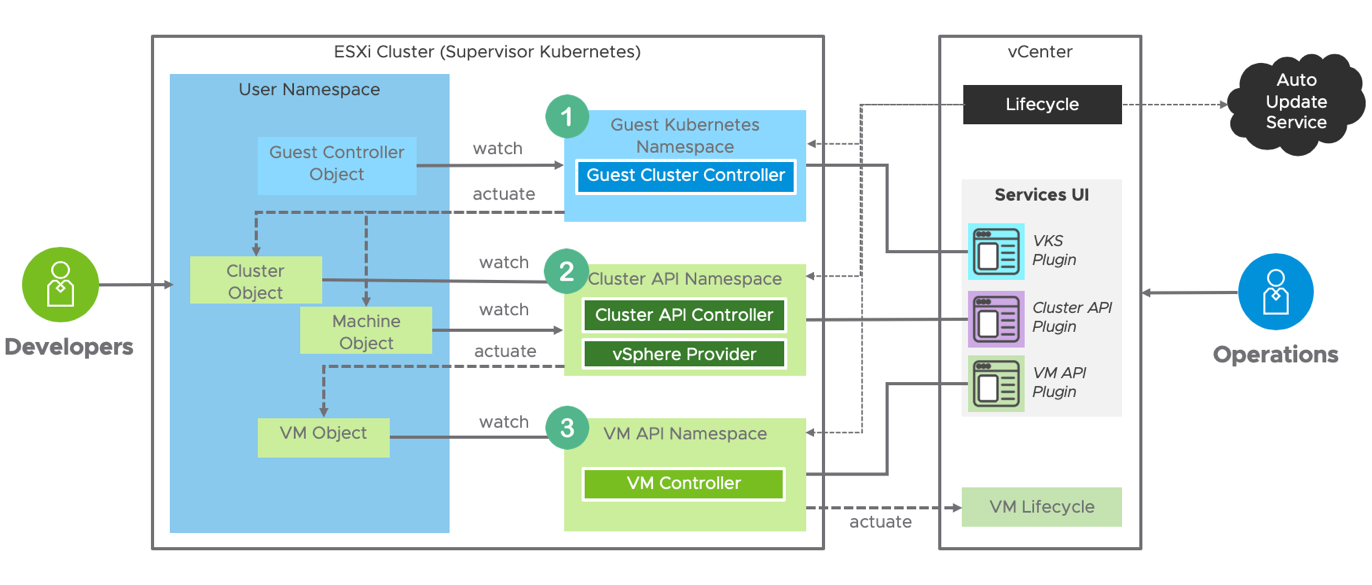

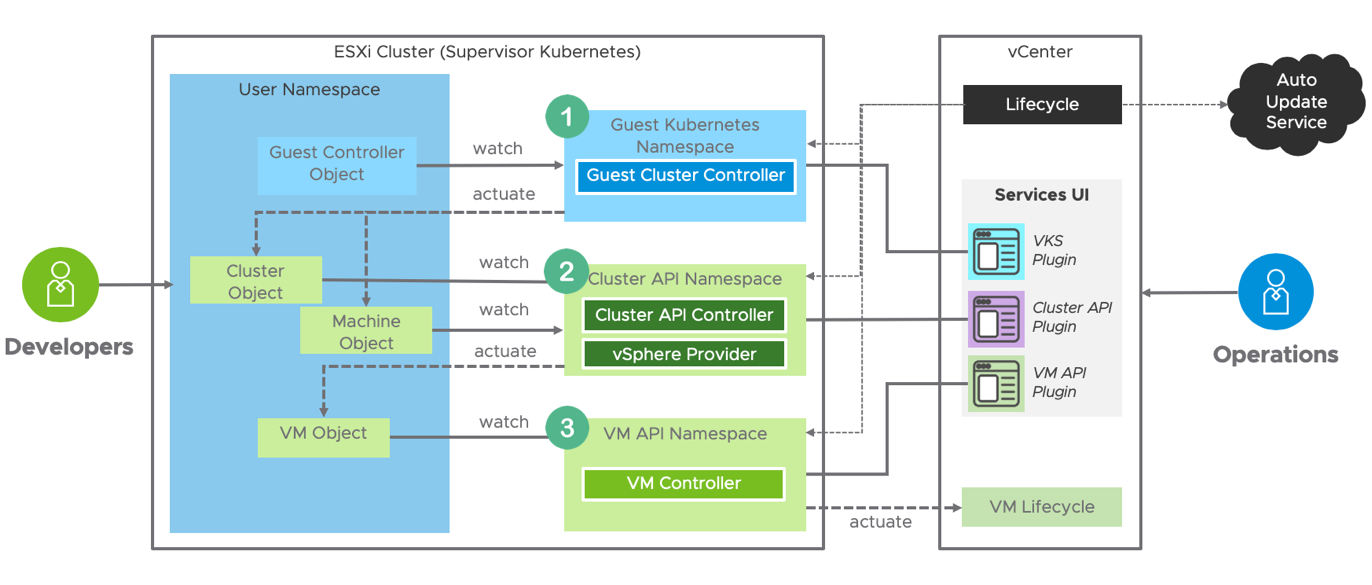

The API is not left behind either. Project Pacific significantly extends the Kubernetes API with Custom Resource Definitions (CRDs), which let DevOps teams access the vSphere API from within Kubernetes.
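As a sketch of how a CRD extends the Kubernetes API in general, the Python snippet below builds a minimal `apiextensions.k8s.io/v1` CustomResourceDefinition. The group `vm.example.com`, the `VirtualMachine` kind, and the `imageName`/`className` fields are hypothetical placeholders; Project Pacific's actual CRDs may use different names and schemas.

```python
import json

# Hypothetical names, chosen only to illustrate the CRD mechanism.
GROUP = "vm.example.com"
KIND = "VirtualMachine"
PLURAL = "virtualmachines"


def make_crd(group: str, kind: str, plural: str) -> dict:
    """Build a minimal apiextensions.k8s.io/v1 CustomResourceDefinition."""
    return {
        "apiVersion": "apiextensions.k8s.io/v1",
        "kind": "CustomResourceDefinition",
        # Kubernetes requires the CRD name to be <plural>.<group>.
        "metadata": {"name": f"{plural}.{group}"},
        "spec": {
            "group": group,
            "scope": "Namespaced",
            "names": {"kind": kind, "plural": plural, "singular": kind.lower()},
            "versions": [
                {
                    "name": "v1alpha1",
                    "served": True,
                    "storage": True,
                    # Structural schema for the new resource (hypothetical fields).
                    "schema": {
                        "openAPIV3Schema": {
                            "type": "object",
                            "properties": {
                                "spec": {
                                    "type": "object",
                                    "properties": {
                                        "imageName": {"type": "string"},
                                        "className": {"type": "string"},
                                    },
                                }
                            },
                        }
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(make_crd(GROUP, KIND, PLURAL), indent=2))
```

After such a CRD is registered, the API server serves `VirtualMachine` objects like any built-in resource, so `kubectl get virtualmachines` works in namespaces where they exist.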

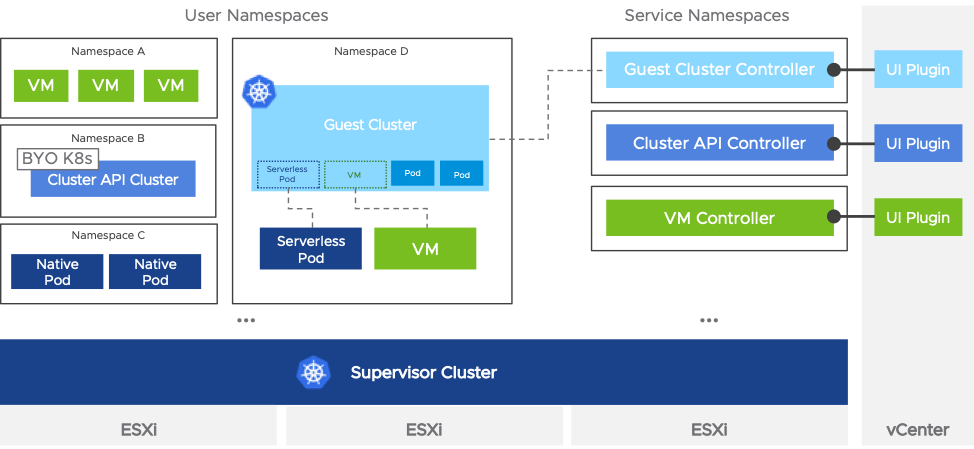

Introducing Project Pacific into an existing vSphere infrastructure adds the following components to the environment:

- Supervisor Cluster – a special kind of Kubernetes cluster that uses ESXi hosts as its worker nodes. To achieve this, VMware developed its own version of the kubelet that integrates directly into ESXi (the implementation is called the “Spherelet”).

By design, the Supervisor Cluster is a Kubernetes cluster extended with functions that the vSphere platform exposes through the vSphere API. In other words, it is how vSphere itself uses Kubernetes technology. To provision a VM, a container, or any other resource, an application admin just writes a YAML file and deploys it with kubectl, exactly as on any other Kubernetes platform.

Kubernetes users manage VMs through a Virtual Machine operator, which supports RLS settings, Storage Policy, and Compute Policy. It also provides APIs for managing Machine Classes and Machine Images (the latter appear to the vSphere admin as a Content Library).
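The write-a-manifest-and-apply-it workflow described above is the standard Kubernetes one. As a minimal sketch, the Python snippet below builds an ordinary `v1` Pod manifest and serializes it to JSON, which `kubectl apply -f` accepts just like YAML. The pod name, image, namespace, and resource requests are placeholders, and nothing here is specific to Project Pacific.

```python
import json


def make_pod(name: str, image: str, namespace: str) -> dict:
    """Build a minimal core/v1 Pod manifest as a Python dictionary."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": 80}],
                    # Requests tell the scheduler how much CPU/memory to reserve.
                    "resources": {"requests": {"cpu": "250m", "memory": "128Mi"}},
                }
            ]
        },
    }


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(json.dumps(make_pod("web", "nginx:1.25", "demo"), indent=2))
```

Saved as, say, a hypothetical `make_pod.py`, it can be used as `python make_pod.py > pod.json` followed by `kubectl apply -f pod.json`.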

- CRX Runtime – as you probably already know, a VM is run by the VMM (virtual machine monitor) and the VMX (virtual machine executive). Specifically for Kubernetes, VMware has added a third component, called the CRX (container runtime executive), which runs a containerized app. It is a kind of virtual machine whose guest OS contains only a Linux kernel (Photon OS) and a minimal container runtime. Because it is focused on this one job and most other features have been stripped out, it can start in less than a second, according to VMware.

VMware calls this technology Native Pods; a single ESXi host can run more than 1,000 of them at once. Note that these pods use a paravirtualized Linux kernel that talks directly to the host hypervisor, so they can be a lot faster than you might expect.

For CRX Runtime objects, VMware designed Direct Boot, which speeds up startup significantly. A traditional VM boot involves discovering devices and building in-memory structures; CRX skips that work because it uses pre-created in-memory structures.

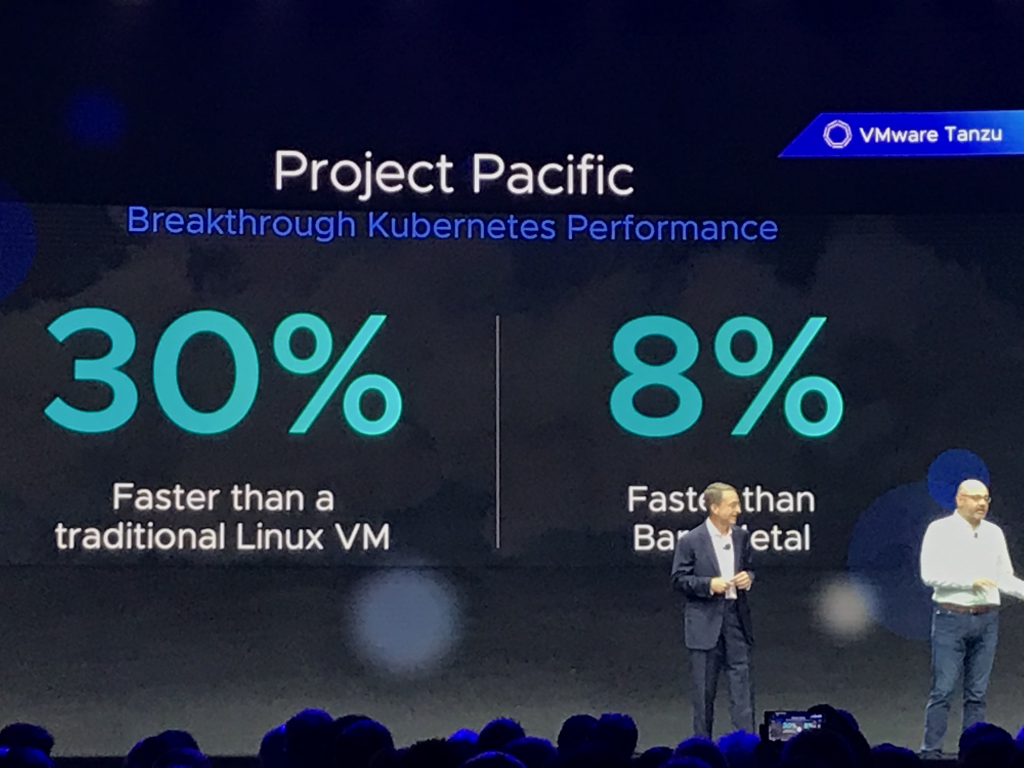

VMware didn't stop there: it announced that Kubernetes workloads on Project Pacific perform 30% better than ones running inside a virtual machine and 8% better than on bare metal.

VMware attributes this to ESXi traditionally handling the NUMA architecture better than Linux does. Of course, this sounds like a marketing move, but it would be great to see the actual benchmark results.

The container problem and VMware's solution

Despite all the pros, there are clearly cons. This approach to containerizing apps leaves the datacenter with so many VMs that they are almost impossible to manage manually! And that is before anyone even mentions high availability (HA), encryption, vMotion migrations for balancing load across ESXi hosts, and so on.

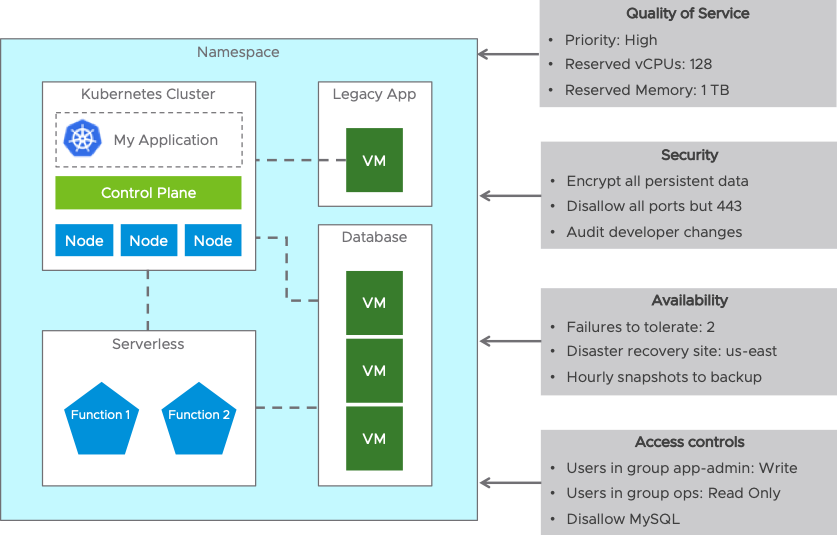

Furthermore, a typical app architecture consists of a Kubernetes cluster with many nodes running containers, plus external services running in VMs.

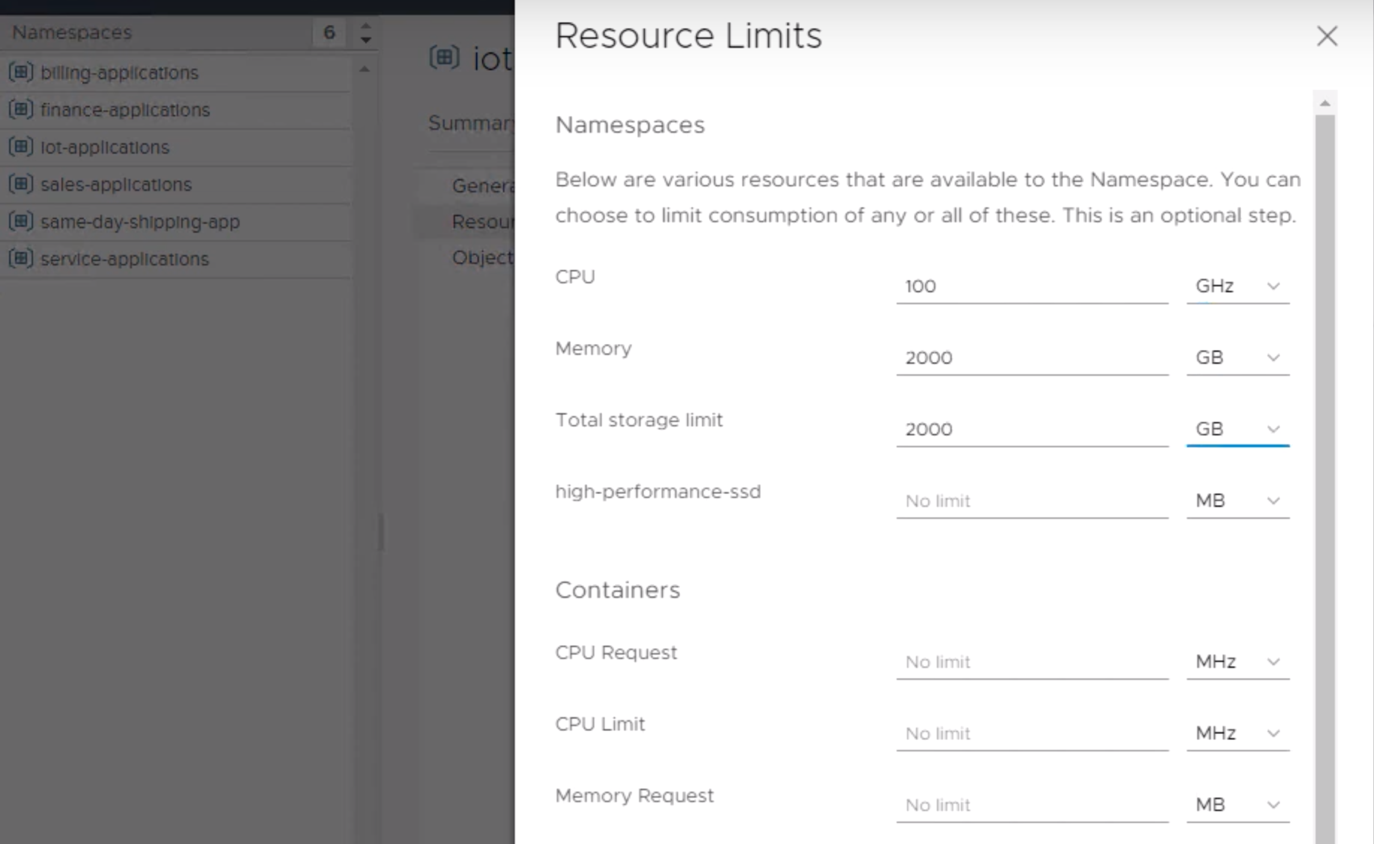

To deal with this, VMware builds on the Kubernetes Namespace concept. In Project Pacific, a Namespace groups a collection of different objects, such as CRX VMs and VMX VMs, and is the unit to which QoS, security, and availability policies are applied.

For example, you can set resource limits on a Namespace.
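The article does not say how the limits a vSphere admin sets on a Namespace map onto Kubernetes objects, so as an assumption the sketch below uses the upstream Kubernetes analogue, a `v1` ResourceQuota, plus a simplified version of the admission check the quota enforces. The quota name, the namespace, and the helper functions are hypothetical, and the quantity parser handles only the `m`, `Ki`, `Mi`, and `Gi` suffixes.

```python
# Binary suffixes for memory quantities (a subset of what Kubernetes accepts).
_MEM_SUFFIXES = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3}


def cpu_millis(q: str) -> int:
    """Parse a CPU quantity such as "500m" or "2" into millicores."""
    if q.endswith("m"):
        return int(q[:-1])
    return int(round(float(q) * 1000))


def mem_bytes(q: str) -> int:
    """Parse a memory quantity such as "512Mi" or "4Gi" into bytes."""
    for suffix, factor in _MEM_SUFFIXES.items():
        if q.endswith(suffix):
            return int(q[: -len(suffix)]) * factor
    return int(q)  # plain bytes


def make_quota(namespace: str, cpu: str, memory: str) -> dict:
    """Build a v1 ResourceQuota capping total CPU/memory requests in a namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "compute-quota", "namespace": namespace},
        "spec": {"hard": {"requests.cpu": cpu, "requests.memory": memory}},
    }


def fits(quota: dict, used: dict, request: dict) -> bool:
    """Return True if `request` can be admitted without exceeding the quota.

    `used` and `request` map "cpu" and "memory" to quantity strings.
    Simplified model of what quota admission checks at creation time.
    """
    hard = quota["spec"]["hard"]
    cpu_total = cpu_millis(used["cpu"]) + cpu_millis(request["cpu"])
    mem_total = mem_bytes(used["memory"]) + mem_bytes(request["memory"])
    return (cpu_total <= cpu_millis(hard["requests.cpu"])
            and mem_total <= mem_bytes(hard["requests.memory"]))


if __name__ == "__main__":
    quota = make_quota("team-a", "4", "8Gi")
    used = {"cpu": "3", "memory": "6Gi"}
    print(fits(quota, used, {"cpu": "500m", "memory": "1Gi"}))  # within limits
    print(fits(quota, used, {"cpu": "2", "memory": "1Gi"}))     # CPU would exceed 4
```

With 3 CPUs and 6 GiB already in use under a 4 CPU / 8 GiB quota, a 500m / 1 GiB request is admitted, while a 2 CPU request is rejected because the namespace total would reach 5 CPUs.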

To see how this works, check the video below:

Guest Clusters

Because the Supervisor Cluster uses a different kubelet implementation (the Spherelet), it is not a conformant Kubernetes cluster, so some containerized apps may well run into problems on it.

To address this, VMware developed the Guest Kubernetes Cluster: an ordinary Kubernetes cluster that runs inside virtual machines on the Supervisor Cluster. A Guest Cluster is fully upstream-compliant Kubernetes; it simply runs on VMs rather than on bare-metal servers.

So, while the Supervisor Cluster is a vSphere cluster that uses Kubernetes as a “platform platform”, the Guest Clusters are Kubernetes clusters running on vSphere.

To see the difference for yourself, check it here (guest server Namespace D):

Finally, Guest Clusters in vSphere use the open-source Cluster API project for lifecycle management of the cluster components. A developer sees a Guest Cluster just like any ordinary cluster, while an operator can reach it through the vCenter plugins.
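Cluster API describes whole clusters declaratively as Kubernetes objects. As a sketch, the snippet below builds a minimal upstream Cluster API `Cluster` object that delegates its control plane to a kubeadm-based `KubeadmControlPlane` and its infrastructure to a `VSphereCluster` from the upstream cluster-api-provider-vsphere project. The API versions, object names, and pod CIDR are assumptions based on upstream Cluster API; the objects Project Pacific exposes for Guest Clusters may differ.

```python
import json


def make_cluster(name: str, namespace: str, pod_cidr: str) -> dict:
    """Build a minimal upstream Cluster API `Cluster` object (v1beta1 shape)."""
    return {
        "apiVersion": "cluster.x-k8s.io/v1beta1",
        "kind": "Cluster",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pod network range for the workload (guest) cluster.
            "clusterNetwork": {"pods": {"cidrBlocks": [pod_cidr]}},
            # The control plane lifecycle is handled by a separate object.
            "controlPlaneRef": {
                "apiVersion": "controlplane.cluster.x-k8s.io/v1beta1",
                "kind": "KubeadmControlPlane",
                "name": f"{name}-control-plane",
            },
            # VMs, networks, etc. are delegated to the vSphere infrastructure provider.
            "infrastructureRef": {
                "apiVersion": "infrastructure.cluster.x-k8s.io/v1beta1",
                "kind": "VSphereCluster",
                "name": name,
            },
        },
    }


if __name__ == "__main__":
    # Placeholder names and CIDR for illustration only.
    print(json.dumps(make_cluster("guest-1", "team-a", "192.168.0.0/16"), indent=2))
```

Splitting the cluster into a generic `Cluster` object plus provider-specific references is what lets the same lifecycle tooling drive clusters on different infrastructures.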

Conclusion

Yes, we remember that this is not the first time VMware has tried something similar with containers (see, for example, Project Bonneville). However, this is the first time the result is not an interim tool but a fully fledged, native Kubernetes platform that targets both the software development and the DevOps teams responsible for containerized apps. At the same time, an ordinary vSphere admin can still work with it after learning the basics of Namespaces and the Supervisor Cluster.

The idea also has some less obvious cons. First, while a datacenter typically holds dozens or, at most, hundreds of VMs, it can hold thousands of Native Pods! Digging through all of them will simply overwhelm admins. Even Namespaces won't save the situation, because you will still have to approach the problem from different angles, such as vSphere clusters or virtual networks.

Second, the more ways there are to manage a Kubernetes cluster, the more complicated managing it becomes. Admins may end up spending most of their time on exhausting searches for the root cause of problems across all the services.