VMware virtual hardware version 20 in vSphere 8 improves several existing capabilities and also brings some completely new features to virtual machines (VMs). As in the past, each release of vSphere allows bigger VMs, greater VM density per host, or greater overall scalability in management via vCenter Server. vSphere 8.0 is no different, and VMware has raised the maximums in this version so much that it even had a hard time finding hardware to test those specs.

vSphere 8 promises to be more efficient when deployed with some of the newest hardware innovations, such as Smart NICs. You might not know it, but up to 30% of CPU capacity is consumed by networking and security.

With Smart NICs, you’ll be able to install an ESXi instance on the NIC itself to run network-centric workloads, freeing up to 20% of the processing power of the CPU cores. This offloads the networking part from your main CPU, which allows even better density per host and more efficient clusters.

Scalability Improvements in virtual hardware version 20

- Support for latest Intel and AMD CPUs

- Device virtualization Extensions

- Up to 32 DirectPath I/O devices

- vSphere DataSets

- Application Aware Migrations

- Latest Operating system support

- Up to 8 vGPU devices

- Device Groups

- High Latency Sensitivity improvements with Hyperthreading

Virtual machines using hardware version 20 provide the best performance and latest features available in ESXi 8.0.

Windows 11 deployment at scale with Virtual TPM provisioning policy

Many VMware admins will have to deal with Windows 11 in their environments sooner or later. The best practice for Windows 11 deployments is for each VM to include a unique vTPM device, both to comply with Microsoft’s minimum hardware requirements and to ensure a higher level of security. Windows 11 requires a vTPM device to be present in the virtual machine. You can perhaps work around this in lab environments, but in production environments you should comply with Microsoft’s requirements.

When you clone a VM that has a vTPM device in VMware vSphere, you can introduce a security risk, because the TPM secrets are cloned together with that VM (the clone is an identical copy).

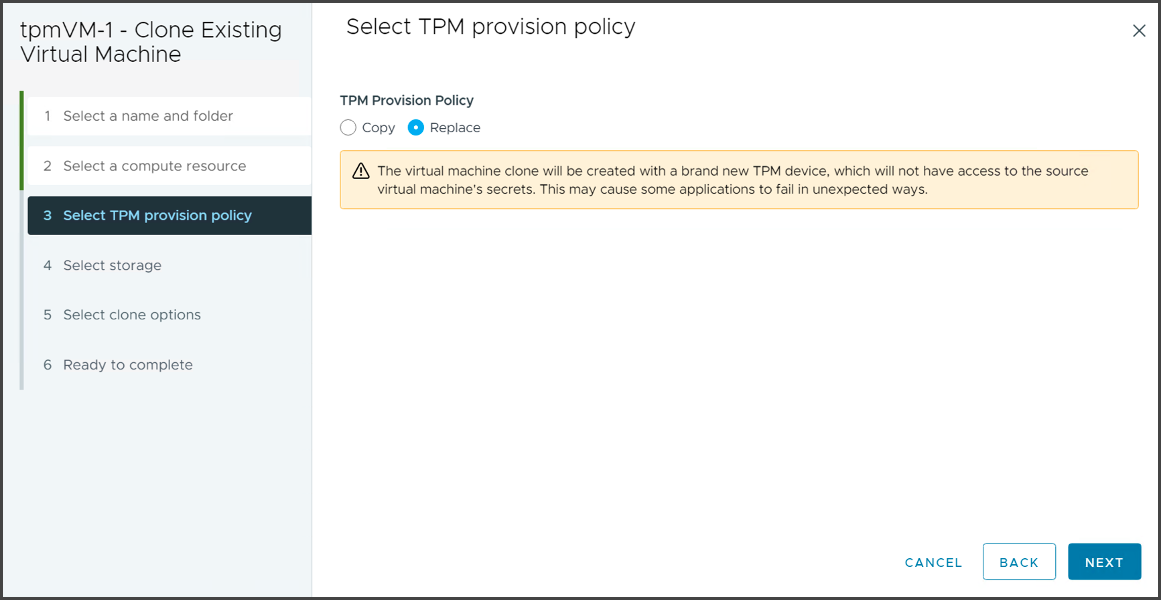

That’s why you might want to take advantage of the new vTPM provisioning policy that will be introduced in vSphere 8.0. With this policy, you’ll be able to choose whether to copy or replace the vTPM.

The two choices you’ll have are:

- Copy TPM – vSphere will clone a VM with the same TPM device as the source VM. This might, as said above, introduce a security risk.

- Replace TPM – The VM clone will be created with a completely new TPM device that will not have any access to the source VM’s secrets. If this causes problems for some of your applications, you might prefer to use the Copy option instead.
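To make the difference between the two choices concrete, here is a minimal sketch in plain Python. It is only a toy model, not vSphere code: the `Vm` and `VirtualTpm` classes, the `clone_vm` function, and the `"copy"`/`"replace"` strings are hypothetical names invented for illustration, and the random hex string merely stands in for the secrets a vTPM protects.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class VirtualTpm:
    # Stand-in for the secrets a vTPM protects (illustrative only).
    secret: str = field(default_factory=lambda: secrets.token_hex(16))


@dataclass
class Vm:
    name: str
    vtpm: VirtualTpm


def clone_vm(source: Vm, new_name: str, policy: str) -> Vm:
    """Clone `source` using a hypothetical 'copy' or 'replace' vTPM policy."""
    if policy == "copy":
        # The clone carries the same TPM secrets as the source VM.
        return Vm(new_name, VirtualTpm(secret=source.vtpm.secret))
    if policy == "replace":
        # The clone gets a fresh vTPM with no access to the source's secrets.
        return Vm(new_name, VirtualTpm())
    raise ValueError(f"unknown vTPM policy: {policy!r}")


template = Vm("win11-template", VirtualTpm())
copied = clone_vm(template, "win11-a", "copy")
replaced = clone_vm(template, "win11-b", "replace")

print(copied.vtpm.secret == template.vtpm.secret)    # True: secrets are shared
print(replaced.vtpm.secret == template.vtpm.secret)  # False: unique vTPM
```

In this model, every clone made with "copy" shares one secret with the template, which is exactly the risk described above, while "replace" gives each clone its own.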

So, if you plan to deploy Windows 11 at scale, the vTPM provisioning policy will help you easily give each cloned VM a unique TPM device.

When deploying Windows 11 VMs from a template or by cloning, the wizard will offer an option that lets you choose which policy you need.

Please note that all screenshots within this post are from VMware as vSphere 8 has not been released yet and the vSphere 8 beta is currently under strict NDA.

TPM Provision policy for Windows 11 VMs

As you can see, in vSphere 8, the vTPM devices can be automatically replaced during clone or deployment operations. vSphere 8.0 will also have an advanced setting that allows you to force the default behavior.

The advanced setting is:

vpxd.clone.tpmProvisionPolicy

and the default clone behavior can be set to REPLACE.
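As a rough sketch of how such a default could interact with a per-clone choice, the Python below resolves the policy for one clone operation. The setting name comes from the text above; everything else is an assumption made for illustration: the `effective_policy` helper is hypothetical, the settings are modeled as a plain dictionary, and the rule that an explicit choice in the wizard wins over the configured default is my reading rather than documented behavior.

```python
from typing import Mapping, Optional

SETTING_KEY = "vpxd.clone.tpmProvisionPolicy"  # name taken from the article
VALID_POLICIES = {"copy", "replace"}


def _normalize(value: str) -> str:
    """Accept COPY/REPLACE in any letter case and reject anything else."""
    policy = value.strip().lower()
    if policy not in VALID_POLICIES:
        raise ValueError(f"unsupported vTPM policy: {value!r}")
    return policy


def effective_policy(
    advanced_settings: Mapping[str, str],
    requested: Optional[str] = None,
) -> Optional[str]:
    """Resolve the policy for one clone operation (hypothetical helper).

    Assumption: an explicit per-clone choice overrides the configured default.
    Returns None when neither is set, leaving the platform's built-in default.
    """
    if requested is not None:
        return _normalize(requested)
    configured = advanced_settings.get(SETTING_KEY)
    if configured is not None:
        return _normalize(configured)
    return None


settings = {SETTING_KEY: "REPLACE"}
print(effective_policy(settings))                    # replace
print(effective_policy(settings, requested="copy"))  # copy
print(effective_policy({}))                          # None
```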

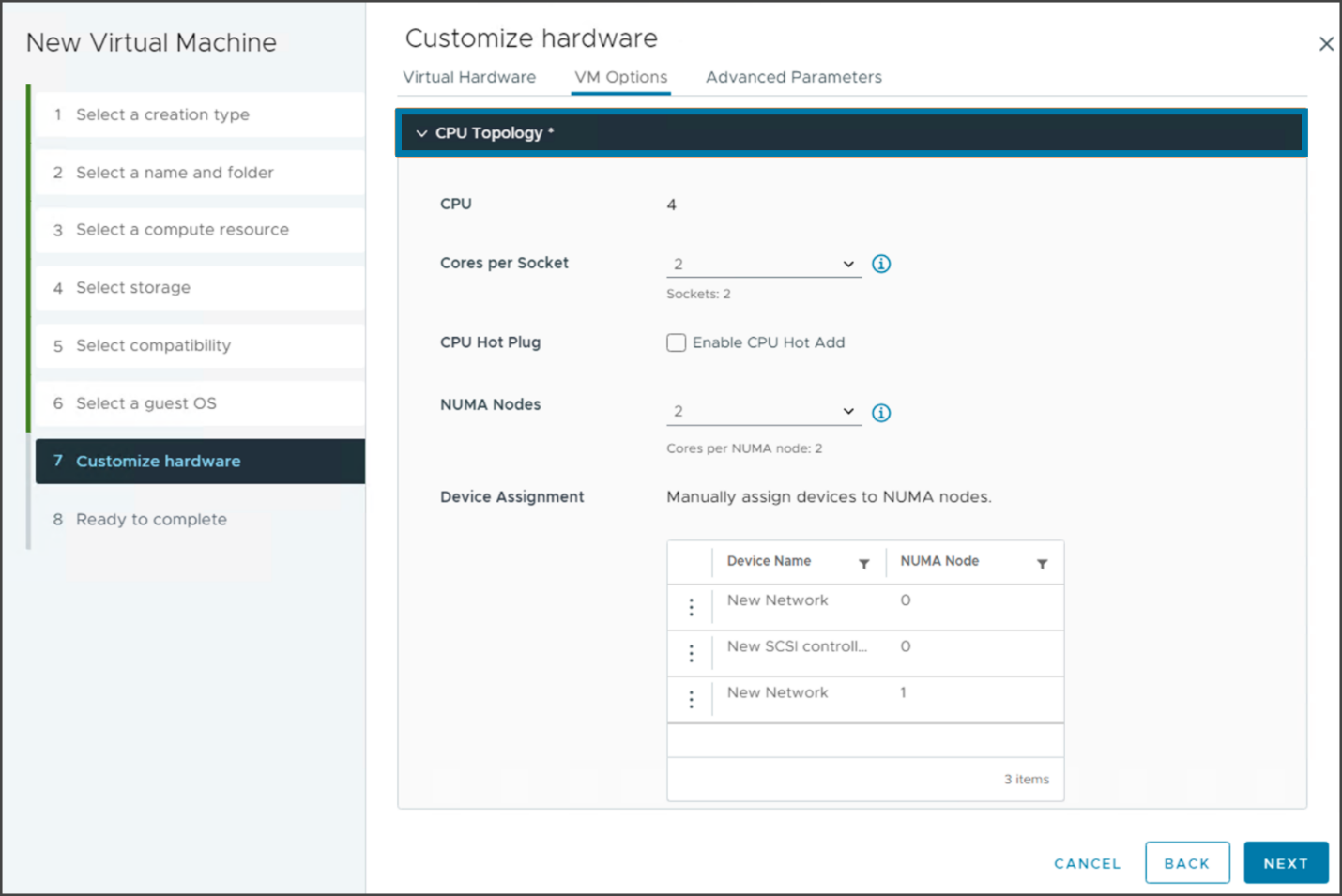

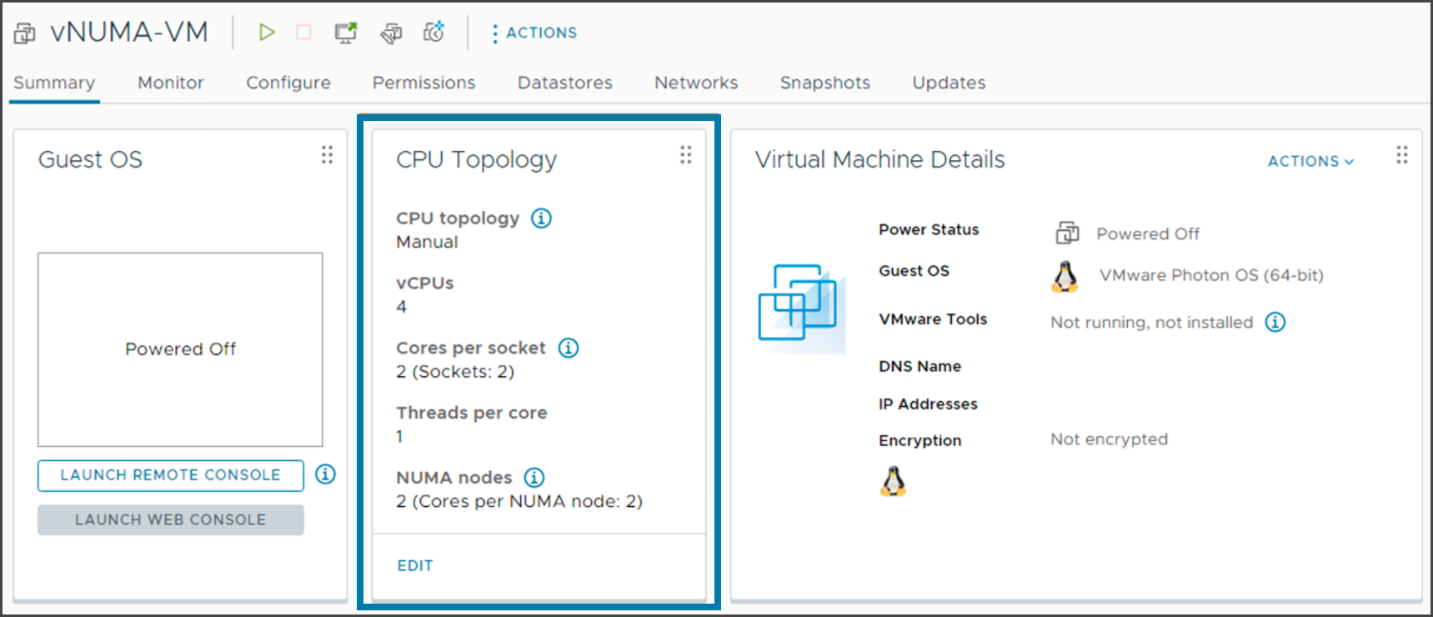

NUMA Nodes exposed in vSphere Client

vSphere 8 and virtual hardware version 20 will let you configure the vNUMA topology for your VMs via the UI. Previously this was only doable via advanced VM settings or via PowerCLI. In vSphere 7.x, you associate a NUMA node with a virtual machine to specify NUMA node affinity, which constrains the set of NUMA nodes on which ESXi can schedule the virtual machine’s virtual CPUs and memory.
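The idea of NUMA node affinity as a constraint can be illustrated with a few lines of Python. This is a conceptual sketch only: `schedulable_nodes` is a hypothetical function, NUMA nodes are modeled as plain integers, and nothing here talks to ESXi.

```python
from typing import Iterable, List, Optional


def schedulable_nodes(
    host_nodes: Iterable[int],
    affinity: Optional[Iterable[int]] = None,
) -> List[int]:
    """Return the NUMA nodes a VM may be scheduled on (toy model).

    Without affinity, every host node is a candidate. With affinity,
    only the listed nodes are, and each must exist on the host.
    """
    host = set(host_nodes)
    if affinity is None:
        return sorted(host)
    wanted = set(affinity)
    unknown = wanted - host
    if unknown:
        raise ValueError(f"NUMA nodes not present on host: {sorted(unknown)}")
    return sorted(wanted)


print(schedulable_nodes([0, 1, 2, 3]))               # [0, 1, 2, 3]
print(schedulable_nodes([0, 1, 2, 3], affinity=[1]))  # [1]
```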

Use vSphere Client to configure the vNUMA topology for virtual machines

The new setting will then also be visible on the Summary tab, where the tile displaying the CPU topology details shows the NUMA node details.

vSphere 8 and NUMA topology details on the CPU topology tile

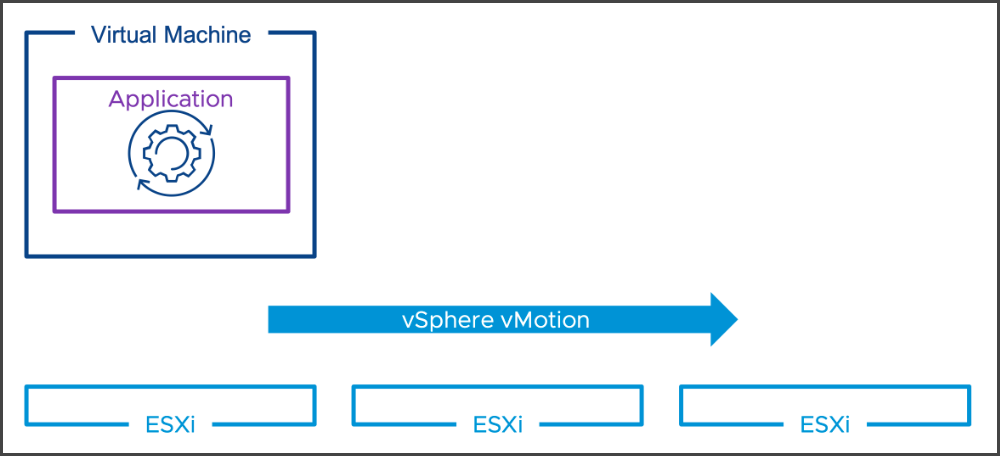

vSphere 8 application aware migrations

Some applications do not tolerate the stun times during vMotion operations at all. These are latency-sensitive workloads such as time-sensitive applications, voice over IP, or clustered applications.

What is a “stun”?

A stun is basically a quiesce. A “stun” operation means VMware will pause the execution of the VM at an instruction boundary and allow in-flight disk I/Os to complete. The stun operation itself is not normally expensive (typically a few hundred milliseconds, but it could be longer if there is any sort of delay elsewhere in the I/O stack).

VMware introduces a way for application vendors to interact with the vSphere management layer, via VMware Tools, to make their applications migration-aware. Before a vMotion event, a notification is sent to the application so that it knows the migration will occur soon. The application can then prepare for vMotion (gracefully shut down some services or, in the case of clustered applications, fail over to another preconfigured cluster). When the migration takes place, it occurs more quickly, and a notification is sent back to the application when it has finished.

This technology allows the application to be aware of the vMotion operation and prepare for it, rather than being suddenly stunned without knowing why.
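The notify, prepare, migrate, notify sequence described above can be sketched as a small Python simulation. All names here (`MigrationAwareApp`, `on_migration_pending`, `on_migration_complete`, `migrate`) are hypothetical and chosen for illustration; the real interaction goes through VMware Tools and is not modeled here. The choice to skip the migration when the application reports it is not ready is also an assumption of this sketch.

```python
from typing import Callable, List


class MigrationAwareApp:
    """Toy application that reacts to migration notifications."""

    def __init__(self, log: List[str]) -> None:
        self.log = log

    def on_migration_pending(self) -> bool:
        # Prepare: e.g. stop services gracefully or fail over a cluster role.
        self.log.append("app: prepared for migration")
        return True  # tell the platform it may proceed

    def on_migration_complete(self) -> None:
        # Resume normal operation once the move has finished.
        self.log.append("app: resumed after migration")


def migrate(app: MigrationAwareApp, do_vmotion: Callable[[], None]) -> bool:
    """Notify the app, wait for it to get ready, migrate, then notify again."""
    if not app.on_migration_pending():
        return False  # assumption: an unprepared app blocks the migration
    do_vmotion()
    app.on_migration_complete()
    return True


events: List[str] = []
ok = migrate(MigrationAwareApp(events), lambda: events.append("platform: vMotion"))
print(ok)
for line in events:
    print(line)
```

The point of the sketch is the ordering: the application always sees the pending notification before the stun and the completion notification after it.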

vSphere 8 and application aware migrations

Improved performance for latency-sensitive applications

VMware has been improving support for latency-sensitive workloads over several vSphere releases, and each release introduces new and better ways to do it. In vSphere 8, VMware will allow you to use hyper-threading to support this kind of workload.

Workloads for telco providers that need high latency sensitivity with hyper-threading will be possible in vSphere 8, thanks to support designed specifically for those applications that delivers better performance.

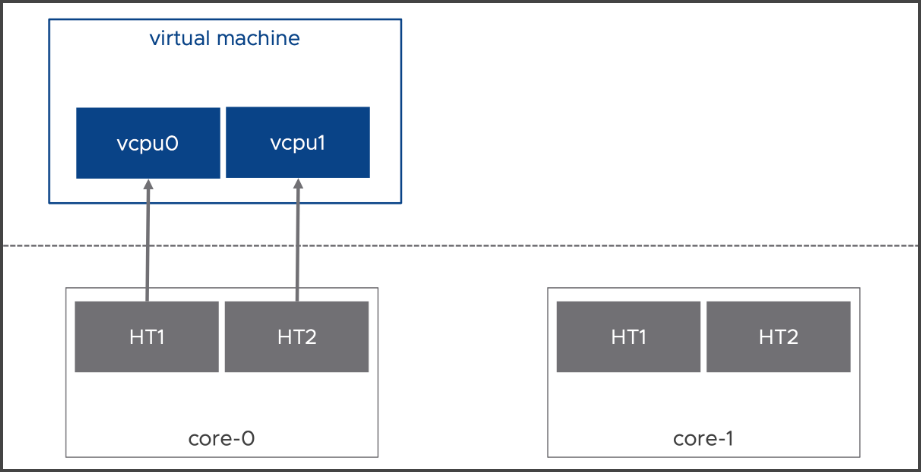

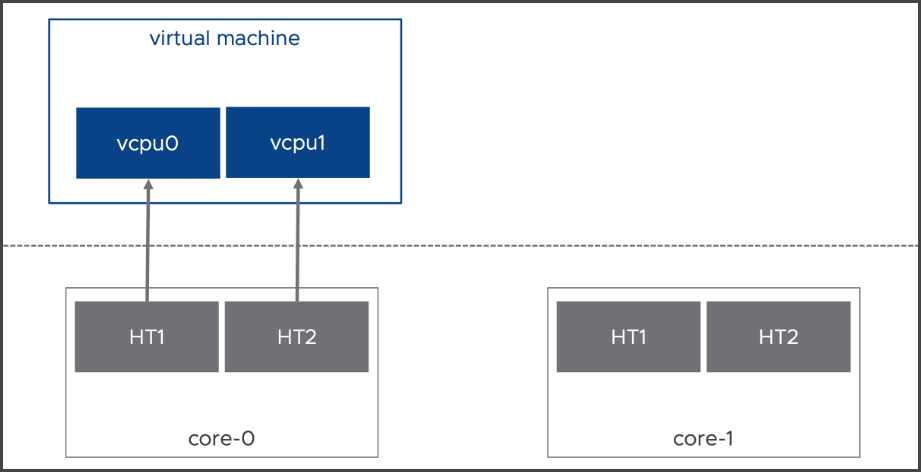

With this technology, if configured at the VM level, the VM’s vCPUs are scheduled on the same hyper-threaded physical CPU core.

High latency sensitivity with hyper-threading

The setting can be enabled at the per-VM level with VMware virtual hardware version 20.

To use this technology, you first have to upgrade the host to ESXi 8.0, then upgrade the VM’s virtual hardware to version 20, and then enable the setting.
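The order of those three steps can be expressed as a simple precondition check. The Python below is a sketch under stated assumptions: `VmState` and `next_step` are hypothetical names, and the version numbers are supplied by hand rather than queried from a real host or VM.

```python
from dataclasses import dataclass


@dataclass
class VmState:
    host_esxi_major: int   # major ESXi version of the host, e.g. 7 or 8
    hardware_version: int  # VM virtual hardware version, e.g. 19 or 20


def next_step(state: VmState) -> str:
    """Return the next action needed before the setting can be enabled."""
    if state.host_esxi_major < 8:
        return "upgrade the host to ESXi 8.0"
    if state.hardware_version < 20:
        return "upgrade the VM's virtual hardware to version 20"
    return "enable high latency sensitivity with hyper-threading"


print(next_step(VmState(host_esxi_major=7, hardware_version=19)))
print(next_step(VmState(host_esxi_major=8, hardware_version=19)))
print(next_step(VmState(host_esxi_major=8, hardware_version=20)))
```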

Virtual Hardware 20 and High Latency Sensitivity with HyperThreading

Final thoughts

All those improvements are slowly bringing the platform toward perfection. We could already say that when vSphere 7 was released, but VMware still finds new areas to improve so that the vSphere platform can adapt to all use cases. We now have vSphere with Tanzu for container workloads. We have new improvements in scalability where, as even VMware admits, there is almost no more room for improvement. We have public cloud management support and a new version of vSAN, vSAN ESA, with a single-tier architecture. I know we haven’t talked about this in detail yet, but I promise to cover the latest and greatest innovations for vSAN in one of our future articles.