VMware persistent memory was introduced with vSphere 6.7 and has been improved since, so it is time to walk through the details and the enhancements that have been made. It is a very interesting technology when used with supported hardware. It can boost the performance of your workloads, but you must pay attention to support, configuration and performance.

When you add persistent memory to your ESXi host, the host detects the hardware, then formats and mounts it as a local PMem datastore. ESXi uses VMFS-L as the file system format. There can be only one local PMem datastore per host.

What is vSphere Persistent Memory?

vSphere persistent memory (PMem) takes advantage of your server’s hardware: the motherboard must support PMem modules, because they plug into the DIMM slots. PMem is non-volatile, which means it keeps its data across reboots and unplanned downtime. The device is nearly as fast as RAM, with the advantage of retaining the data you store even if your server loses power.

When it comes to the cost of this technology, you have to consider your real needs and your real use case. In general, PMem cost sits between DRAM and NVMe SSD. NVMe SSDs bring a real benefit for your storage, but if you have an application that needs the lowest possible storage latency, the answer is PMem.

PMem sits in the DIMM slots, much closer to the CPU than traditional storage, so latency is lower and performance is higher.

PMem modules are available from major hardware manufacturers such as Dell or Intel. Intel’s Optane DC persistent memory has been designed to improve performance and give you almost RAM speed. The latency is a little higher than RAM, but it is still measured in nanoseconds.

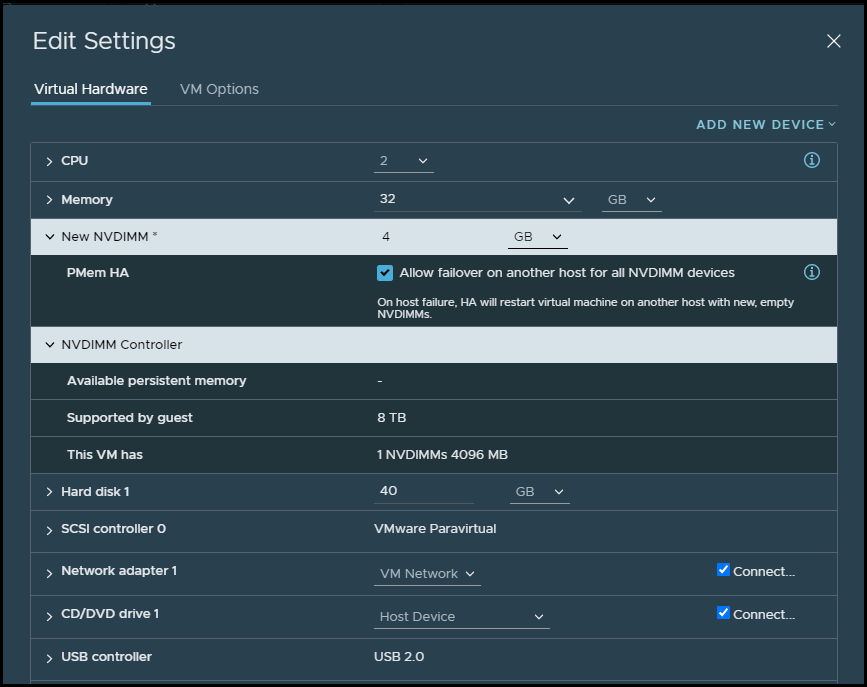

You can configure PMem to allow failover to another host for all NVDIMM devices. If a host fails, HA restarts the virtual machine on another host with new, empty NVDIMMs.

Screenshot from VMware.

Add PMem when provisioning new VM or edit existing VM’s config

There are different access modes with PMem. Let’s have a look.

PMem Access Modes

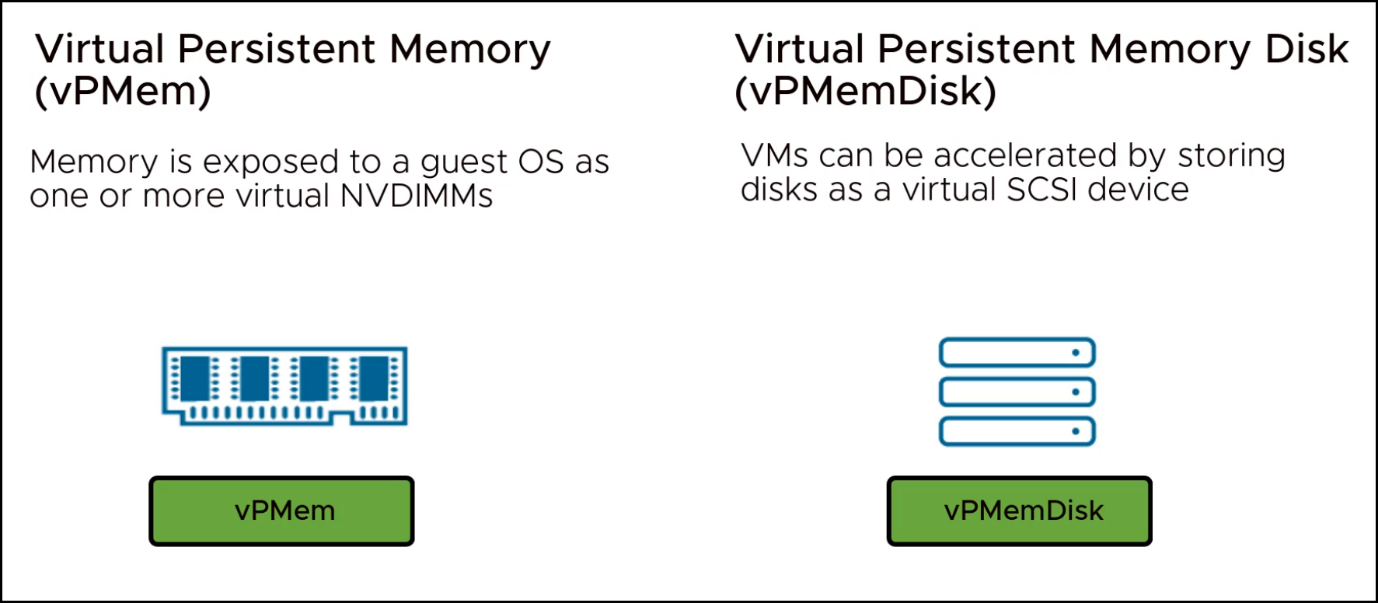

When you configure your VMs with PMem, those VMs can have direct access to persistent memory. Traditional VMs that are not configured for persistent memory can use fast virtual disks stored on the PMem datastore.

Direct-Access Mode – In this mode, also called virtual PMem (vPMem) mode, the VM uses the NVDIMM module as standard byte-addressable memory that persists across power cycles, reboots and power failures. You don’t lose your data. You can add one or several NVDIMM modules when provisioning the VM.

The VMs must use the ESXi 6.7 hardware version (version 14) or later and run a PMem-aware guest OS, for example Windows Server 2016 or later. Each NVDIMM device is automatically stored on the PMem datastore.
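To make "byte-addressable and persistent" more concrete, here is a minimal sketch in Python. It is an analogy only: it memory-maps an ordinary temporary file as a stand-in, and the helper name `write_and_reopen` is hypothetical. Inside a PMem-aware guest the mapped file would typically live on a file system backed by the NVDIMM device, which this sketch does not attempt to set up.

```python
import mmap
import os
import tempfile


def write_and_reopen(path, payload, size=4096):
    """Write bytes through a memory mapping, then map the file again and read them back.

    Assumption: a regular file stands in for a PMem-backed region.
    """
    # Create a fixed-size backing file for the mapping.
    with open(path, "wb") as f:
        f.truncate(size)

    # Store the payload with plain byte-level writes, no read()/write() calls.
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), size) as region:
            region[0:len(payload)] = payload
            region.flush()  # push the change to the backing store

    # A fresh mapping (think: after a restart) still sees the data.
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), size) as region:
            return bytes(region[0:len(payload)])


if __name__ == "__main__":
    demo_path = os.path.join(tempfile.mkdtemp(), "region.bin")
    print(write_and_reopen(demo_path, b"hello pmem"))
```

The point of the sketch is the access pattern: the application addresses individual bytes in memory, and the data is still there when the region is mapped again.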

Virtual Disk Mode – This mode is available to any traditional VM and supports any hardware version, including all legacy versions. VMs are not required to be PMem-aware. You configure the VM by creating a regular SCSI virtual disk and attaching a PMem VM storage policy to the disk. The policy automatically places the disk on the PMem datastore.
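The choice between the two access modes can be summarized as a small decision rule. The sketch below is illustrative Python, not a vSphere API: `VmProfile` and `pick_pmem_mode` are hypothetical names, and it assumes that virtual hardware version 14 is the one that corresponds to ESXi 6.7.

```python
from dataclasses import dataclass

# Assumption: virtual hardware version 14 corresponds to ESXi 6.7.
MIN_DIRECT_ACCESS_HW_VERSION = 14


@dataclass
class VmProfile:
    """Hypothetical description of a VM; not a vSphere API object."""
    name: str
    hw_version: int
    guest_pmem_aware: bool


def pick_pmem_mode(vm: VmProfile) -> str:
    """Return the PMem access mode the VM qualifies for."""
    if vm.hw_version >= MIN_DIRECT_ACCESS_HW_VERSION and vm.guest_pmem_aware:
        # The guest can address NVDIMM devices directly.
        return "direct-access"
    # Any hardware version and any guest OS can still use a regular
    # virtual disk placed on the PMem datastore by the storage policy.
    return "virtual-disk"


if __name__ == "__main__":
    for vm in (
        VmProfile("db01", hw_version=14, guest_pmem_aware=True),
        VmProfile("legacy-app", hw_version=11, guest_pmem_aware=False),
    ):
        print(vm.name, "->", pick_pmem_mode(vm))
```

A VM that qualifies for direct access can of course still use Virtual Disk Mode; the function only reports the most capable mode available.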

Different Modes of PMem with vSphere

Mixed mode – For Intel Optane DC persistent memory (DCPMM) there is also a mixed mode where you can “reserve” part of the DCPMM module for use in memory mode and use the remaining capacity in Direct-Access Mode.

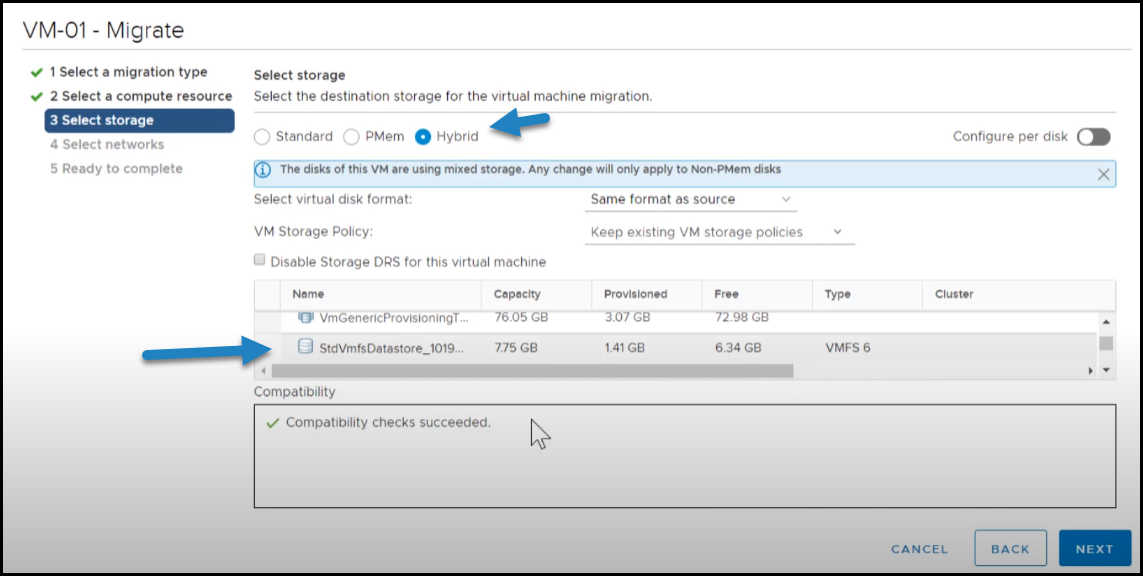

When you migrate or clone a VM that uses PMem, its data is copied to the destination, and you can choose the destination storage type for that VM.

You can select Standard, PMem or Hybrid. Below you can see that when you select Hybrid, the VM uses mixed storage (PMem and traditional storage).

Select Hybrid mode and select standard storage source

The same applies to cloning. If the destination host does not support PMem, you will not be able to finish the migration or cloning wizard.

The VM home directory with the vmx and vmware.log files cannot be placed on the PMem datastore, so they are placed on traditional storage.
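The placement rules above can be sketched as a small planning function. This is a plain Python model, not a vSphere API: `StorageType`, `plan_placement` and the file names are hypothetical. It also assumes that Hybrid keeps disks that are already on PMem on the PMem datastore and puts the rest on standard storage, so check the wizard text in your vSphere version before relying on that detail.

```python
from enum import Enum


class StorageType(Enum):
    STANDARD = "standard"
    PMEM = "pmem"
    HYBRID = "hybrid"


def plan_placement(disks, choice, dest_has_pmem):
    """Map each VM file to 'pmem' or 'standard' storage.

    disks: dict of disk name -> True if the disk currently lives on PMem.
    choice: the StorageType selected for the destination.
    dest_has_pmem: whether the destination host has a PMem datastore.
    """
    if choice is not StorageType.STANDARD and not dest_has_pmem:
        # Mirrors the wizard refusing to finish on an unsupported host.
        raise ValueError("destination host does not support PMem")

    # VM home files never go on the PMem datastore.
    plan = {"vm.vmx": "standard", "vmware.log": "standard"}
    for name, on_pmem in disks.items():
        if choice is StorageType.PMEM:
            plan[name] = "pmem"
        elif choice is StorageType.HYBRID and on_pmem:
            plan[name] = "pmem"  # assumption: PMem disks stay on PMem
        else:
            plan[name] = "standard"
    return plan


if __name__ == "__main__":
    disks = {"os.vmdk": False, "data.vmdk": True}
    for file_name, target in plan_placement(disks, StorageType.HYBRID, True).items():
        print(f"{file_name:12} -> {target}")
```

Whatever option is selected, the vmx and vmware.log entries always map to standard storage, which matches the restriction on the VM home directory.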

PMEM default storage policy

vSphere has a new policy called the PMem default storage policy. When you want to place a virtual disk on the PMem datastore, you must apply the host-local PMem default storage policy to the disk. The policy is not editable.

The policy can be applied only to virtual disks, not to all of the VM’s files. This is because the VM home files do not reside on the PMem datastore but on a traditional storage device, so you should check that those files are stored on traditional storage.

PMEM Cluster options

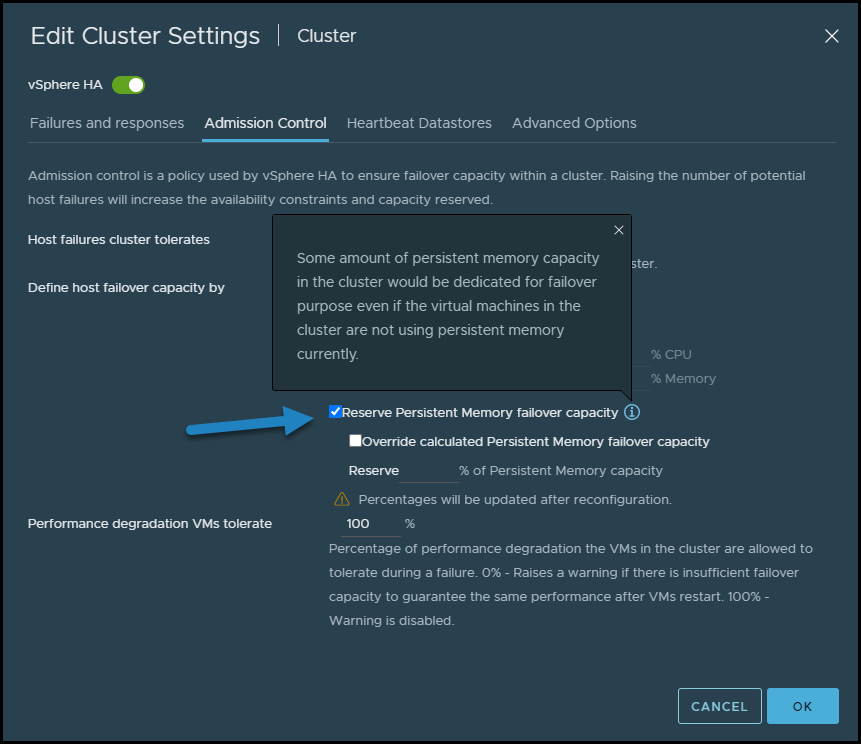

You can reserve a percentage of persistent memory as host failover capacity. This is real storage capacity that is set aside, so you must account for it when planning for a host being powered off.

Under Edit Cluster Settings you can select Admission Control to specify the number of host failures the cluster will tolerate.

There is a checkbox allowing you to reserve persistent memory failover capacity. This option specifies whether some amount of persistent memory capacity in the cluster will be dedicated to failover even if the VMs in the cluster are not using PMEM currently.
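To get a feel for what a percentage reservation costs in capacity, here is a back-of-the-envelope sketch in Python. It is plain arithmetic with hypothetical names and example numbers; how vSphere HA computes the reservation internally is not covered here, so treat it as a planning aid only.

```python
def pmem_failover_split(host_pmem_gb, reserve_percent):
    """Return (reserved_gb, usable_gb) for the cluster's PMem capacity.

    host_pmem_gb: PMem capacity of each host in the cluster, in GB.
    reserve_percent: share of the total set aside for failover (0 to 100).
    """
    if not 0 <= reserve_percent <= 100:
        raise ValueError("reserve_percent must be between 0 and 100")
    total = sum(host_pmem_gb)
    reserved = total * reserve_percent / 100
    return reserved, total - reserved


if __name__ == "__main__":
    # Hypothetical cluster: four hosts with 512 GB of PMem each, 25% reserved.
    reserved, usable = pmem_failover_split([512, 512, 512, 512], 25)
    print(f"reserved for failover: {reserved:.0f} GB, usable by VMs: {usable:.0f} GB")
```

With these example numbers, a quarter of the cluster’s PMem (the equivalent of one host) is blocked for failover and the remaining three quarters stay available to VMs.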

Reserve Persistent Memory failover capacity cluster option

Final Words

I hope this short article has helped you see the benefits of VMware vSphere PMem storage. The workflows are simple and wizard-driven, and if you have unsupported hosts, the wizard does not let you finish.

VMware Persistent Memory allows you to have nanosecond-latency datastores for some critical high-performance VMs within your organization. The vSphere 7 U2 user interface makes the PMem options easy to use.