VMware has just announced its upcoming release, vSphere 8.0. The product was announced during the VMware EXPLORE event in San Francisco, but it will only be available for download later this year.

The content discussed in this post might change in the final release of the product (GA). If any of the features we’ll talk about today end up not being available in the GA, don’t blame VMware or me. Things can change. We’ve seen this in the past.

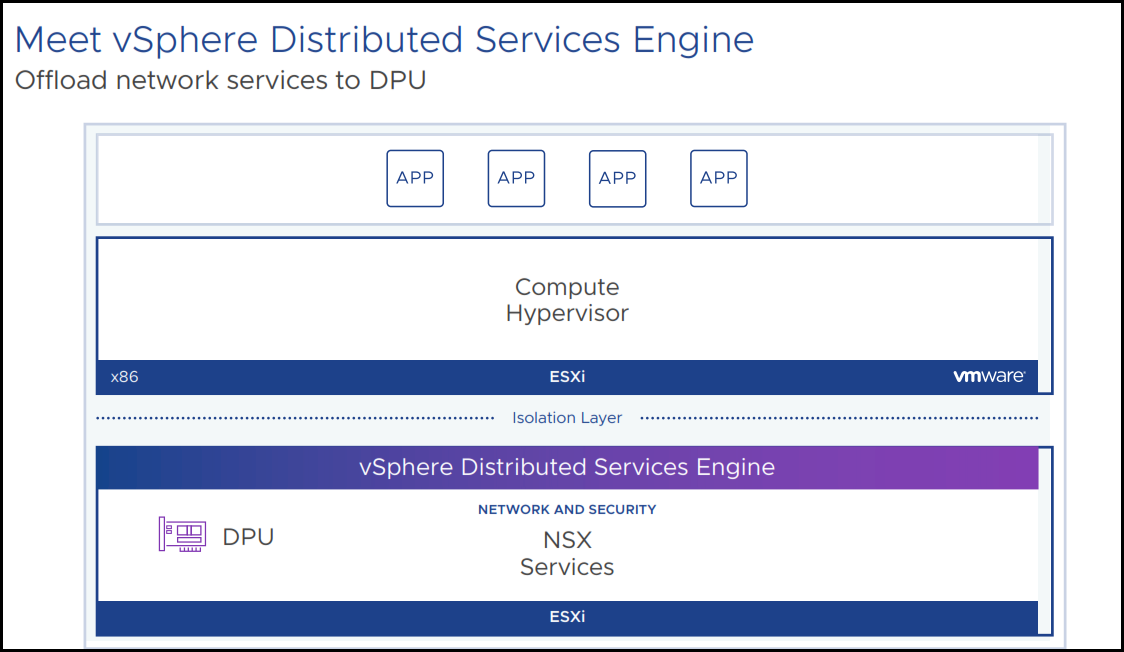

vSphere Distributed Services Engine

Formerly Project Monterey, the vSphere Distributed Services Engine improves the performance of the whole infrastructure by using Data Processing Units (DPUs) for hardware-accelerated data processing. With vSphere 8, applications will be able to benefit from those features. Admins will be able to simplify DPU lifecycle management and boost infrastructure security with NSX.

Project Monterey: “Project Monterey reimagines the virtual infrastructure as a distributed control fabric through tight integration with DPUs (Data Processing Unit – also known as SmartNICs). VMware is leading an industry wide initiative to deliver a solution for its customers by bringing together the best of breed DPU silicon (NVIDIA, Pensando Systems, Intel) and server designs (Dell Technologies, HPE, Lenovo).”

What’s DPU?

The DPU sits at the hardware layer, like any other PCIe device (graphics card, NIC…). ESXi runs at the top layer, with the DPU and NSX services underneath. A second instance of ESXi runs directly on the DPU, which allows some of the ESXi services to be offloaded to the DPU for increased performance. This technology frees CPU cycles that can then be used for running workloads.

vSphere 8 GA will support greenfield installations with NSX network offloading.

VMware Distributed Services Engine

Two ESXi instances per physical server: There are now two ESXi instances running simultaneously, one on the main x86 CPU and one on the SmartNIC. These two ESXi instances can be managed separately or as a single logical instance. CSPs providing VCF-as-a-service will want the former while enterprises using VCF as normal will prefer the latter.

The vSphere Distributed Services Engine is lifecycle managed via vSphere Lifecycle Manager. There is no difference in versions when ESXi is installed on the DPU. When you remediate the parent host that contains the DPU, the ESXi version that you remediate with is also applied to the DPU itself.

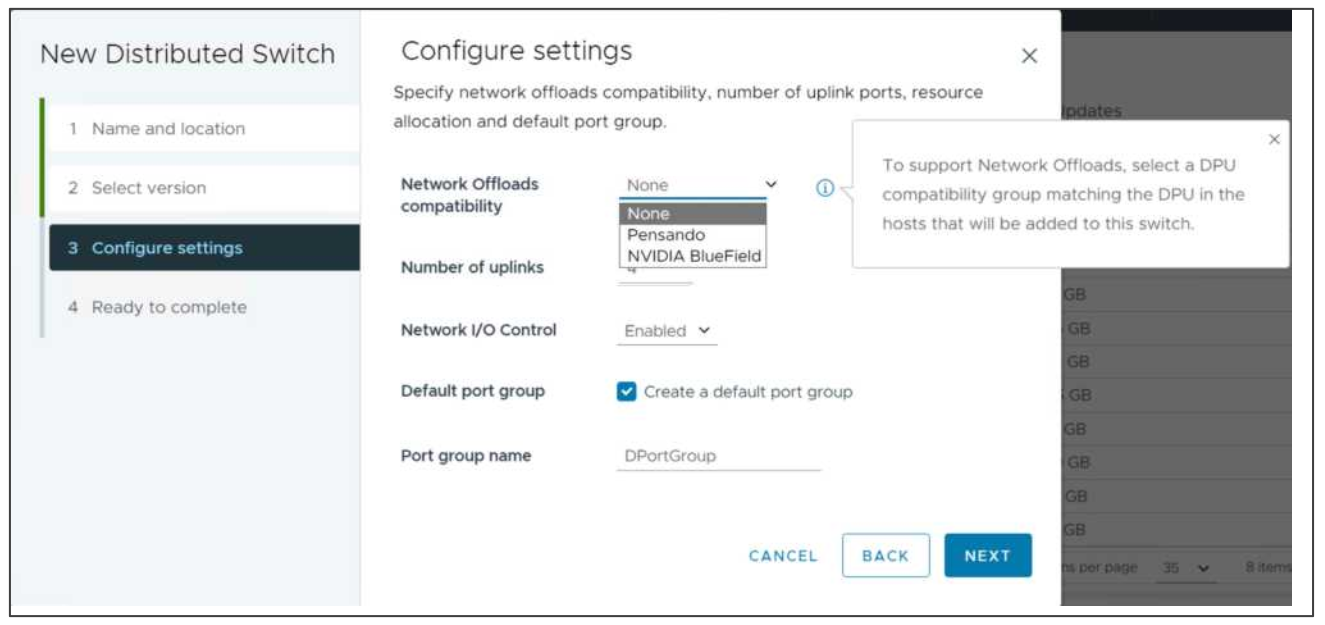

vSphere Distributed Switch version 8.0 will be introduced. It should increase network performance and enhance the visibility and observability of network traffic. It also provides the security, encryption, isolation, and protection delivered by NSX.

vSphere Distributed Switch 8.0

Supported DPUs will appear in the Network Offload Compatibility drop-down menu. Select the DPU compatibility group that matches the DPU in the hosts that will be added to this switch.

DRS and vMotion are still supported.

vSphere With Tanzu

With vSphere 8.0, VMware will release a unified version of VMware Tanzu. Until now, Tanzu came in several different packages, but VMware wants to make things more uniform within the Tanzu and container ecosystem. There will be a single TKG instance available from vSphere 8.0 onwards.

New Features:

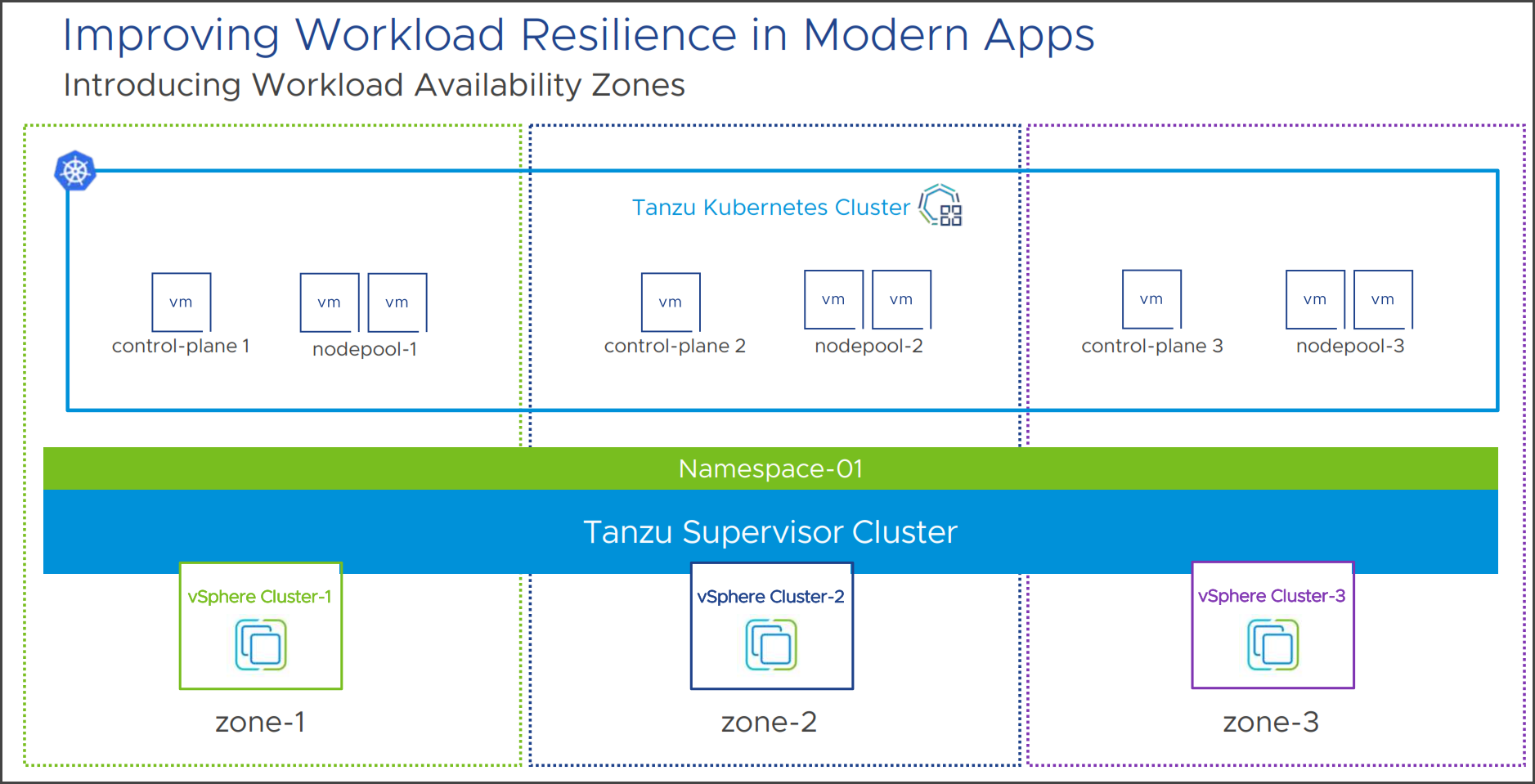

Workload Availability Zones – This feature isolates workloads across vSphere clusters. Supervisor clusters and Tanzu Kubernetes clusters can be deployed across these zones in order to increase availability. The feature ensures that the worker nodes do not share the same vSphere cluster.

VMware Workload Availability Zones

You’re no longer locked into a single vSphere cluster: your vSphere namespace can span availability zones, so workloads can be deployed across zones.

vSphere 8.0 GA will require at least 3 availability zones. During workload management activation, you’ll be able to choose between the previous cluster-based option and, if you have zones set up, the Workload Availability Zones option. Each zone will have a one-to-one relationship with a vSphere cluster, but VMware is looking to expand this in the next release.
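To make the zone rules above more concrete, here is a minimal Python sketch. It is not VMware's placement logic: the function name `spread_nodes`, the zone and cluster names, and the round-robin strategy are all assumptions made for illustration. It only encodes the two constraints mentioned in this post (at least 3 zones, one vSphere cluster per zone).

```python
from itertools import cycle

MIN_ZONES = 3  # the post states that vSphere 8.0 GA requires at least 3 zones


def spread_nodes(node_names, zone_to_cluster):
    """Assign worker nodes to zones round-robin (hypothetical strategy)."""
    if len(zone_to_cluster) < MIN_ZONES:
        raise ValueError(f"need at least {MIN_ZONES} zones, got {len(zone_to_cluster)}")
    # One-to-one relationship: no vSphere cluster may back two zones.
    if len(set(zone_to_cluster.values())) != len(zone_to_cluster):
        raise ValueError("each zone must map to its own vSphere cluster")
    zones = cycle(sorted(zone_to_cluster))
    return {node: next(zones) for node in node_names}


# Hypothetical zone and cluster names.
zones = {"zone-a": "cluster-01", "zone-b": "cluster-02", "zone-c": "cluster-03"}
placement = spread_nodes(["worker-0", "worker-1", "worker-2"], zones)
for node, zone in placement.items():
    print(node, "->", zone, "on", zones[zone])
```

With three workers and three zones, every worker lands on a different vSphere cluster. With more workers than zones, this simple sketch starts reusing zones.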

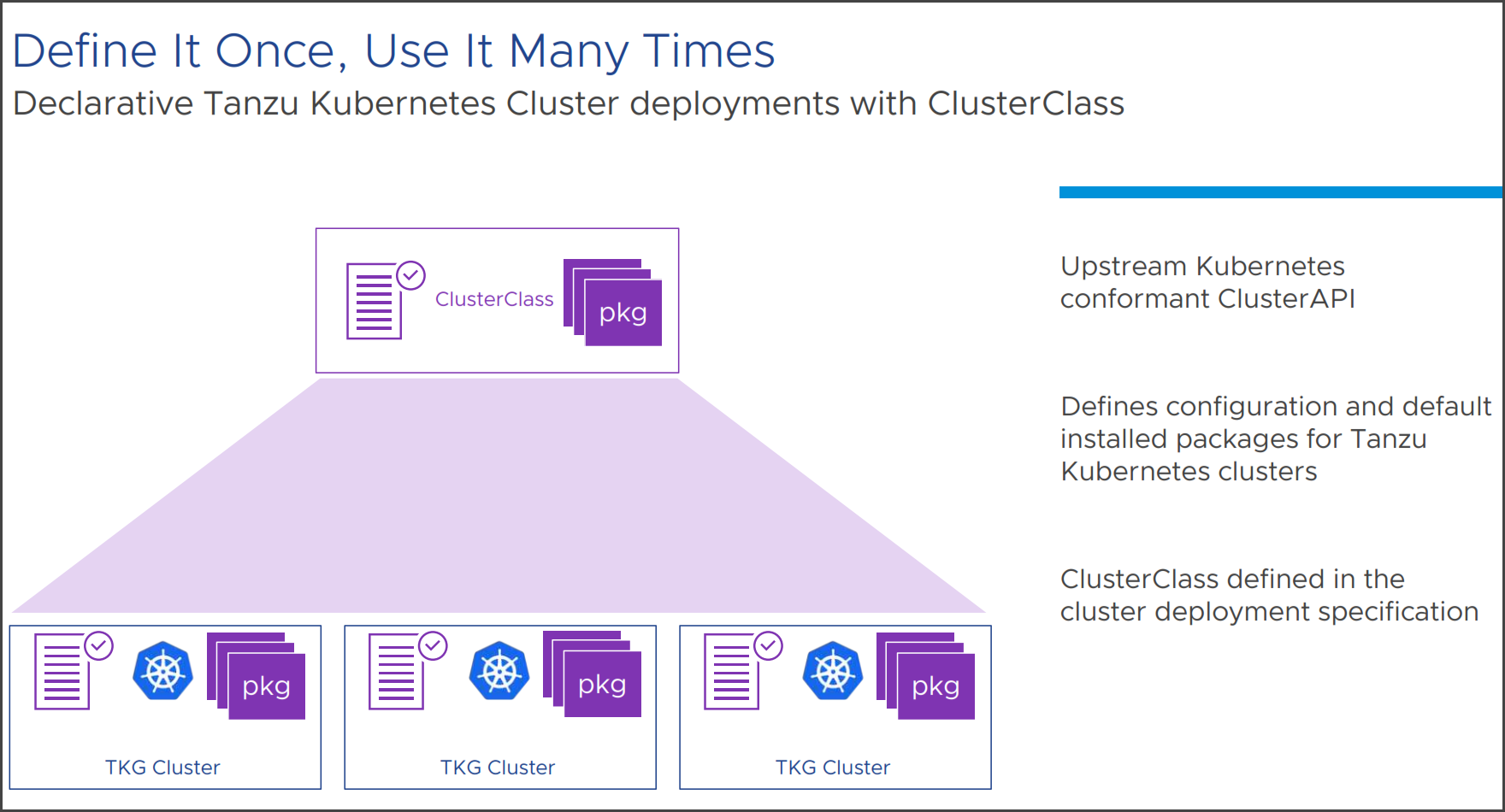

ClusterClass – part of the open-source Cluster API project, used to declare cluster specifications as well as pre-installed packages. You can define the shape of your cluster once and reuse it many times, abstracting away the complexities and internals of a Kubernetes cluster.

Quote from Kubernetes site:

ClusterClass, at its heart, is a collection of Cluster and Machine templates. You can use it as a “stamp” that can be leveraged to create many clusters of a similar shape.

VMware ClusterClass

The platform team can decide which packages shall be deployed at cluster creation.
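The "stamp" idea can be illustrated with a short Python sketch. This is not the Cluster API or the ClusterClass schema: `ClusterTemplate`, `ClusterSpec`, and `stamp` are hypothetical names, and the fields are deliberately simplified. It only shows the pattern of defining a shape and a package list once and reusing it for many clusters.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ClusterTemplate:
    """Hypothetical stand-in for a ClusterClass: a shape plus packages."""
    control_plane_nodes: int
    worker_nodes: int
    packages: tuple = ()


@dataclass(frozen=True)
class ClusterSpec:
    """A concrete cluster produced from a template."""
    name: str
    control_plane_nodes: int
    worker_nodes: int
    packages: tuple


def stamp(template, name, **overrides):
    """Create a cluster spec from the template, overriding selected fields."""
    shaped = replace(template, **overrides)
    return ClusterSpec(name, shaped.control_plane_nodes, shaped.worker_nodes, shaped.packages)


# The platform team defines the shape and the packages once...
standard = ClusterTemplate(control_plane_nodes=3, worker_nodes=5, packages=("contour", "prometheus"))

# ...and reuses it for several clusters, adjusting only what differs.
dev = stamp(standard, "dev")
prod = stamp(standard, "prod", worker_nodes=10)
print(dev)
print(prod)
```

Both clusters inherit the same package list from the template, and only the production cluster changes its worker count.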

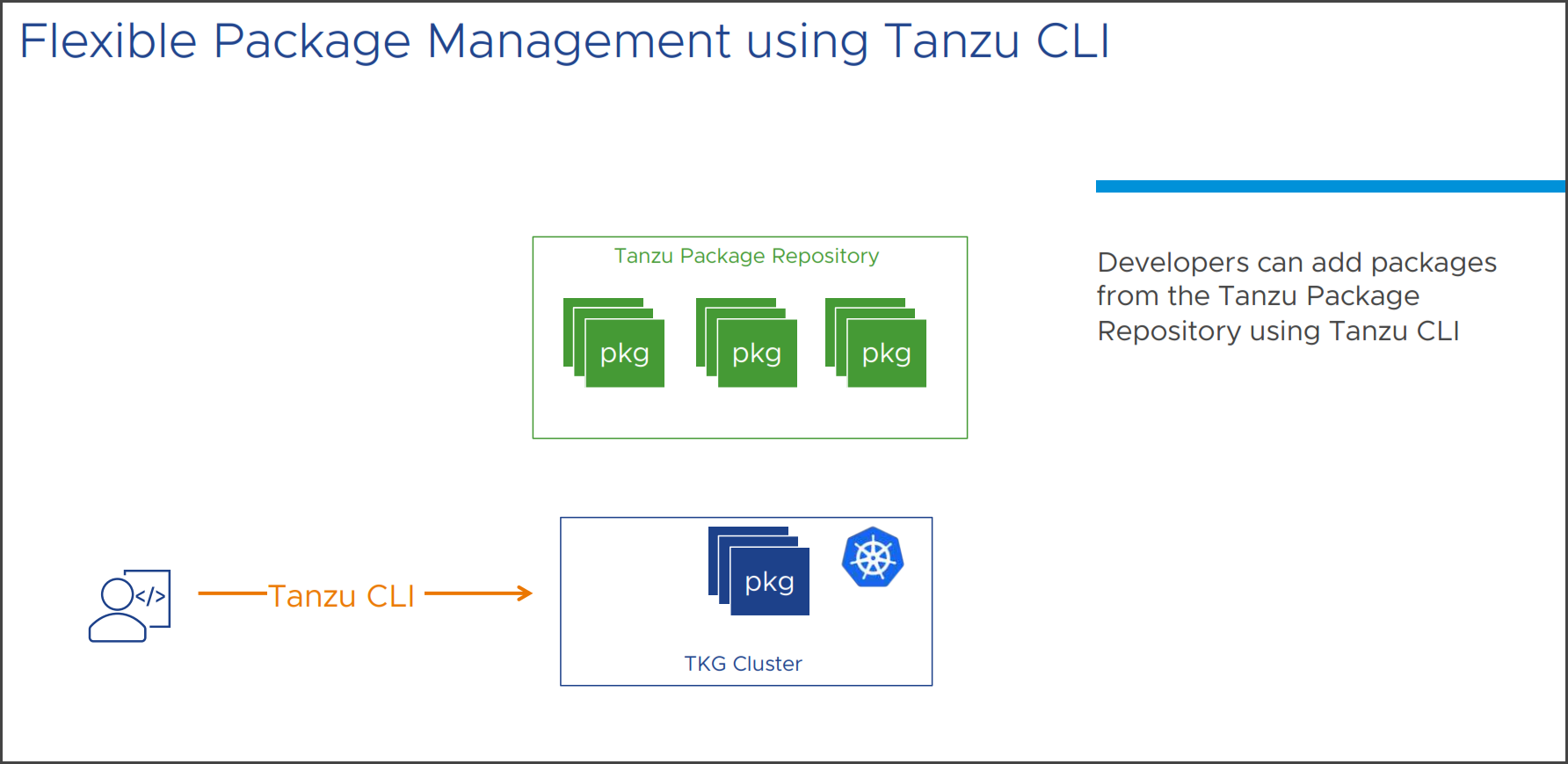

After cluster deployment, you’ll be able to add additional packages from the Tanzu Package Repository using the Tanzu CLI. The Tanzu standard repository doesn’t go away. Developers will still be able to use it and deploy packages via the Tanzu CLI (e.g. Contour, Grafana, Prometheus, or external DNS…). These are all managed as add-ons through the Tanzu CLI.

VMware Tanzu CLI

Customize PhotonOS and Ubuntu VMs – these can now be customized and saved in a content library for use with Tanzu Kubernetes clusters.

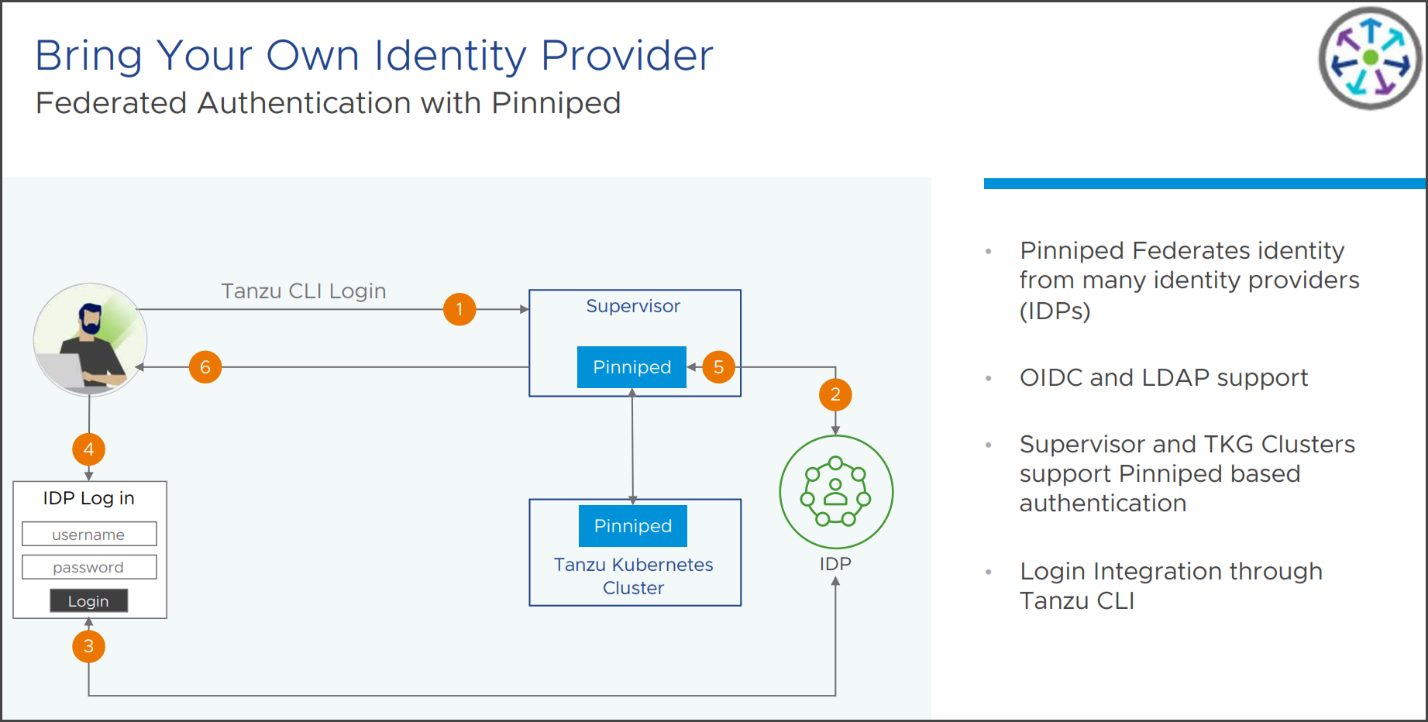

Pinniped Integration – Pinniped is integrated into the supervisor cluster and Tanzu Kubernetes clusters (TKG). It supports LDAP and OIDC federated authentication. This is an alternative to depending on vCenter SSO (which will still be possible to use). Supervisor and TKG clusters have direct access to OIDC and LDAP without relying on vCenter SSO.

Pinniped pods are automatically deployed on the supervisor and TKG in order to support the authentication.

Pinniped authentication

Lifecycle Management

The DPU is lifecycle managed. You can stage patches and remediate them. VMware supports parallel remediation, vSphere Configuration Profiles (in tech preview), and standalone host support (via API).

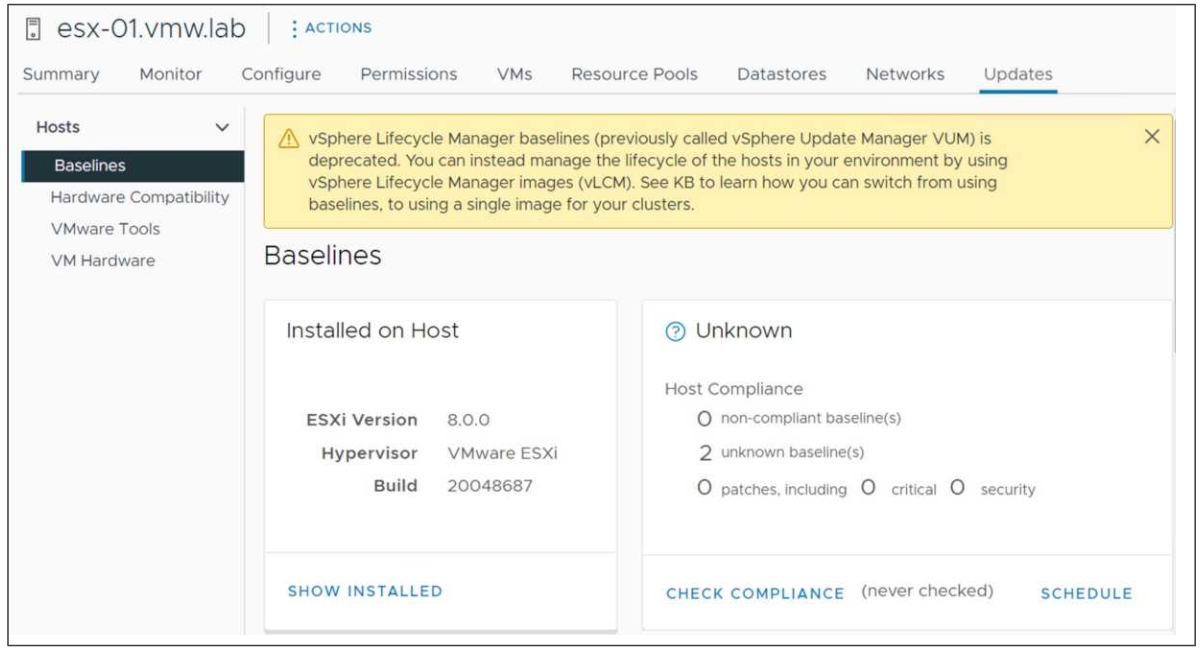

Also, vSphere 8 is the last version of vSphere that will support baselines.

vSphere 8 last version with baselines

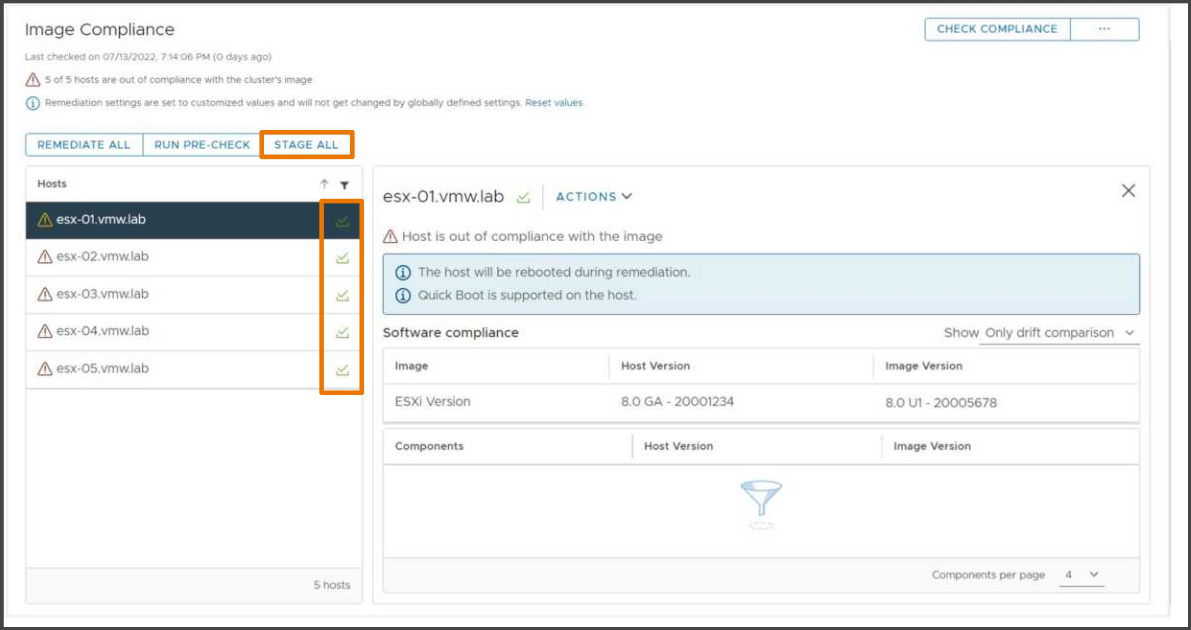

Staging cluster image updates will speed up the remediation process. That’s why VMware is introducing staging for images. You will be able to stage updates the same way you used to do with baselines.

You can pre-stage all the updates to all hosts within a cluster, which reduces the time needed for remediation as much as possible. You also reduce the potential risk of a remediation failure caused by a live image transfer.

Firmware payloads can also be staged, but this needs to be supported by the hardware manufacturers.

Staging cluster images in vSphere 8

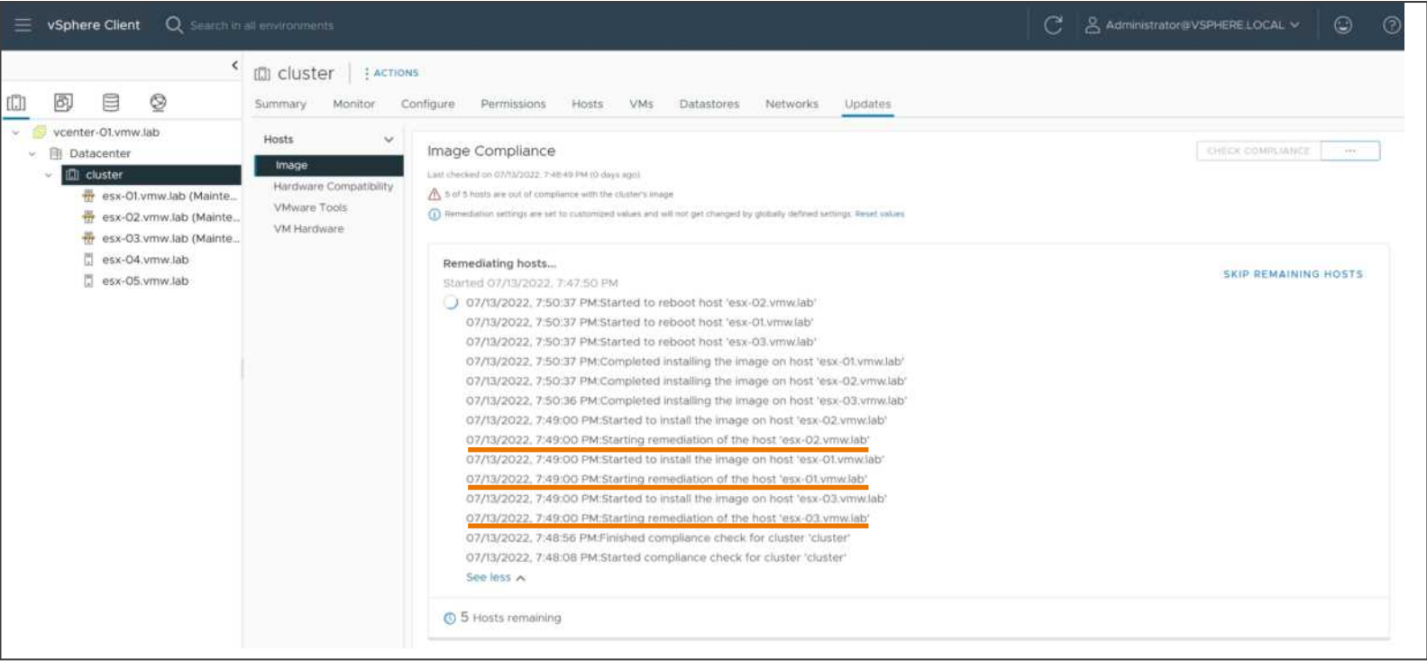

Parallel remediation will speed up vSphere cluster updates. You’ll be able to remediate multiple hosts in parallel, which will reduce the lifecycle operation time of a cluster. The vSphere admin decides how many hosts will be remediated in parallel by placing the desired hosts into maintenance mode. Only the hosts in maintenance mode will be remediated in parallel.
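The selection rule described above (only hosts already in maintenance mode are remediated, all at the same time) can be sketched in a few lines of Python. This is an illustration only and does not call any vSphere API: the `Host` class, the `remediate` placeholder, and the host names and versions are assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    in_maintenance_mode: bool
    image_version: str


def remediate(host, target_version):
    """Placeholder for the real remediation work on one host."""
    host.image_version = target_version
    return host.name


def remediate_in_parallel(hosts, target_version):
    """Remediate only the hosts the admin has placed in maintenance mode."""
    eligible = [h for h in hosts if h.in_maintenance_mode]
    if not eligible:
        return []
    # One worker per eligible host, so they are all processed concurrently.
    with ThreadPoolExecutor(max_workers=len(eligible)) as pool:
        return list(pool.map(lambda h: remediate(h, target_version), eligible))


# Hypothetical cluster: esx-02 stays in production and is skipped.
hosts = [
    Host("esx-01", True, "7.0"),
    Host("esx-02", False, "7.0"),
    Host("esx-03", True, "7.0"),
]
done = remediate_in_parallel(hosts, "8.0")
print("remediated:", done)
```

The admin controls the degree of parallelism simply by choosing how many hosts enter maintenance mode before the run starts.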

Parallel remediation

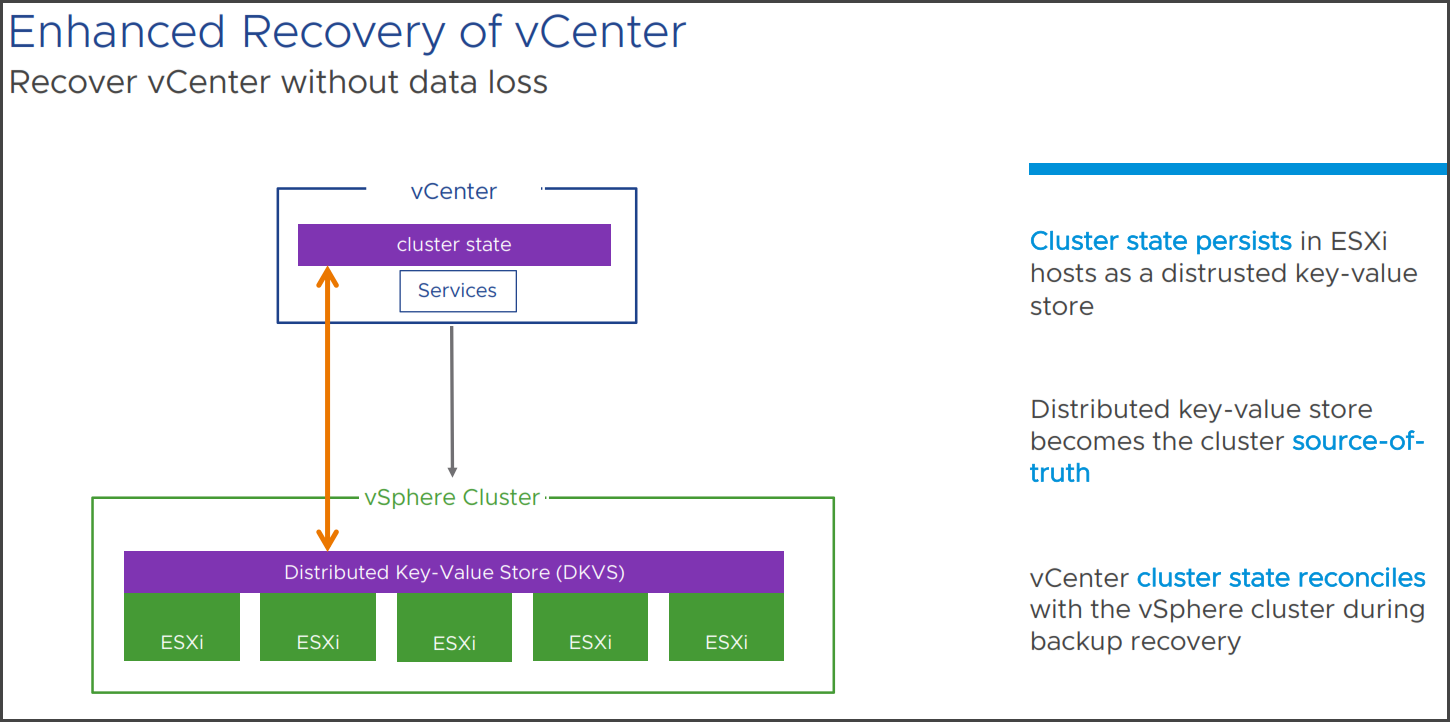

Enhanced Recovery of vCenter

VMware introduces a new concept called Distributed Key-Value Store (DKVS). It resides on the ESXi hosts and is distributed among all the hosts within the cluster. It saves cluster information and records the current cluster state.

It is important to note that we need up-to-date information about the cluster state. The problem with a vCenter failure is that if, for example, vCenter Server is restored from backup, you won’t have up-to-date information, as your file-level backups might be configured to run daily or at an interval of several days (or weeks). VMware wants the cluster to be the source of truth, not vCenter.

So, when vCenter is restored, the DKVS will feed the missing information back to vCenter from the real state of the cluster (not from a backup made several days or weeks ago). vCenter will query the DKVS to check whether there is a difference in the cluster configuration and replace its own values with those from the DKVS.

vCenter enhanced recovery

At GA, the system will be able to reconcile host-cluster membership. Other enhancements are planned for future releases of vSphere (additional configuration will be added as well).
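Here is a rough Python sketch of the reconciliation idea for host-cluster membership, where the cluster's own record wins over what was restored from backup. The data layout (a dictionary of cluster names to sets of host names) and the function name `reconcile_membership` are assumptions for illustration and say nothing about how the DKVS is actually implemented.

```python
def reconcile_membership(vcenter_inventory, dkvs_state):
    """Return (reconciled inventory, changes), treating the DKVS as source of truth.

    Both arguments map a cluster name to a set of host names.
    """
    reconciled = {}
    changes = {}
    for cluster in sorted(set(vcenter_inventory) | set(dkvs_state)):
        restored = vcenter_inventory.get(cluster, set())
        # If the DKVS has no record for a cluster, keep what vCenter restored.
        actual = dkvs_state.get(cluster, restored)
        reconciled[cluster] = set(actual)
        if actual != restored:
            changes[cluster] = {
                "added": sorted(actual - restored),
                "removed": sorted(restored - actual),
            }
    return reconciled, changes


# vCenter restored from an old backup: esx-03 joined the cluster after it was taken.
from_backup = {"cluster-01": {"esx-01", "esx-02"}}
from_dkvs = {"cluster-01": {"esx-01", "esx-02", "esx-03"}}

inventory, changes = reconcile_membership(from_backup, from_dkvs)
print(changes)
```

In this example the restored vCenter learns about the host that was added after the backup, without the admin having to re-add it manually.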

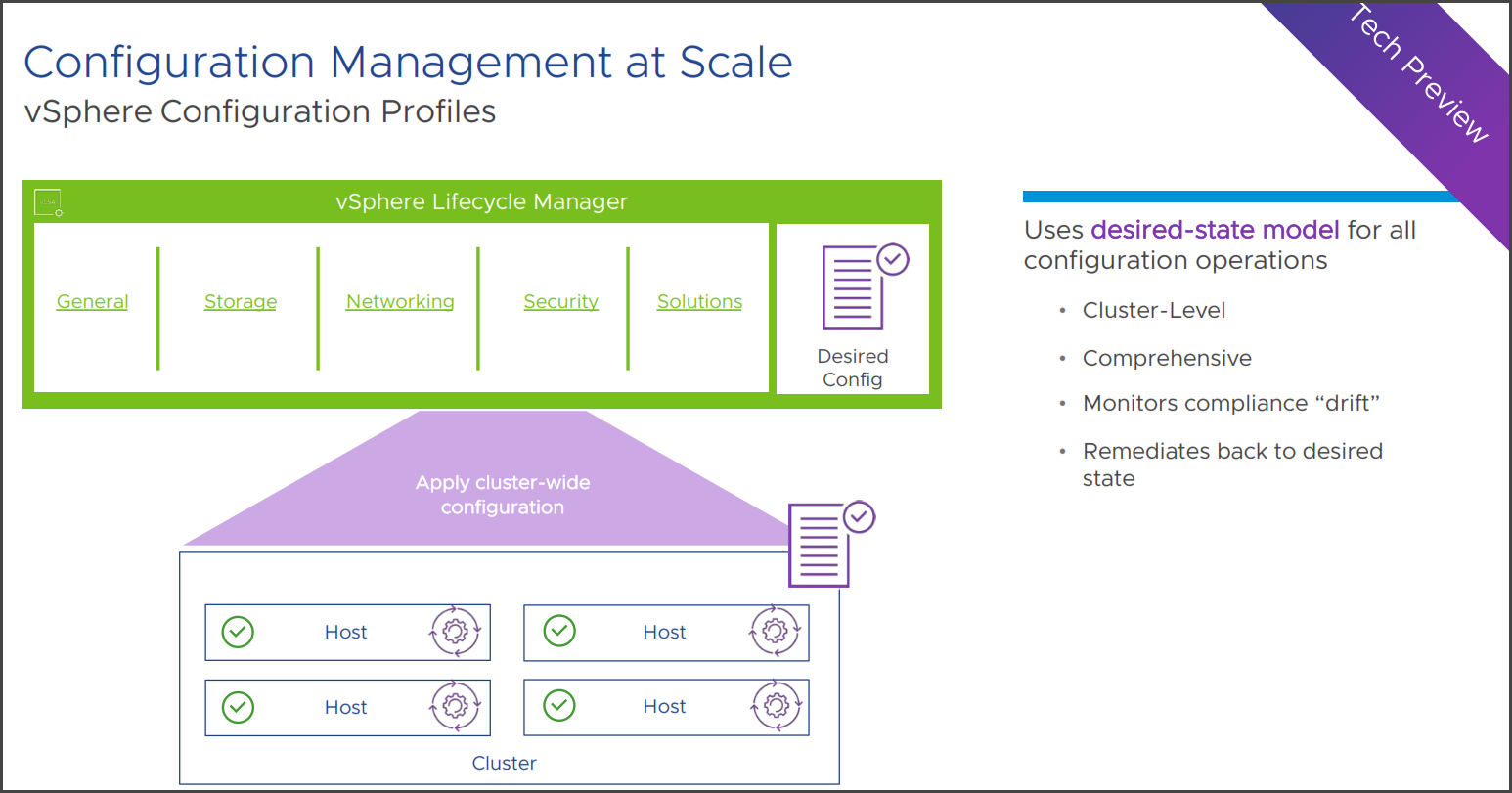

vSphere Configuration Profiles

vSphere 8 will introduce a tech preview of vSphere Configuration Profiles, the next generation of cluster configuration management. In the future, it should completely replace host profiles. For now, host profiles remain available and fully supported in vSphere 8, alongside this first release of vSphere Configuration Profiles (tech preview).

Instead of working with profiles (where you define a profile and then attach/apply it at the host or cluster level), with vSphere Configuration Profiles you define the configuration on the cluster object itself, and any host added to that cluster gets that configuration pushed down.

The concept is similar to the image-based update management in vSphere Lifecycle Manager. With vSphere Configuration Profiles, vSphere Lifecycle Manager is also used to define the cluster configuration, so all hosts within the cluster have a unified configuration.

When there is a drift in a configuration (it’s constantly monitored), the admin can remediate the configuration drift.
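The desired-state model and drift check can be illustrated with a small Python sketch. It does not reflect the real vSphere Configuration Profiles schema or API: the setting names (`ntp_server`, `ssh_enabled`), the host names, and both functions are made up for the example.

```python
def find_drift(desired, host_configs):
    """Return {host: {setting: (desired_value, actual_value)}} for drifted hosts."""
    drift = {}
    for host, actual in host_configs.items():
        diffs = {
            key: (want, actual.get(key))
            for key, want in desired.items()
            if actual.get(key) != want
        }
        if diffs:
            drift[host] = diffs
    return drift


def remediate_drift(desired, host_configs):
    """Push the cluster-level settings down to every host."""
    for actual in host_configs.values():
        actual.update(desired)


# Hypothetical cluster-level configuration and per-host state.
cluster_config = {"ntp_server": "ntp.example.com", "ssh_enabled": False}
host_state = {
    "esx-01": {"ntp_server": "ntp.example.com", "ssh_enabled": False},
    "esx-02": {"ntp_server": "ntp.example.com", "ssh_enabled": True},
}

drift_before = find_drift(cluster_config, host_state)
print("drift:", drift_before)
remediate_drift(cluster_config, host_state)
print("after remediation:", find_drift(cluster_config, host_state))
```

The key point is that the configuration lives on the cluster, so a drift report is just a comparison of each host against that single definition.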

This is still a tech preview. It is not advised to use it in production yet! Use it in a lab only…

vSphere 8 Configuration Profiles

Wrap Up

VMware is introducing a lot of new features in vSphere 8. The days and weeks to come will bring a lot of innovations from VMware that will shape the datacenters of today and tomorrow.

This post is one of the first posts about vSphere 8 and it won’t be the only one. Stay tuned for more.