Introduction

Among recent trends and buzzwords, one of the latest concepts is composable infrastructure. But what does the term really stand for: just another fancy word (because "cloud" has been used far too often), HCI 2.0, Blade 2.0, or something more?

In this post, I’m going to take a closer look at composable infrastructures to understand why they are such a hot topic.

What came before composable infrastructures?

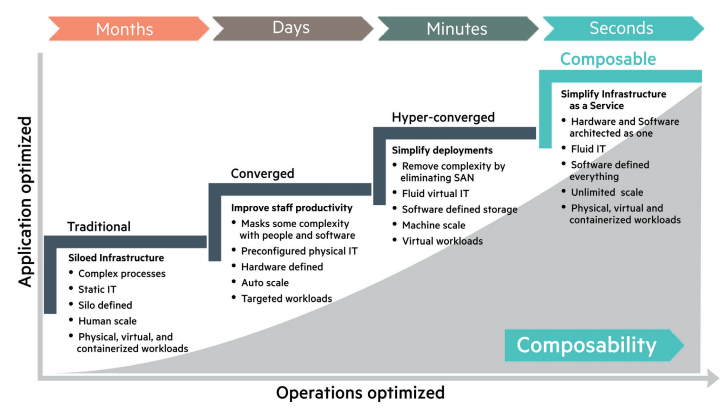

Moving from the mainframe to the client-server model is something from the past. Since then, server-based environments have evolved quickly, giving rise to different types such as converged infrastructures (CI) and hyperconverged infrastructures (HCI). Let’s take a closer look at these concepts first.

Converged infrastructures operate by grouping multiple information technology (IT) components into a single optimized package; those components may include servers, storage arrays, networking equipment, and software for IT infrastructure management, automation, and orchestration.

In the real world, converged systems have different names, like blade systems, modular systems, block, rack, you name it.

In hyperconverged infrastructures, we also integrate the virtualization layer, and everything is managed by a software-defined solution. Note that hyperconverged systems are not simply an evolution of converged ones, just as converged solutions are not simply an evolution of traditional server stacks.

There are different approaches to presenting resources to the “consumer layer” (i.e., the virtualization, OS, or application layer), and HCIs are becoming more and more popular. Nevertheless, they are not yet a killer solution that can replace everything else.

State of the tech

Moving forward, different companies have tried to define the next evolution step – the composable infrastructure.

DellEMC

DellEMC built its converged solutions first on the Dell PowerEdge M1000e, probably Dell’s most durable chassis (introduced with the 10th generation of PowerEdge servers), and then on the Dell PowerEdge VRTX and Dell PowerEdge FX platforms. More recently, DellEMC launched the replacement for the M1000e: Dell EMC PowerEdge MX.

DellEMC PowerEdge MX is a new high-performance modular infrastructure designed to support a wide variety of traditional and emerging datacenter workloads. PowerEdge MX offers the first modular infrastructure architecture designed to adapt easily to future technologies and to server disaggregation through its unique kinetic infrastructure. It not only brings the benefits of a modular design but also extends configuration flexibility down to individual storage devices and, in the future, all the way to memory-centric devices (DRAM, storage class memory, GPUs, FPGAs…). Kinetic infrastructure makes it possible to assign the right resources to specific workloads and to change resource distribution dynamically; businesses are always changing, aren’t they?

NetApp

NetApp has built a new HCI solution around the idea of evolving the HCI family (as discussed in this blog post: https://vinfrastructure.it/2018/03/whats-really-define-an-hci-solution/).

HPE

HPE was one of the first companies to introduce the term composable infrastructure. In its materials, HPE provides a thorough analysis of the problems and explains how this approach can solve them.

Also, HPE defined composable infrastructures as the evolution of hyperconverged environments:

From the HPE Composable Infrastructure document (https://h20195.www2.hpe.com/V2/getpdf.aspx/4AA5-8813ENW.pdf)

So, what makes a solution “composable”?

Are composable infrastructures just a new stage of HCI, CI, or modular solutions? Not really… you need more to have a truly composable environment.

It’s like the early days of cloud computing: we don’t yet have a formal definition that precisely describes a composable solution.

HPE offers three core principles of the composable environment:

- Fluid resource pools provide a single pool of compute, storage, and networking resources abstracted from the underlying infrastructure. This approach makes it possible to meet each application’s changing needs by composing and recomposing single blocks of disaggregated resources.

- Software-defined intelligence provides a single management interface to integrate operational silos and eliminate complexity. Workload templates speed up deployment, and frictionless changes eliminate downtime.

- Unified API provides a single interface to discover, search, inventory, configure, provision, update, and diagnose a composable infrastructure. A single line of code enables full infrastructure programmability and can provide the environment required for an application.

Note that composable infrastructures are not only about software: you can have a software-defined datacenter (SDDC) that never ends up being a composable solution. You also need hardware whose resources can be disaggregated, pooled together, and allocated to the proper consumer. And you need fast (very fast) fabrics to make these pools of resources usable like local resources.

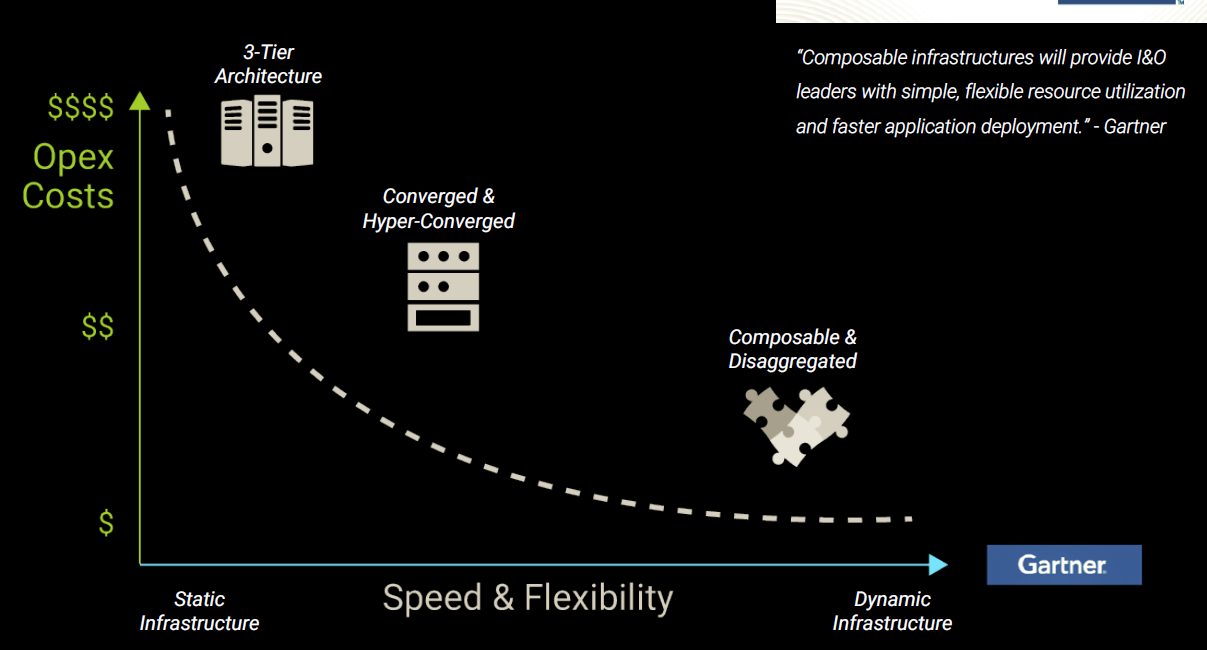

We can simplify it by saying that it’s all about building simpler, more scalable solutions with more flexible resource utilization, which must also provide faster application deployment at a lower cost.

Costs are very important… but you should deploy a composable solution not just because it is affordable, but because it can also optimize resource utilization.

What about the naming?

One more time: we still don’t have a formal definition of composable infrastructures. Nevertheless, it would be nice to settle on a simplified informal definition, as happened with cloud computing. It could be a catchy acronym like AAA (Abstraction, Agile, Automation), or you can come up with something closer to what composable infrastructures mean for you. In my opinion, good acronyms could also be CCC (Composable, Consumable, Cost-effective) or DDD (Disaggregate, Distribute, Deliver). I prefer DDD because it describes exactly how composable infrastructures work: first, all local resources are disaggregated; then, those resources are distributed and shared across a fabric; finally, they are delivered to the proper “consumers”.
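The DDD flow can be illustrated with a toy model. This is only a sketch under simplifying assumptions: the `Device` and `FabricPool` classes and the `disaggregate`, `deliver`, and `release` methods are hypothetical names, and the part a real product actually has to do (programming the PCIe or network fabric so the devices really appear local) is not modeled at all. What it does show is that a consumer can be built from whole devices that physically live in different nodes, and that those devices go back to the pool when the consumer is released.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Device:
    """A physical resource that used to be local to one node (hypothetical model)."""
    kind: str       # e.g. "cpu", "gpu", "nvme"
    device_id: str
    home_node: str  # chassis/sled where the device physically sits


@dataclass
class FabricPool:
    """Disaggregated devices shared over a fabric (illustrative only)."""
    free: list[Device] = field(default_factory=list)
    assigned: dict[str, list[Device]] = field(default_factory=dict)

    def disaggregate(self, node: str, inventory: dict[str, int]) -> None:
        # Disaggregate: turn a node's local devices into entries in the shared pool.
        for kind, count in inventory.items():
            for i in range(count):
                self.free.append(Device(kind, f"{node}-{kind}{i}", node))

    def deliver(self, consumer: str, request: dict[str, int]) -> list[Device]:
        # Distribute + deliver: pick whole devices from any node and bind them to
        # one consumer. Nothing is taken from the pool unless the whole request fits.
        picked: list[Device] = []
        for kind, count in request.items():
            matches = [d for d in self.free if d.kind == kind][:count]
            if len(matches) < count:
                raise RuntimeError(f"pool has only {len(matches)} free {kind}")
            picked.extend(matches)
        for d in picked:
            self.free.remove(d)
        self.assigned.setdefault(consumer, []).extend(picked)
        return picked

    def release(self, consumer: str) -> None:
        # Recompose: return the consumer's devices to the pool for reuse.
        self.free.extend(self.assigned.pop(consumer, []))


if __name__ == "__main__":
    pool = FabricPool()
    pool.disaggregate("sled1", {"cpu": 2, "gpu": 1})
    pool.disaggregate("sled2", {"gpu": 3, "nvme": 4})
    server = pool.deliver("ml-training", {"cpu": 1, "gpu": 4, "nvme": 2})
    print(sorted({d.home_node for d in server}))  # ['sled1', 'sled2']
    print(len(pool.free))                         # 3
    pool.release("ml-training")
```

The four GPUs of the composed "ml-training" server come from two different sleds, which is exactly what a single traditional server cannot offer.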

Which fabric to use?

The fabric is as important an element of composable environments as the hardware, because we need to deliver those resources with the same (or very similar) performance as local ones.

To do so, we first need a lot of bandwidth and very low latency. Network-based fabrics like InfiniBand or 100 GbE can work fine… but don’t forget about PCIe! These fabrics are at least good enough for resources like SSDs, NVMe drives, and GPUs, for which some interesting solutions that can “compose” those kinds of resources already exist.
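A quick back-of-the-envelope comparison helps explain why PCIe is attractive for composing local-class devices. The sketch below computes nominal per-direction bandwidth only, assuming 100 Gb/s of data rate for 100 GbE and PCIe’s 128b/130b line code at 8 GT/s (PCIe 3.0) and 16 GT/s (PCIe 4.0) per lane. It deliberately ignores protocol headers, flow control, and latency, which matter just as much in practice.

```python
# Nominal per-direction bandwidth of some fabrics, in gigabytes per second.
# Assumption: only signaling rate and line-code overhead are counted.


def ethernet_gbytes_per_s(gbit_per_s: float) -> float:
    # Ethernet speeds such as 100GbE are quoted as data rate, so just convert bits to bytes.
    return gbit_per_s / 8


def pcie_gbytes_per_s(gt_per_s: float, lanes: int, payload_bits: int, line_bits: int) -> float:
    # Each lane carries gt_per_s gigatransfers/s; the line code spends
    # (line_bits - payload_bits) bits of every block on encoding overhead.
    return gt_per_s * lanes * payload_bits / line_bits / 8


if __name__ == "__main__":
    print(f"100GbE:       {ethernet_gbytes_per_s(100):.2f} GB/s")           # 12.50 GB/s
    print(f"PCIe 3.0 x16: {pcie_gbytes_per_s(8, 16, 128, 130):.2f} GB/s")   # 15.75 GB/s
    print(f"PCIe 4.0 x16: {pcie_gbytes_per_s(16, 16, 128, 130):.2f} GB/s")  # 31.51 GB/s
```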

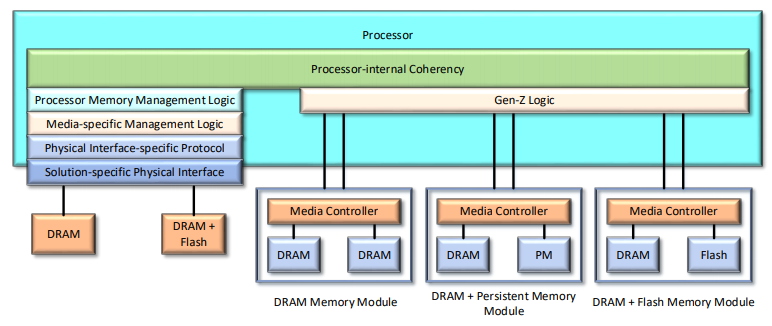

What about primary memory (and PMEM) or CPUs? A good solution may be Gen-Z (https://genzconsortium.org/), a new data-access technology designed to provide high-speed, low-latency, memory-semantic access to data and devices via direct-attached, switched, or fabric topologies. A Gen-Z fabric uses memory-semantic communications to move data between the memory of different components with minimal overhead.

Memory-semantic communications are extremely efficient and simple, which is critical for optimal performance and power consumption. Each Gen-Z component can support up to 2^64 bytes of addressable memory.

http://genzconsortium.org/wp-content/uploads/2019/03/Gen-Z-DRAM-PM-Theory-of-Operation-WP.pdf
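To put a 2^64-byte per-component address space into perspective, here is the plain arithmetic, using binary units (1 EiB = 2^60 bytes, 1 TiB = 2^40 bytes):

```python
# How large is a 64-bit (2^64-byte) address space in familiar units?
ADDRESS_BITS = 64
addressable_bytes = 2 ** ADDRESS_BITS

EIB = 2 ** 60  # one exbibyte
TIB = 2 ** 40  # one tebibyte

print(addressable_bytes)         # 18446744073709551616
print(addressable_bytes // EIB)  # 16 -> 16 EiB per component
print(addressable_bytes // TIB)  # 16777216 TiB
```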

Gen-Z components employ low-latency read and write operations to access data directly, and use a variety of advanced operations to move data with minimal application or processor involvement. Gen-Z delivers maximum performance without sacrificing flexibility and can be supported by unmodified operating systems and application middleware. It also supports co-packaged solutions, cache coherency, PCI and PCIe technology, atomic operations, and collective operations.

What’s the catch?

The idea behind composable infrastructures is good, but how can it be implemented, and how can it become usable rather than being confined to a single vendor’s stack?

Gen-Z is quite promising: it allows business and technology leaders to overcome the current challenges with the existing computer architectures and provides better opportunities for innovative solutions that will be open, efficient, simple, yet cost-effective.

However, what about other possible standards, like NVMe over Fabrics (NVMe-oF)? Will Gen-Z replace them, or will some other standard be established to share resources effectively? And, just as important: will Gen-Z also be able to share computing resources, or will those resources always remain local? Who knows…

Who needs composable infrastructure?

Is composable infrastructure appropriate for all use cases or all users? Well, that is like asking whether HCI fits all use cases or is the right solution for every customer. It really depends on customer needs; in some cases, other solutions may work better.

But, I’d like to mention some interesting use cases where composable infrastructure can be more effective:

- Bare-metal cloud infrastructures with more flexibility, better resource utilization, and lower TCO

- Applications related to AI or ML, where you may need dynamic resource allocation for each stage of the AI workflow. Composable infrastructures will enable GPU peer-to-peer (P2P) communication at scale and dynamic GPU reallocation/sharing.

- HPC environments where you may need massive scale-outs and very dynamic resource management.

- Legacy applications that do not scale out well and may instead need to scale up significantly.

- Machine containers deployed with predefined hardware containers.

- …

Composability and virtualization

Are composability and virtualization just two different approaches to manage resources?

The core principle of virtualization is partitioning the physical resources of a host among VMs; however, you cannot aggregate resources from multiple hosts into a single VM.

With composability, you can disaggregate and reallocate resources however you need, but you don’t have fine-grained control over them. For example, you cannot move a single core from one system to another.
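The two granularity limits can be contrasted with a deliberately simplified model. Both functions are hypothetical illustrations, not any product’s behavior: `vm_fits` assumes a VM must fit entirely inside one host’s free cores, and `composed_devices` assumes the composable layer hands out whole devices only, ignoring technologies that share one device among several consumers.

```python
import math


def vm_fits(host_free_cores: list[int], vm_cores: int) -> bool:
    # Virtualization partitions ONE host: the VM must fit inside a single host,
    # even if the cluster as a whole has enough free cores.
    return any(free >= vm_cores for free in host_free_cores)


def composed_devices(demand: float, device_capacity: float) -> int:
    # Composability assigns WHOLE devices from the shared pool, so demand is
    # rounded up to device granularity; a fraction of a device cannot be moved.
    return math.ceil(demand / device_capacity)


if __name__ == "__main__":
    free_cores = [12, 10, 8]  # hypothetical free cores on three hosts
    print(vm_fits(free_cores, 16))     # False: 30 cores free in total, no single host has 16
    print(composed_devices(1.5, 1.0))  # 2: a workload needing 1.5 GPUs gets 2 whole GPUs
```

In this toy model, each approach fails exactly where the other succeeds: virtualization slices finely but cannot span hosts, while composition spans the pool but only in whole-device steps.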

Existing products?

We are still at the beginning. Several existing products are closer to “modular 3.0” solutions than to “composable 1.0” ones.

Still, some solutions can become truly composable. For instance, the DellEMC PowerEdge MX7000 was shown equipped with a prototype shared-memory fabric at the last Dell Technologies World. Another product that can already be considered a composable 1.0 solution is Liqid.

The real challenge will start with Gen-Z and the new composable 2.0 solutions.