Since its inception, VMware has kept improving vMotion and cold migration of VMs within the vSphere suite. vSphere 8.0 brings a nice speed enhancement, so you don't have to wait hours to cold or hot migrate your VMs from one host to another or from one datastore to another.

VMware vSphere 8.0, which was announced as GA on the 11th of October, introduces new features in vMotion operations, as well as faster cold migrations thanks to a new protocol.

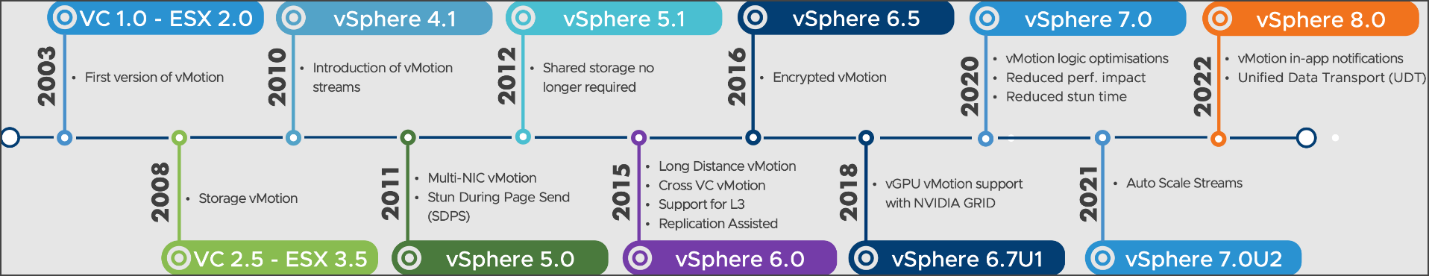

In the past, the most significant enhancements were perhaps multi-NIC vMotion, Long Distance vMotion and cross vCenter vMotion, but a speed increase is always welcome. A demo video from VMware shows more than a 3x speed increase for cold migration.

There have been quite a lot of improvements in vMotion over time.

VMware vMotion Evolution over Time (screenshot from VMware)

vSphere 8.0 vMotion and cold migration are faster – UDT to the rescue

Until now, VMware used the Network File Copy (NFC) protocol for cold migrations. The same protocol is used for disks with snapshots when a VM is powered on. In fact, any "cold" disk copy was slow because it relied on NFC.

So if you had a VM with snapshots and wanted to vMotion it, the transfer in vSphere 7.x was slow compared to a VM without snapshots, because NFC is much slower than vMotion.

VMware introduces Unified Data Transfer (UDT), which combines the best of NFC and vSphere vMotion.

As you know, vMotion is a highly optimized multi-threaded process, while NFC is single-threaded. vSphere vMotion also uses asynchronous I/O, whereas NFC uses synchronous I/O.
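To picture the threading difference, here is a toy, in-memory sketch: one function copies a buffer chunk by chunk in a single loop (the single-threaded model), the other hands disjoint chunks to a pool of worker threads (the multi-threaded model). The chunk size and function names are hypothetical, and this is not how NFC or vMotion are implemented; Python threads on an in-memory buffer will not show real throughput gains, only the structural difference.

```python
from concurrent.futures import ThreadPoolExecutor

CHUNK_SIZE = 4  # hypothetical chunk size in bytes, tiny for demonstration


def split_chunks(data: bytes, size: int) -> list[tuple[int, bytes]]:
    """Cut the source into (offset, chunk) pairs."""
    return [(off, data[off:off + size]) for off in range(0, len(data), size)]


def copy_sequential(data: bytes) -> bytes:
    """Single worker, one chunk at a time (the single-threaded model)."""
    dest = bytearray(len(data))
    for off, chunk in split_chunks(data, CHUNK_SIZE):
        dest[off:off + len(chunk)] = chunk
    return bytes(dest)


def copy_parallel(data: bytes, workers: int = 4) -> bytes:
    """Several workers write disjoint chunks concurrently (the multi-threaded model)."""
    dest = bytearray(len(data))

    def write(item: tuple[int, bytes]) -> None:
        off, chunk = item
        dest[off:off + len(chunk)] = chunk  # chunks never overlap

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() waits for every chunk and re-raises any worker exception
        list(pool.map(write, split_chunks(data, CHUNK_SIZE)))
    return bytes(dest)
```

Both functions produce an identical copy; only the way the work is scheduled differs.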

Where vMotion peaks at 80 Gbps, NFC tops out at 1.3 Gbps. Those are the maximum values for each protocol.
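To put those peak figures into perspective, the sketch below computes the best-case time to move a disk at each rate. The 100 GB disk size is a hypothetical example, units are decimal (1 GB = 8 Gb), and protocol overhead is ignored, so real transfers will be slower.

```python
def transfer_seconds(size_gb: float, rate_gbps: float) -> float:
    """Best-case time to move size_gb gigabytes at rate_gbps gigabits per second."""
    bits = size_gb * 8  # 1 byte = 8 bits, decimal units
    return bits / rate_gbps


# Peak rates quoted in the text
PEAK_RATES_GBPS = {"NFC": 1.3, "vMotion": 80.0}

for name, rate in PEAK_RATES_GBPS.items():
    print(f"{name}: {transfer_seconds(100, rate):.1f} s for a 100 GB disk")
```

At peak rate, the hypothetical 100 GB disk needs roughly 615 s over NFC versus 10 s over vMotion.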

With vSphere 8.0, when you transfer a VM, NFC is still used, but only as a control mechanism. The data transfer itself is offloaded to the vMotion protocol, which brings a very big increase in speed.

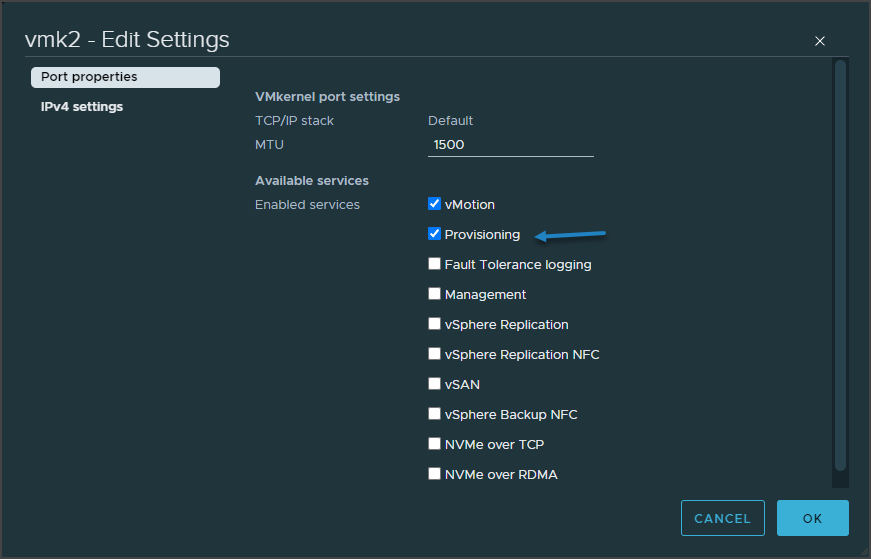

You might wonder whether this technology is active by default or whether there is something to activate. Well, you'll need to activate the Provisioning service on a VMkernel interface. This can be a new VMkernel interface or, for example, a VMkernel adapter (vmk) where you already have vMotion enabled.

When you look at the VMkernel port settings, there is a checkbox called "Provisioning". Provisioning traffic is used for cold migrations, and that's where you activate the UDT protocol.

It's a new protocol that optimizes transfer speeds for cold migrations, VM provisioning (clone operations) and operations that leverage content libraries. Even VDI environments will benefit from it.

Activate VMware UDT for faster VM migrations

VMware has a demo video showing a VM cold migration dropping from 9 min 39 s to 2 min 47 s, which is more than 3 times faster.
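The "more than 3 times" figure follows directly from the two durations in the demo. This small sketch converts them to seconds and computes the ratio; the helper name is hypothetical and the inputs are written minutes'seconds, as in VMware's demo.

```python
def to_seconds(mmss: str) -> int:
    """Convert a duration written as 9'39 (minutes'seconds) into seconds."""
    minutes, seconds = mmss.split("'")
    return int(minutes) * 60 + int(seconds)


before = to_seconds("9'39")  # 579 s
after = to_seconds("2'47")   # 167 s
speedup = before / after
print(f"{before} s -> {after} s: {speedup:.2f}x faster")
```

This prints `579 s -> 167 s: 3.47x faster`.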

Very simple activation, as you can see: a single checkbox activates the Provisioning network and the UDT protocol.
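If you prefer scripting over the UI, the vSphere API exposes the same service tag as the `vSphereProvisioning` NIC type of a host's `HostVirtualNicManager`. The sketch below assumes you already have a pyVmomi session and a `vim.HostSystem` object; the helper name and the `vmk1` device name are hypothetical, so check the API reference for your version before relying on it.

```python
# Assumes an existing pyVmomi session; `host` is a vim.HostSystem object.
# "vSphereProvisioning" is the API service tag behind the "Provisioning" checkbox.
PROVISIONING_NIC_TYPE = "vSphereProvisioning"


def enable_provisioning(host, device: str) -> None:
    """Hypothetical helper: tag an existing VMkernel adapter (e.g. "vmk1") for Provisioning traffic."""
    nic_manager = host.configManager.virtualNicManager
    nic_manager.SelectVnicForNicType(nicType=PROVISIONING_NIC_TYPE, device=device)
```

Usage would look like `enable_provisioning(host, "vmk1")`, where `host` was retrieved through your existing pyVmomi connection (for example from a container view of `vim.HostSystem` objects).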

vMotion Notifications for sensitive applications

Sensitive applications, such as clustered applications, applications running distributed or in-memory databases, financial applications, and telco or voice over IP apps, can have problems when we vMotion them.

That's why VMware keeps improving vMotion, both by improving the migration process itself and by preparing applications for migration.

You might ask how an application can prepare for migration. Well, some services might need to be shut down before the migration begins, the application might need to be quiesced, or a clustered application might even need a complete failover.

The application can delay the start of the migration until the configured timeout expires. It cannot, however, completely "avoid" the migration.

The steps involved:

- A notification of migration is sent to the application.

- The application can respond to this notification as desired (graceful service shutdown, failover to another node, etc.).

- The application then sends an acknowledgment to vSphere that the migration can proceed.

- The VM is migrated via vMotion.

This needs to be supported by your application, however; it does not work out of the box for every application.

There are basically two ways of doing it:

1. Change the existing application by adding code that receives the notification and sends the acknowledgment back.

2. Use a small app that "sits" between your large app and vSphere. In this case, it is this small app that handles the communication between vSphere and the main app.

There is also a timeout value that is configured in advance: if the application does not respond within that period, the vMotion is allowed to take place. The timeout is configurable at the host level or at the VM level. The vMotion notification feature is not designed to block vMotion operations.
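The notify, prepare, acknowledge, migrate sequence with its timeout can be modeled as a small in-process simulation. This is not the VMware Tools interface: the function names and the two sample apps are hypothetical, and a `threading.Event` stands in for the acknowledgment. The `on_notify` callback could be the application itself (option 1) or the small helper app (option 2).

```python
import threading
import time
from typing import Callable


def migrate_with_notification(on_notify: Callable[[threading.Event], None],
                              timeout_s: float) -> bool:
    """Simulate: notify the app, wait for its ack up to timeout_s, then migrate anyway.

    Returns True if the app acknowledged in time, False if the timeout expired.
    In both cases the (simulated) migration proceeds: the timeout cannot block it.
    """
    ack = threading.Event()
    # Step 1: deliver the notification; the app prepares on its own thread.
    threading.Thread(target=on_notify, args=(ack,), daemon=True).start()
    # Steps 2-3: wait for the acknowledgment, but never longer than the timeout.
    acknowledged = ack.wait(timeout=timeout_s)
    # Step 4: the migration starts whether or not the ack arrived.
    print("vMotion starts", "(ack received)" if acknowledged else "(timeout expired)")
    return acknowledged


def quick_app(ack: threading.Event) -> None:
    """Hypothetical app that finishes preparing quickly, then acknowledges."""
    ack.set()


def slow_app(ack: threading.Event) -> None:
    """Hypothetical app that needs longer than the timeout to prepare."""
    time.sleep(0.5)
    ack.set()
```

`migrate_with_notification(quick_app, 1.0)` returns `True`, while `migrate_with_notification(slow_app, 0.1)` returns `False` and the migration goes ahead anyway, which mirrors the rule that the notification can delay vMotion but not prevent it.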

DRS is aware of vMotion notifications for sensitive applications. A VM running a sensitive application is automatically excluded from DRS load balancing, so DRS won't automatically try to move that particular VM.

As for host maintenance mode, the host will still be able to enter maintenance mode; however, DRS won't try to move the VM that runs the sensitive application with vMotion Notification turned on.

Where do I activate it?

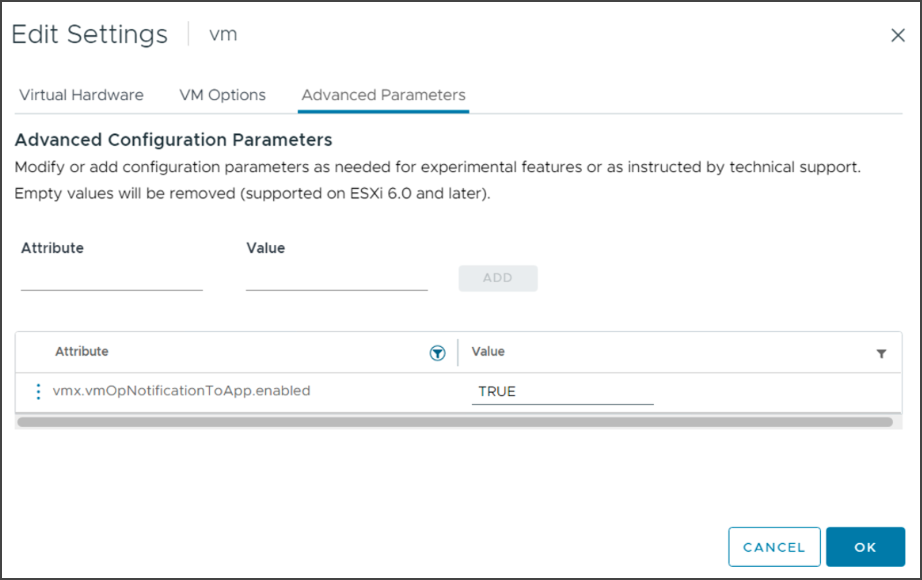

vMotion notification is activated per VM: go to the VM properties > Advanced Settings and set "vmOpNotificationToAppEnabled" to True.

Activation of vMotion Notification for Applications is at the VM level (screenshot from VMware).

Your VM needs VMware virtual hardware version 20 to use this feature. The guest OS must have VMware Tools or Open VM Tools version 11.0 or later; VMware recommends using the latest available VMware Tools.
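Before enabling the feature across many VMs, it can help to check the prerequisites in one place. The sketch below is a hypothetical helper, not a VMware tool. It assumes the hardware version is given as the API reports it (for example `"vmx-20"`), the Tools version as a dotted string (for example `"12.1.5"`), and the VM's advanced settings as a plain key/value dictionary.

```python
def notification_ready(hw_version: str, tools_version: str,
                       extra_config: dict[str, str]) -> list[str]:
    """Return the reasons a VM cannot use vMotion notifications (empty list = ready)."""
    problems = []
    # Virtual hardware strings look like "vmx-20"; version 20 is required.
    if int(hw_version.removeprefix("vmx-")) < 20:
        problems.append(f"virtual hardware {hw_version} is older than vmx-20")
    # "At least 11.0" means the major version must be 11 or higher.
    if int(tools_version.split(".")[0]) < 11:
        problems.append(f"VMware Tools {tools_version} is older than 11.0")
    # Advanced setting values are strings; accept TRUE/True/true.
    if extra_config.get("vmOpNotificationToAppEnabled", "").lower() != "true":
        problems.append("vmOpNotificationToAppEnabled is not set to True")
    return problems
```

A fully prepared VM returns an empty list; anything else lists exactly what still needs fixing.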

Configure host level timeout

You can configure a host-level timeout through the vSphere APIs using the advanced setting "vmOpNotificationToApp.Timeout" with a value greater than 0. The value is in seconds.

For example, to set a timeout of 5 minutes, use a value of 300.
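The two rules for this setting (seconds, strictly greater than 0) are easy to encode when generating values from a script. This is a hypothetical helper that only computes and validates the number; applying it to the host through the vSphere APIs is left to your own tooling.

```python
def notification_timeout_seconds(minutes: float) -> int:
    """Convert minutes into the seconds value for vmOpNotificationToApp.Timeout."""
    seconds = round(minutes * 60)
    # The setting only takes effect with a value greater than 0.
    if seconds <= 0:
        raise ValueError("the timeout must be greater than 0 seconds")
    return seconds
```

`notification_timeout_seconds(5)` returns `300`, matching the example above.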

Wrap Up

As you can see, these are quite nice enhancements to the vMotion layer: you'll benefit from faster cold migrations, and vMotion notifications allow applications to prepare for a vMotion operation. But as said, VMware has only provided the framework; it's now up to application developers to adjust their apps so they can benefit from it.