StarWind Virtual SAN: Configuration Guide for Oracle Linux Virtualization Manager [KVM], VSAN Deployed as a Controller VM (CVM) using PowerShell CLI

- August 02, 2021

Annotation

Relevant Products

StarWind Virtual SAN

Purpose

This guide offers detailed instructions on how to configure shared highly-available storage for Oracle Linux Virtualization Manager. The storage is provided by StarWind VSAN running as a Controller Virtual Machine (CVM). The guide also covers the essential system settings required for the setup.

Audience

The document is created for IT specialists, system administrators, and professionals who want to configure Oracle Linux Virtualization Manager with StarWind VSAN-based storage.

Expected Result

By the guide’s end, users will be able to configure StarWind Virtual SAN CVM for Oracle Linux Virtualization Manager.

Introduction to StarWind Virtual SAN CVM

StarWind Virtual SAN Controller Virtual Machine (CVM) comes as a prepackaged Linux Virtual Machine (VM) to be deployed on any industry-standard hypervisor. It creates a VM-centric and high-performing storage pool for a VM cluster.

This guide describes the deployment and configuration process of the StarWind Virtual SAN CVM.

StarWind VSAN System Requirements

Prior to installing StarWind Virtual SAN, please make sure that the system meets the requirements, which are available via the following link:

https://www.starwindsoftware.com/system-requirements

Recommended RAID settings for HDD and SSD disks:

https://knowledgebase.starwindsoftware.com/guidance/recommended-raid-settings-for-hdd-and-ssd-disks/

Please read the StarWind Virtual SAN Best Practices document for additional information:

https://www.starwindsoftware.com/resource-library/starwind-virtual-san-best-practices

Pre-configuring the KVM Hosts

The diagram below illustrates the network and storage configuration of the solution:

1. Make sure that an oVirt Engine is installed on a separate host.

2. Deploy oVirt on each server and add the servers to the oVirt Engine.

3. Define at least two network interfaces on each node for the Synchronization and iSCSI/StarWind heartbeat traffic. Do not run the iSCSI/Heartbeat and Synchronization channels over the same physical link. Synchronization and iSCSI/Heartbeat links can be connected either via redundant switches or directly between the nodes (see diagram above).

4. Create separate Logical Networks for iSCSI and Synchronization traffic, based on the iSCSI and Synchronization interfaces selected in the previous step. On the oVirt Engine Networking page, create two Logical Networks: one for the iSCSI/StarWind Heartbeat channel (iSCSI) and another one for the Synchronization channel (Sync).

5. Attach the physical NICs to the corresponding Logical Networks on each host and configure static IP addresses. In this document, the 172.16.10.x subnet is used for iSCSI/StarWind heartbeat traffic, while the 172.16.20.x subnet is used for Synchronization traffic.

NOTE: If the NIC supports SR-IOV, enable it for the best performance. Contact support for additional details.
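Once the static addresses are configured, each iSCSI/Heartbeat and Synchronization link can be checked from the host shell. The sketch below is a minimal example and not part of the StarWind procedure: the helper names `icmp_payload_for_mtu` and `check_link` are hypothetical, and it assumes the iputils `ping` shipped with Oracle Linux (`-M do` sets the Don't Fragment flag, `-s` sets the payload size). Sending the largest unfragmented payload confirms that the whole path carries packets of the expected size, which matters if jumbo frames (MTU 9000) are used on these networks.

```shell
# Hypothetical helper: largest ICMP echo payload that fits into one unfragmented
# IPv4 packet for a given MTU (20-byte IPv4 header + 8-byte ICMP header = 28 bytes).
icmp_payload_for_mtu() {
    echo $(( $1 - 28 ))
}

# Hypothetical helper: ping a partner address with Don't Fragment set, so the
# check fails if any hop on the link cannot pass a packet of the full MTU size.
# Usage: check_link <partner-address> [mtu]   (mtu defaults to 1500)
check_link() {
    local peer="$1" mtu="${2:-1500}"
    ping -c 3 -M do -s "$(icmp_payload_for_mtu "$mtu")" "$peer"
}
```

For example, `check_link <partner-iSCSI-address> 9000` sends 8972-byte payloads; the partner address is a placeholder for the 172.16.10.x or 172.16.20.x address assigned on the other node.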

Enabling Multipath Support

1. Connect to the server via SSH.

2. Create the file /etc/multipath/conf.d/starwind.conf with the following content:

```
devices {
    device {
        vendor "STARWIND"
        product "STARWIND*"
        path_grouping_policy multibus
        path_checker "tur"
        failback immediate
        path_selector "round-robin 0"
        rr_min_io 3
        rr_weight uniform
        hardware_handler "1 alua"
    }
}
```

3. Restart the multipathd service.

```shell
systemctl restart multipathd
```

4. Repeat the same procedure on the other server.
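The file-creation step above can also be scripted, which helps keep the configuration identical on both servers. The following is a minimal sketch rather than a StarWind-provided tool: the function name `write_starwind_multipath_conf` is hypothetical, and the device section is the same one shown above. An optional path argument allows previewing the output without root access.

```shell
# Hypothetical helper: write the StarWind multipath device section to a drop-in
# file. Defaults to the path used in this guide; pass another path to preview.
write_starwind_multipath_conf() {
    local target="${1:-/etc/multipath/conf.d/starwind.conf}"
    mkdir -p "$(dirname "$target")" || return 1
    # Quoted 'EOF' keeps the content literal (no variable expansion).
    cat > "$target" <<'EOF'
devices {
    device {
        vendor "STARWIND"
        product "STARWIND*"
        path_grouping_policy multibus
        path_checker "tur"
        failback immediate
        path_selector "round-robin 0"
        rr_min_io 3
        rr_weight uniform
        hardware_handler "1 alua"
    }
}
EOF
}
```

Run it as root with no arguments on each host, then restart multipathd as described above.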

Creating NFS share

1. Make sure that each host has free storage to create an NFS share.

2. Enable and start the nfs-server and rpcbind services.

```shell
systemctl enable --now nfs-server rpcbind
```

3. Create a directory for the NFS share.

```shell
mkdir -p /mnt/nfs
```

4. Set the permissions and owner of the share directory.

```shell
chmod 0775 /mnt/nfs/
chown -R nobody:users /mnt/nfs/
```

5. Add the NFS share to the /etc/exports file, for example, using vi:

```shell
vi /etc/exports
```

Add the following line:

```
/mnt/nfs/ *(rw,anonuid=36,anongid=36)
```

6. Restart the NFS server service.

```shell
systemctl restart nfs-server
```

7. Re-export the shares and check that the share has been exported.

```shell
exportfs -rvv
```

8. Add firewall rules for NFS.

```shell
firewall-cmd --add-service={nfs,nfs3,rpc-bind} --permanent
firewall-cmd --reload
```
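If the NFS setup is scripted or re-run, appending to /etc/exports blindly can create duplicate entries. The sketch below shows one way to make that step idempotent; `add_nfs_export` is a hypothetical helper, and the export options are the ones used in this section.

```shell
# Hypothetical helper: append "<dir> <client-spec>" to an exports file only if
# <dir> is not already exported there. The file defaults to /etc/exports.
add_nfs_export() {
    local dir="$1" spec="$2" exports_file="${3:-/etc/exports}"
    touch "$exports_file" || return 1
    # Match the directory only as the first field of a line.
    if awk -v d="$dir" '$1 == d { found = 1 } END { exit !found }' "$exports_file"; then
        return 0
    fi
    printf '%s %s\n' "$dir" "$spec" >> "$exports_file"
}
```

As root, `add_nfs_export /mnt/nfs/ '*(rw,anonuid=36,anongid=36)'` followed by `exportfs -rvv` applies the same change as the manual edit.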

Deploying StarWind Virtual SAN CVM

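1. Download StarWind VSAN CVM for KVM from the "VSAN by StarWind: Overview" page.

2. Extract the StarWindCVM.ova file from the downloaded archive.

3. Upload the StarWindCVM.ova file to the oVirt host using any SFTP client.

4. Change the owner of StarWindCVM.ova. The command below changes ownership recursively for everything in /mnt/nfs/, which includes the .ova file if it was uploaded to the NFS share:

```shell
chown -R nobody:users /mnt/nfs/
```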

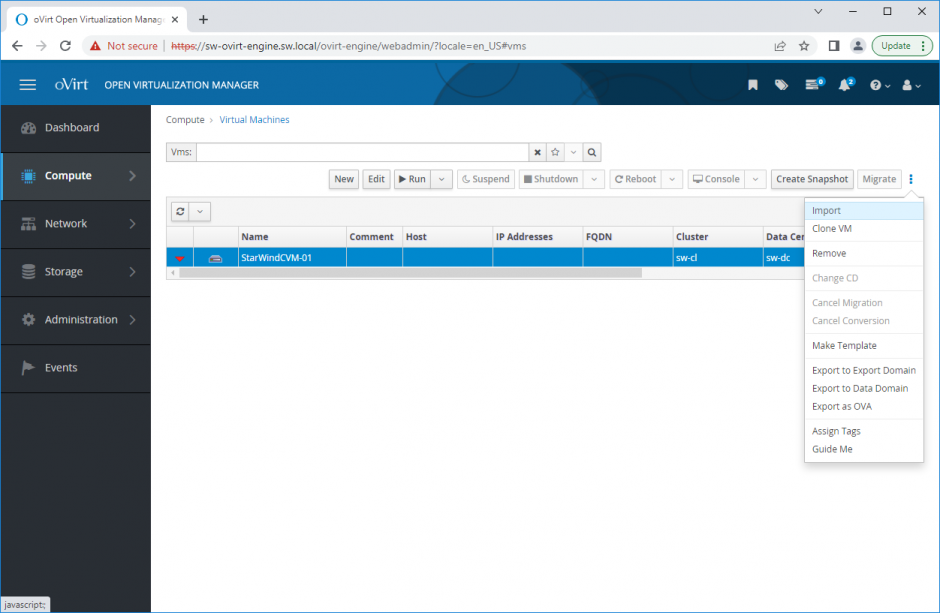

5. Login to oVirt and open Compute -> Virtual Machines page. Choose Import.

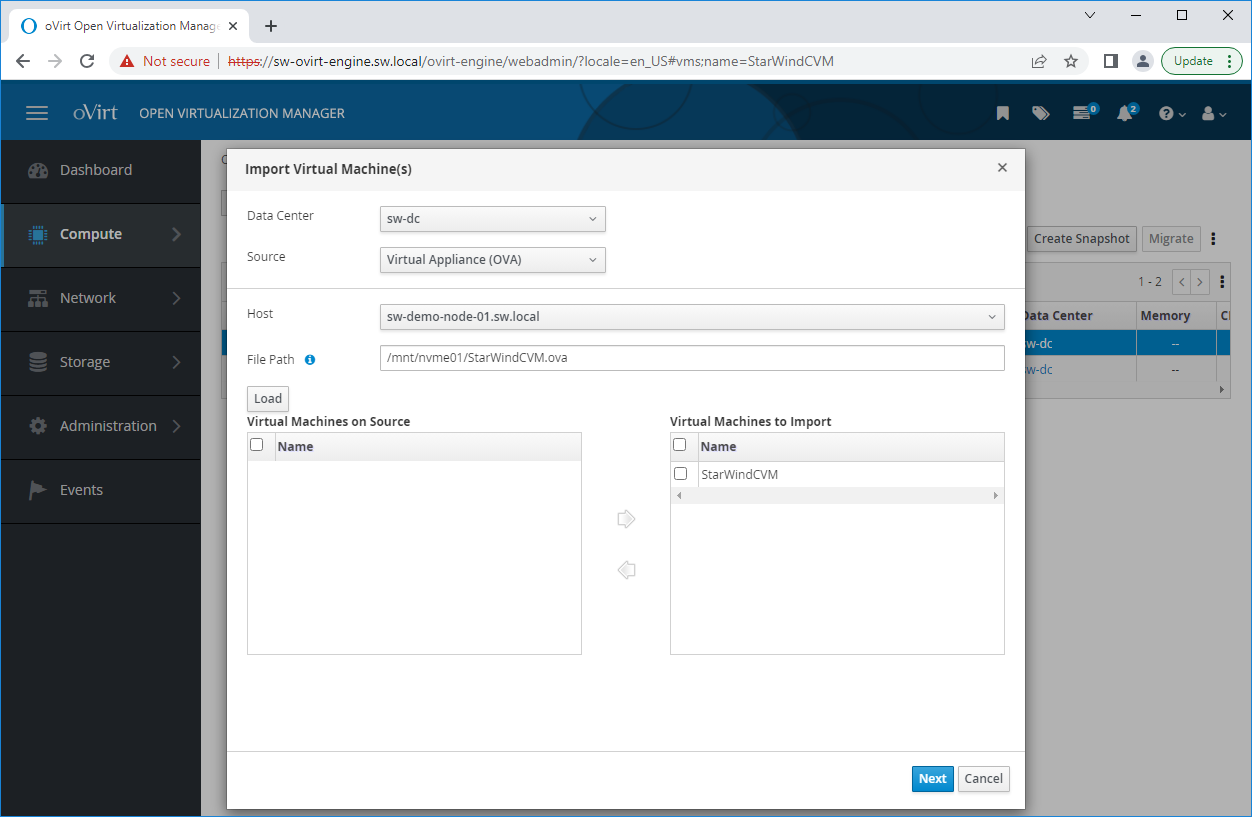

6. Specify path to .ova file and choose VM to import. Click Next.

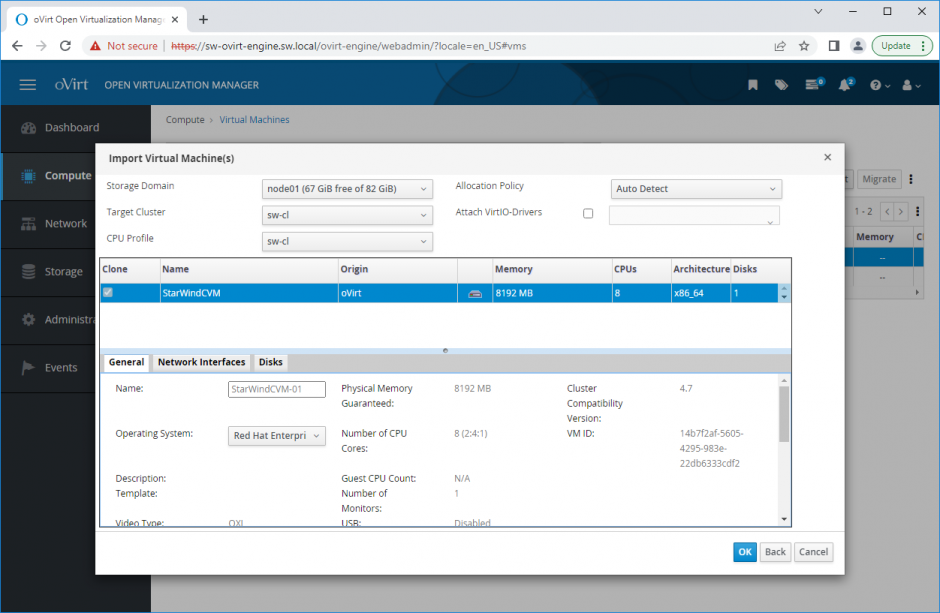

7. Verify VM settings and configure networks. Click OK.

8. Repeat all the steps from this section on other oVirt hosts.
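Before importing, the uploaded file can be sanity-checked on the host. An OVA package is a tar archive that contains an OVF descriptor and the disk images, so listing it with `tar` is a cheap way to catch an upload that tar cannot read. This is a minimal sketch assuming GNU tar; `check_ova` is a hypothetical helper, and it does not validate the disk images themselves.

```shell
# Hypothetical helper: succeed only if the file is a readable tar archive
# that contains at least one .ovf descriptor.
check_ova() {
    local ova="$1" listing
    # tar exits non-zero for files that are not readable tar archives.
    listing=$(tar -tf "$ova" 2>/dev/null) || return 1
    printf '%s\n' "$listing" | grep -q '\.ovf$'
}
```

For example, `check_ova /mnt/nfs/StarWindCVM.ova && echo "OVA looks readable"`; the path assumes the file was uploaded to the NFS share.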

Configuring StarWind Virtual SAN VM settings

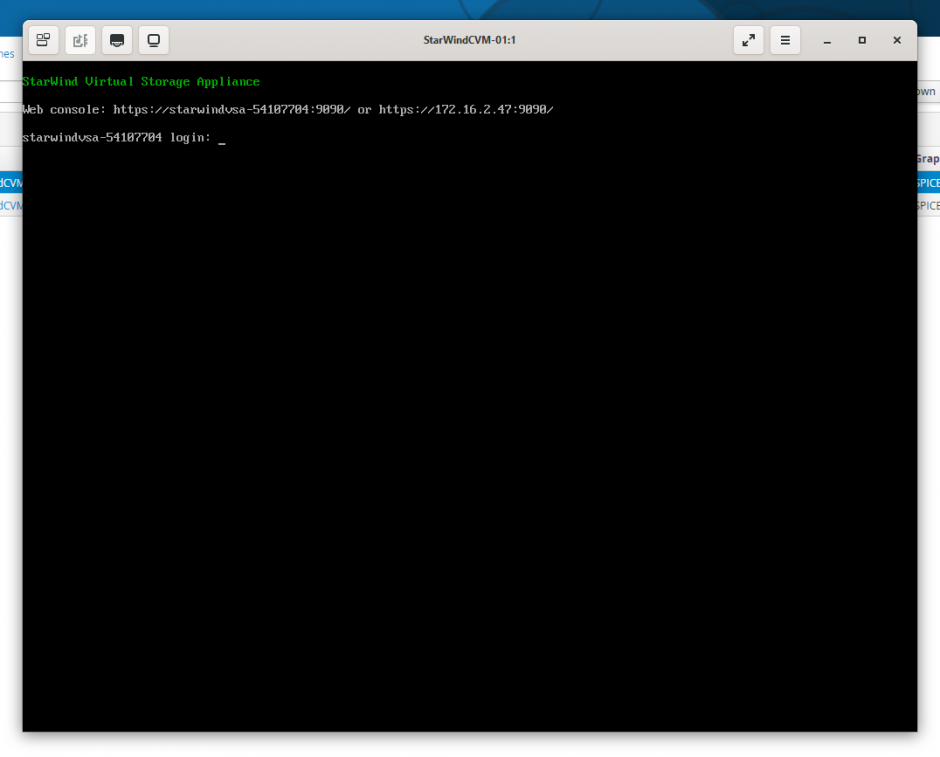

1. Open the VM console and check the IP address received via DHCP (or assigned manually).

If there is no DHCP server, log in to the VM via the console and assign a static IP address using nmcli.

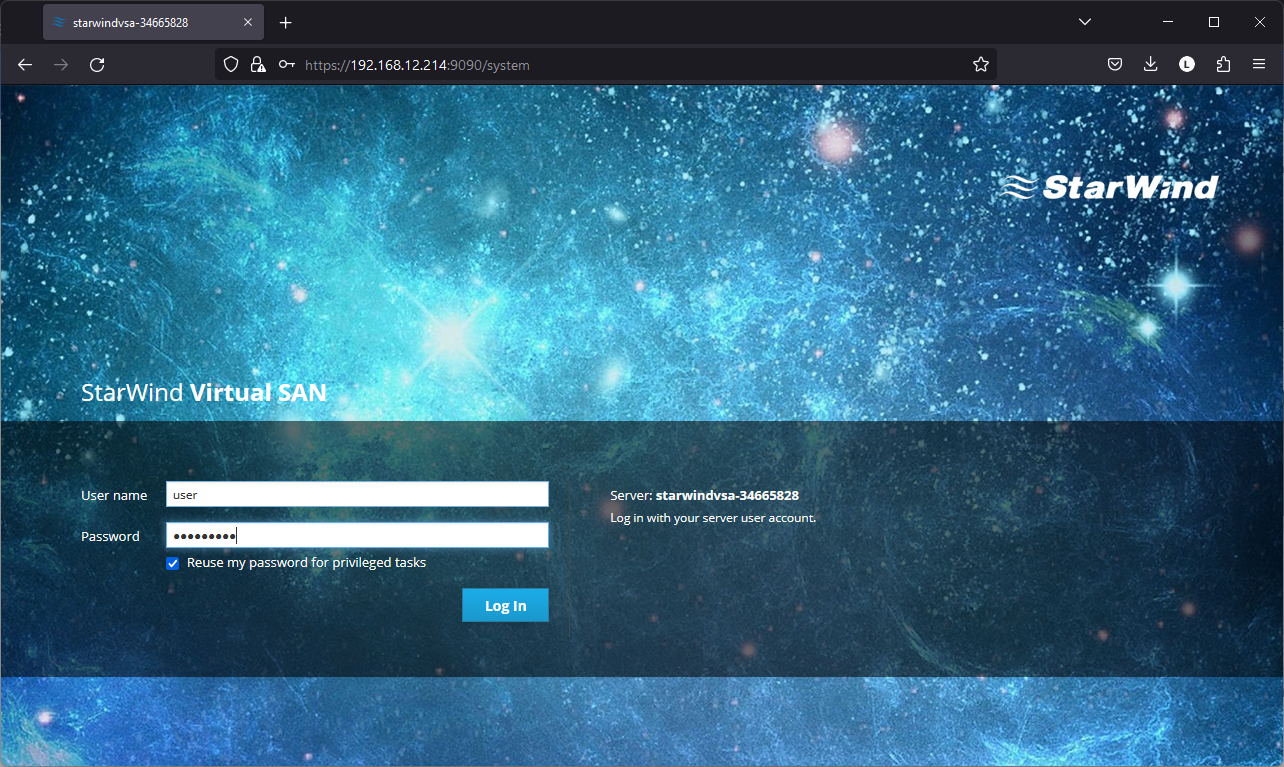

2. Open a web browser and enter the IP address of the VM. Log in to the VM using the following default credentials:

- Username: user

- Password: rds123RDS

- NOTE: Make sure to check the “Reuse my password for privileged tasks” box.

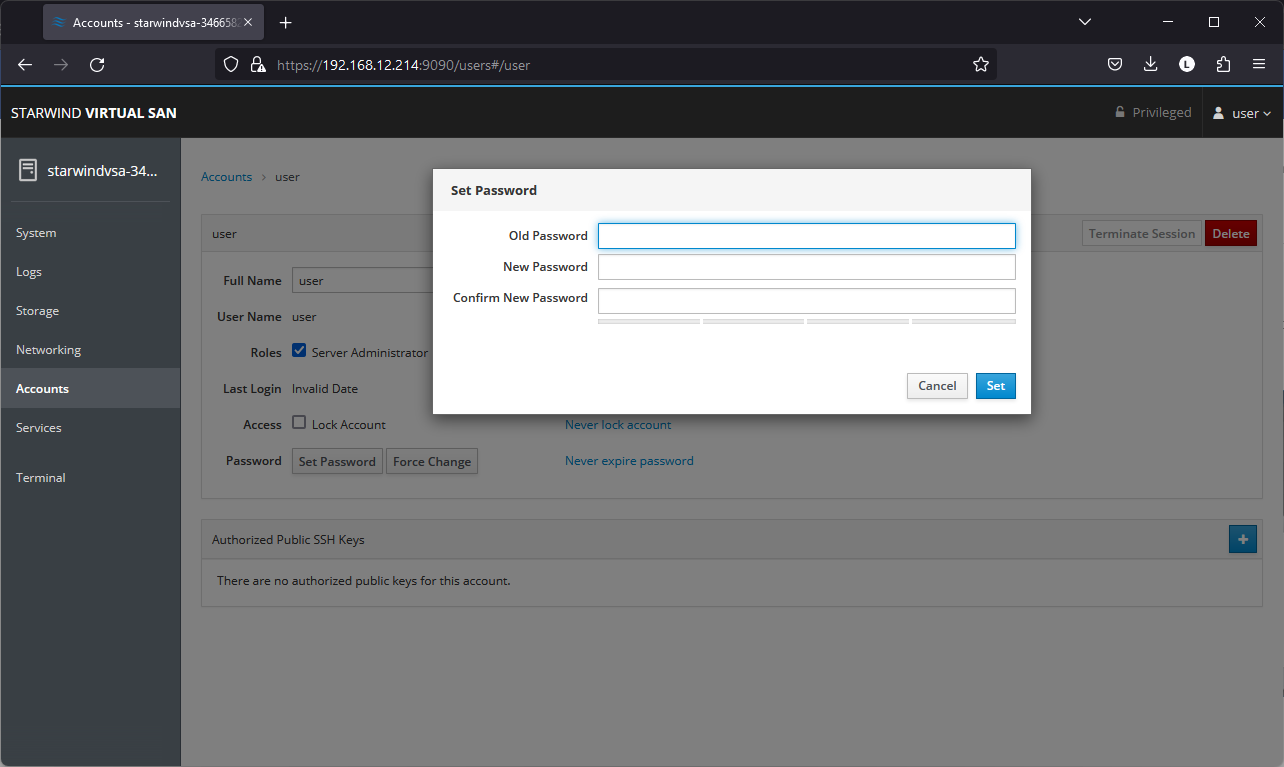

3. After a successful login, click Accounts on the left sidebar.

4. Select a user and click Set Password.

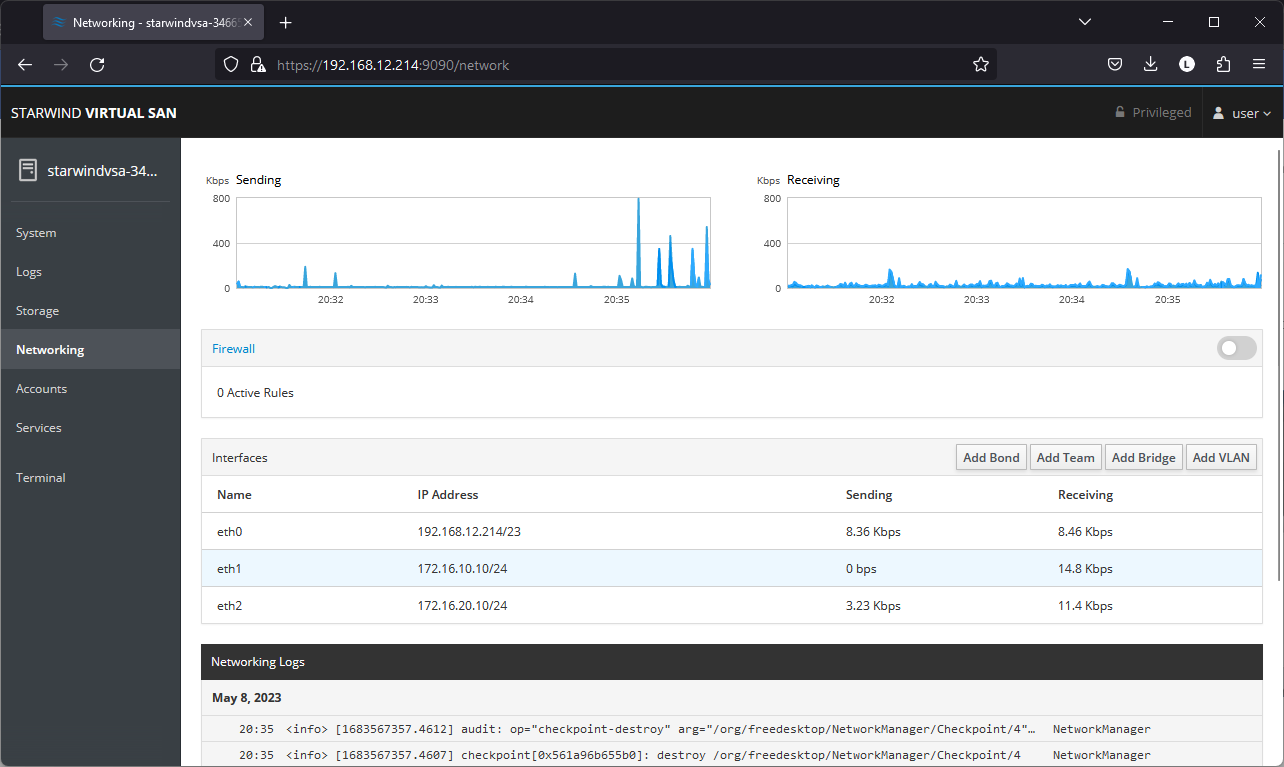

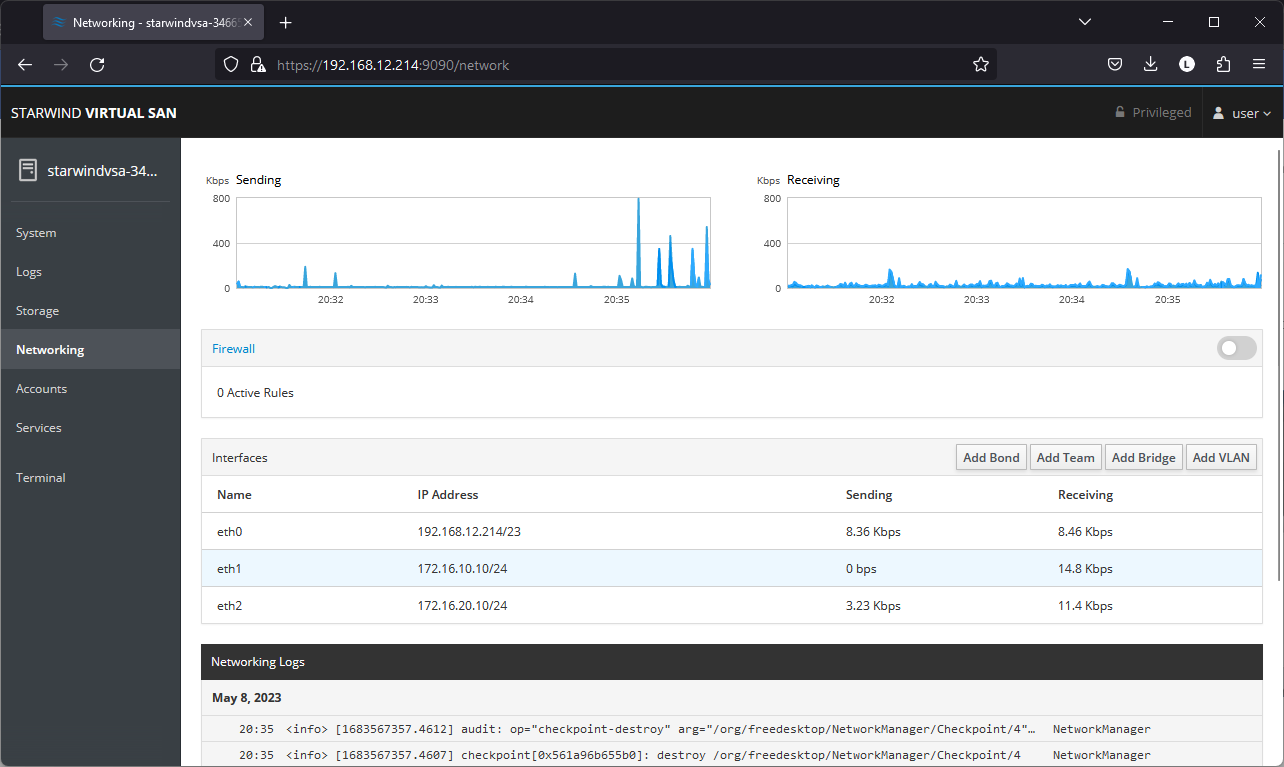

5. On the left sidebar, click Networking.

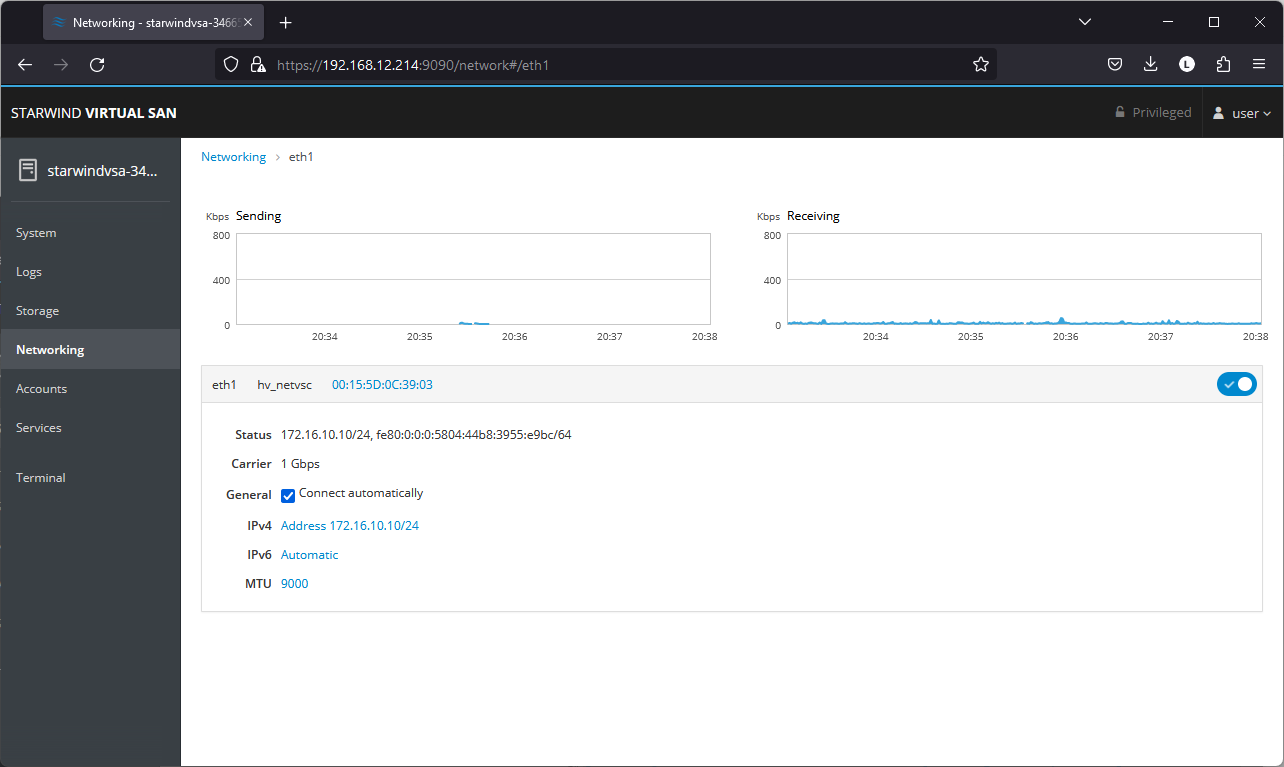

Here, the Management IP address of the StarWind Virtual SAN Virtual Machine can be configured, as well as the IP addresses for the iSCSI and Synchronization networks. If a network interface is inactive, click it, turn it on, and set it to Connect automatically.

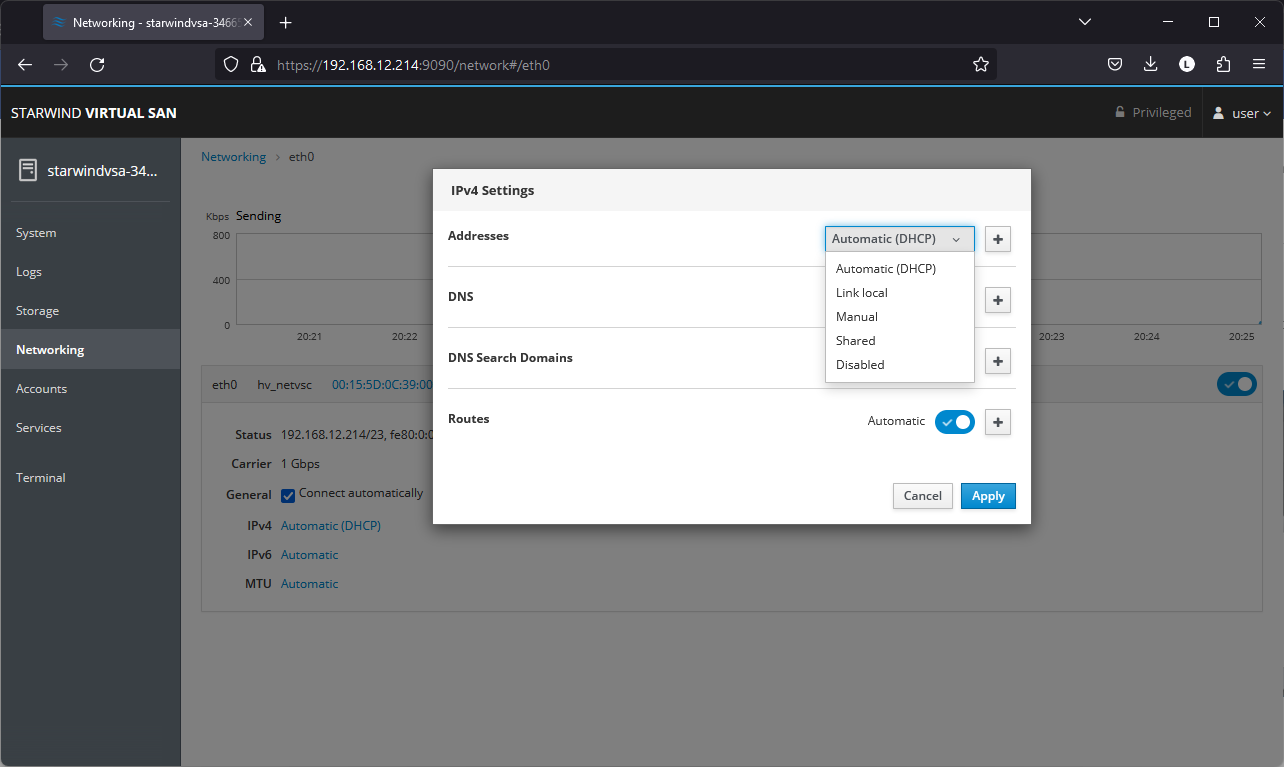

6. Click Automatic (DHCP) to set the IP address (and, for the Management interface, the DNS and gateway).

7. The result should look like the picture below:

NOTE: It is recommended to set MTU to 9000 on interfaces dedicated for iSCSI and Synchronization traffic. Change Automatic to 9000, if required.

8. Alternatively, log in to the VM via the oVirt console and assign a static IP address by editing the configuration file of the interface located in /etc/sysconfig/network-scripts.

9. Open the file corresponding to the Management interface in a text editor, for example: sudo nano /etc/sysconfig/network-scripts/ifcfg-eth0

10. Edit the file:

- Change the line BOOTPROTO=dhcp to BOOTPROTO=static

- Add the required IP settings to the file:

- IPADDR=192.168.12.10

- NETMASK=255.255.255.0

- GATEWAY=192.168.12.1

- DNS1=192.168.1.1

11. Restart the interface using the following commands: sudo ifdown eth0, then sudo ifup eth0. Alternatively, restart the VM.

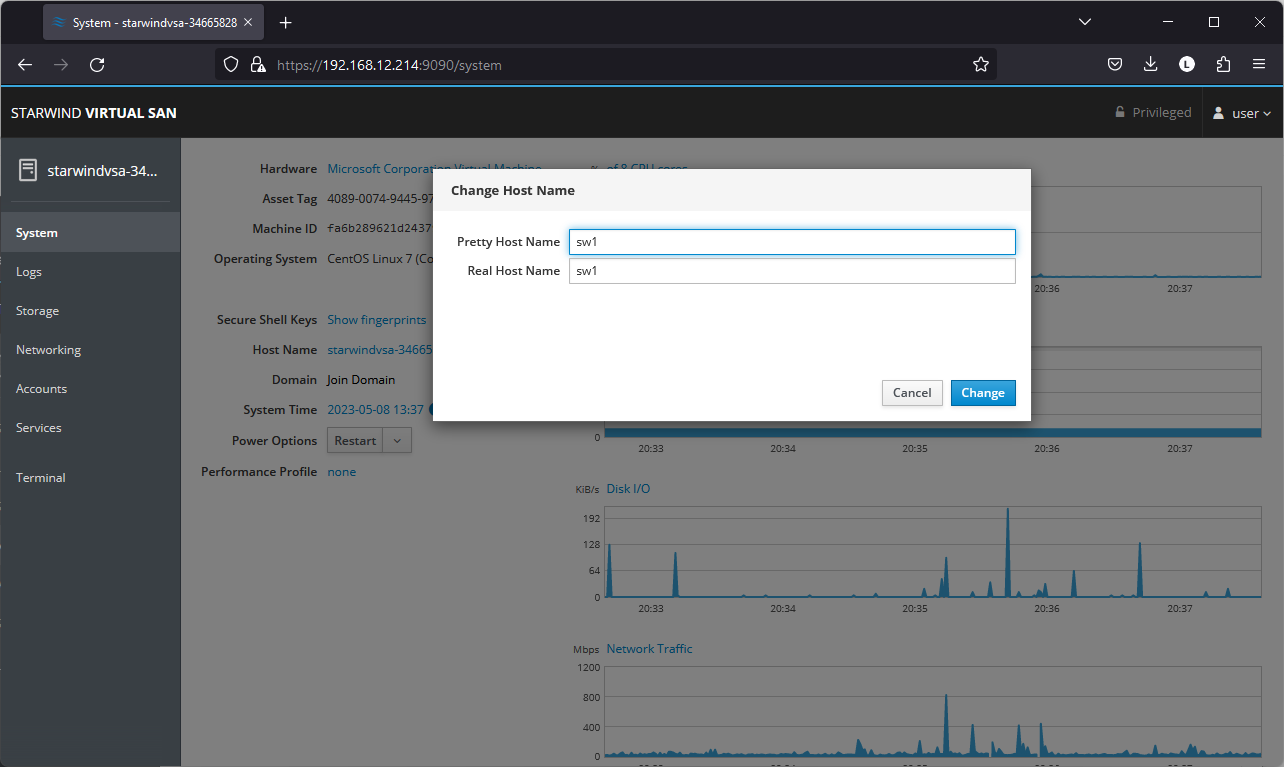

12. Change the Host Name from the System tab by clicking on it:

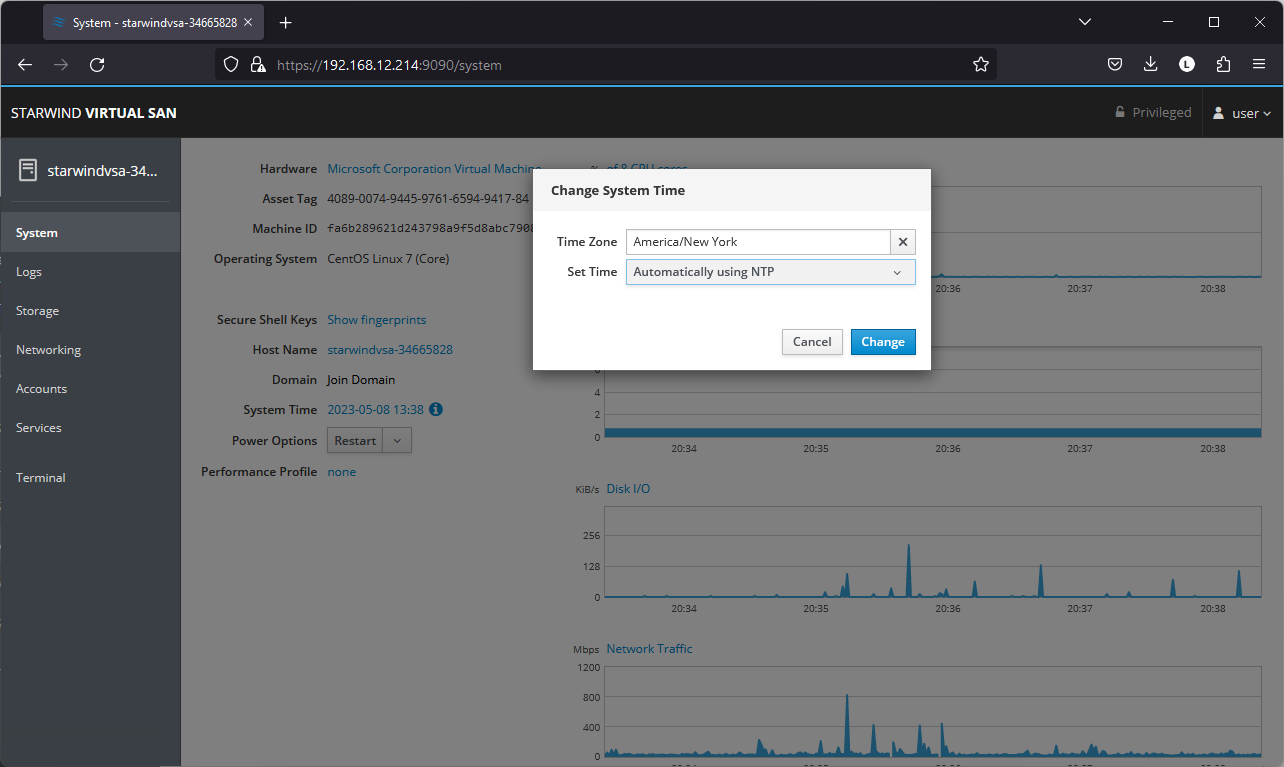

13. Change System time and NTP settings if required:

14. Repeat the steps above on each StarWind VSAN VM.
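The manual edit of the ifcfg file described above (switching BOOTPROTO to static and adding IPADDR, NETMASK, GATEWAY and DNS1) can be sketched as a shell function, which is handy when repeating it on each CVM. This is a minimal sketch, not a StarWind tool: `set_static_ifcfg` is a hypothetical helper, it assumes GNU sed (for `-i`), and the example values are the ones used in this guide.

```shell
# Hypothetical helper: switch an ifcfg-* file from DHCP to a static address.
# Example (run as root inside the CVM):
#   set_static_ifcfg /etc/sysconfig/network-scripts/ifcfg-eth0 \
#       192.168.12.10 255.255.255.0 192.168.12.1 192.168.1.1
set_static_ifcfg() {
    local file="$1" ip="$2" netmask="$3" gateway="$4" dns="$5"
    [ -f "$file" ] || { echo "no such file: $file" >&2; return 1; }
    # Replace any existing BOOTPROTO value (quoted or not) with static.
    sed -i 's/^BOOTPROTO=.*/BOOTPROTO=static/' "$file"
    grep -q '^BOOTPROTO=' "$file" || echo 'BOOTPROTO=static' >> "$file"
    # Drop old address settings so repeated runs do not leave duplicates.
    sed -i -e '/^IPADDR=/d' -e '/^NETMASK=/d' -e '/^GATEWAY=/d' -e '/^DNS1=/d' "$file"
    printf 'IPADDR=%s\nNETMASK=%s\nGATEWAY=%s\nDNS1=%s\n' \
        "$ip" "$netmask" "$gateway" "$dns" >> "$file"
}
```

After running it, restart the interface (sudo ifdown eth0, then sudo ifup eth0) or restart the VM, as in the manual procedure.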

Configuring Storage

StarWind Virtual SAN CVM can work on top of Hardware RAID or Linux Software RAID (MDADM) inside of the Virtual Machine.

Configuring storage with hardware RAID

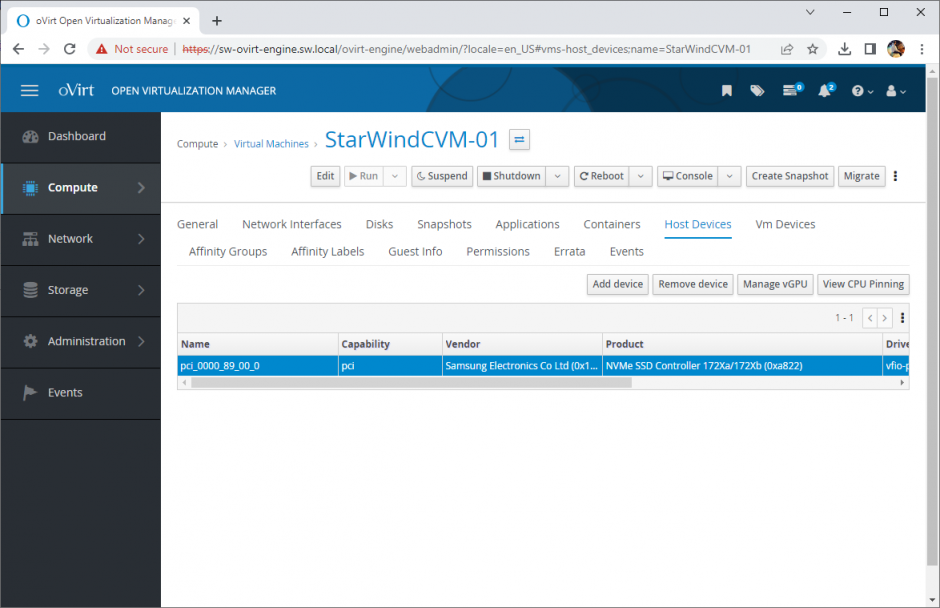

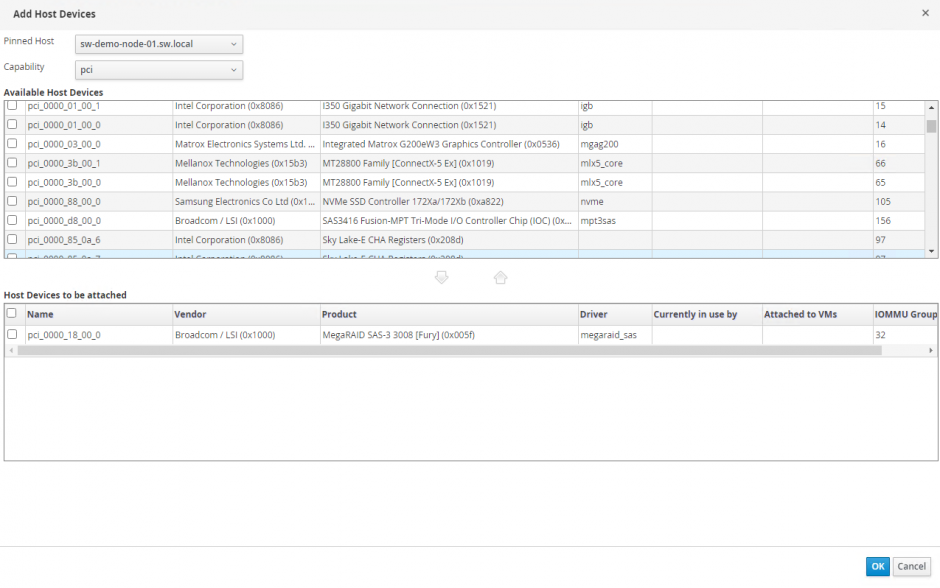

1. Open the VM settings on the oVirt Virtual Machines page and select the Host Devices tab. It is recommended to pass the entire RAID array to the VM. Click Add device.

2. Select the RAID controller or HBA and pass it through to the VM. Click OK.

3. Start StarWind VSAN CVM.

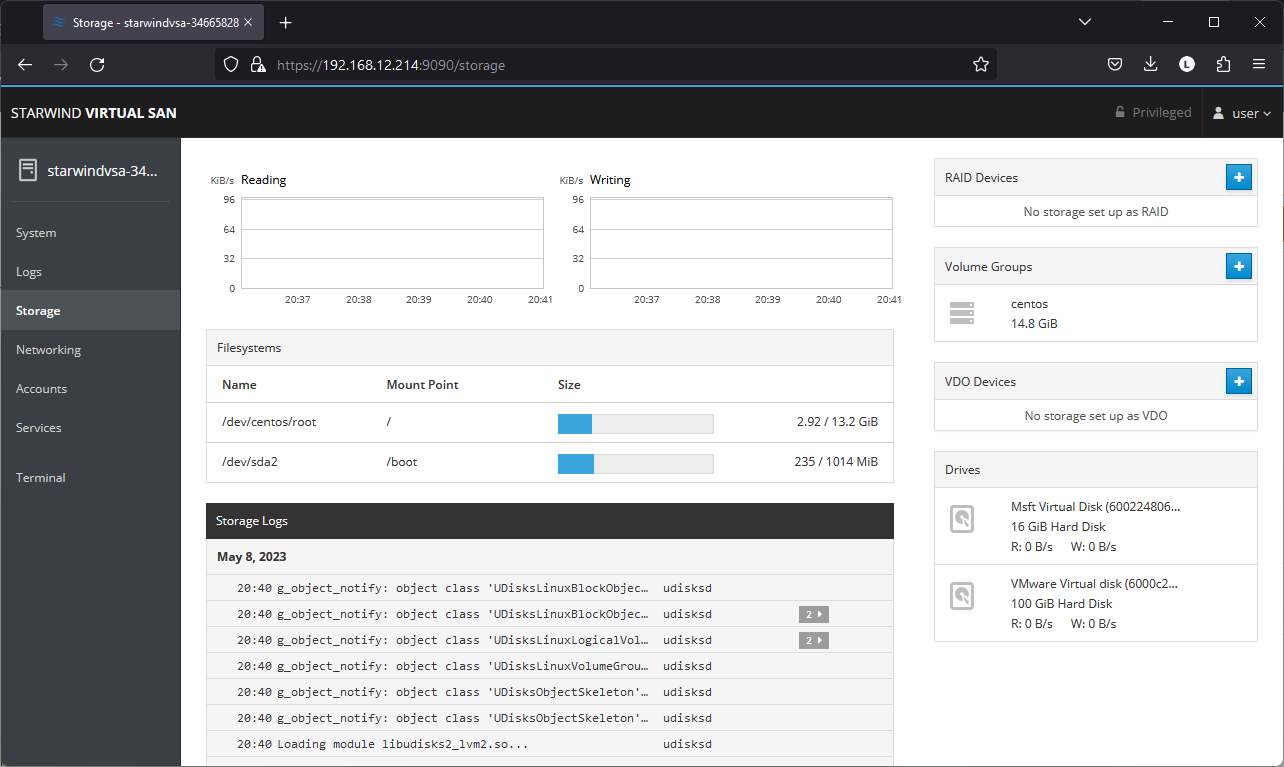

4. Log in to the StarWind VSAN VM web console and open the Storage section. Locate the recently added disk in the Drives section and select it.

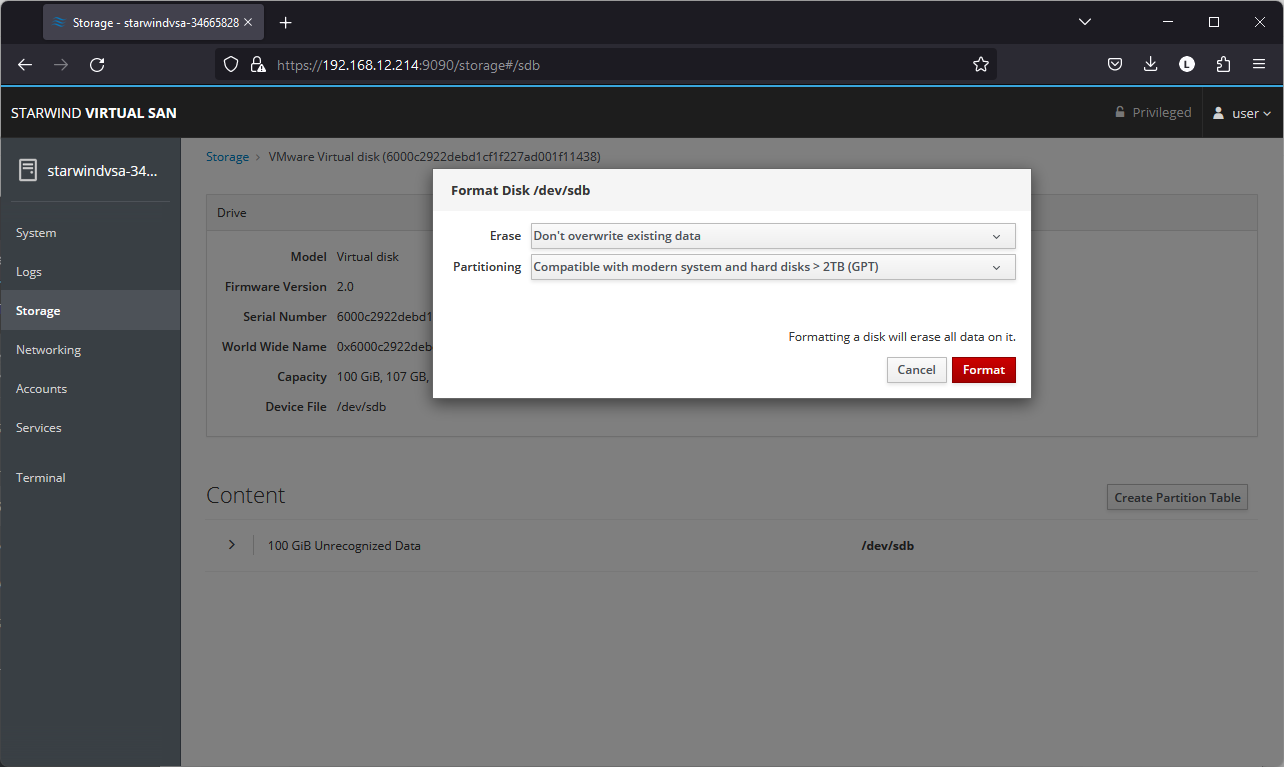

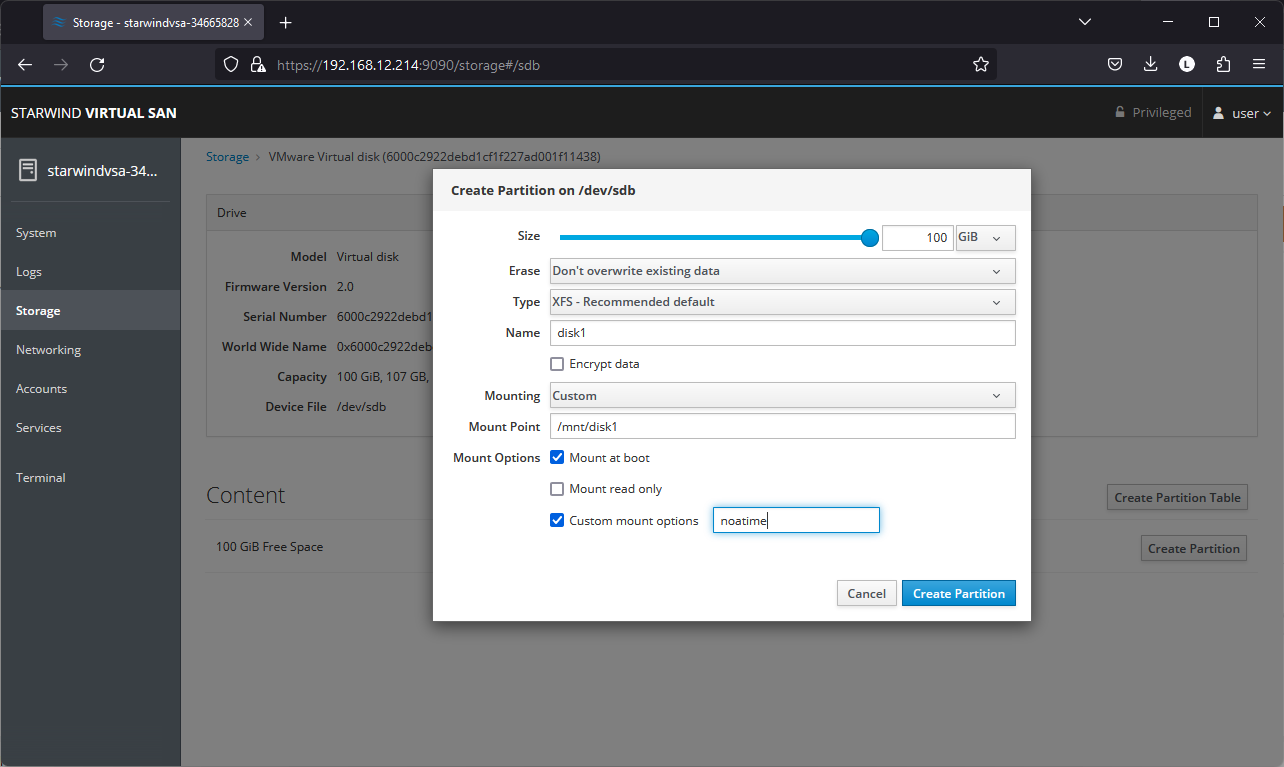

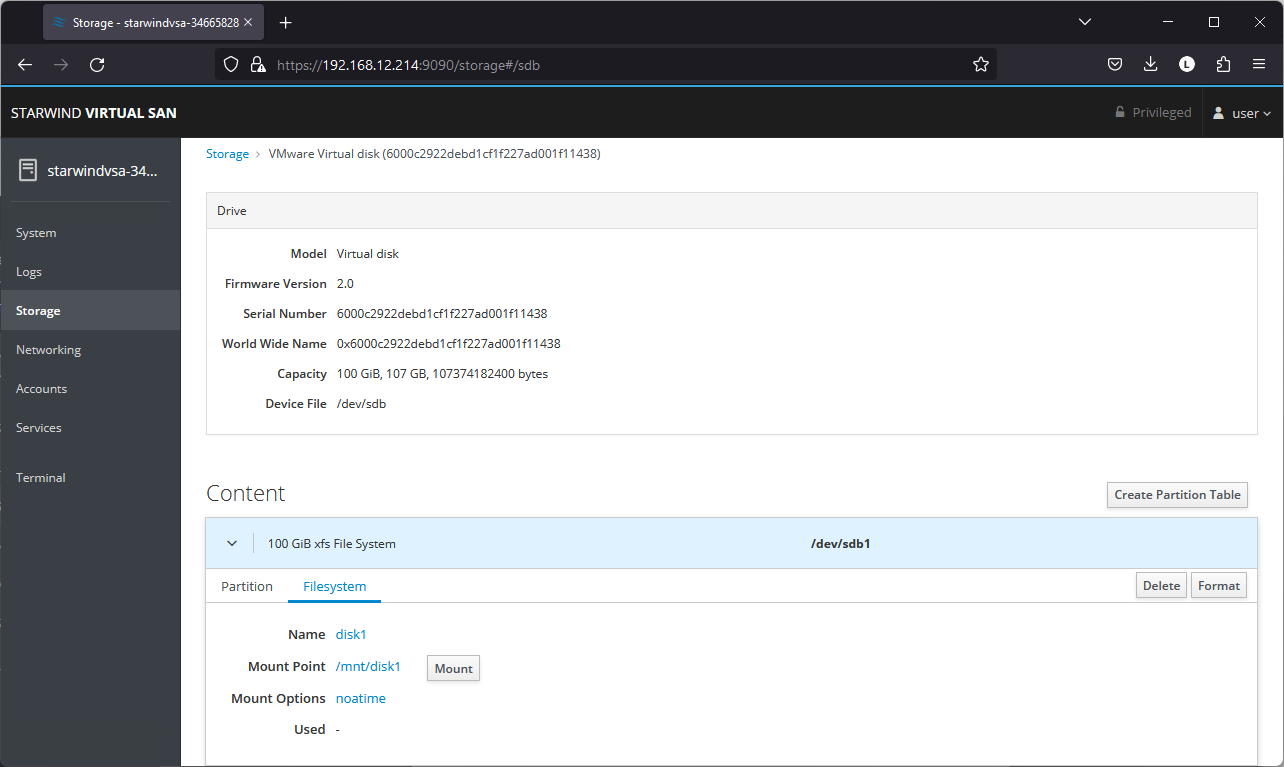

5. The added disk does not have any partitions or filesystem. Press the Create Partition Table button to create the partition table.

6. Press Create Partition to format the disk and set the mount point. The mount point should be as follows: /mnt/%yourdiskname%

7. In the Storage section, under Content, navigate to the Filesystem tab. Click Mount.
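Before the mount point is referenced by the StarWind scripts, it can be useful to confirm that the filesystem is actually mounted; files created in an unmounted directory would likely end up on the VM's system disk instead. This minimal sketch reads /proc/mounts; `is_mounted` is a hypothetical helper, `/mnt/disk1` stands in for /mnt/%yourdiskname%, and mount points containing spaces are not handled (the kernel escapes them in /proc/mounts).

```shell
# Hypothetical helper: succeed if the directory is listed as a mount point in the
# mount table (second field). The table defaults to /proc/mounts.
is_mounted() {
    local mountpoint="${1%/}" table="${2:-/proc/mounts}"
    [ -n "$mountpoint" ] || mountpoint="/"
    awk -v mp="$mountpoint" '$2 == mp { found = 1 } END { exit !found }' "$table"
}
```

For example, inside the CVM: `is_mounted /mnt/disk1 && echo mounted`.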

Configuring StarWind Management Components

1. Install StarWind Management Console on each server or on a separate workstation with Windows OS (Windows 7 or higher, Windows Server 2008 R2 and higher) using the installer available here.

NOTE: StarWind Management Console and PowerShell Management Library components are required.

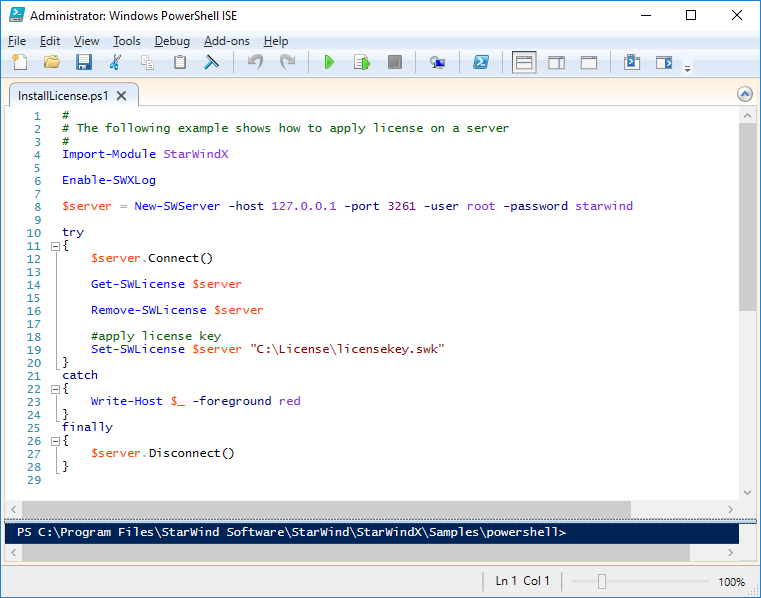

2. Open the StarWind InstallLicense.ps1 script in PowerShell ISE as administrator. It can be found here:

C:\Program Files\StarWind Software\StarWind\StarWindX\Samples\powershell\InstallLicense.ps1

Type the IP address of the StarWind Virtual SAN VM and the credentials of the StarWind Virtual SAN service (default login: root, password: starwind).

Add the path to the license key.

3. After the license key is applied, StarWind devices can be created.

NOTE: In order to manage StarWind Virtual SAN service (e.g. create ImageFile devices, VTL devices, etc.), StarWindX PowerShell library can be used. StarWind Management Console can be used to monitor StarWind Virtual SAN Service.

Creating StarWind HA LUNs using PowerShell

1. Open PowerShell ISE as Administrator.

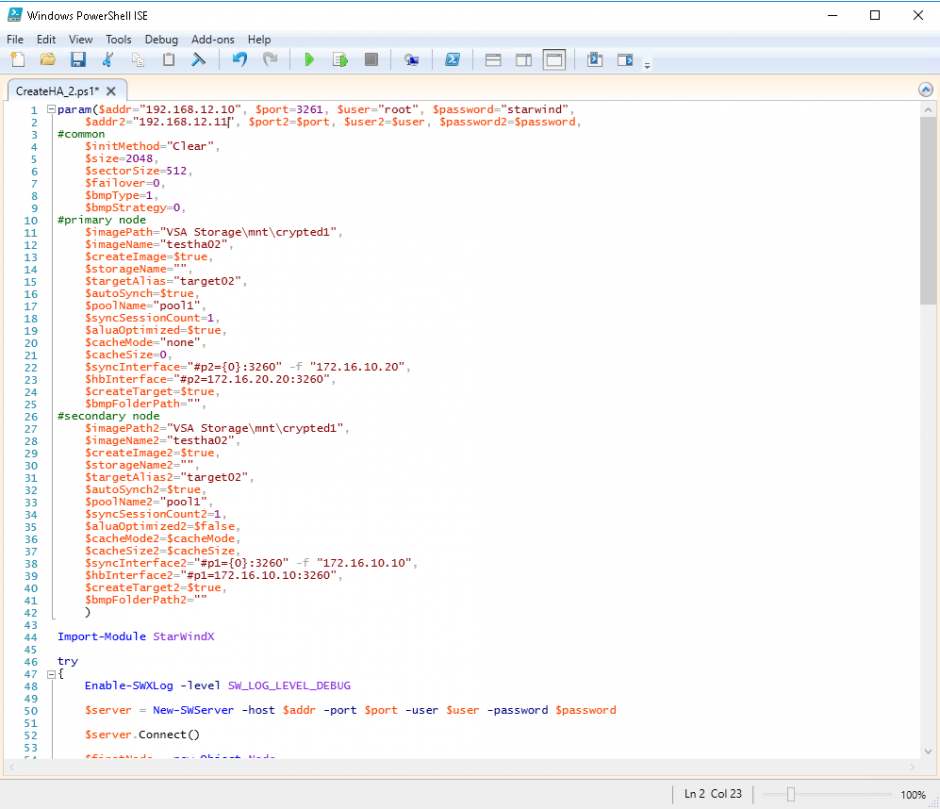

2. Open StarWindX sample CreateHA_2.ps1 using PowerShell ISE. It can be found here:

C:\Program Files\StarWind Software\StarWind\StarWindX\Samples\

3. Configure the script parameters according to the following example:

```powershell
param($addr="192.168.12.10", $port=3261, $user="root", $password="starwind",
    $addr2="192.168.12.11", $port2=$port, $user2=$user, $password2=$password,
#common
    $initMethod="Clear",
    $size=2048,
    $sectorSize=512,
    $failover=0,
    $bmpType=1,
    $bmpStrategy=0,
#primary node
    $imagePath="VSA Storage\mnt\crypted1",
    $imageName="testha02",
    $createImage=$true,
    $storageName="",
    $targetAlias="target02",
    $autoSynch=$true,
    $poolName="pool1",
    $syncSessionCount=1,
    $aluaOptimized=$true,
    $cacheMode="none",
    $cacheSize=0,
    $syncInterface="#p2={0}:3260" -f "172.16.20.20",
    $hbInterface="#p2={0}:3260" -f "172.16.10.20",
    $createTarget=$true,
    $bmpFolderPath="",
#secondary node
    $imagePath2="VSA Storage\mnt\crypted1",
    $imageName2="testha02",
    $createImage2=$true,
    $storageName2="",
    $targetAlias2="target02",
    $autoSynch2=$true,
    $poolName2="pool1",
    $syncSessionCount2=1,
    $aluaOptimized2=$false,
    $cacheMode2=$cacheMode,
    $cacheSize2=$cacheSize,
    $syncInterface2="#p1={0}:3260" -f "172.16.20.10",
    $hbInterface2="#p1={0}:3260" -f "172.16.10.10",
    $createTarget2=$true,
    $bmpFolderPath2=""
    )

Import-Module StarWindX

try
{
    Enable-SWXLog -level SW_LOG_LEVEL_DEBUG

    $server = New-SWServer -host $addr -port $port -user $user -password $password

    $server.Connect()

    $firstNode = new-Object Node

    $firstNode.HostName = $addr
    $firstNode.HostPort = $port
    $firstNode.Login = $user
    $firstNode.Password = $password
    $firstNode.ImagePath = $imagePath
    $firstNode.ImageName = $imageName
    $firstNode.Size = $size
    $firstNode.CreateImage = $createImage
    $firstNode.StorageName = $storageName
    $firstNode.TargetAlias = $targetAlias
    $firstNode.AutoSynch = $autoSynch
    $firstNode.SyncInterface = $syncInterface
    $firstNode.HBInterface = $hbInterface
    $firstNode.PoolName = $poolName
    $firstNode.SyncSessionCount = $syncSessionCount
    $firstNode.ALUAOptimized = $aluaOptimized
    $firstNode.CacheMode = $cacheMode
    $firstNode.CacheSize = $cacheSize
    $firstNode.FailoverStrategy = $failover
    $firstNode.CreateTarget = $createTarget
    $firstNode.BitmapStoreType = $bmpType
    $firstNode.BitmapStrategy = $bmpStrategy
    $firstNode.BitmapFolderPath = $bmpFolderPath

    #
    # device sector size. Possible values: 512 or 4096(May be incompatible with some clients!) bytes.
    #
    $firstNode.SectorSize = $sectorSize

    $secondNode = new-Object Node

    $secondNode.HostName = $addr2
    $secondNode.HostPort = $port2
    $secondNode.Login = $user2
    $secondNode.Password = $password2
    $secondNode.ImagePath = $imagePath2
    $secondNode.ImageName = $imageName2
    $secondNode.CreateImage = $createImage2
    $secondNode.StorageName = $storageName2
    $secondNode.TargetAlias = $targetAlias2
    $secondNode.AutoSynch = $autoSynch2
    $secondNode.SyncInterface = $syncInterface2
    $secondNode.HBInterface = $hbInterface2
    $secondNode.SyncSessionCount = $syncSessionCount2
    $secondNode.ALUAOptimized = $aluaOptimized2
    $secondNode.CacheMode = $cacheMode2
    $secondNode.CacheSize = $cacheSize2
    $secondNode.FailoverStrategy = $failover
    $secondNode.CreateTarget = $createTarget2
    $secondNode.BitmapFolderPath = $bmpFolderPath2

    $device = Add-HADevice -server $server -firstNode $firstNode -secondNode $secondNode -initMethod $initMethod

    while ($device.SyncStatus -ne [SwHaSyncStatus]::SW_HA_SYNC_STATUS_SYNC)
    {
        $syncPercent = $device.GetPropertyValue("ha_synch_percent")
        Write-Host "Synchronizing: $($syncPercent)%" -foreground yellow

        Start-Sleep -m 2000

        $device.Refresh()
    }
}
catch
{
    Write-Host $_ -foreground red
}
finally
{
    $server.Disconnect()
}
```

Detailed explanation of script parameters:

-addr, -addr2 – IP addresses of the partner nodes.

Format: string. Default value: 192.168.0.1, 192.168.0.1

allowed values: localhost, IP-address

-port, -port2 — local and partner node port.

Format: string. Default value: 3261

-user, -user2 — local and partner node user name.

Format: string. Default value: root

-password, -password2 — local and partner node user password.

Format: string. Default value: starwind

#common

-initMethod – set the initialization method for the HA device

Format: string. Default value: Clear

-size – set the size of the HA device (MB)

Format: integer. Default value: 12

-sectorSize – set sector size for HA-device

Format: integer. Default value: 512

allowed values: 512, 4096

-failover – set the failover strategy type

Format: integer. Default value: 0 (Heartbeat)

allowed values: 0, 1 (Node Majority)

-bmpType – set the bitmap type (applies to both partners at once)

Format: integer. Default value: 1 (RAM)

allowed values: 1, 2 (DISK)

-bmpStrategy – set the journal strategy (applies to both partners at once)

Format: integer. Default value: 0

allowed values: 0, 1 – Best Performance (Failure), 2 – Fast Recovery (Continuous)

#primary node

-imagePath – set path to store the device file

Format: string. Default value: “My computer\C\starwind”. For Linux the following format should be used: “VSA Storage\mnt\mount_point”

-imageName – set the device name

Format: string. Default value: masterImg21

-createImage – set whether to create the image file

Format: boolean. Default value: true

-targetAlias – set alias for target

Format: string. Default value: targetha21

-poolName – set storage pool

Format: string. Default value: pool1

-aluaOptimized – set ALUA Optimized

Format: boolean. Default value: true

-cacheMode – set the L1 cache type (optional parameter)

Format: string. Default value: wb

allowed values: none, wb, wt

-cacheSize – set size for L1 cache in MB (optional parameter)

Format: integer. Default value: 128

allowed values: 1 and more

-syncInterface – set sync channel IP-address from partner node

Format: string. Default value: “#p2={0}:3260”

-hbInterface – set heartbeat channel IP-address from partner node

Format: string. Default value: “”

-createTarget – set whether to create the target

Format: boolean. Default value: true

Even if you do not specify the parameter -createTarget, the target will be created automatically.

If the parameter is set as -createTarget $false, then an attempt will be made to create the device with existing targets, the names of which are specified in the -targetAlias (targets must already be created).

-bmpFolderPath – set path to save bitmap file

Format: string.

#secondary node

-imagePath2 – set path to store the device file

Format: string. Default value: “My computer\C\starwind”. For Linux the following format should be used: “VSA Storage\mnt\mount_point”

-imageName2 – set the device name

Format: string. Default value: masterImg21

-createImage2 – set whether to create the image file

Format: boolean. Default value: true

-targetAlias2 – set alias for target

Format: string. Default value: targetha22

-poolName2 – set storage pool

Format: string. Default value: pool1

-aluaOptimized2 – set ALUA Optimized

Format: boolean. Default value: true

-cacheMode2 – set the L1 cache type (optional parameter)

Format: string. Default value: wb

allowed values: none, wb, wt

-cacheSize2 – set size for L1 cache in MB (optional parameter)

Format: integer. Default value: 128

allowed values: 1 and more

-syncInterface2 – set sync channel IP-address from partner node

Format: string. Default value: “#p1={0}:3260”

-hbInterface2 – set heartbeat channel IP-address from partner node

Format: string. Default value: “”

-createTarget2 – set whether to create the target

Format: boolean. Default value: true

Even if you do not specify the parameter -createTarget2, the target will be created automatically. If the parameter is set as -createTarget2 $false, then an attempt will be made to create the device with existing targets, the names of which are specified in the -targetAlias2 (targets must already be created).

-bmpFolderPath2 – set path to save bitmap file

Format: string.
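When the storage from the Configuring Storage section is mounted at /mnt/%yourdiskname%, the matching -imagePath and -imagePath2 value follows the “VSA Storage\mnt\mount_point” format described above. The sketch below derives that string from a Linux mount point so it can be pasted into CreateHA_2.ps1. `to_vsa_image_path` is a hypothetical helper, and it only handles mount points directly under /mnt, as used in this guide.

```shell
# Hypothetical helper: convert a single-level mount point such as /mnt/crypted1
# into the "VSA Storage\mnt\<mount_point>" form used by -imagePath/-imagePath2.
to_vsa_image_path() {
    local mp="${1%/}"
    case "$mp" in
        /mnt/*/*) ;;    # nested paths are not covered by this sketch
        /mnt/?*)
            printf 'VSA Storage\\mnt\\%s\n' "${mp#/mnt/}"
            return 0 ;;
    esac
    echo "expected a mount point directly under /mnt, got: $1" >&2
    return 1
}
```

For example, `to_vsa_image_path /mnt/crypted1` prints `VSA Storage\mnt\crypted1`, the value used in the example script.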

Selecting the Failover Strategy

StarWind provides 2 options for configuring a failover strategy:

Heartbeat

The Heartbeat failover strategy helps avoid the “split-brain” scenario, in which the HA cluster nodes are unable to synchronize but continue to accept write commands from the initiators independently. Split-brain can occur when all synchronization and heartbeat channels disconnect simultaneously and the partner nodes do not respond to the node’s requests. As a result, the StarWind service assumes the partner nodes to be offline and continues operating in single-node mode using the data written to it.

If at least one heartbeat link is online, the StarWind services can communicate with each other over this link. The device with the lowest priority is then marked as not synchronized and blocked for further read and write operations until the synchronization channel is restored. At the same time, the partner device on the synchronized node flushes data from the cache to the disk to preserve data integrity in case the node goes down unexpectedly. It is recommended to assign more independent heartbeat channels during replica creation to improve system stability and avoid the “split-brain” issue.

With the heartbeat failover strategy, the storage cluster will continue working with only one StarWind node available.

Node Majority

The Node Majority failover strategy relies on the synchronization connection alone and does not require any additional heartbeat links. The failure-handling process starts when a node detects that the connection with its partner has been lost.

The main requirement for keeping the node operational is an active connection with more than half of the HA device’s nodes. Calculation of the available partners is based on their “votes”.

In case of a two-node HA storage, all nodes will be disconnected if there is a problem on the node itself, or in communication between them. Therefore, the Node Majority failover strategy requires the addition of the third Witness node or file share (SMB) which participates in the nodes count for the majority, but neither contains data on it nor is involved in processing clients’ requests. In case an HA device is replicated between 3 nodes, no Witness node is required.

With Node Majority failover strategy, failure of only one node can be tolerated. If two nodes fail, the third node will also become unavailable to clients’ requests.
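The majority rule can be illustrated with a simple vote count. This is a simplified model for illustration only, not StarWind code, and `has_majority` is a hypothetical helper: each node or witness contributes one vote, and a node keeps serving clients only while it can count more than half of all votes, including its own.

```shell
# Simplified model of the Node Majority rule: with total_votes votes in the
# cluster (nodes plus any witness), a node stays operational only while the
# votes it can reach (including its own) are more than half of the total.
has_majority() {
    local total_votes="$1" reachable_votes="$2"
    [ $(( reachable_votes * 2 )) -gt "$total_votes" ]
}

# Two-node HA device without a witness, link to the partner lost:
#   has_majority 2 1  -> fails (1 of 2 votes is not a majority)
# Two nodes plus a witness, one node lost:
#   has_majority 3 2  -> succeeds
# Two nodes plus a witness, two votes lost:
#   has_majority 3 1  -> fails
```

This matches the behavior described above: a two-node device needs a witness to survive the loss of one node, and with three votes only one failure can be tolerated.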

Provisioning StarWind HA Storage to Hosts

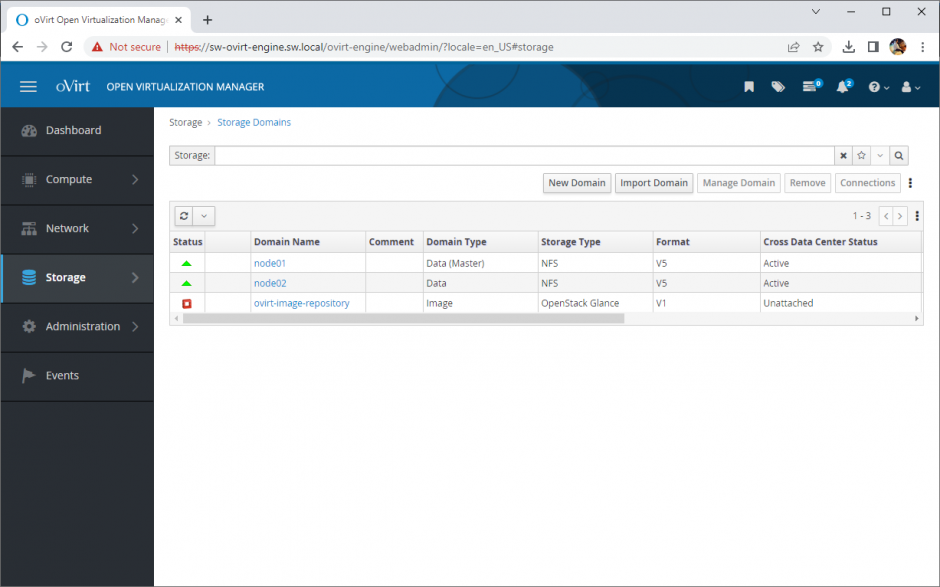

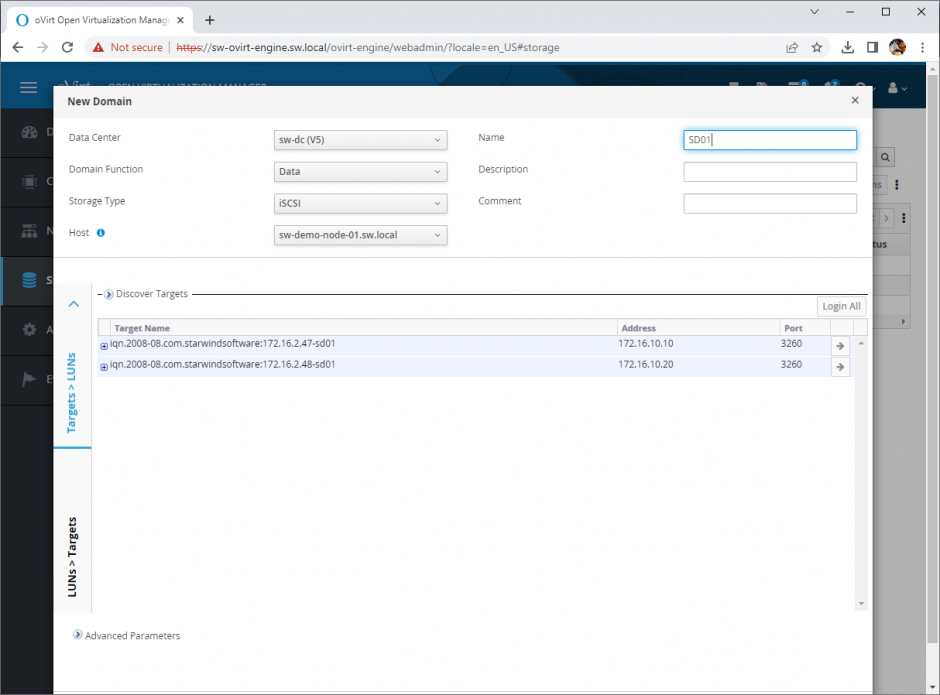

1. Log in to the oVirt Engine and open Storage -> Domains. Click New Domain.

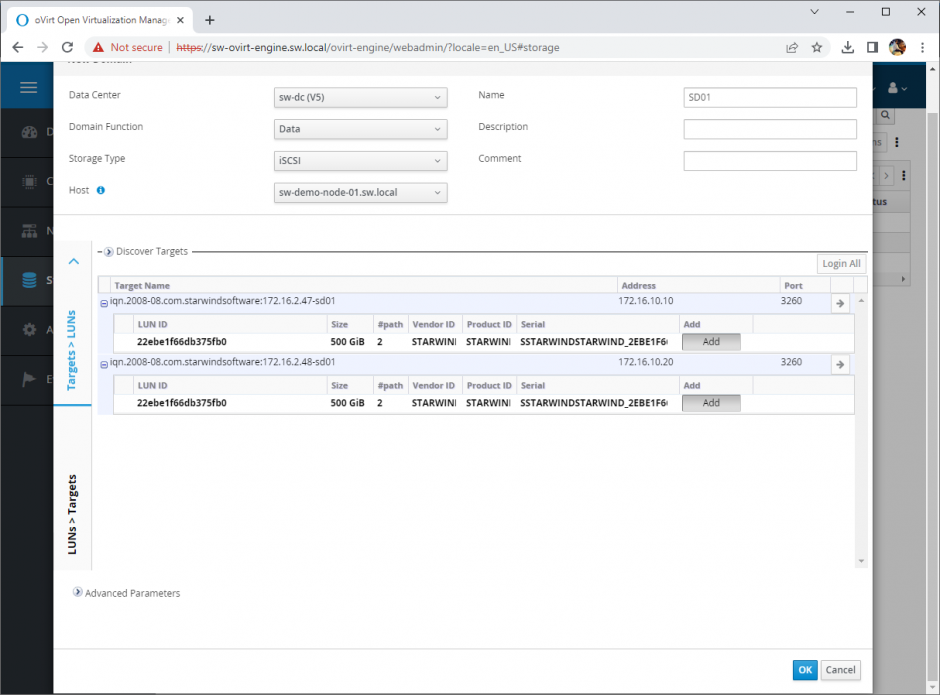

2. Select iSCSI as the Storage Type, choose the Host, and enter the Name of the storage domain. Discover the targets via the previously configured iSCSI links. Click Login All.

3. Add the LUN from each iSCSI target. Click OK.

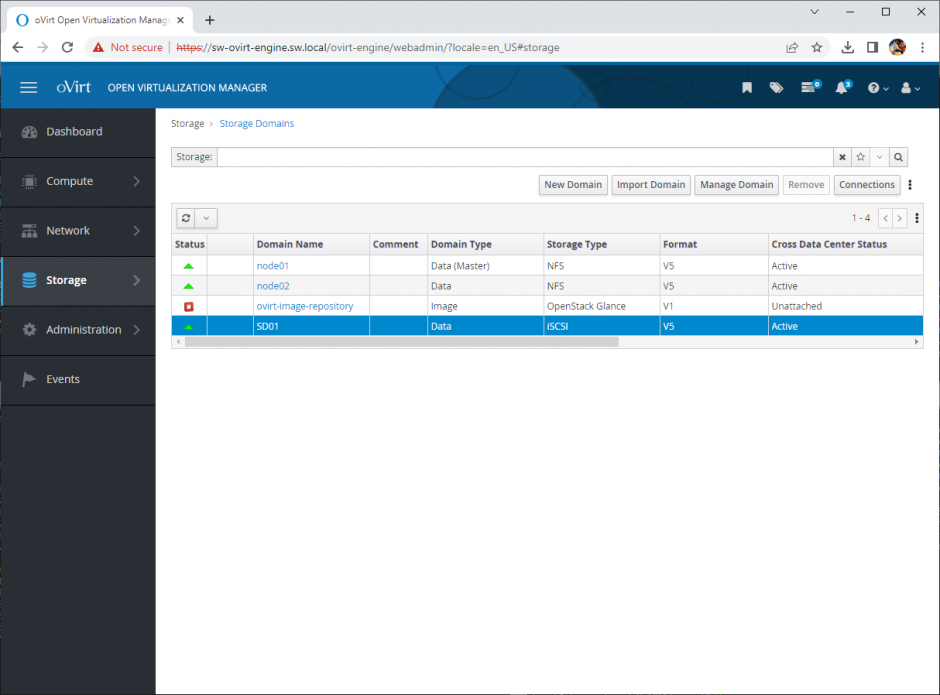

4. The storage domain will be added to the list of domains and can be used as storage for VMs.

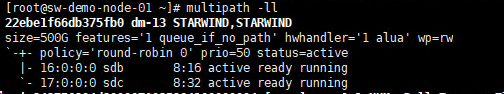

5. Log in to each host and verify that the multipathing policy has been applied using the following command:

```shell
multipath -ll
```
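On hosts with several LUNs, the `multipath -ll` output can be summarized per StarWind device. The sketch below is a minimal example that assumes the usual `multipath -ll` layout (a map header line containing the vendor,product pair, a `size=` line, then indented path lines whose state reads `active ready running`); `starwind_path_counts` is a hypothetical helper, and the exact output format may differ between multipath-tools versions.

```shell
# Hypothetical helper: read `multipath -ll` output on stdin and print
# "<map> <number of active paths>" for every map whose vendor is STARWIND.
starwind_path_counts() {
    awk '
        / STARWIND,/ { dev = $1; count[dev] = 0; next }
        /^[^ |`]/ && !/^size=/ { dev = "" }
        dev != "" && /active ready running/ { count[dev]++ }
        END { for (d in count) print d, count[d] }
    '
}
```

Usage: `multipath -ll | starwind_path_counts`. Compare each count with the number of iSCSI paths expected for that LUN; a lower number suggests that a path is down or that a target was not logged in.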

Conclusion

Deploying and configuring StarWind Virtual SAN for Oracle Linux Virtualization Manager (OLVM) is a practical option for organizations seeking a powerful KVM-based, VM-centric storage solution. This guide provides IT professionals with the information needed to set up StarWind VSAN for OLVM successfully.