StarWind Virtual SAN: Configuring HA Shared Storage for Scale-Out File Server in Windows Server 2012 R2

- December 18, 2017

INTRODUCTION

This technical paper covers the configuration of highly available shared storage for Scale-Out File Servers in Windows Server 2012 R2. It describes how to configure StarWind Virtual SAN, create Cluster Shared Volumes (CSVs), and build the Scale-Out File Server (SoFS), which keeps server application data on file shares accessed over the SMB protocol and makes files continuously available to end users. Backed by the reliability of Virtual SAN, this architecture is designed to keep file shares available and accessible to the clustered nodes and the VMs in the cluster.

StarWind Virtual SAN® is a hardware-less storage solution that creates a fault-tolerant, high-performing storage pool built for virtualization workloads by mirroring the existing servers’ storage and RAM between the participating storage cluster nodes. The mirrored storage resource is then treated just like local storage. StarWind Virtual SAN simplifies the configuration of highly available shared storage for SoFS and delivers excellent performance and advanced data protection features.

This guide is intended for experienced Windows Server users and system administrators. It provides detailed instructions on how to configure HA shared storage for Scale-Out File Server in Windows Server 2012 R2 with StarWind Virtual SAN as the storage provider.

A full set of up-to-date technical documentation can always be found here, or by pressing the Help button in the StarWind Management Console.

For any technical inquiries, please visit our online community or Frequently Asked Questions page, or use the support form to contact our technical support department.

Pre-Configuring the Servers

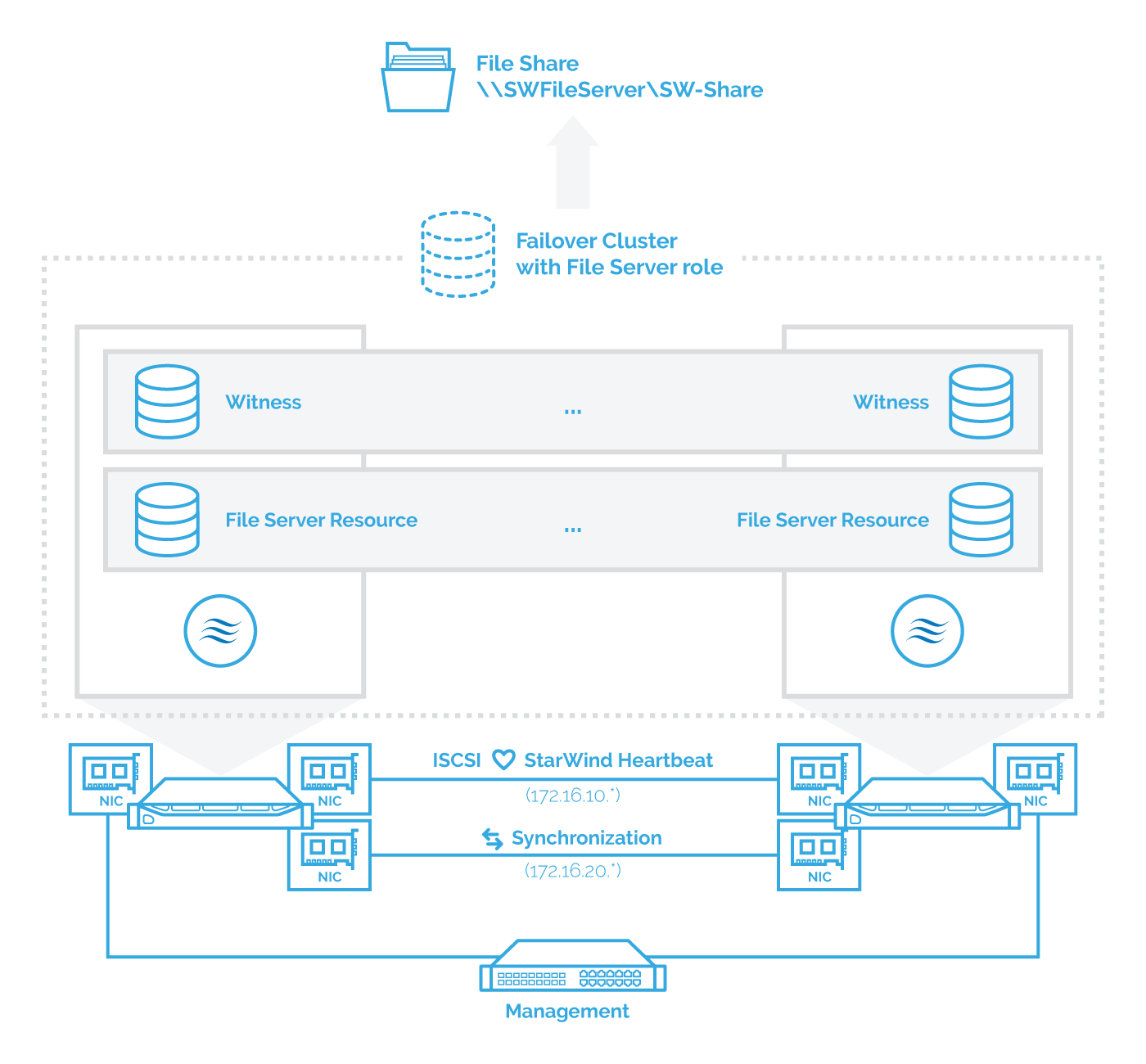

The reference network diagram of the configuration described further in this guide is provided below.

NOTE: Additional network connections may be necessary, depending on the cluster setup and applications requirements. For any technical help with configuring the additional networks, please, do not hesitate to contact StarWind support department via online community forum, or via support form (depends on your support plan).

1. Make sure that you have a domain controller and you have added the servers we are configuring to the domain.

2. Install Failover Clustering, Multipath I/O features, and the Hyper-V role on both servers. That can be done through Server Manager (Add Roles and Features menu item).

3. Configure network interfaces on each node to make sure that Synchronization and iSCSI/StarWind heartbeat interfaces are in different subnets and connected according to the network diagram above.

In this document, the 172.16.10.x subnet is used for iSCSI/StarWind heartbeat traffic, while the 172.16.20.x subnet is used for Synchronization traffic.
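The subnet separation required in step 3 can be sanity-checked before cabling or addressing mistakes surface later. The following is a minimal sketch, not part of the StarWind tooling: the function name, the host addresses, and the /24 prefix length are assumptions chosen for illustration, since the guide only names the subnets as 172.16.10.x and 172.16.20.x.

```python
import ipaddress


def in_different_subnets(addr_a: str, addr_b: str, prefix: int = 24) -> bool:
    """Return True if the two addresses fall into different subnets.

    The /24 default prefix is an assumption; adjust it to the real mask.
    """
    net_a = ipaddress.ip_interface(f"{addr_a}/{prefix}").network
    net_b = ipaddress.ip_interface(f"{addr_b}/{prefix}").network
    return net_a != net_b


# Hypothetical host addresses inside the subnets named in this guide.
iscsi_heartbeat_ip = "172.16.10.1"
synchronization_ip = "172.16.20.1"

print(in_different_subnets(iscsi_heartbeat_ip, synchronization_ip))  # True
```

Running the check for each node's pair of interfaces should report True; a False result means the two interfaces share a subnet and the addressing should be revisited.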

4. To allow iSCSI initiators to discover all StarWind Virtual SAN interfaces, edit the StarWind configuration file (StarWind.cfg). Stop the StarWind service on the node before editing the file.

Locate the StarWind Virtual SAN configuration file (the default path is C:\Program Files\StarWind Software\StarWind\StarWind.cfg) and open it with WordPad as Administrator.

Find the string and change the value from 0 to 1 (should look as follows: ). Save the changes and exit WordPad. Once StarWind.cfg is changed and saved, the StarWind service can be started.

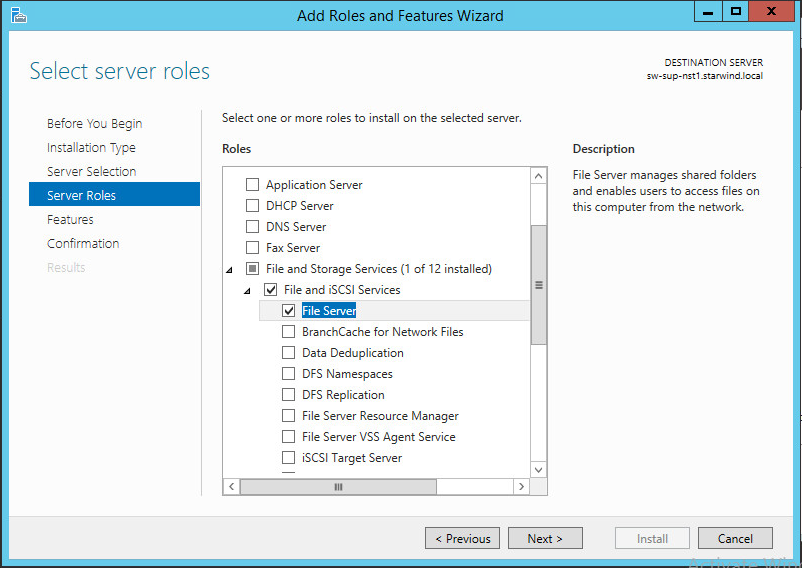

Installing File Server Role

5. Open Server Manager: Start -> Server Manager.

6. Select: Manage -> Add Roles and Features

7. Follow the Wizard’s steps to install the selected role.

NOTE: Restart the server after installation is completed.

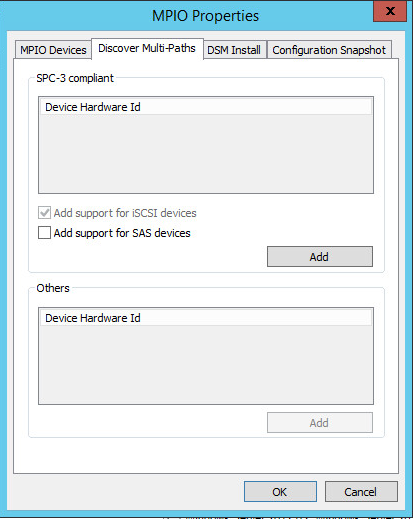

Enabling Multipath Support

8. On the cluster nodes, open the MPIO manager: Start -> Administrative Tools -> MPIO.

9. Go to the Discover Multi-Paths tab.

10. Tick the Add support for iSCSI devices checkbox and click Add.

11. When prompted to restart the server, click Yes to proceed.

NOTE: Repeat the procedure on the second server.

Downloading, Installing, and Registering the Software

12. Download the StarWind setup executable file from our website by following this link:

https://www.starwind.com/registration-starwind-virtual-san

NOTE: The setup file is the same for x86 and x64 systems, as well as for all Virtual SAN deployment scenarios.

13. Launch the downloaded setup file on the server where you wish to install StarWind Virtual SAN or one of its components. The setup wizard will appear:

14. Read and accept the License Agreement.

Click Next to continue.

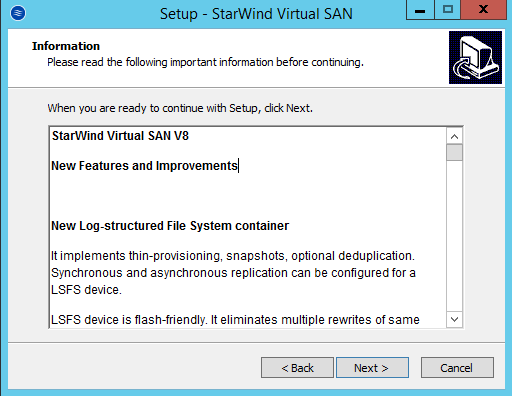

15. Carefully read the information about new features and improvements. Red text indicates warnings for users who are updating existing software installations.

Click Next to continue.

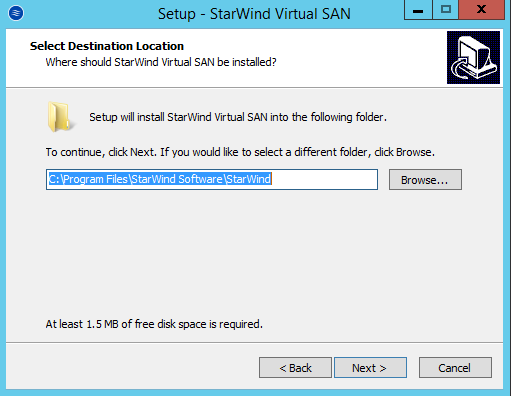

16. Click Browse to modify the installation path if necessary.

Click Next to continue.

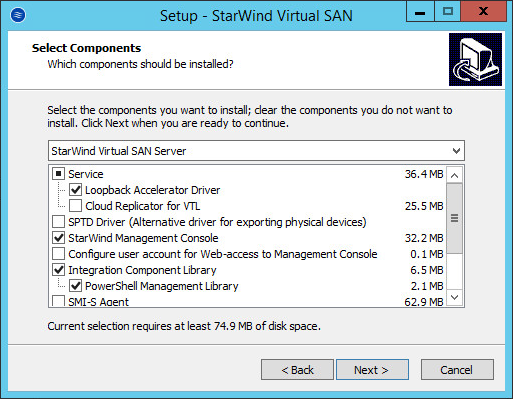

17. Select the following components for the minimum setup:

- StarWind Virtual SAN Service

StarWind Service is the core of the software. It can create iSCSI targets as well as share virtual and physical devices. The service can be managed from StarWind Management Console on any Windows computer or VSA that is on the same network. Alternatively, the service can be managed from StarWind Web Console, deployed separately.

- StarWind Management Console

The Management Console is the Graphical User Interface (GUI) part of the software that controls and monitors all storage-related operations (e.g., allows users to create targets and devices on StarWind Virtual SAN servers connected to the network).

Click Next to continue.

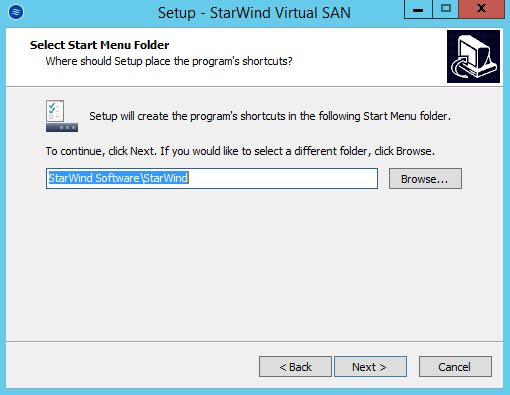

18. Specify the Start Menu folder.

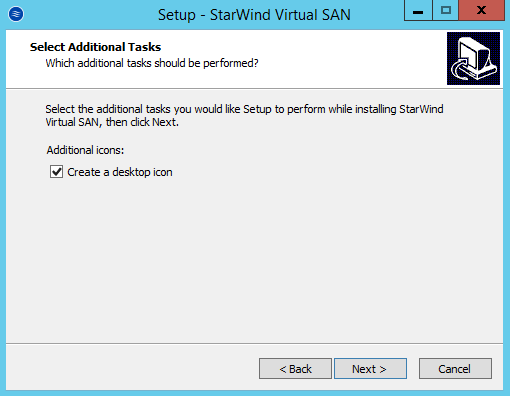

19. Enable the checkbox if you want to create a desktop icon.

Click Next to continue.

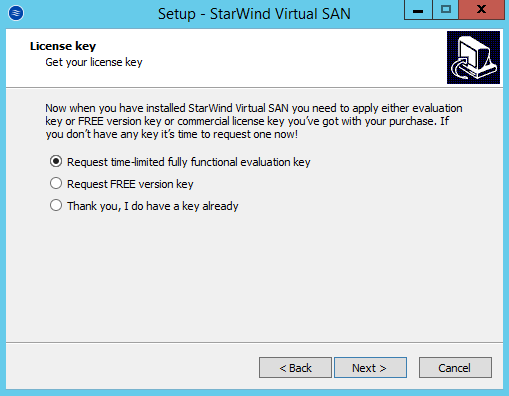

20. You will be asked to request a time-limited, fully functional evaluation key, a FREE version key, or a full commercial license key sent to you with the purchase of StarWind Virtual SAN. Select the appropriate option.

Click Next to continue.

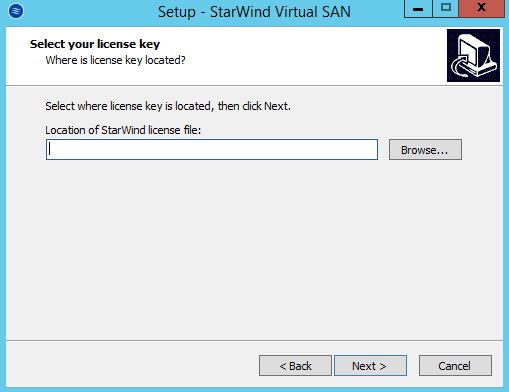

21. Click Browse to locate the license file.

Click Next to continue.

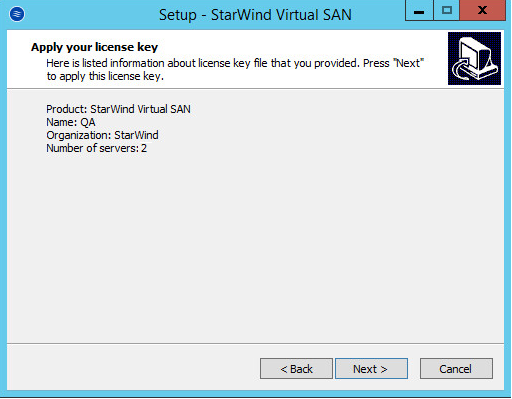

22. Review the licensing information.

Click Next to apply the license key.

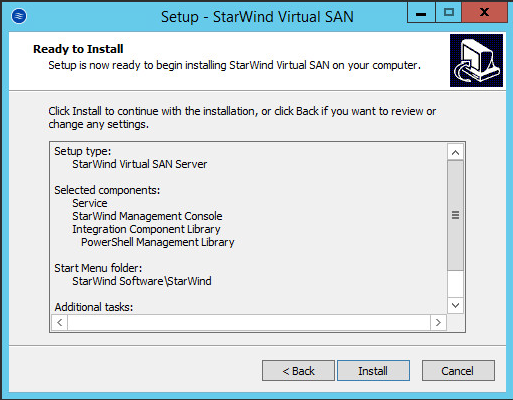

23. Verify the installation settings. Click Back to make any changes, or Install to continue.

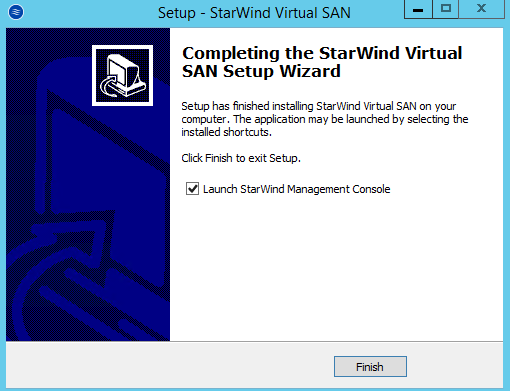

24. Select the appropriate checkbox to launch the StarWind Management Console immediately after the setup wizard is closed.

Click Finish to close the wizard.

25. Repeat the installation steps on the partner node.

NOTE: To manage StarWind Virtual SAN installed on a Server Core OS edition, StarWind Management Console must be installed on a different computer running a GUI-enabled Windows edition.

Configuring Shared Storage

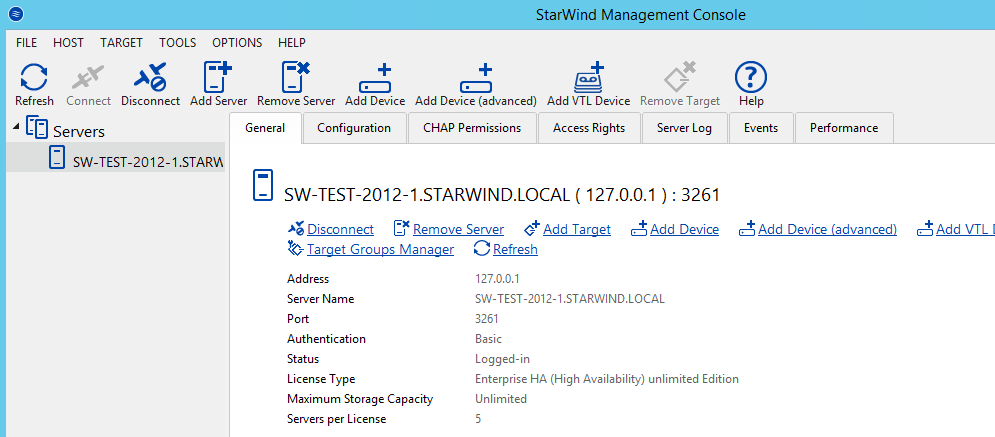

26. Launch StarWind Management Console by double-clicking the StarWind tray icon.

NOTE: StarWind Management Console cannot be installed on an operating system without a GUI. You can install it on Windows 7, 8, 8.1, and 10, and on GUI-enabled editions of Windows Server 2008 R2, 2012, 2012 R2, and 2016.

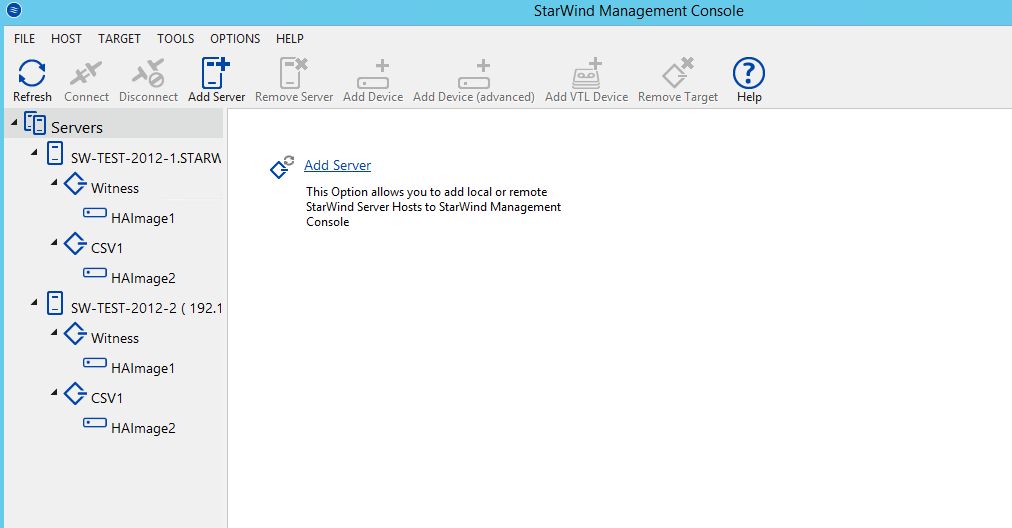

If StarWind Service and Management Console are installed on the same server, the Management Console will automatically add the local StarWind node to the Console after the first launch. Then, Management Console automatically connects to it using the default credentials. To add remote StarWind servers to the console, use the Add Server button on the control panel.

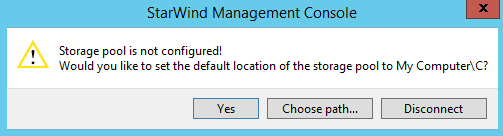

27. StarWind Management Console will ask you to specify the default storage pool on the server you are connecting to for the first time. Configure the default storage pool to use one of the volumes you have prepared earlier. All the devices created through the Add Device wizard will be stored on it. If you decide to use an alternative storage pool for your StarWind virtual disks, use the Add Device (advanced) menu item.

Press the Yes button to configure the storage pool. If you need to change the storage pool destination, press Choose path… and point the browser to the necessary disk.

NOTE: Each array used by StarWind Virtual SAN to store virtual disk images should meet the following requirements:

- be initialized as GPT;

- have a single NTFS-formatted partition;

- have a drive letter assigned.

The steps below describe how to prepare an HA device for the Witness drive. Other devices should be created in the same way.

28. Select either of two StarWind servers to start the device creation and configuration.

29. Press the Add Device (advanced) button on the toolbar.

30. Add Device Wizard will appear. Select Hard Disk Device and click Next.

31. Select the Virtual disk and click Next.

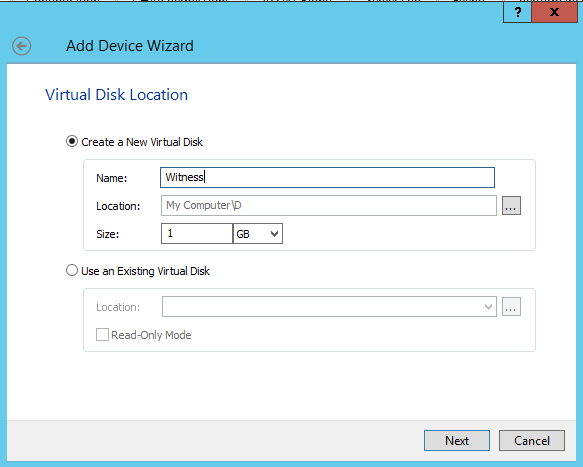

32. Specify the virtual disk name, location, and size and click Next.

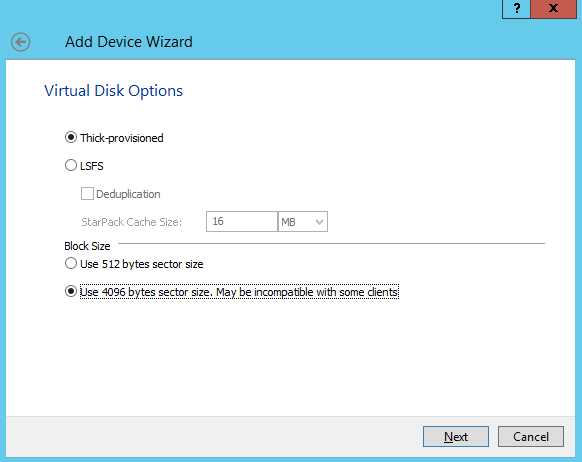

33. Choose the type of StarWind device (Thick-provisioned, or LSFS device), specify virtual block size and click Next.

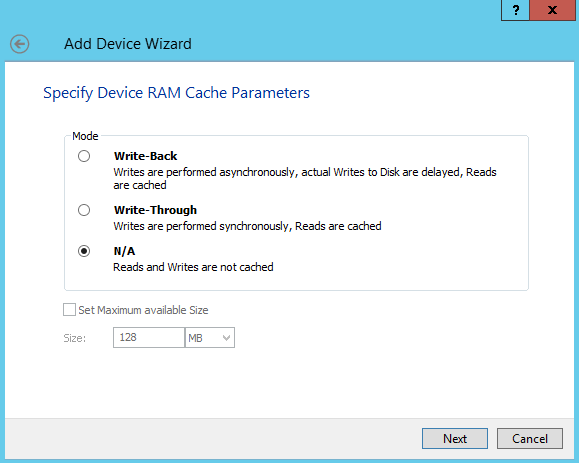

34. Define the caching policy, specify the cache size, and click Next.

NOTE: If L1 cache is needed, it is recommended to assign 1 GB of L1 cache in Write-Back or Write-Through mode per 1 TB of storage capacity.

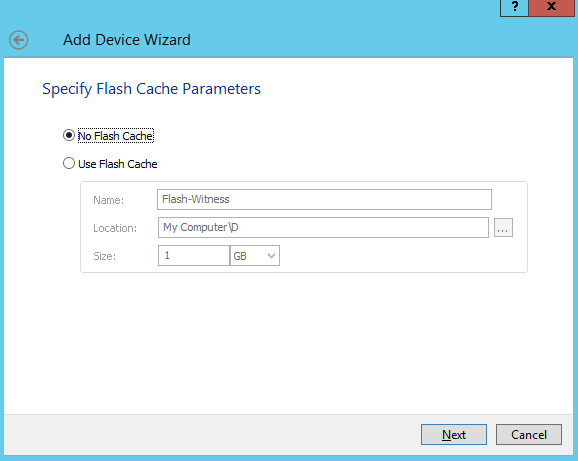

35. Define the Flash Cache Parameters policy and size if necessary. Choose an SSD location in the wizard. Click Next to continue.

NOTE: The recommended size of the L2 cache is 10% of the initial StarWind device capacity.
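The two cache-sizing recommendations above (1 GB of L1 cache per 1 TB of storage, and L2 cache at 10% of the initial device capacity) reduce to simple arithmetic. The sketch below only illustrates that arithmetic; the function names, the 2 TB example device, and the 1 TB = 1024 GB conversion are assumptions, not values taken from StarWind documentation.

```python
def recommended_l1_cache_gb(capacity_tb: float) -> float:
    """L1 (RAM) cache: 1 GB per 1 TB of storage capacity."""
    return capacity_tb * 1.0


def recommended_l2_cache_gb(device_capacity_gb: float) -> float:
    """L2 (flash) cache: 10% of the initial device capacity."""
    return device_capacity_gb / 10


# Hypothetical 2 TB device; 1 TB is taken as 1024 GB here (an assumption).
capacity_tb = 2
capacity_gb = capacity_tb * 1024

print(recommended_l1_cache_gb(capacity_tb))  # 2.0
print(recommended_l2_cache_gb(capacity_gb))  # 204.8
```

For the example device this suggests roughly 2 GB of RAM for L1 cache and about 205 GB of SSD space for L2 cache, which helps confirm that the hosts have enough free memory and flash before the wizard is completed.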

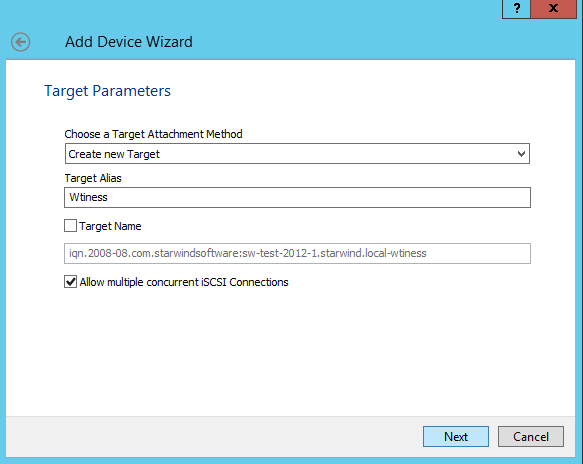

36. Specify the target parameters.

Select the Target Name checkbox to enter a custom name of the target. Otherwise, the name will be generated automatically based on the target alias. Click Next to continue.

37. Click Create to add a new device and assign it to the target. Then, click Close to close the wizard.

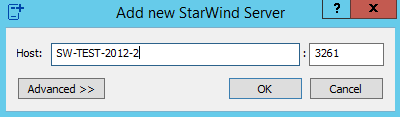

38. Right-click the servers field and click the Add Server button. Add new StarWind Server which will be used as the partner HA node.

Press OK and Connect buttons to continue.

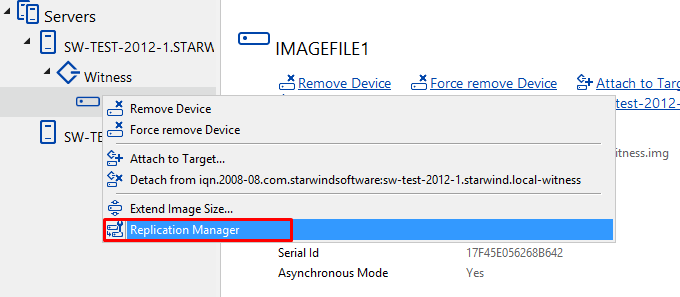

39. Right-click the device you have just created and select Replication Manager.

Replication Manager Window will appear. Press the Add Replica button.

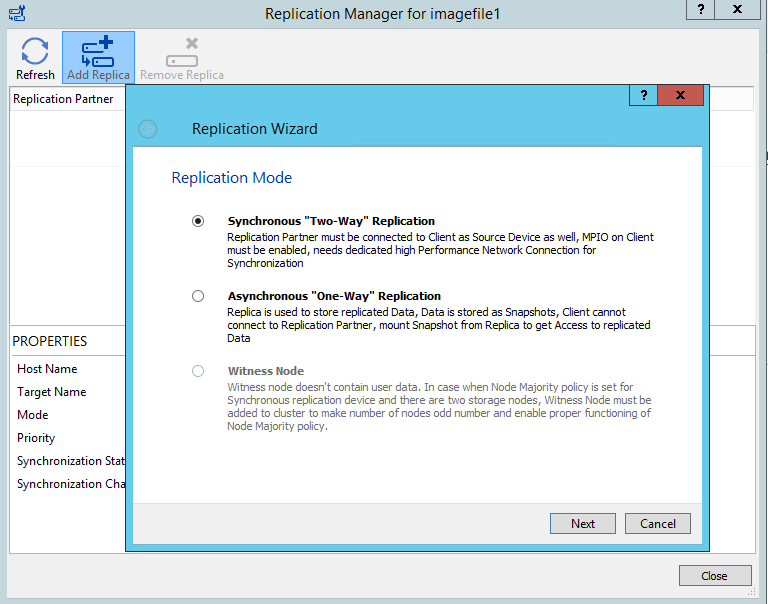

40. Select Synchronous two-way replication and click Next to proceed.

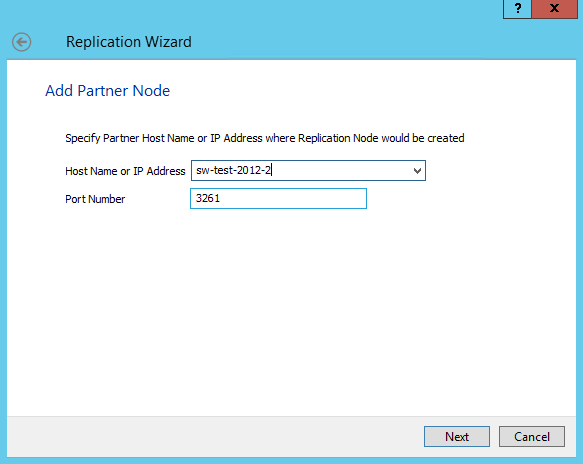

41. Specify the partner server IP address.

Default StarWind management port is 3261. If you have configured a different port, make sure to change the Port Number value parameter accordingly. Click Next.
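If the wizard cannot reach the partner server, a quick TCP reachability test against the management port can help separate firewall or routing problems from StarWind configuration problems. This is a minimal sketch using only the Python standard library; the function name and the timeout value are assumptions, and the only value taken from this guide is the default port 3261.

```python
import socket

STARWIND_MGMT_PORT = 3261  # default management port stated in this guide


def is_port_reachable(host: str, port: int = STARWIND_MGMT_PORT,
                      timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

For example, calling `is_port_reachable("172.16.10.2")` from the first node (the address is a hypothetical partner IP) returns False when the port is blocked or the service is not running. A True result only shows that something accepts TCP connections on that port, not that it is a healthy StarWind service.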

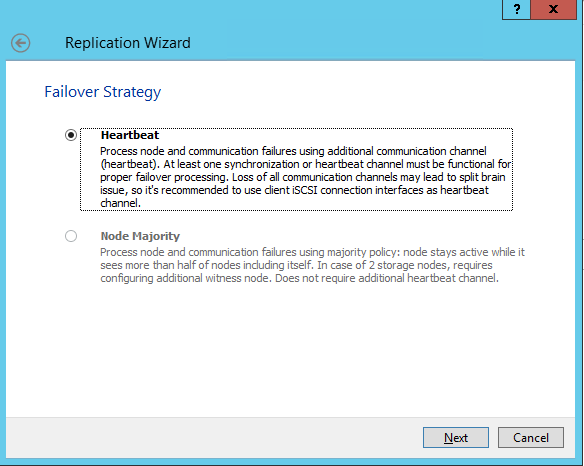

42. Select Heartbeat Failover Strategy and click Next.

43. Choose Create new Partner Device and click Next.

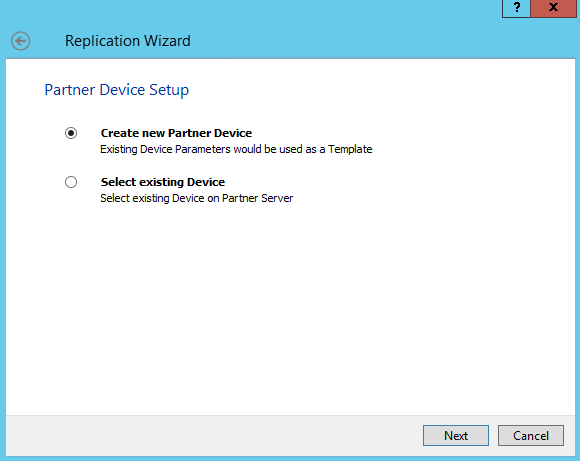

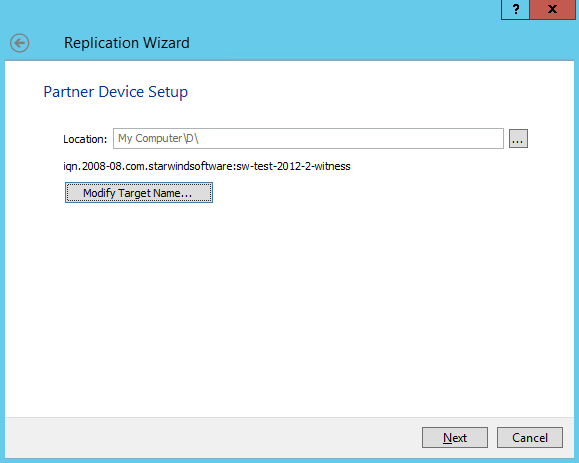

44. Specify the partner device location or the target name of the device if necessary.

Click Next.

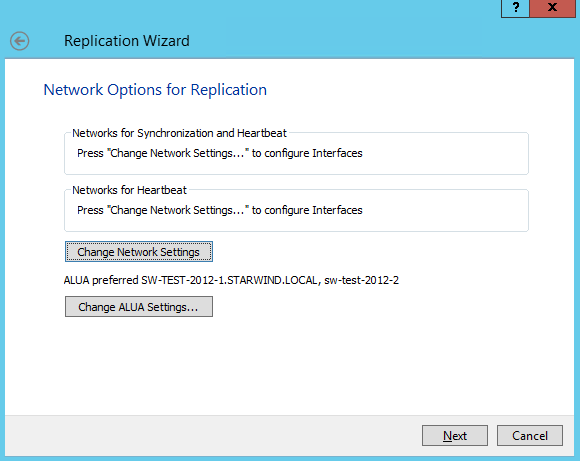

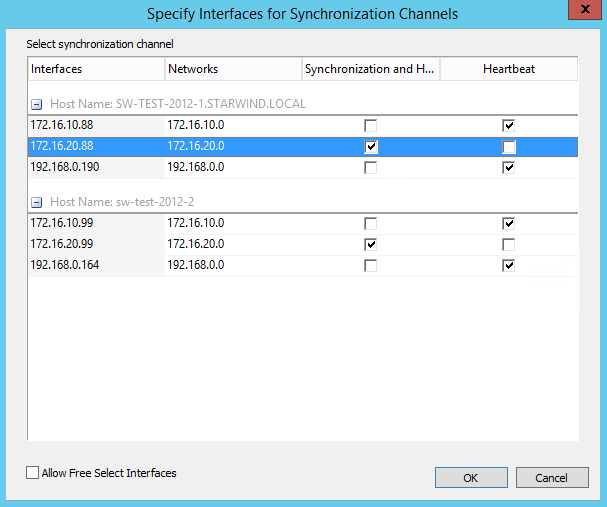

45. Select synchronization and heartbeat channels for the HA device by clicking the Change network settings button.

46. Specify the interfaces for synchronization and Heartbeat. Click OK. Then click Next.

NOTE: It is recommended to configure the Heartbeat and iSCSI channels on the same interfaces to avoid the split-brain issue. If the Synchronization and Heartbeat interfaces are located on the same network adapter, it is recommended to assign one more Heartbeat interface to a separate adapter.

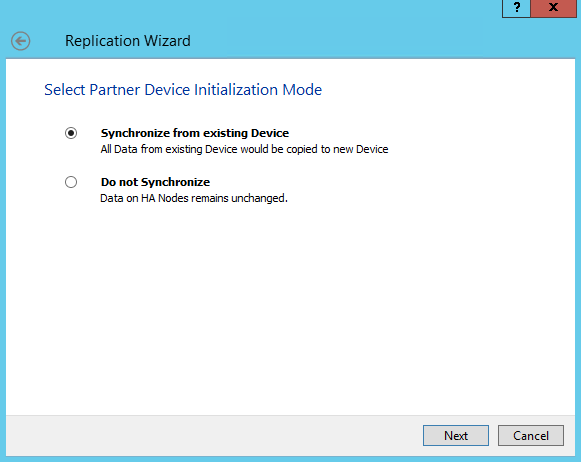

47. Select Synchronize from existing Device as a partner device initialization mode and click Next.

48. Press the Create Replica button. Then click Close to close the wizard.

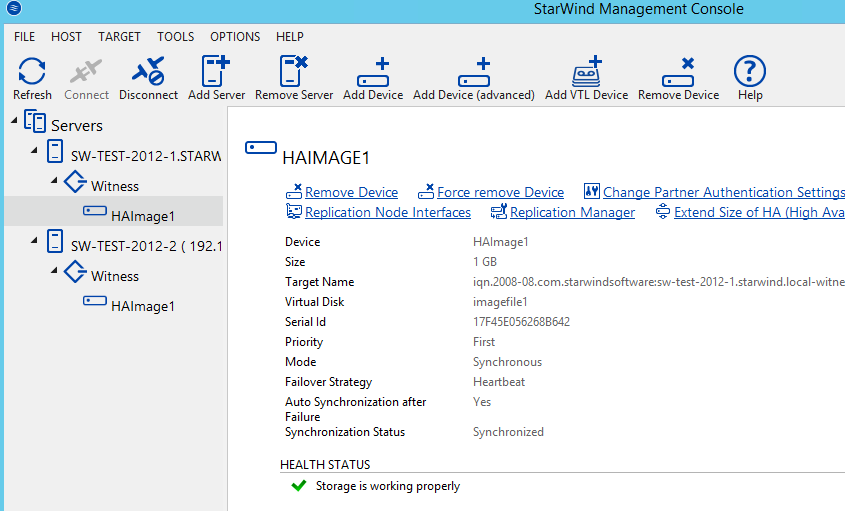

49. The added devices will appear in the StarWind Management Console.

Repeat the steps above to create other virtual disks if necessary.

Once all devices are created, the Management Console should look as follows.

Discovering Target Portals

In this section, we discuss how to discover Target Portals on each StarWind node.

50. Launch Microsoft iSCSI Initiator on the first StarWind node: Start > Administrative Tools > iSCSI Initiator, or run iscsicpl from the command line. The iSCSI Initiator Properties window will appear.

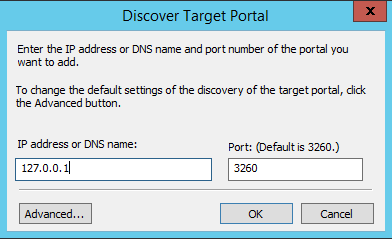

51. Navigate to the Discovery tab. Click the Discover Portal button. In Discover Target Portal dialog box, enter the local IP address – 127.0.0.1.

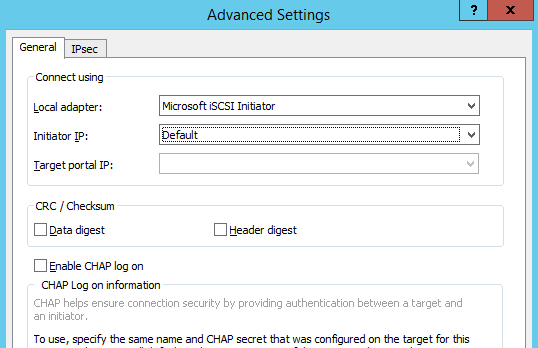

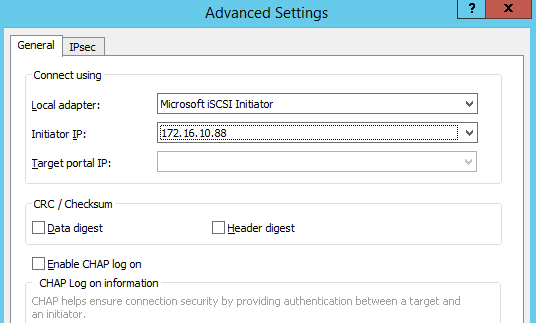

52. Click the Advanced button, select Microsoft iSCSI Initiator as your Local adapter and keep Initiator IP as it is set by default. Press OK twice to complete the Target Portal discovery.

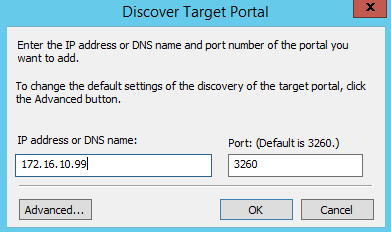

53. Click the Discover Portal button once again.

54. In the Discover Target Portal dialog box, enter the iSCSI IP address of the partner node and click the Advanced button.

55. Select Microsoft iSCSI Initiator as a Local adapter, and select the initiator IP address from the same subnet. Click OK twice to add the Target Portal.

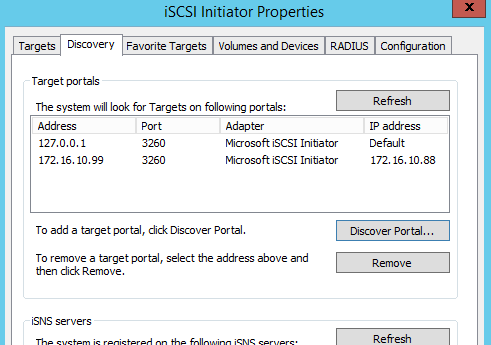

56. Target portals are added on the local node.

57. Go through the same steps on the partner node.

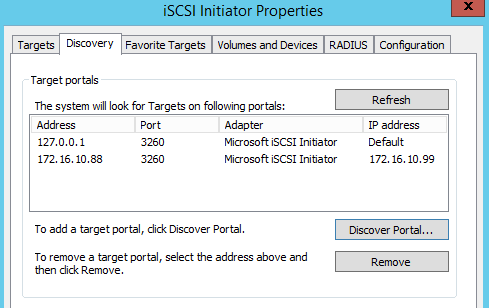

58. All target portals are added to the partner node.

Connecting Targets

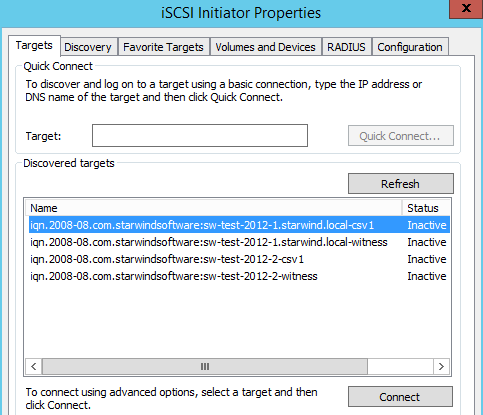

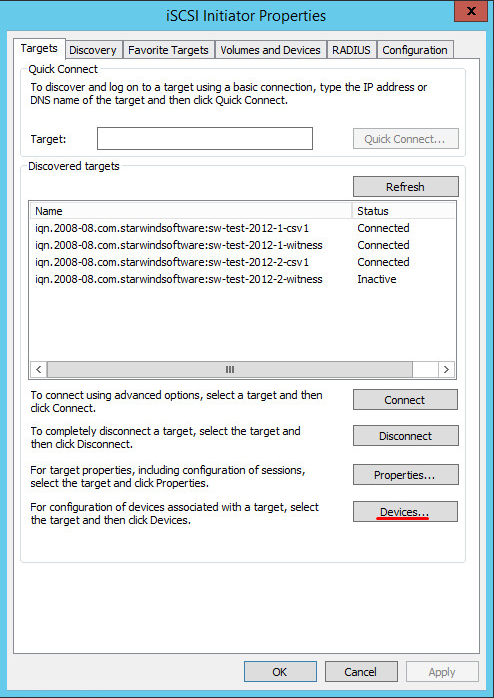

59. Launch Microsoft iSCSI Initiator on the first StarWind node and click on the Targets tab. The previously created targets should be listed in the Discovered Targets section.

NOTE: If the created targets are not listed, check the firewall settings of the StarWind Server and the list of networks served by the StarWind Server (go to StarWind Management Console -> Configuration -> Network).

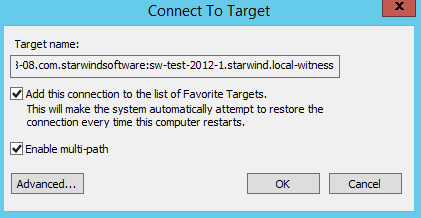

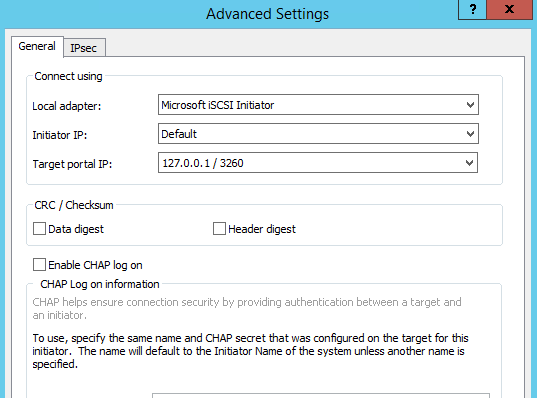

60. Select a target for the Witness device, discovered from the local server and click Connect.

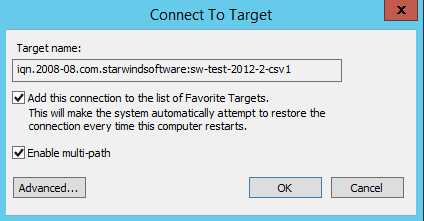

61. Enable the checkboxes as shown in the image below and click Advanced.

62. Select Microsoft iSCSI Initiator in the Local adapter text field.

Select 127.0.0.1 in the Target portal IP list.

Click OK twice to connect the target.

NOTE: It is recommended to connect Witness device only by loopback (127.0.0.1) address. Do not connect the target to the Witness device from the partner StarWind node.

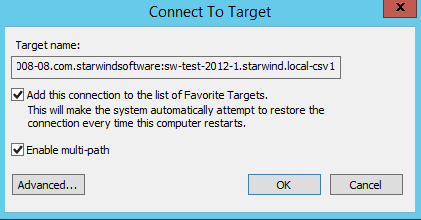

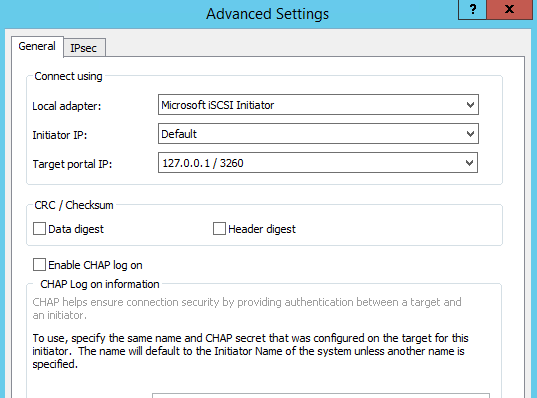

63. Select the target for the CSV1 device discovered from the local server and click Connect.

64. Enable the checkboxes as shown in the image below and click Advanced.

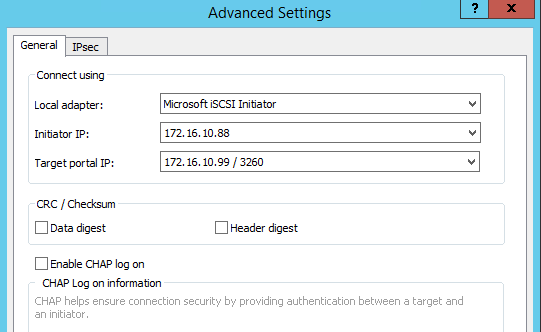

65. Select Microsoft iSCSI Initiator in the Local adapter text field.

Select 127.0.0.1 in the Target portal IP area.

Click OK twice to connect the target.

66. Select the target for the CSV device discovered from the partner StarWind node and click Connect.

67. Enable the checkboxes as shown in the image below and click Advanced.

68. Select Microsoft iSCSI Initiator in the Local adapter text field.

69. In Target portal IP, select the IP address for the iSCSI channel on the partner StarWind Node and Initiator IP address from the same subnet. Click OK twice to connect the target.

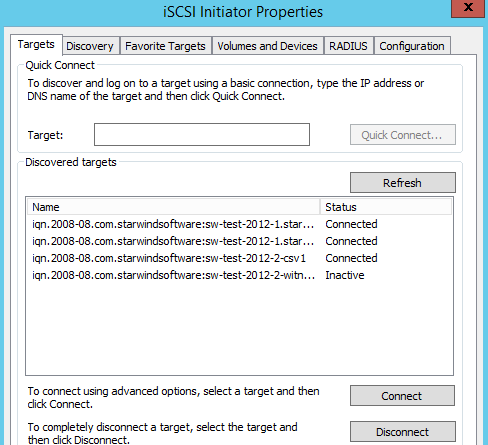

70. Repeat the above actions for all HA device targets. The result should look like in the picture below.

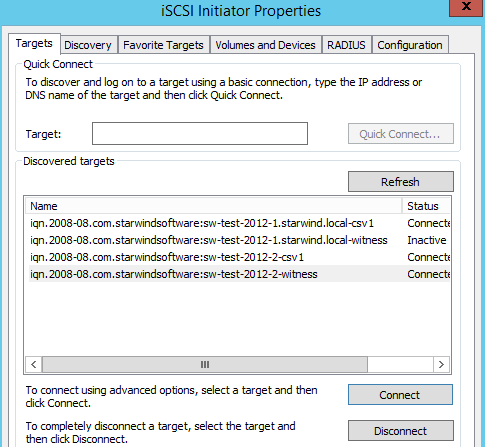

71. Repeat the steps described in this section on partner StarWind node, specifying corresponding IP addresses for the iSCSI channel. The result should look like in the screenshot below.

Multipath Configuration

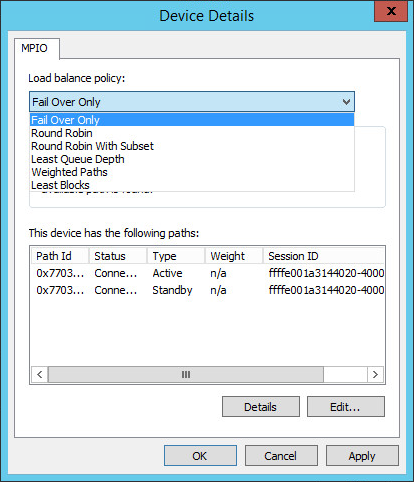

NOTE: It is recommended to configure different MPIO policies depending on the iSCSI channel throughput. For a 1 Gbps iSCSI channel, set the Failover Only MPIO policy. For a 10 Gbps iSCSI channel, set the Round Robin or Least Queue Depth MPIO policy.
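The throughput-based recommendation in the note above can be written down as a small lookup, which is handy when documenting a rollout across several clusters. This is a sketch only: the function name is hypothetical, and the guide names just the 1 Gbps and 10 Gbps cases, so treating every link below 10 Gbps as Failover Only and every faster link as Round Robin or Least Queue Depth is an assumption.

```python
def recommended_mpio_policy(iscsi_gbps: float) -> str:
    """Map iSCSI channel throughput to the MPIO policy suggested in this guide.

    Only 1 Gbps and 10 Gbps are named in the guide; the handling of other
    speeds is an assumption made for this sketch.
    """
    if iscsi_gbps >= 10:
        return "Round Robin or Least Queue Depth"
    return "Failover Only"


print(recommended_mpio_policy(1))   # Failover Only
print(recommended_mpio_policy(10))  # Round Robin or Least Queue Depth
```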

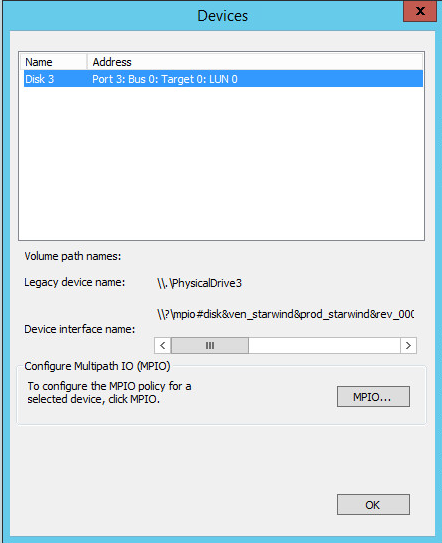

72. With Failover Only MPIO policy, it is recommended to set localhost (127.0.0.1) as the active path. Select a target located on the local server and click Devices.

73. The Devices dialog appears. Click MPIO.

74. Select Fail Over Only load balance policy, and then designate the local path as active.

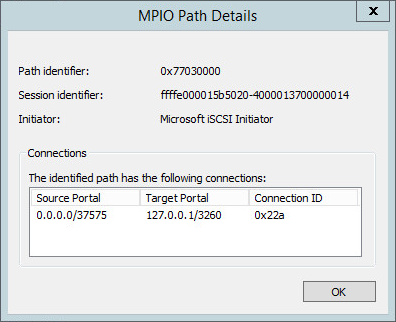

75. You can verify that 127.0.0.1 is the active path by selecting it from the list and clicking Details.

76. Repeat the same steps for each device on both nodes.

77. The Round Robin or Least Queue Depth MPIO policy can be set in the Device Details window.

78. Initialize the disks and create partitions on them using the Disk Management snap-in. The disk devices are required to be initialized and formatted on both nodes in order to create the cluster.

NOTE: It is recommended to initialize the disks as GPT.

Creating a Cluster

NOTE: To avoid issues during cluster configuration validation, it is recommended to install the latest Microsoft updates on each node.

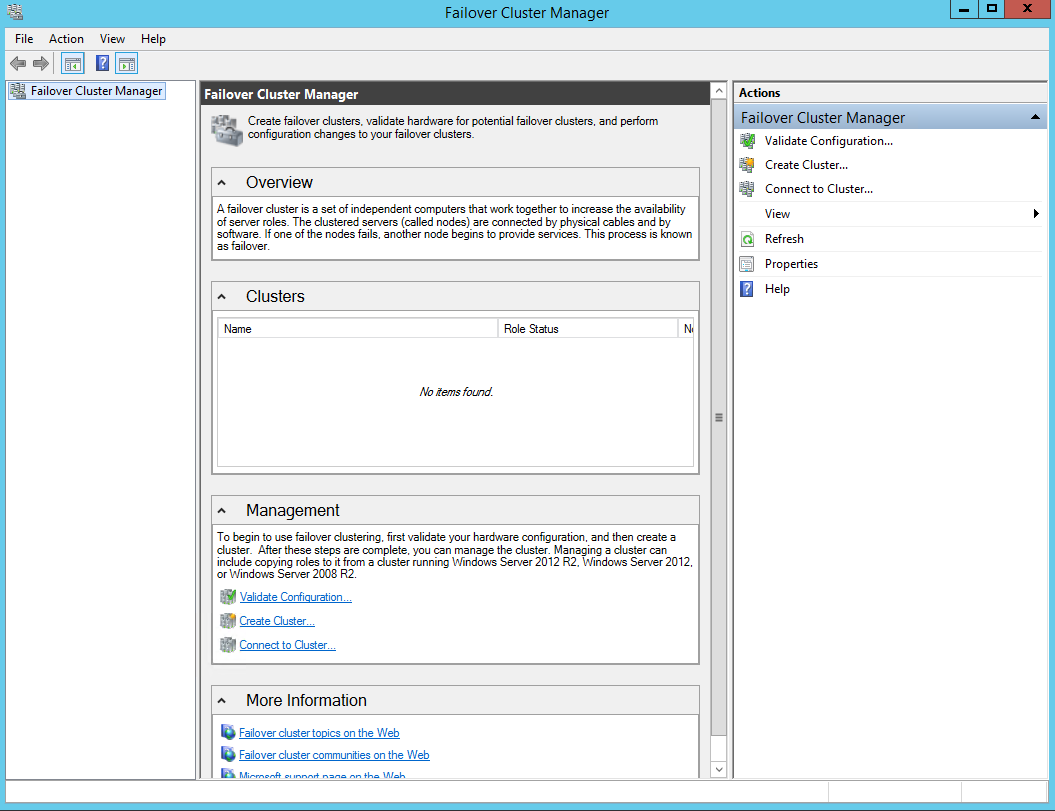

79. Open Server Manager. Select the Failover Cluster Manager item from the Tools menu.

80. Click the Create Cluster link in the Actions section of the Failover Cluster Manager.

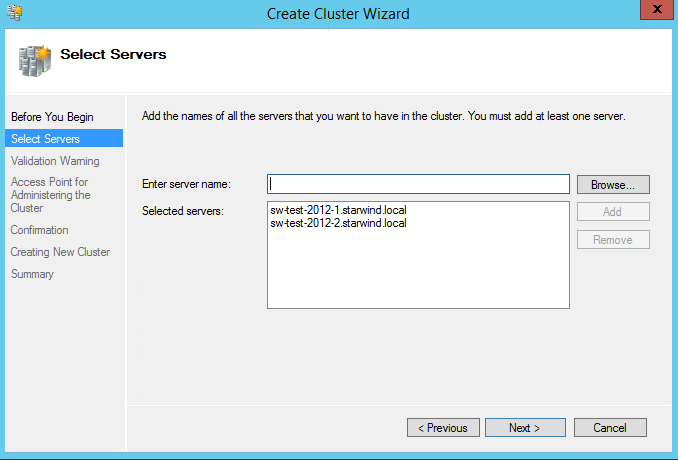

81. Specify the servers which are to be added to the cluster.

Click Next to continue.

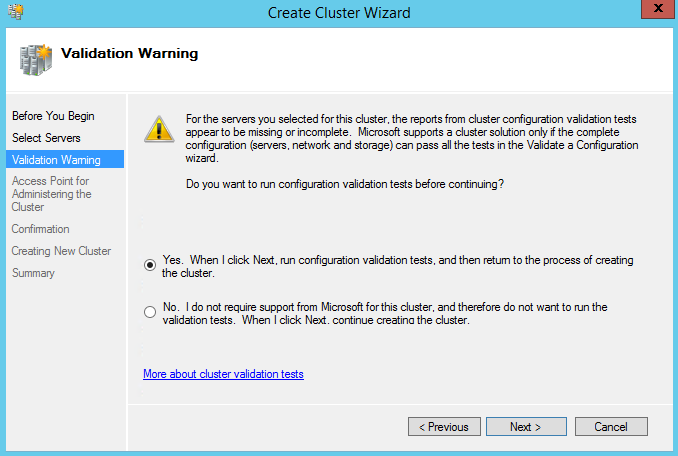

82. Validate the configuration by running the cluster validation tests: select “Yes…” and click Next to continue.

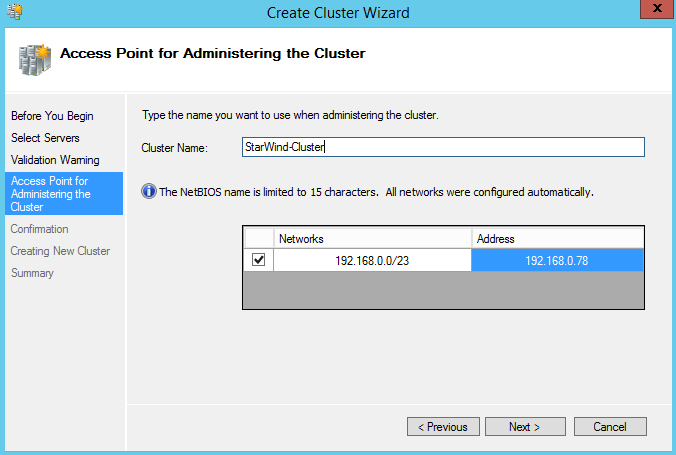

83. Specify the cluster name.

NOTE: If the cluster servers get IP addresses over DHCP, the cluster also gets its IP address over DHCP. If the IP addresses are set statically, you will be prompted to set the cluster IP address manually.

Click Next to continue.

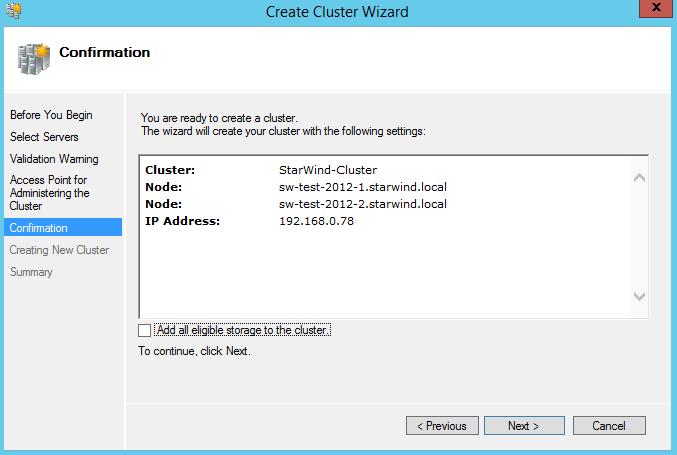

84. Make sure that all the settings are correct. Click Previous to change the settings (if necessary):

NOTE: If the “Add all eligible storage to the cluster” checkbox is enabled, the wizard will add all disks to the cluster automatically, and the device with the smallest storage volume will be assigned as the Witness. It is recommended to uncheck it before you click Next, and then add the cluster disks and the Witness drive manually.
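The reason for adding disks manually becomes clearer with a small model of the automatic behavior described in the note above. The sketch below simply picks the smallest disk, as the note states the wizard does; the disk names and sizes are hypothetical, and how the real wizard breaks ties between equally sized disks is not covered by this guide.

```python
from typing import Dict


def auto_assigned_witness(disk_sizes_gb: Dict[str, float]) -> str:
    """Return the disk that would become the Witness if the smallest wins."""
    return min(disk_sizes_gb, key=disk_sizes_gb.get)


# Hypothetical layout in which a data disk is smaller than the intended Witness.
disks = {"Witness": 5, "CSV1": 2, "CSV2": 500}

print(auto_assigned_witness(disks))  # CSV1
```

In this hypothetical layout the automatic choice lands on CSV1 rather than the disk prepared as the Witness, which is the kind of outcome that manual assignment avoids.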

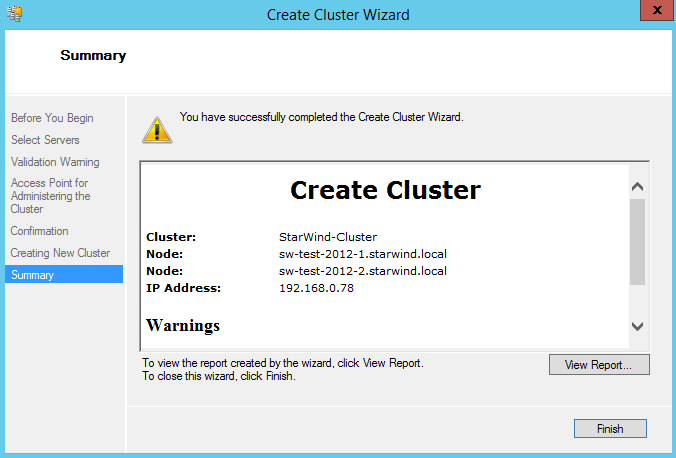

85. The process of cluster creation starts. Upon completion, the system displays a summary with detailed information.

Click Finish to close the wizard.

Adding Witness and Cluster Shared Volumes

Follow these steps to add Cluster Shared Volumes (CSV) that are necessary for working with Hyper-V virtual machines:

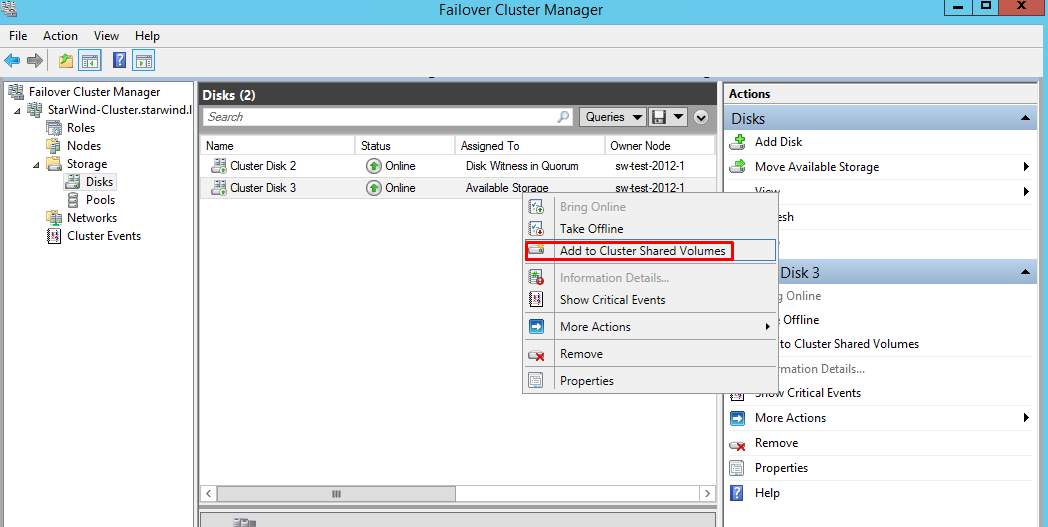

86. Open Failover Cluster Manager.

87. Go to Cluster -> Storage -> Disks.

88. Click Add Disk in the Actions panel, choose StarWind disks from the list, and click OK.

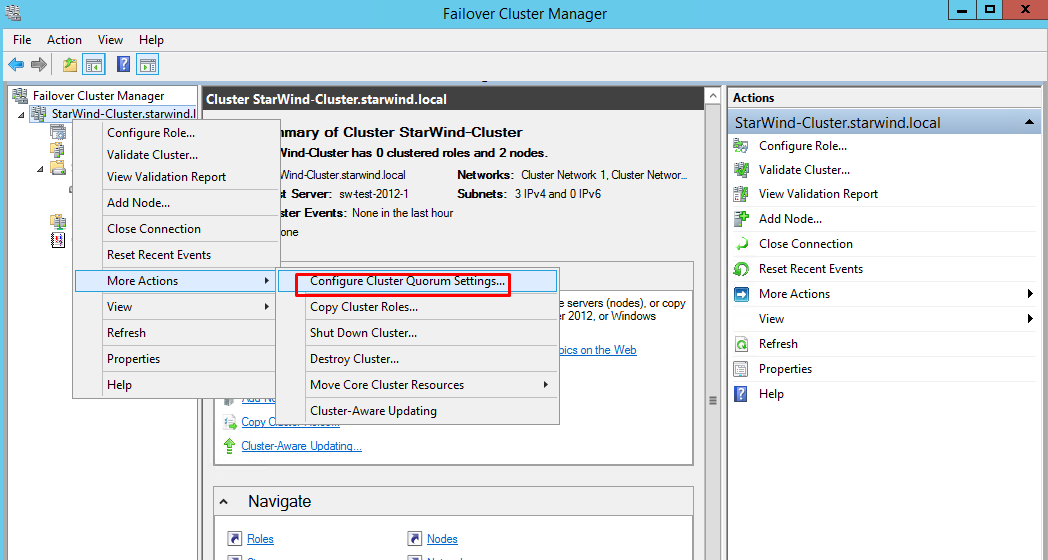

89. To configure the Witness drive, right-click the cluster, select More Actions -> Configure Cluster Quorum Settings, follow the wizard, and use the default quorum configuration.

90. Right-click the previously added disk and select Add to Cluster Shared Volumes.

Configuring the Scale-Out File Server Role

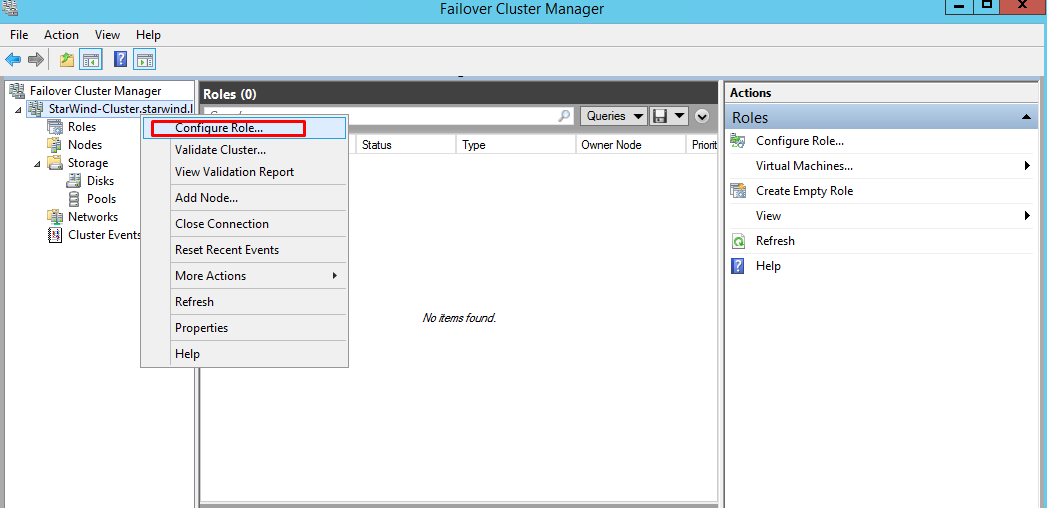

91. To configure the Scale-Out File Server role, open Failover Cluster Manager.

92. Right-click on the cluster name, then click Configure Role, and click Next to continue.

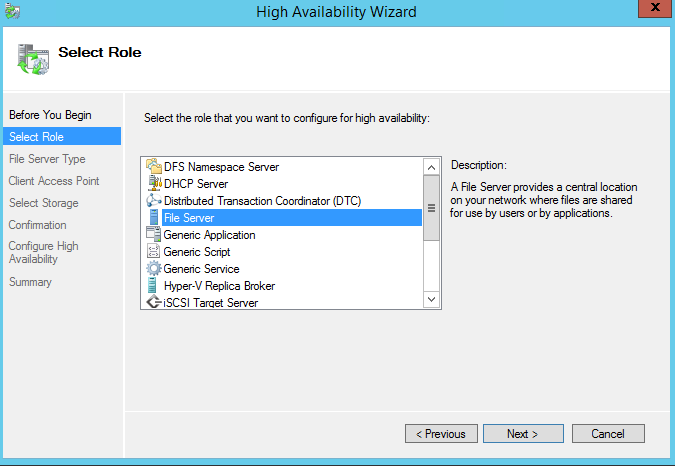

93. Select the File Server item from the list in High Availability Wizard and click Next to continue.

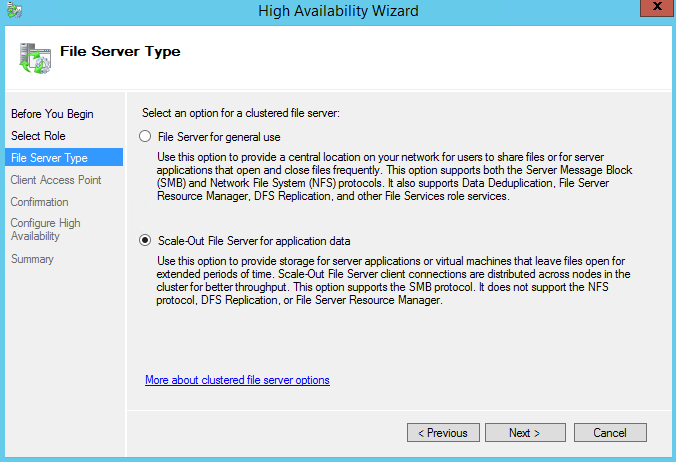

94. Select Scale-Out File Server for application data and click Next.

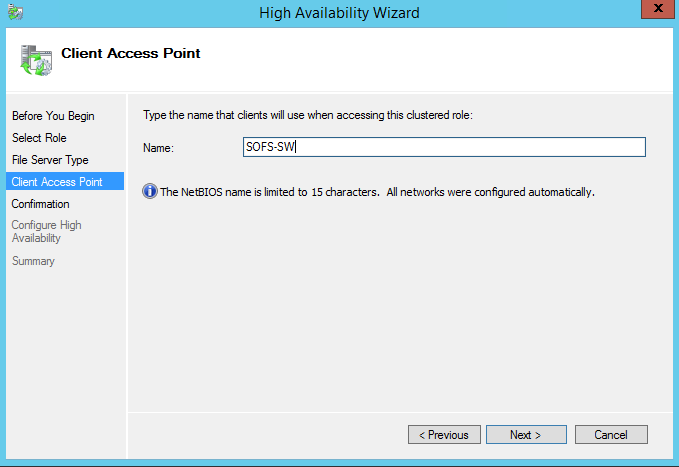

95. On the Client Access Point page, in the Name text field, type the NetBIOS name that will be used to access the Scale-Out File Server.

Click Next to continue.

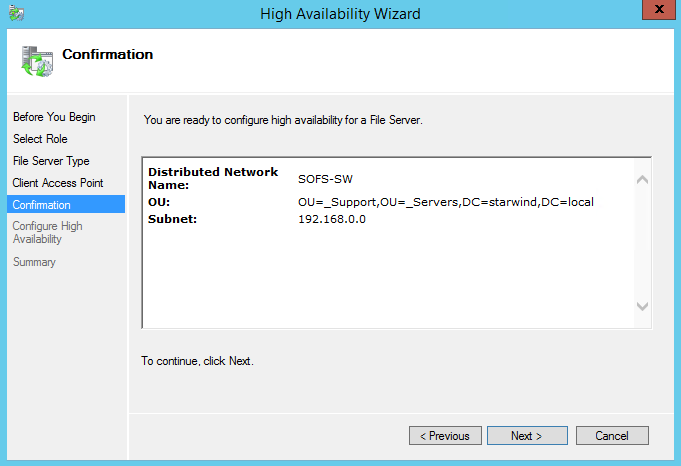

96. Check whether the specified information is correct. Click Next to proceed, or Previous to change the settings.

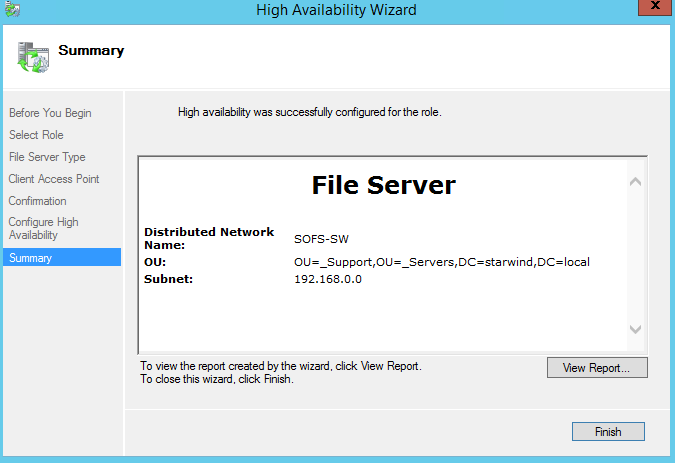

97. Once installation finishes successfully, the Wizard should look as shown in the screenshot below.

Click Finish to close the Wizard.

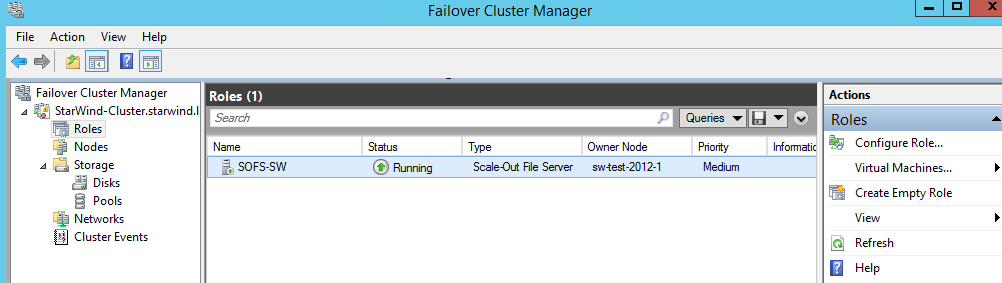

98. The newly created role should look as shown in the screenshot below.

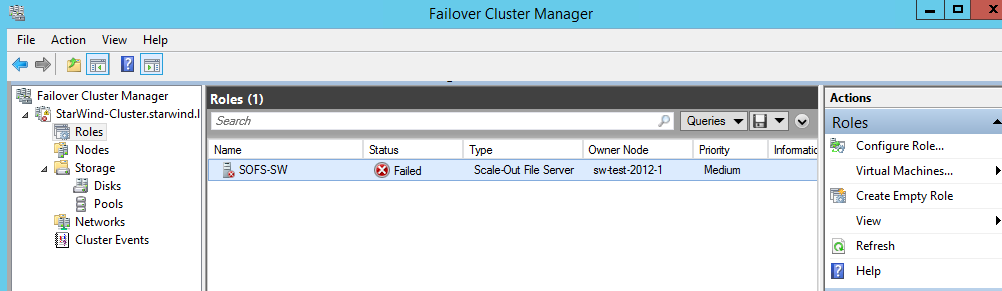

NOTE: If the role status is Failed and the role cannot be started, follow these steps:

- Open Active Directory Users and Computers;

- Enable Advanced view if it is not enabled;

- Edit the properties of the OU containing the cluster computer object (in our case – StarWind-Cluster);

- Open the Security tab and click Advanced;

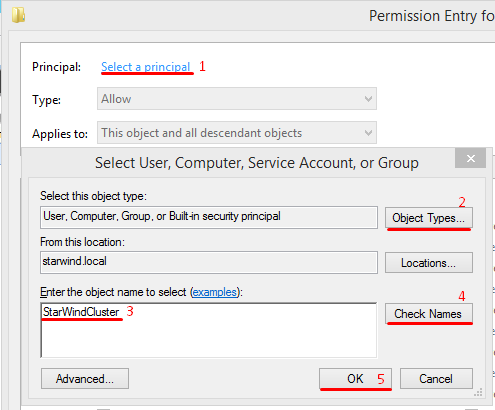

- In the window that opens, click Add (the Permission Entry dialog box opens), and then click Select a principal;

- In the window that appears, click Object Types, select Computers, and click OK;

- Enter the name of the cluster computer object (in our case – StarWindCluster);

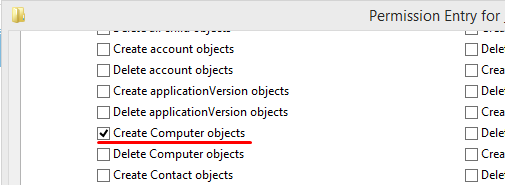

- Go back to the Permission Entry dialog, scroll down, and select Create Computer Objects.

- Click OK on all opened windows to confirm the changes.

- Open Failover Cluster Manager, right-click the SOFS role, and click Start Role.

Sharing a Folder

To share a folder:

99. Open Failover Cluster Manager.

100. Expand the cluster and then click Roles.

101. Right-click the file server role and then press Add File Share.

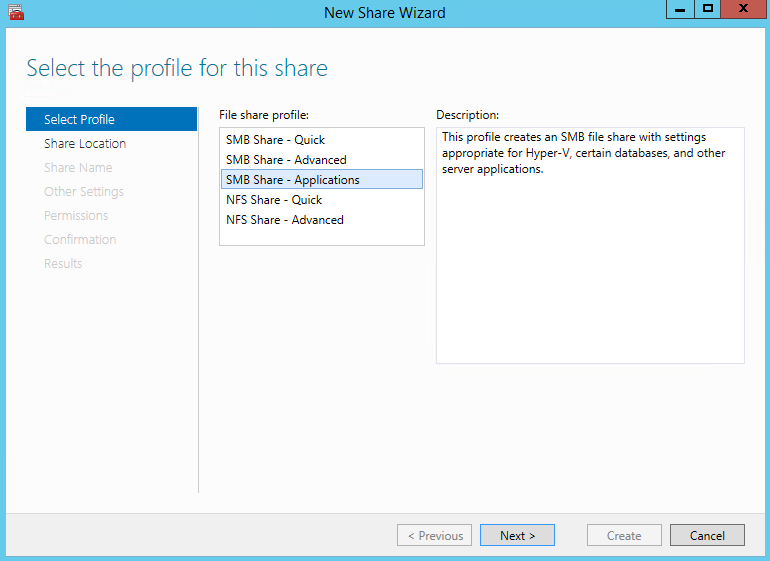

102. On the Select the profile for this share page, click SMB Share – Applications and then click Next.

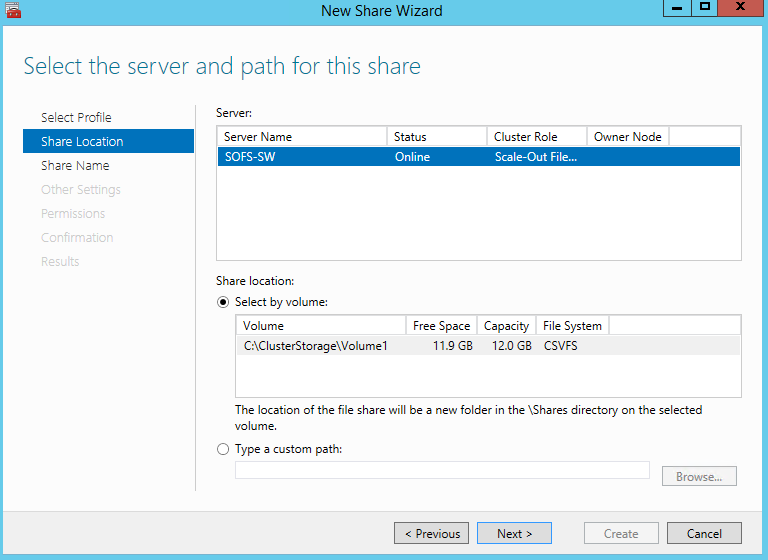

103. Select a CSV to host the share. Click Next to proceed.

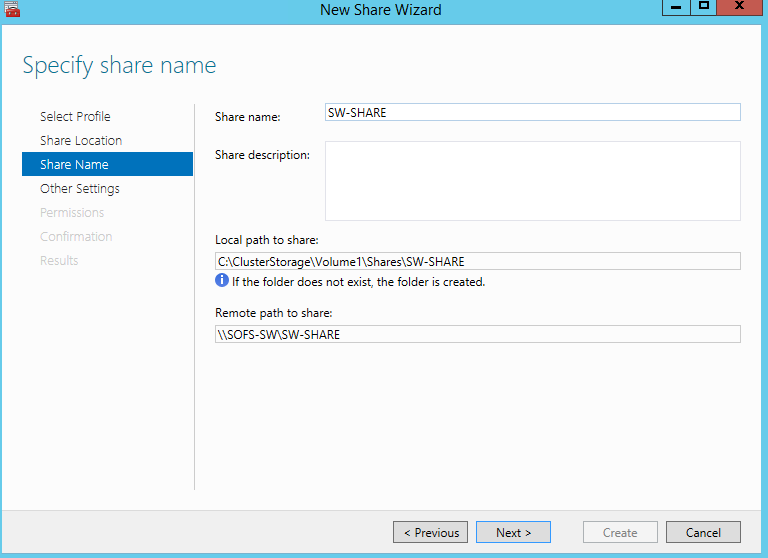

104. Type in the file share name and click Next.

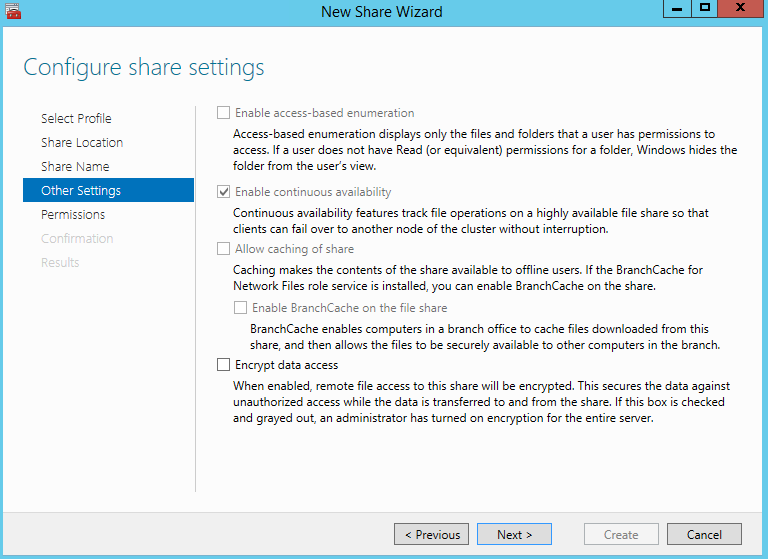

105. Make sure that the Enable Continuous Availability box is checked. Click Next to proceed.

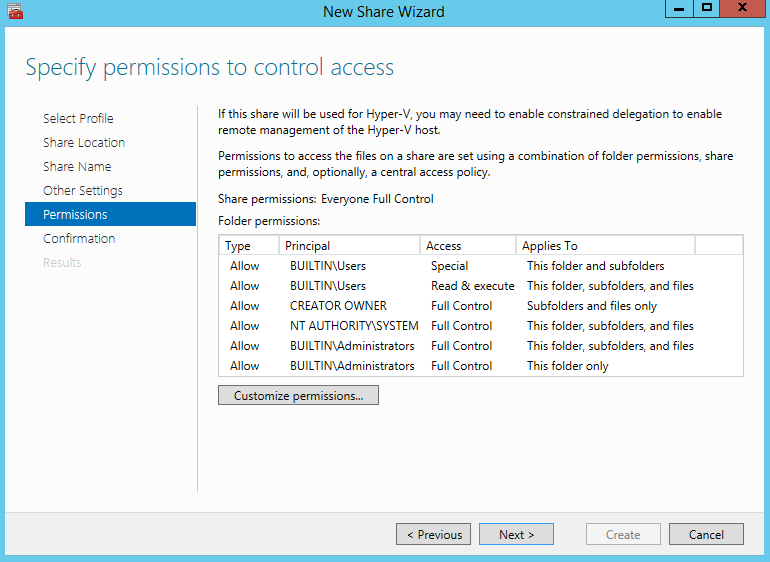

106. Specify the access permissions for your file share.

NOTE:

- If you use Scale-Out File Server for Hyper-V, you must grant all Hyper-V computer accounts, the SYSTEM account, and all Hyper-V administrators full control over the share and the file system.

- If you use Scale-Out File Server for Microsoft SQL Server, the SQL Server service account must be granted full control over the share and the file system.

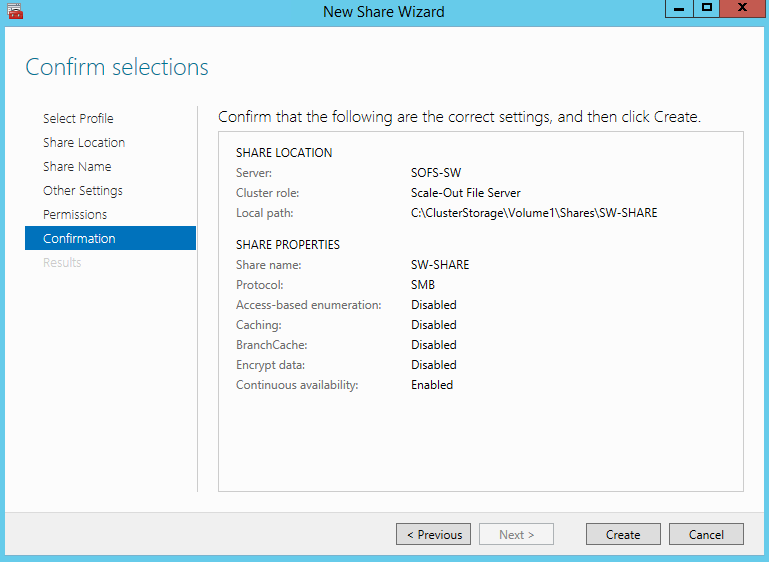

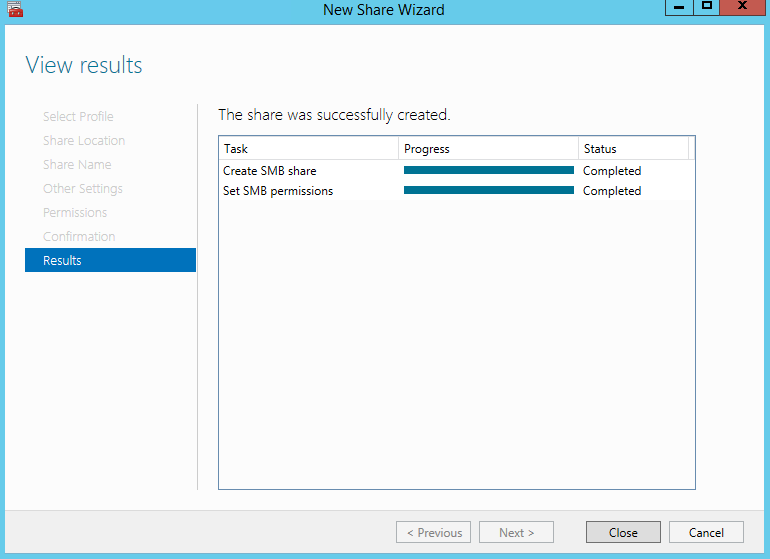

107. Check whether specified settings are correct. Click Previous to make any changes or click Create to proceed.

108. Review the summary and click Close to close the Wizard.

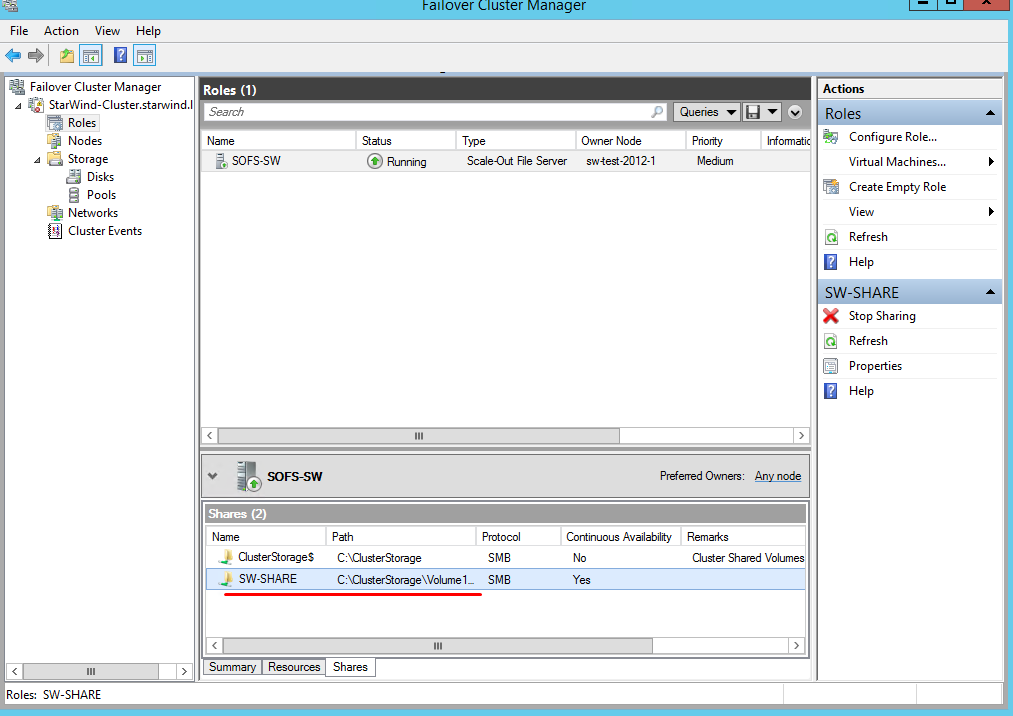

To manage created shares:

109. Open Failover Cluster Manager.

110. Expand the cluster and click Roles.

111. Choose the file share role, select the Shares tab, right-click the created file share, and select Properties.

CONCLUSION

We have configured a two-node fault-tolerant cluster in Windows Server 2012 R2 with StarWind Virtual SAN as the backbone for HA shared storage. You now have continuously available Scale-Out File Server file shares, accessible over the SMB protocol, that can be used for storing VMs that keep files open for extended periods of time, or for other purposes.