StarWind iSCSI SAN & NAS: Using SW SMI-S Agent with SC VMM 2012 R2

- September 04, 2013

INTRODUCTION

As private cloud infrastructures grow in popularity, automating storage and hypervisor management becomes increasingly important.

Microsoft’s solution for managing Hyper-V virtual machines has long been System Center Virtual Machine Manager (SC VMM). Until recently, however, there was no usable tool for automated storage management. This functionality, along with support for the SMI-S standard, appeared in the recently released SC VMM 2012 R2.

Now the VMM console enables users to create, remove, format, assign and connect disks to Hyper-V hosts. Previously, these tasks were performed with utilities provided by the storage array manufacturers, which complicated management.

This article describes how to configure, connect and use an SMI-S provider to automate management of storage arrays. We will use StarWind iSCSI SAN V8 beta as an example of iSCSI storage that can be operated with SMI-S. Click here to download the storage software.

About SMI-S

Storage Management Initiative – Specification (SMI-S) is a standard for disk storage management. SMI-S is based on the Common Information Model (CIM) open standard and on the Web-Based Enterprise Management (WBEM) technology. SMI-S is certified as an ISO standard and is supported by many storage system vendors.

SMI-S is fully supported in Windows Server 2012 by the Storage Spaces functionality. Storage Spaces enables the use of SMI-S in PowerShell scripts for automation of administration.

Naturally, disk array manufacturers also have to ensure that their products support SMI-S. They typically provide a so-called SMI-S provider, or “agent”, that mediates communication between an SMI-S client and the storage array. An SMI-S client connects to the SMI-S provider via the CIM-XML protocol, while the SMI-S provider itself can use proprietary interfaces to manage the disk array.
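To make the CIM-XML exchange more concrete, here is a minimal sketch in Python of the request body an SMI-S client might send to a provider to list instance names of a class. It only builds the XML and does not send it. The namespace `root/cimv2` and the class `CIM_ComputerSystem` are assumptions chosen for illustration; the namespace actually exposed by a given provider may differ.

```python
import xml.etree.ElementTree as ET


def build_enumerate_request(namespace: str, class_name: str, message_id: str = "1") -> bytes:
    """Build the body of a CIM-XML EnumerateInstanceNames request."""
    cim = ET.Element("CIM", CIMVERSION="2.0", DTDVERSION="2.0")
    message = ET.SubElement(cim, "MESSAGE", ID=message_id, PROTOCOLVERSION="1.0")
    simple_req = ET.SubElement(message, "SIMPLEREQ")
    call = ET.SubElement(simple_req, "IMETHODCALL", NAME="EnumerateInstanceNames")

    # The target namespace is sent as one NAMESPACE element per path component.
    path = ET.SubElement(call, "LOCALNAMESPACEPATH")
    for part in namespace.strip("/").split("/"):
        ET.SubElement(path, "NAMESPACE", NAME=part)

    # The class to enumerate is passed as the ClassName input parameter.
    param = ET.SubElement(call, "IPARAMVALUE", NAME="ClassName")
    ET.SubElement(param, "CLASSNAME", NAME=class_name)

    return ET.tostring(cim, encoding="utf-8", xml_declaration=True)


# Assumed namespace and class, for illustration only.
print(build_enumerate_request("root/cimv2", "CIM_ComputerSystem").decode("utf-8"))
```

In practice the client posts such a body over HTTP to the provider, which translates the request into whatever interface the array understands. VMM does this on your behalf, so the sketch is useful mainly for understanding or troubleshooting the traffic.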

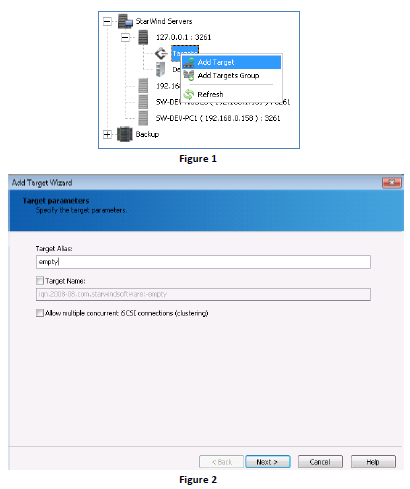

Configuring StarWind Service

Before configuring StarWind SMI-S Agent, you first need to configure StarWind iSCSI SAN Service. Use StarWind Management Console to connect to StarWind Service and then create an empty iSCSI target (Figure 1 and Figure 2). Let’s name it “empty”.

For more information on StarWind Management console, refer to StarWind Help.

Installing and configuring StarWind SMI-S Agent

StarWind Software, Inc. offers a free SMI-S provider for storage management called StarWind SMI-S Agent. Its beta version is now available for download.

Click here to download StarWind SMI-S Agent. This version of StarWind SMI-S Agent supports only StarWind iSCSI SAN V8 beta.

The installation process is straightforward: the installer guides you through several wizard steps, then unpacks the files and registers the system service.

Note: StarWind SMI-S Agent does not have to be installed on the same server as StarWind iSCSI SAN.

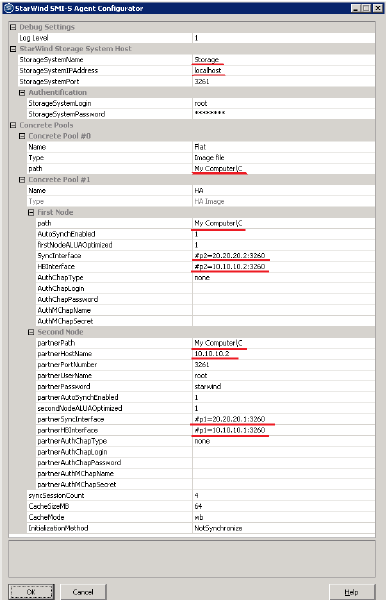

After installing StarWind SMI-S Agent, configure it with the StarWindSMISConfigurator utility (Figure 3).

To configure StarWind SMI-S Agent:

1. Press Win + R to open the Run window.

2. Type StarWindSMISConfigurator.

3. Click OK.

4. In the StorageSystemName text field, specify a name for the disk array, e.g. Storage.

5. Specify the address of the host running StarWind Service.

6. Leave the number in the StorageSystemPort field unchanged.

Note: Do not confuse the StarWind Service management port 3261 with port 5988, which provides access to StarWind SMI-S Agent.

7. In the path fields, specify the paths to the folders where disk device images will be stored. These paths must be valid on the server where the StarWind iSCSI target runs.

Note: When you fill in the path fields, use the same format as that used for creation of virtual devices in StarWind Management Console.

Note: Configuration of an HA storage pool is described below.

8. After applying the new settings, restart the StarWind SMI-S Agent service.

Note: Before restarting StarWind SMI-S Agent, make sure the StarWind Service is up and running.
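Because the two ports mentioned in the notes above are easy to mix up, it can help to confirm that both are reachable before moving on. The following is a minimal sketch using only the Python standard library. The function name is hypothetical, and the check only proves that something accepts TCP connections on the port, not that it is the StarWind Service or the SMI-S Agent.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False
```

For example, `is_port_open("192.168.1.137", 3261)` would test the StarWind Service management port on the host used in this article, and the same call with port 5988 against the host running StarWind SMI-S Agent would test the agent. Substitute the addresses of your own hosts.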

Configuring an HA storage pool

Basic StarWind SMI-S Agent configuration allows building a 2-node HA storage pool.

To build a 2-node HA storage pool, configure the following parameters:

- Path and partnerPath

- Authorization parameters for each node

- HBInterface and partnerHBInterface

- SyncInterface and partnerSyncInterface

Other settings should be left as default.

Short descriptions of parameters:

1. Path and partnerPath – paths to the folders with disk image files of each HA device node.

2. AutoSynchEnabled – automatic node synchronization with the partners (“1” – enabled).

3. firstNodeALUAOptimized and secondNodeALUAOptimized – ALUA optimization.

4. SyncInterface and partnerSyncInterface – an address and port of the partner sync interface. “#p2” is used to indicate the second partner.

5. HBInterface and partnerHBInterface – an address and port of the partner heartbeat interface.

6. syncSessionCount – a number of iSCSI connections required to perform synchronization operations.

7. InitializationMethod – a method of a new device initialization. Possible values:

- “Clean” – initialization of all HA device disk images with zeros. It is used to prevent unauthorized access to the data of an HA device that was previously removed to free space in a storage pool. Note that this value slows down the process of creating a new device.

- “NotSynchronize” – initialization without initial synchronization. Use this value to speed up the process of creating a device.

8. CacheMode – a cache operation mode.

9. CacheSizeMB – a cache size in MB.
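The parameter list above can be turned into a simple pre-flight check. The sketch below is an illustration only: it assumes the settings have already been collected into a Python dictionary keyed by the parameter names from this section, and it does not read or write any StarWind file. The per-node authorization parameters are left out because their names are not given here, and all values in the example are placeholders.

```python
# Parameters that must be filled in for a 2-node HA storage pool.
REQUIRED_HA_KEYS = (
    "Path", "partnerPath",
    "HBInterface", "partnerHBInterface",
    "SyncInterface", "partnerSyncInterface",
)
# Values of InitializationMethod described in this section.
ALLOWED_INIT_METHODS = ("Clean", "NotSynchronize")


def check_ha_settings(settings: dict) -> list:
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    for key in REQUIRED_HA_KEYS:
        if not str(settings.get(key, "")).strip():
            problems.append(f"{key} is not set")
    method = settings.get("InitializationMethod")
    if method is not None and method not in ALLOWED_INIT_METHODS:
        problems.append(f"InitializationMethod has unexpected value {method!r}")
    return problems


# Placeholder values only; use the formats expected by StarWindSMISConfigurator.
example = {
    "Path": "<image folder on node 1>",
    "partnerPath": "<image folder on node 2>",
    "HBInterface": "<heartbeat address and port>",
    "partnerHBInterface": "<partner heartbeat address and port>",
    "SyncInterface": "<sync address and port>",
    "partnerSyncInterface": "<partner sync address and port>",
    "InitializationMethod": "Clean",
}
print(check_ha_settings(example))
```

The check is deliberately shallow: it only catches empty fields and unexpected InitializationMethod values, and it leaves every other setting alone, in line with the advice to keep the defaults.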

Connecting SMI-S provider to SC VMM 2012 R2

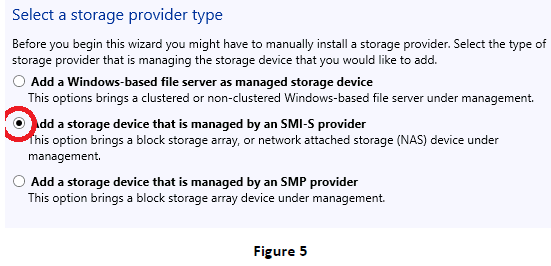

To enable disk array management using VMM, you need to connect the appropriate SMI-S provider.

To connect the SMI-S provider:

1. Click the Add Resources button (Figure 4, highlighting 1) on the toolbar of the SC VMM console.

2. Select Storage Devices (Figure 4, highlighting 2).

3. Specify the type of storage provider: select the Add a storage device that is managed by an SMI-S provider radio button (Figure 5).

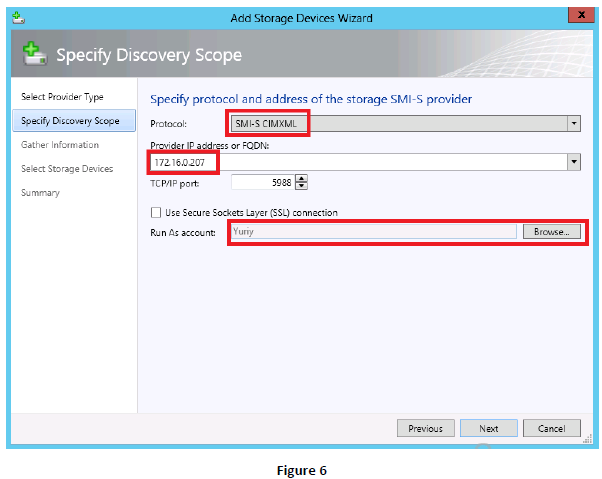

4. Select the SMI-S CIMXML protocol (Figure 6).

5. Enter the address of the host where StarWind SMI-S Agent is running and the user name that VMM will use for authorization on StarWind SMI-S Agent. If no appropriate user exists, create one here.

Attention: The host with StarWind SMI-S Agent installed must be in the same domain as MS SCVMM 2012. If your organization doesn’t use a domain, make sure that DNS has a record that maps the host name to its IP address.

Note: By default, there is no password to access StarWind SMI-S Agent. To change this, modify the cimserver_planned.conf file located in the installation folder of StarWind SMI-S Agent. Add the following line to the file:

enableAuthentication = true

Save the file and restart the StarWind SMI-S Agent service. Then run the following on the command line:

cimuser -a -u <username> -w <password>

Replace <username> with the name of an existing account on the host that runs StarWind SMI-S Agent.

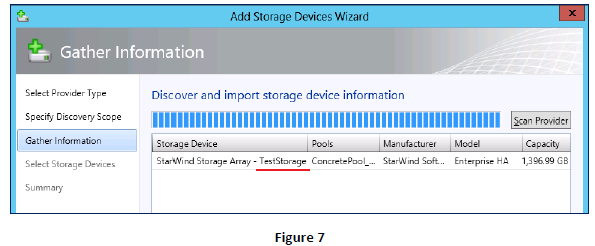

6. If the wizard connects to the provider successfully, it displays all available disk arrays. Figure 7 shows that the wizard has detected the StarWind storage device called TestStorage.

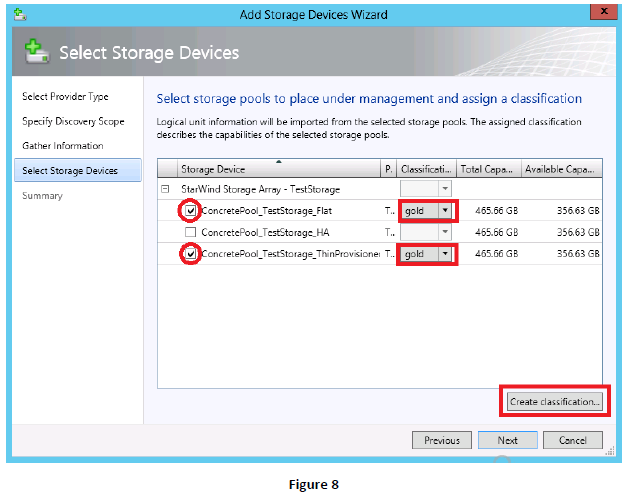

7. Click Next and select the pools to be assigned to VMM (Figure 8). Every selected pool has to be classified. If there are no classifications yet, click Create classification to add one.

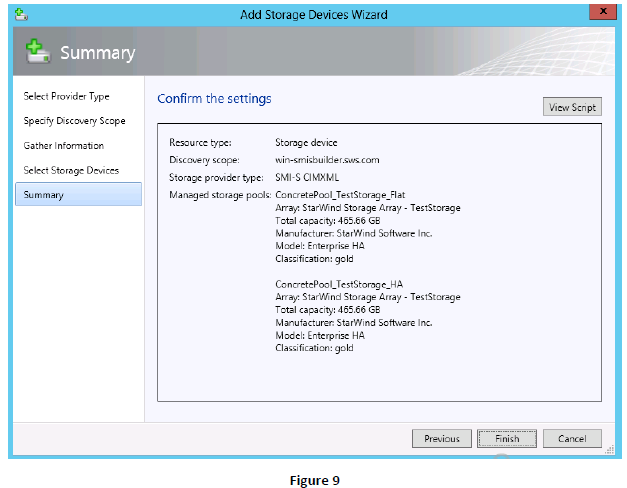

8. Confirm the settings. The Summary page displays information about the disk array, provider and pools to be managed by VMM (Figure 9).
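The authentication note in step 5 asks you to add a line to cimserver_planned.conf by hand. If you prefer to script that edit, the sketch below shows one way to do it safely on the file's text. It assumes the file is a plain list of `name = value` lines, which is inferred from the single setting shown above. The function name is hypothetical, and `httpPort=5988` in the example is only a stand-in for whatever other settings the file contains.

```python
def enable_authentication(conf_text: str) -> str:
    """Return conf_text with exactly one active 'enableAuthentication = true' line."""
    setting = "enableAuthentication = true"
    # Drop any existing active enableAuthentication line, whatever its value.
    kept = [
        line for line in conf_text.splitlines()
        if line.split("=", 1)[0].strip() != "enableAuthentication"
    ]
    kept.append(setting)
    return "\n".join(kept) + "\n"


# Illustrative input only; a real file may contain other settings.
print(enable_authentication("httpPort=5988\nenableAuthentication=false\n"))
```

Running the function twice gives the same result as running it once, so it is safe to repeat. It does not restart the service or create the user; those steps still have to be done as described in the note.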

Creating a logical unit

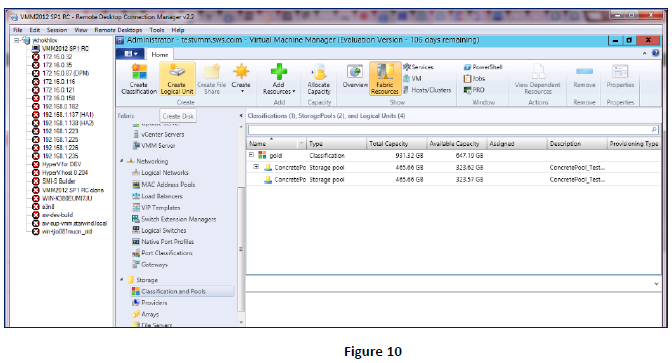

A logical unit (LU, Storage Volume) is created from a storage pool. All created devices are displayed in the Classification and Pools column (Figure 10). The screenshot below shows that there are no logical units yet.

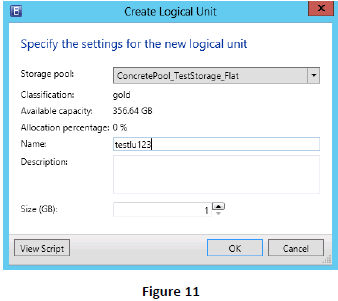

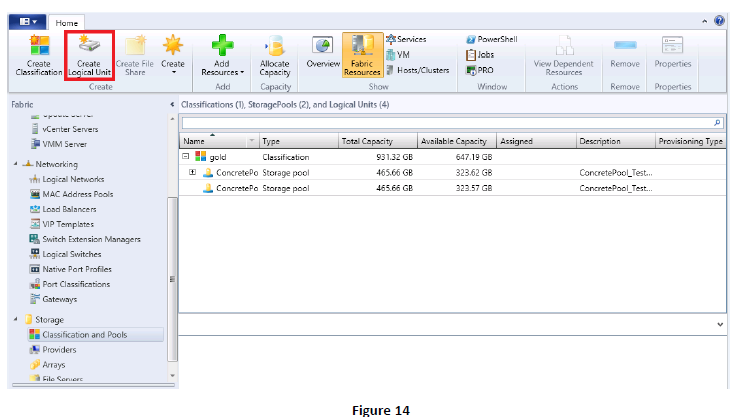

To add a new logical unit:

1. Click Create Logical Unit on the toolbar (Figure 10).

2. In the Create Logical Unit dialog, select a storage pool, specify the name and size of the LU, and click OK.

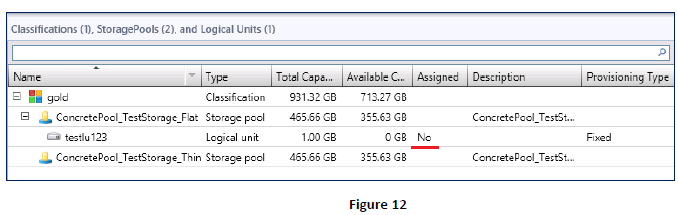

The newly created device appears in the list (see the Name column).

Note: The new logical unit testlu123 is not yet assigned to any host, as shown by the value No in the Assigned field.

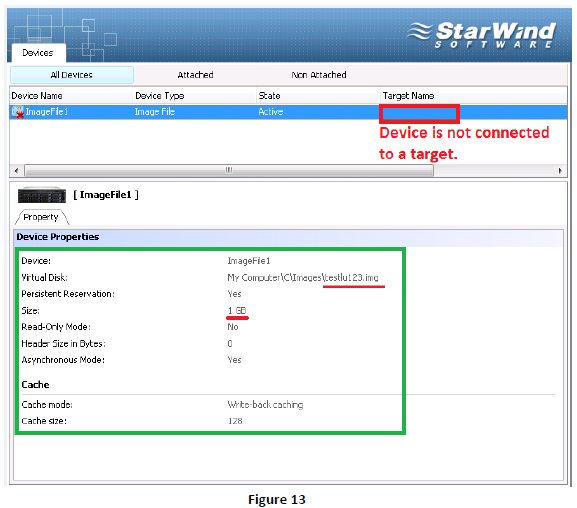

After this operation, StarWind Management Console displays a new device, ImageFile1 (Figure 13).

The device is active, but since it is not connected to any target yet, it is not available. Note that the image file location is My Computer\C\Images.

Allocating storage pools and logical units

SC VMM allows you to allocate storage pools and logical units to different Hyper-V hosts and host groups. To make a storage pool or logical unit available to a host or host group, allocate it to that host or host group.

Attention: If you are going to create logical units from an HA storage pool, you must first create a service HA logical unit with a capacity of at least 1 GB. This service unit must be created before assigning storage pools to hosts and before adding disk arrays on hosts. Do not assign the service HA unit to any host, to avoid using it by mistake.

To allocate storage pools and logical units:

1. Click Allocate Capacity on the toolbar.

2. In the Allocate Storage Capacity dialog, click one of the following buttons:

- Allocate Storage Pools

- Allocate Logical Units

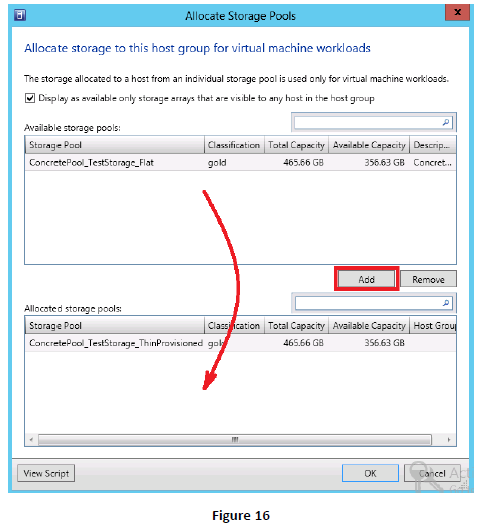

3. In the Allocate Storage Pools window, select the Display as available only storage arrays that are visible to any host in the group checkbox to verify that storage pools are available on the network.

Note: If the storage pools disappear from the list after you select this checkbox, refresh the information on the Hyper-V host and the storage provider.

- To refresh information on a Hyper-V host, click Refresh on the shortcut menu of a respective host.

- To refresh information on a storage provider, click Rescan on the shortcut menu of SMI-S provider.

If storage pools aren’t displayed on the list, check the firewall settings, network paths, network zoning, etc. It is likely that the Hyper-V hosts from the group have no access to the StarWind iSCSI SAN service.

4. Select a storage pool and click the Add button.

5. Repeat the same procedure for every storage pool.

6. Click OK.

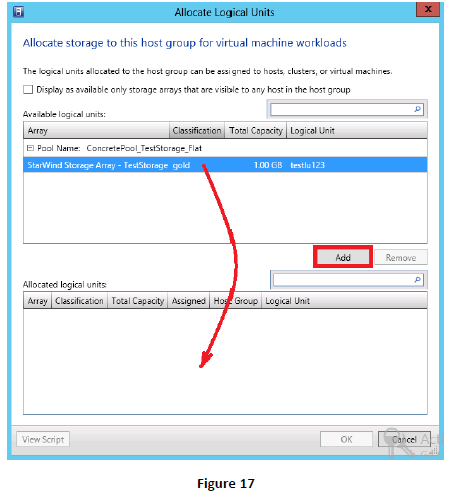

7. Follow the same procedure to allocate the logical unit to the All Hosts group (Figure 17).

Connecting logical units to Hyper-V hosts

To connect a logical unit to a Hyper-V host:

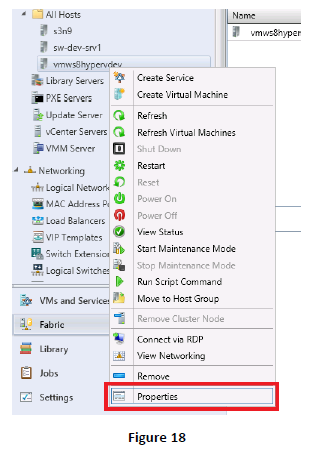

1. Choose a host and click Properties on the shortcut menu.

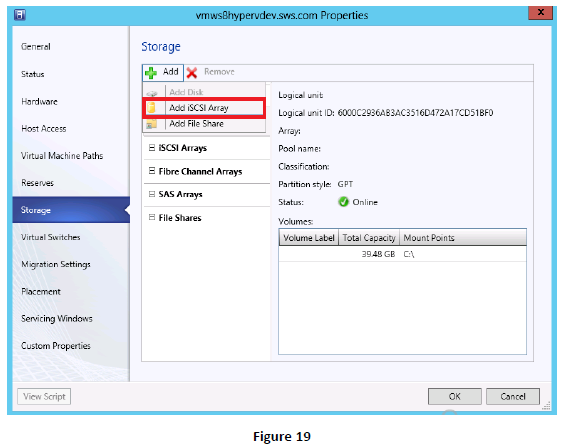

2. In the Properties window, click Storage on the left pane (Figure 19).

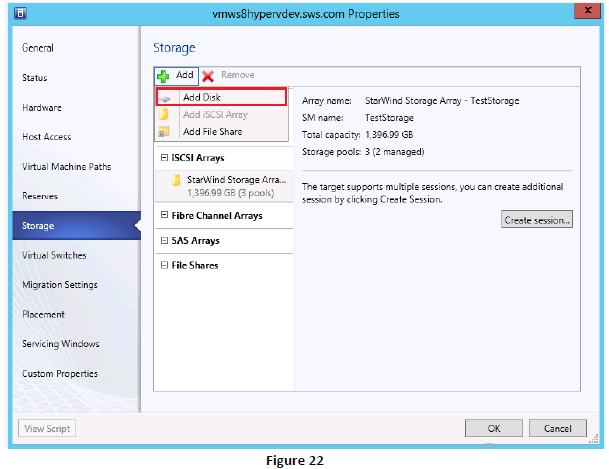

3. Click Add iSCSI Array to add a disk array (Figure 19).

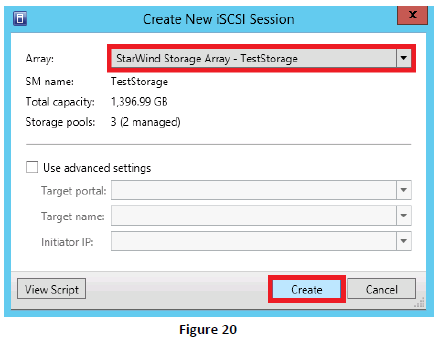

4. In the Create New iSCSI Session dialog, select a disk array and click OK.

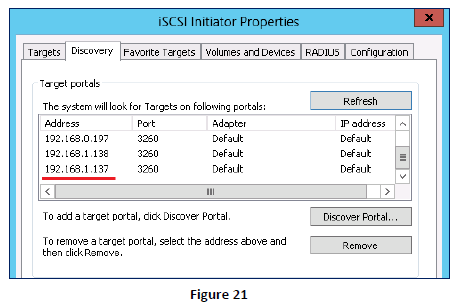

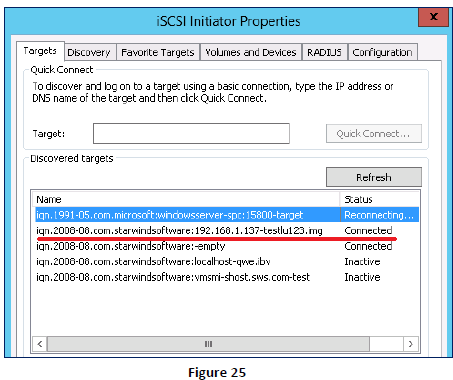

The new disk array appears in the Target portals list on the Discovery tab of the iSCSI initiator Properties of the selected Hyper-V host.

Figure 21 displays the new entry. 192.168.1.137 is the IP address of the host where StarWind iSCSI SAN is installed and running.

Now that the new array has been added, you can create new disks on it and connect existing ones.

5. Click Add and then Add Disk to connect a new disk to the host.

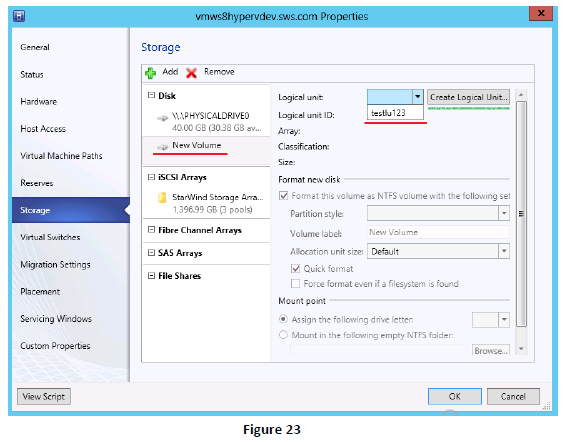

Note: Since the logical unit testlu123 has been allocated to the host group All Hosts, it is now shown in the list of available devices (Figure 23, red underlined).

Click the Create Logical Unit button (Figure 23, green underlined) to create a new logical unit right from this window.

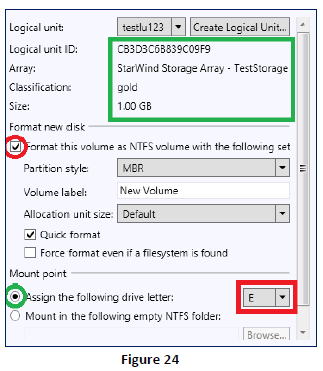

As soon as you select the logical unit, the system displays all the information available for it (Figure 24). VMM suggests initializing the disk, formatting it to NTFS and assigning a drive letter so that the logical unit can store the VHD files of VMs.

6. Click OK to connect a logical unit to a Hyper-V host.

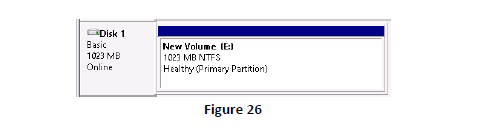

The host has connected to the iSCSI target iqn.2008-08.com.starwindsoftware.192.168.1.137-testlu123.img, and a new 1GB volume has been formatted to NTFS (Figure 26). This confirms that the logical unit was connected to the Hyper-V host successfully.

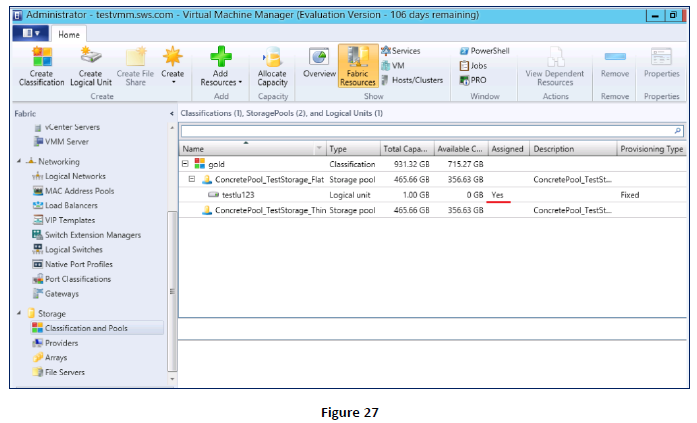

7. Expand Storage, right-click Classification and Pools in the SC VMM 2012 Console (Figure 27) and verify that the details of the logical unit testlu123 have been updated. The Assigned property now has the value Yes.

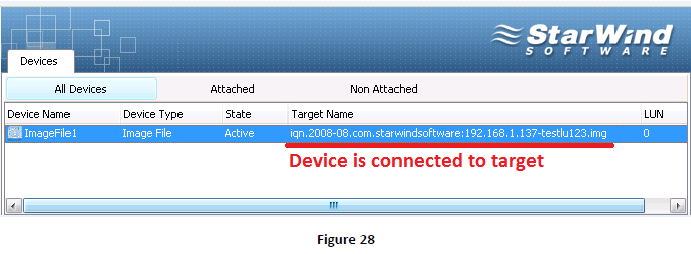

On the Devices tab of StarWind Management Console, you can see that the device ImageFile1 is now connected to the target iqn.2008-08.com.starwindsoftware.192.168.1.137-testlu123.img as LUN0 (Figure 28).

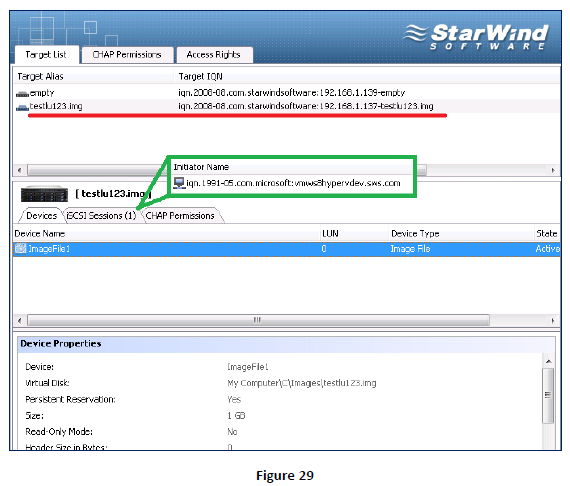

The same information is displayed on the Target List tab of StarWind Management Console. The iSCSI Sessions tab shows that the initiator iqn.1991-05.com.microsoft:vmws8hypervdev.sws.com is currently connected to the selected target (Figure 29).

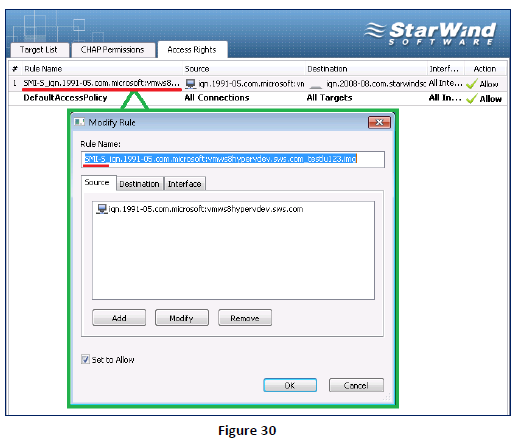

When a logical unit is allocated to a host, the SMI-S provider also adds an access rule to the StarWind ACL service that permits the selected host to connect to the target (Figure 30).

Note: Names of ACL rules created by the SMI-S provider always include the prefix SMI-S. We do not recommend changing these rules or manually deleting them from StarWind Management Console.
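When comparing the target and initiator names shown above with ACL rules or session lists, it can be handy to split them into their parts. The sketch below parses the general iqn naming format used by iSCSI (a date, a reversed domain name and an optional identifier after a colon). It is a generic parser, not a StarWind API, and it makes no assumption about how StarWind composes the part after `com.starwindsoftware`.

```python
import re
from typing import NamedTuple, Optional


class Iqn(NamedTuple):
    year_month: str            # date part, e.g. "2008-08"
    authority: str             # reversed domain name part
    identifier: Optional[str]  # text after the first colon, if any


_IQN_RE = re.compile(r"^iqn\.(\d{4}-\d{2})\.([^:]+)(?::(.+))?$")


def parse_iqn(name: str) -> Iqn:
    """Split an iqn-format iSCSI name into its components."""
    match = _IQN_RE.match(name)
    if match is None:
        raise ValueError(f"not an iqn-format iSCSI name: {name!r}")
    return Iqn(*match.groups())


# The target and initiator names from this walkthrough.
print(parse_iqn("iqn.2008-08.com.starwindsoftware.192.168.1.137-testlu123.img"))
print(parse_iqn("iqn.1991-05.com.microsoft:vmws8hypervdev.sws.com"))
```

For the Microsoft initiator, the identifier is the host name of the Hyper-V server, which is the piece you would match against an access rule. The StarWind target name in this example contains no colon, so its identifier is empty and the whole remainder is reported as the authority part.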

Disconnecting logical units from Hyper-V hosts

To disconnect logical units:

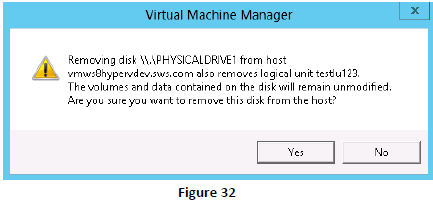

1. Open the Properties of a Hyper-V host and click Storage.

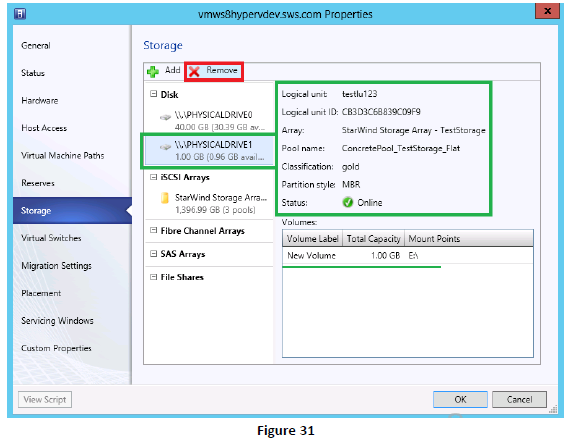

2. On the Storage pane, select the disk you need to remove and click the Remove Disk button (Figure 31).

3. Click Yes in the VMM dialog to confirm disconnection of the selected logical unit (Figure 32). The logical unit and its data remain untouched, so you can reconnect the device at any time.

Deleting logical units

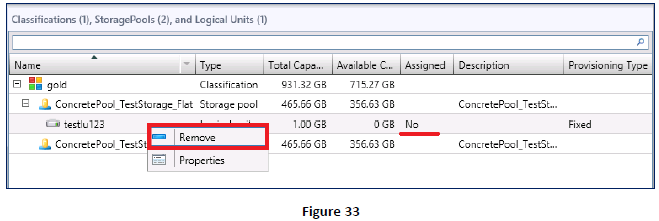

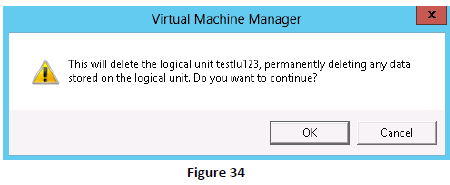

If you no longer need a logical unit, you can delete it, thereby freeing the space it occupies in the storage pool. A logical unit must be disconnected from all Hyper-V hosts before it can be deleted, so make sure that its Assigned property has the value No.

To delete a logical unit:

1. Expand Storage and right-click Classification and Pools in the SC VMM 2012 Console (Figure 33).

2. Select a logical unit that you need to delete and click Remove on the shortcut menu.

3. Click OK in the VMM dialog to confirm the deletion (Figure 34).

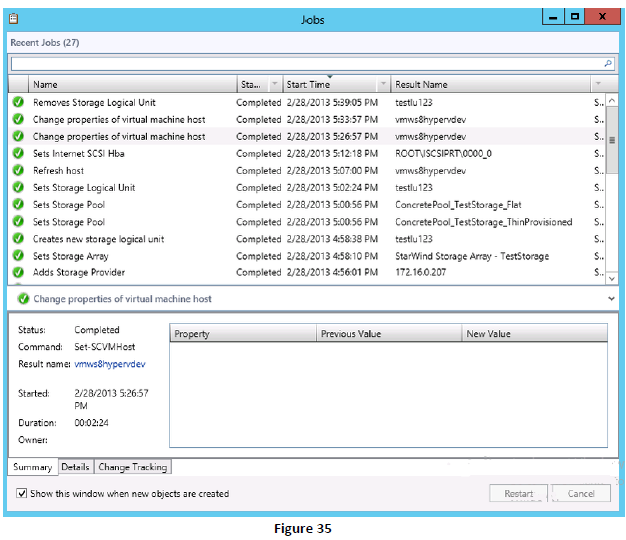

A list of jobs

All current and previous operations of SC VMM are listed in the Jobs window (Figure 35). The green checkmark and the status Completed show that all the operations described above have completed successfully.

CONCLUSION

Support for the SMI-S standard in the new version of SC VMM 2012 is an important step toward automating cloud infrastructure management. Most operations can now be performed from the VMM Console, with no need to turn to the management utilities provided by the disk array manufacturers, such as StarWind Management Console. Using SMI-S substantially simplifies administration because it removes the need to work with a separate management tool for each vendor’s disk arrays.

All the operations described above can also be performed with PowerShell scripts, which provide even more automation capabilities and further simplify management.