StarWind Virtual SAN Reference Architecture for Dell PowerEdge R730

- February 14, 2015


Microsoft Windows Server 2012 R2

Since the release of Hyper-V 3.0, Microsoft has been treated as a serious contender in the virtualization market. Hyper-V has largely taken the SMB and ROBO segments away from VMware and continues to advance into the enterprise. In our implementation, Windows Server is used both as a hypervisor (Hyper-V is just a role enabled in a Windows Server 2012 R2 installation) and as the platform that runs StarWind Virtual SAN in a so-called “bare metal” setup for “Compute and Storage Separated” installations serving both Hyper-V and vSphere. In these configurations, StarWind Virtual SAN runs on a set of Dell servers running Windows and provides an “external” hypervisor cluster with shared storage over the iSCSI and SMB3 protocols for vSphere and Hyper-V.

Microsoft has its own Software Defined Storage (SDS) initiatives targeting smaller and bigger virtualization deployments: Clustered Storage Spaces and Scale-Out File Server, respectively. Clustered Storage Spaces are block-based, and Scale-Out File Server is an SMB3-based file front-end (gateway) on top of them that works around the limited scalability of Clustered Storage Spaces. Clustered Storage Spaces are not completely “software”, as Microsoft relies heavily on an existing all-SAS storage infrastructure. This means SAS hard disks and/or flash (high-capacity SATA hard disks and ultra-fast PCIe-attached flash cannot be used) mounted in external SAS JBODs (internally mounted server storage cannot be used with Clustered Storage Spaces, even if it is SAS). It also requires SAS HBAs and cabling to create a fully connected mesh between two or three servers and a set of SAS JBODs. Inter-node data travels over the SAS fabric, and Microsoft's SDS implementation has no distributed access locks of its own; it relies on SAS hardware locks instead.

StarWind Virtual SAN, on the other hand, does not need SAS connectivity: it carries inter-node communication over 10 or 40/56 Gigabit Ethernet, which is both faster and more cost-effective. Because StarWind does not rely on SAS hardware locks, it can use internally mounted SAS capacity as well as inexpensive SATA hard disks and high-performance PCIe flash. StarWind Virtual SAN also adds several important features: in-line, write-targeted deduplication that complements Microsoft's off-line, read-targeted deduplication; a log-structured file system that dramatically improves performance for the all-random I/O typical of VM workloads; and distributed write-back caches that complement Microsoft's read-only CSV cache and write-back flash cache. In the end, StarWind Virtual SAN either provides Hyper-V nodes with virtual shared storage free of SAS hardware dependencies in the hyper-converged scenario, or takes full control of the block back-end and serves as the foundation for a Scale-Out File Server in the “Compute and Storage Separated” scenario.

Hardware

Dell PowerEdge R730

The R730 is the best fit for the building-block role in a hyper-converged virtualization environment. It offers the widest possible choice of components and supports multiple internal storage combinations covering all storage types, from 3.5” disks and PCIe flash to 1.8” flash. The Intel® Xeon® E5-2600 v3 product line provides a broad selection of CPUs to match the implementation scenario.

Technical specifications:

Processor

Up to 2 Intel® Xeon® E5 2600 v3 series processors.

CPUs range from the 1.6 GHz, 6-core / 6-thread Intel® Xeon® E5-2603 v3 to the 2.3 GHz, 18-core / 36-thread Intel® Xeon® E5-2699 v3.

Chipset

Intel C610 series chipset

Memory

Up to 768GB (24 DIMM slots): 4GB/8GB/16GB/32GB DDR4 up to 2133MT/s

Storage

HDD: SAS, SATA, Near-line SAS

SSD: SAS, SATA

16 x 2.5” – up to 29TB via 1.8TB hot-plug SAS hard drives

8 x 3.5” – up to 48TB via 6TB hot-plug NL SAS hard drives

Drive Bays

Internal hard drive bay and hot-plug backplane:

Up to 16 x 2.5” HDD: SAS, SATA, Near-line SAS; SSD: SAS, SATA

Up to 8 x 3.5” HDD: SAS, SATA, Near-line SAS; SSD: SAS, SATA

RAID Controllers

Internal:

PERC H330

PERC H730

PERC H730P

External:

PERC H830

Network Controller

4 x 1Gb, 2 x 1Gb + 2 x 10Gb, 4 x 10Gb

Typical server configurations

Configuration 1: SAS storage, hyper-converged

2 x Intel Xeon E5-2650v3 Processor

2.5” chassis with up to 16 Hard drives

8x 16 GB RDIMM RAM, 128 GB total

16x 600GB 15K RPM SAS 6Gbps 2.5in Hot-plug Hard Drive

PERC H730 integrated RAID controller, 1GB NV Cache

Broadcom 57800 2x10Gb DA/SFP+ + 2x1Gb BT Network Daughter Card (SAN + LAN)

Intel Ethernet I350 QP 1Gb Server Adapter (LAN)

iDRAC8 Enterprise w/ vFlash, 8GB SD

Dual, Hot-plug, Redundant Power Supply (1+1), 750W

Usable capacity:

up to 4.8 TB with SAS RAID 10

up to 9.6 TB with SAS RAID 0

Total: $18,427.83 (notes 1, 2)

Configuration 2: Hybrid storage, hyper-converged

2 x Intel Xeon E5-2650v3 Processor

3.5” chassis with up to 8 Hard drives

16x 16 GB RDIMM RAM, 256 GB total

4x 4 TB 7.2K RPM SATA 6Gbps disks

2x 800GB Read intensive Solid State Drive SAS MLC 12Gbps

PERC H730 integrated RAID controller, 1 GB NV Cache

Broadcom 57800 2x10Gb DA/SFP+ + 2x1Gb BT Network Daughter Card (SAN + LAN)

Intel Ethernet I350 QP 1Gb Server Adapter (LAN)

iDRAC8 Enterprise w/ vFlash, 8GB SD

Dual, Hot-plug, Redundant Power Supply (1+1), 750W

Usable capacity:

up to 8.8 TB with SATA RAID 10 & SAS SSD RAID 1

up to 12.8 TB with SATA RAID 5 & SAS SSD RAID 1

up to 17.6 TB with SATA RAID 0 & SAS SSD RAID 0

Total: $16,980.92 (notes 1, 2)

Configuration 3: all-flash, hyper-converged

2 x Intel Xeon E5-2690v3 Processor

3.5” chassis with up to 8 Hard drives

24x 16 GB RDIMM RAM, 384 GB total

8x 800GB Mixed-use Solid State Drive SAS MLC 12Gbps

PERC H330 integrated RAID controller

Broadcom 57800 2x10Gb DA/SFP+ + 2x1Gb BT Network Daughter Card (LAN)

Mellanox ConnectX-3 Dual Port 40Gb Direct Attach/QSFP Server Network Adapter (SAN)

iDRAC8 Enterprise w/ vFlash, 8GB SD

Dual, Hot-plug, Redundant Power Supply (1+1), 750W

Usable capacity:

up to 3.2 TB with SAS SSD RAID 10

up to 5.6 TB with SAS SSD RAID 5

up to 6.4 TB with SAS SSD RAID 0

Total: $29,749.62 (notes 1, 2)
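The usable capacities listed for the three configurations follow from standard RAID arithmetic: RAID 0 keeps all raw capacity, RAID 1/10 keeps half, and RAID 5 gives up one drive's worth to parity. The Python sketch below recomputes the figures, assuming identical drives within each RAID set and ignoring file-system formatting and hot-spare overhead; the function name `usable_tb` is illustrative only.

```python
# Usable-capacity arithmetic for the RAID levels used in the configurations above.
# Sizes are decimal TB, as on vendor drive labels; formatting overhead is ignored.


def usable_tb(level: str, drives: int, drive_tb: float) -> float:
    """Usable capacity of a single RAID set built from identical drives."""
    if level == "RAID 0":
        return drives * drive_tb            # striping only, no redundancy
    if level in ("RAID 1", "RAID 10"):
        if drives % 2:
            raise ValueError("mirroring needs an even number of drives")
        return drives * drive_tb / 2        # every block is stored twice
    if level == "RAID 5":
        if drives < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        return (drives - 1) * drive_tb      # one drive's worth of parity
    raise ValueError(f"unsupported RAID level: {level}")


configs = {
    "Config 1, RAID 10": usable_tb("RAID 10", 16, 0.6),
    "Config 1, RAID 0": usable_tb("RAID 0", 16, 0.6),
    # Configuration 2 has two RAID sets: 4x 4 TB SATA HDD and 2x 800 GB SSD.
    "Config 2, RAID 10 + RAID 1": usable_tb("RAID 10", 4, 4) + usable_tb("RAID 1", 2, 0.8),
    "Config 2, RAID 5 + RAID 1": usable_tb("RAID 5", 4, 4) + usable_tb("RAID 1", 2, 0.8),
    "Config 2, RAID 0 + RAID 0": usable_tb("RAID 0", 4, 4) + usable_tb("RAID 0", 2, 0.8),
    "Config 3, RAID 10": usable_tb("RAID 10", 8, 0.8),
    "Config 3, RAID 5": usable_tb("RAID 5", 8, 0.8),
    "Config 3, RAID 0": usable_tb("RAID 0", 8, 0.8),
}

for name, tb in configs.items():
    print(f"{name}: {tb:.1f} TB")
```

For Configuration 2 with both sets in RAID 0, for example, the calculation gives 16 TB + 1.6 TB = 17.6 TB.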

Ethernet switch

The Dell S55 has been chosen as the main switch for all hypervisor and user traffic in the environment. With 44 1-GbE ports and 4 10-GbE ports, it can easily accommodate all management and hypervisor traffic in the given configurations.

Additional 10 GbE switches like Dell N4810 may be added to support iSCSI backbone scale-out beyond 3 nodes.

1. The prices are not final and are provided for reference only. They were calculated using the “Customize and buy” section of the Dell website.

2. The default service contract was selected.

StarWind Virtual SAN® Reference Architecture

StarWind Virtual SAN supports both hyper-converged and “Compute and Storage Separated” virtualization scenarios. In the hyper-converged scenario, the hypervisor and the storage virtualization stack share the same hardware, so most I/O happens locally and bypasses the Ethernet wiring. In the “Compute and Storage Separated” scenario, the hypervisor cluster and the storage cluster are physically separate entities, and all I/O goes over the Ethernet fabric.

We will start with a setup built “from scratch” to match the I/O and other requirements of a customer's environment. We will also leave some room for expansion; details on scaling out the cluster to meet updated needs (more VMs, more served storage, etc.) are given later in the Scale-Out section.

Hyper-converged

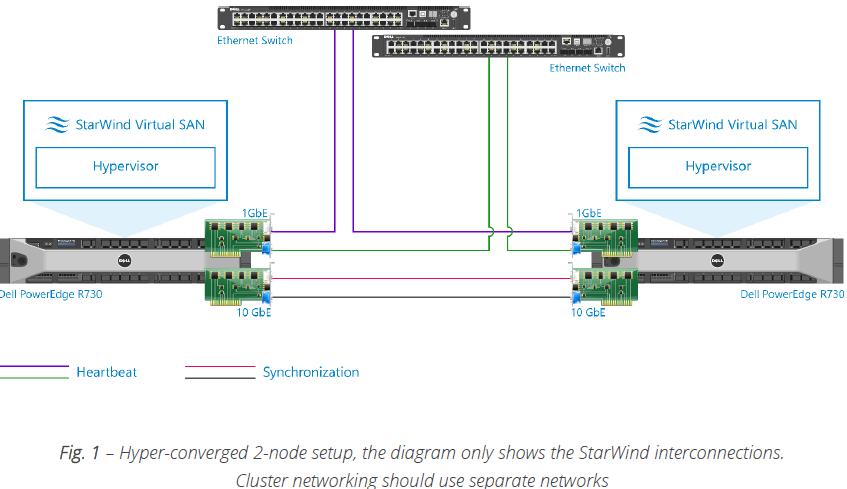

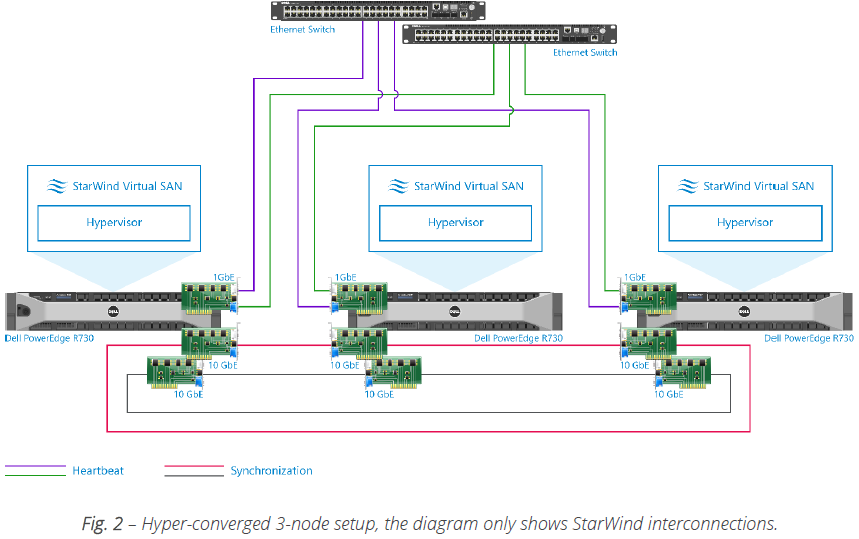

StarWind Virtual SAN simplifies the virtualization environment architecture by unifying storage and compute resources into a single layer, making each server an equal part of the cluster. The result is a hyper-converged unit that does not require any dedicated shared storage. The minimum number of servers for a fault-tolerant configuration is 2; if the local storage on each server is set up as RAID 0, the minimum is 3.

In this type of setup, all read I/O is served locally (reads can also be aggregated across partner nodes thanks to MPIO). Writes only need to be confirmed by the partner nodes that mirror the same LU, which reduces inter-node traffic. RAM and flash caches are local, so when data is in cache there is no Ethernet traffic at all. Since the server's RAM is shared by the hypervisor, the VMs, and the StarWind Virtual SAN L1 cache, sizing RAM correctly is very important. Provisioning too little RAM to the hypervisor, the VMs, or StarWind can degrade the performance of that component or of the whole configuration.

Memory configuration

The minimal possible setup starts with 16 GB of RAM. We assume 4 GB is reserved for the hypervisor and base OS. The StarWind Virtual SAN L1 cache requires at least 1 GB. Each VM typically requires 2-4 GB of RAM. The server also has to keep some RAM in reserve to accept VMs migrated from another cluster node. As a result, no more than 40% of the server's RAM can be provisioned for locally hosted VMs. For example, with 1 TB of clustered storage, this configuration has about 11 GB of RAM available for clustered VMs.
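The RAM budget described above can be written as a small calculation. The Python sketch below uses the figures from the text (4 GB for the hypervisor and base OS, at most 40% of RAM for locally hosted VMs, 2-4 GB per VM). The ratio of 1 GB of L1 cache per TB of clustered storage is an assumption extrapolated from the 1 TB example, not a documented StarWind sizing rule, and the class name `RamBudget` is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RamBudget:
    total_gb: float
    hypervisor_gb: float = 4.0     # hypervisor and base OS reserve (from the text)
    l1_gb_per_tb: float = 1.0      # ASSUMPTION: 1 GB of L1 cache per TB of clustered storage
    local_vm_share: float = 0.40   # max share of RAM for locally hosted VMs (from the text)

    def l1_cache_gb(self, clustered_tb: float) -> float:
        return self.l1_gb_per_tb * clustered_tb

    def clustered_vm_gb(self, clustered_tb: float) -> float:
        """RAM left for clustered VMs once the hypervisor and L1 cache are served."""
        return self.total_gb - self.hypervisor_gb - self.l1_cache_gb(clustered_tb)

    def local_vm_gb(self, clustered_tb: float) -> float:
        """RAM local VMs may use while leaving headroom for VMs failed over from a partner."""
        return min(self.local_vm_share * self.total_gb,
                   self.clustered_vm_gb(clustered_tb))

    def vm_count_range(self, clustered_tb: float,
                       min_vm_gb: float = 2.0, max_vm_gb: float = 4.0) -> tuple[int, int]:
        """(fewest, most) local VMs: all at max_vm_gb each vs. all at min_vm_gb each."""
        local = self.local_vm_gb(clustered_tb)
        return int(local // max_vm_gb), int(local // min_vm_gb)


minimal = RamBudget(total_gb=16)
print(minimal.clustered_vm_gb(1.0))   # 11.0, matching the example in the text
print(minimal.local_vm_gb(1.0))       # 6.4
print(minimal.vm_count_range(1.0))    # (1, 3)
```

The same object can be reused for the larger configurations, e.g. `RamBudget(total_gb=128).vm_count_range(4.8)` for Configuration 1, keeping in mind that the L1 cache ratio is only an assumption.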

Storage Configuration

Storage is described by two key characteristics: performance and capacity. In virtualization, the main performance metric is the number of I/O operations the storage can process per second (IOPS). This value has to be determined by thoroughly monitoring application I/O and accounting for multiple applications running together as VMs on one host. The number of IOPS the underlying storage can process has to be at least equal to the sum of the IOPS of all VMs. An additional performance reserve for future growth is then calculated and added to this requirement.

Capacity determines how much data you can store on a given server without adding external SAN or NAS storage. The server's storage array is chosen based on these two characteristics. This process is usually unique to each environment, so there is no rule of thumb for choosing a storage array based on company size or number of VMs.
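The selection rule above (the array must deliver at least the sum of all VM IOPS plus a growth reserve, and must hold all VM data) can be sketched in Python. All VM figures, the 30% growth reserve, and the candidate arrays below are hypothetical placeholders; in practice they come from monitoring the actual workload and benchmarking the candidate storage.

```python
from dataclasses import dataclass


@dataclass
class Vm:
    name: str
    iops: int          # peak IOPS measured for this VM
    capacity_gb: int   # disk space provisioned for this VM


@dataclass
class StorageOption:
    name: str
    iops: int          # IOPS the array can sustain (e.g. from benchmarking)
    usable_gb: int     # usable capacity after RAID overhead


def required(vms: list[Vm], growth_reserve: float) -> tuple[float, int]:
    """IOPS (sum of all VMs plus a growth reserve) and capacity the storage must provide."""
    iops = sum(vm.iops for vm in vms) * (1 + growth_reserve)
    capacity = sum(vm.capacity_gb for vm in vms)
    return iops, capacity


def suitable(options: list[StorageOption], vms: list[Vm],
             growth_reserve: float = 0.3) -> list[str]:
    """Names of the options that meet both the performance and the capacity requirement."""
    need_iops, need_gb = required(vms, growth_reserve)
    return [o.name for o in options
            if o.iops >= need_iops and o.usable_gb >= need_gb]


# Hypothetical workload and candidate arrays, for illustration only.
vms = [Vm("sql", 2500, 500), Vm("mail", 1500, 800), Vm("files", 300, 1200)]
options = [
    StorageOption("A", iops=3000, usable_gb=10000),   # enough space, too slow
    StorageOption("B", iops=20000, usable_gb=2000),   # fast enough, too small
    StorageOption("C", iops=8000, usable_gb=6000),    # meets both requirements
]
print(required(vms, 0.3))
print(suitable(options, vms))   # ['C']
```

Checking both characteristics together shows why neither alone is enough: option A has plenty of capacity but cannot sustain the workload, while option B is fast but cannot hold the data.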

Networking

The interconnections in the virtualization environment also determine how fast users can communicate with the applications running inside it.

The minimum networking configuration is 4x 1-GbE ports. In this configuration, 2 ports are used exclusively for StarWind traffic, 1 port is used for VM migration between the hosts, and 1 port carries management, client, and StarWind heartbeat connections. A 3-node hyper-converged setup requires 2 additional ports per node.
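The port assignment above can be written down as a per-node plan. In this Python sketch the role names are illustrative labels; the text only states that a 3-node setup needs 2 more ports per node, so attributing those ports to StarWind traffic toward the third node is an assumption.

```python
def port_plan(nodes: int) -> dict[str, int]:
    """Minimum 1-GbE ports per node, by role, for a hyper-converged cluster."""
    if nodes not in (2, 3):
        raise ValueError("only 2- and 3-node setups are described")
    plan = {
        "starwind_sync_iscsi": 2,     # used exclusively for StarWind traffic
        "vm_migration": 1,            # VM migration between hosts
        "mgmt_client_heartbeat": 1,   # management, client and StarWind heartbeat
    }
    if nodes == 3:
        # ASSUMPTION: the 2 extra ports per node carry StarWind traffic to the third node
        plan["starwind_sync_iscsi"] += 2
    return plan


for n in (2, 3):
    print(n, "nodes:", sum(port_plan(n).values()), "ports per node")
```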

In a clustered hyper-converged environment, networking also determines the maximum write performance, because each written block has to be transferred to the partner cluster node to guarantee storage redundancy. Thus, the throughput of the links used for StarWind synchronization and iSCSI traffic has to be higher than the maximum required storage performance in order to avoid bottlenecks.

An additional synchronization and iSCSI connection has to be added as soon as storage traffic starts maxing out the existing interconnects.
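Whether the synchronization/iSCSI links will become the bottleneck can be estimated by converting the peak write rate into line rate, since every written block is sent to the partner node. The Python sketch below assumes that only 70% of a link's nominal speed is usable for payload and uses a hypothetical workload of 20,000 write IOPS at 8 KiB; both are placeholders to be replaced with measured values.

```python
import math


def required_gbps(write_iops: float, io_size_kib: float, partners: int = 1) -> float:
    """Line rate (Gbit/s) needed to mirror every write to `partners` other nodes."""
    bytes_per_s = write_iops * io_size_kib * 1024 * partners
    return bytes_per_s * 8 / 1e9


def links_needed(write_iops: float, io_size_kib: float, link_gbps: float,
                 efficiency: float = 0.7, partners: int = 1) -> int:
    """Sync/iSCSI links needed so that mirrored writes stay below usable link capacity.

    `efficiency` is an ASSUMED usable-payload share of the nominal link speed.
    """
    usable_per_link = link_gbps * efficiency
    need = required_gbps(write_iops, io_size_kib, partners)
    return max(1, math.ceil(need / usable_per_link))


# Hypothetical workload: 20,000 write IOPS of 8 KiB each.
need = required_gbps(20_000, 8)
print(f"{need:.2f} Gbit/s of mirror traffic")
print("1 GbE links needed:", links_needed(20_000, 8, link_gbps=1))
print("10 GbE links needed:", links_needed(20_000, 8, link_gbps=10))
```

With these placeholder numbers the mirror traffic already saturates one 1-GbE link, which illustrates why a direct 10-GbE connection is attractive for a 2-node setup.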

Currently, one of the most cost-effective ways to interconnect a 2-node hyper-converged setup is a direct 10 GbE connection between the nodes. It provides enough throughput for both iSCSI and cluster traffic while leaving headroom to scale the configuration.

With a 3-node setup 10 GbE direct connections are also supported, but only for iSCSI and synchronization traffic.